Video description method based on space-time super-resolution and electronic equipment

A video description technology based on super-resolution, applied in the fields of computer vision and natural language processing. It addresses the problems of reduced model running speed and large computing costs, and achieves efficient operation with low computing overhead.


Embodiment Construction

[0032] The present invention is described in detail below with reference to the accompanying drawings and specific embodiments. This embodiment is implemented on the basis of the technical solution of the present invention and provides a detailed implementation and a specific operating process, but the protection scope of the present invention is not limited to the following embodiments.

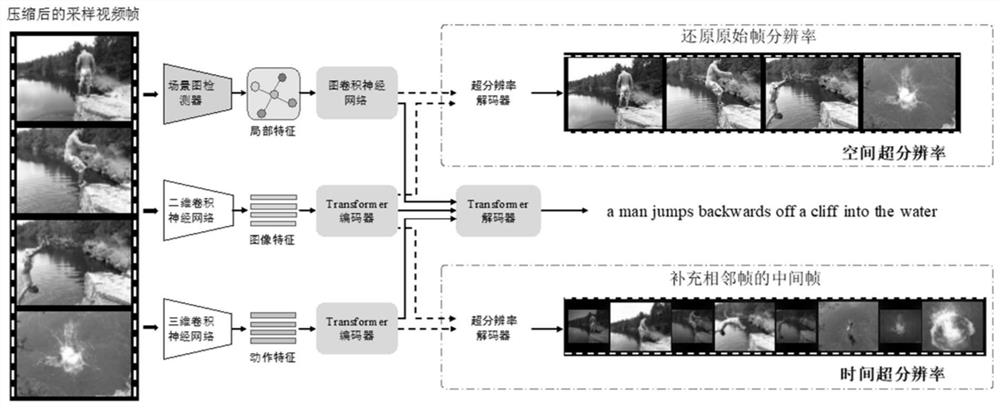

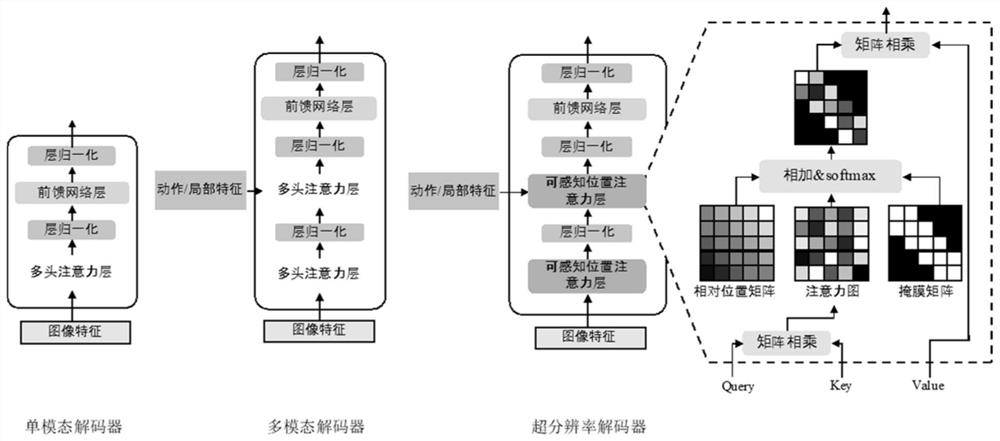

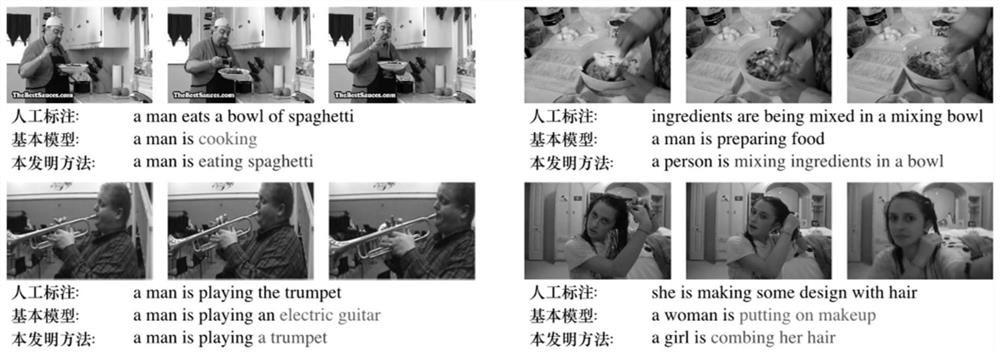

[0033] This embodiment proposes a video description method based on spatiotemporal super-resolution. As shown in Figure 1, the method is implemented on the basis of a video description model and includes the following steps: acquiring an input video and sampling it to obtain a video frame sequence consisting of several compressed-size frames; performing multi-modal feature extraction and feature encoding; dynamically fusing the encoded multi-modal features; and decoding step by step to generate video description sentences. During the training of the video description model, the or...
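The excerpt names the pipeline stages but not how each is computed. The sketch below illustrates two of them under stated assumptions: sampling a video into a short sequence of compressed-size frames, and dynamically fusing per-modality feature vectors. Uniform segment-center sampling, block-average downscaling, and dot-product softmax gating against a query vector (for example, a decoder state) are all assumptions chosen for illustration, not the patent's disclosed method, and every function name here is hypothetical. The spatiotemporal super-resolution used during training is not shown because the excerpt is truncated before describing it.

```python
import math
from typing import List, Sequence

# A grayscale frame represented as rows of pixel intensities (assumption for illustration).
Frame = List[List[float]]


def sample_indices(num_frames: int, num_samples: int) -> List[int]:
    """Hypothetical: pick num_samples frame indices at the centers of equal-length segments."""
    if num_samples <= 0 or num_frames <= 0:
        return []
    step = num_frames / num_samples
    return [min(num_frames - 1, int(step * i + step / 2)) for i in range(num_samples)]


def downscale(frame: Frame, factor: int) -> Frame:
    """Hypothetical: compress a frame by averaging non-overlapping factor x factor blocks."""
    h = len(frame) // factor
    w = len(frame[0]) // factor
    out: Frame = []
    for r in range(h):
        row = []
        for c in range(w):
            block = [frame[r * factor + i][c * factor + j]
                     for i in range(factor) for j in range(factor)]
            row.append(sum(block) / len(block))
        out.append(row)
    return out


def softmax(xs: Sequence[float]) -> List[float]:
    """Numerically stable softmax: subtract the max before exponentiating."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def dynamic_fusion(features: Sequence[Sequence[float]], query: Sequence[float]) -> List[float]:
    """Hypothetical: weight each modality's vector by a softmax over its dot product
    with the query, then return the weighted sum (all vectors share one dimension)."""
    scores = [sum(f * q for f, q in zip(feat, query)) for feat in features]
    weights = softmax(scores)
    dim = len(features[0])
    return [sum(w * feat[d] for w, feat in zip(weights, features)) for d in range(dim)]


if __name__ == "__main__":
    print(sample_indices(30, 5))                        # [3, 9, 15, 21, 27]
    print(downscale([[0, 0, 2, 2], [0, 0, 2, 2],
                     [4, 4, 6, 6], [4, 4, 6, 6]], 2))   # [[0.0, 2.0], [4.0, 6.0]]
    print(dynamic_fusion([[1.0, 0.0], [0.0, 1.0]], [0.0, 0.0]))  # [0.5, 0.5]
```

Because the fusion weights depend on the query, the mix of modalities can change at every decoding step, which is one plausible reading of "dynamically fuse". Fewer sampled frames and a smaller spatial size both shrink the input the encoder has to process, which is consistent with the low computing cost the summary claims.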

