Conditional adversarial sample-based model poisoning method and system

A conditional adversarial sample technology, applied in neural learning methods, biological neural network models, computer components, etc., that addresses the problems of data defenses, ineffective poisoning, and model performance degradation, with the effects of reducing the amount of poisoning required, reducing the performance decrease, and strengthening concealment.

Embodiment Construction

[0038] The present invention will be further described below with reference to the accompanying drawings and specific embodiments.

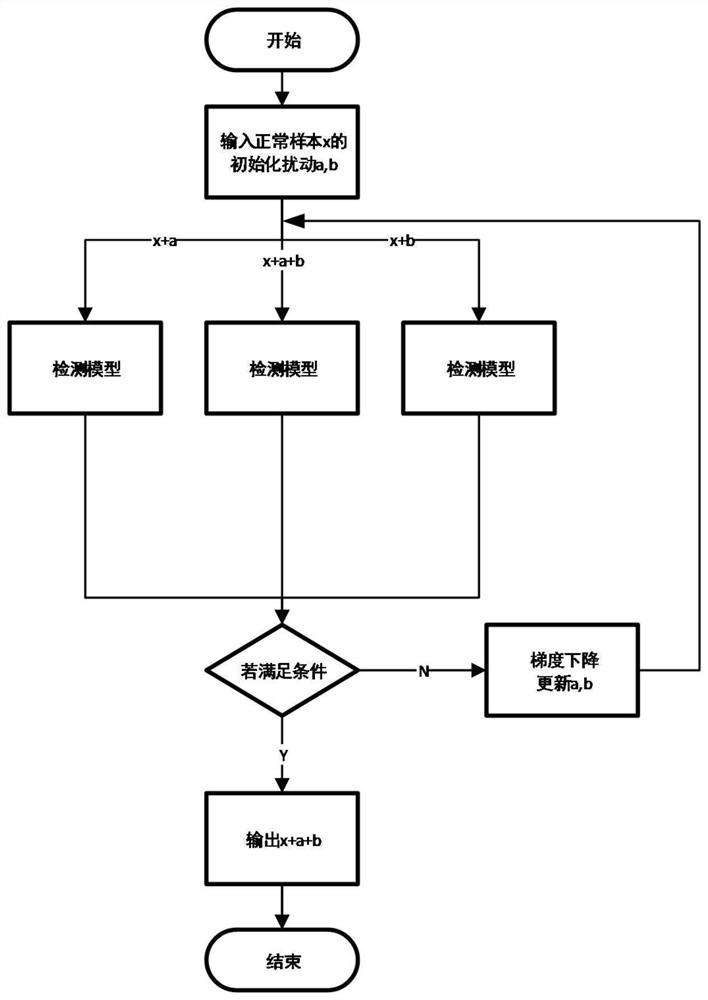

[0039] This application proposes a model poisoning method based on conditional adversarial samples. Specifically, the adversarial perturbation is split into two perturbations, a and b, such that the original sample exhibits the characteristics of an adversarial sample when only perturbation b is added, and the characteristics of a normal sample when a and b are added together. With both a and b added, the resulting conditional adversarial samples are classified normally by the classification model, but the classification logic behind them differs markedly from that of normal training samples. Adding such conditional adversarial samples to the training process of the detection model reduces the efficiency with which the model learns classification features during training, and can even cause failure to learn the classification features corre...
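The two-perturbation idea can be illustrated with a minimal sketch. It is not the patented procedure: the linear classifier, the logistic loss, the single signed-gradient step, the feature masks `mask_b` and `mask_a`, and the step sizes `eps_b` and `eps_a` are all assumptions chosen for illustration. Perturbation b pushes the sample across the decision boundary, and perturbation a restores the original label using a disjoint set of features, so the combined sample is classified normally for a different reason than the clean sample.

```python
import numpy as np

def predict(w, x):
    """Binary label from a linear classifier: 1 if w.x > 0, else 0."""
    return int(np.dot(w, x) > 0)

def loss_grad(w, x, y):
    """Gradient of the logistic loss with respect to the input x."""
    p = 1.0 / (1.0 + np.exp(-np.dot(w, x)))
    return (p - y) * w

def make_conditional_sample(w, x, y, mask_b, mask_a, eps_b, eps_a):
    """Return hypothetical perturbations (a, b) for sample x with label y.

    b: one signed-gradient step that increases the loss, restricted to
       the features selected by mask_b (assumed, not from the source).
    a: one signed-gradient step that decreases the loss at x + b,
       restricted to the disjoint features selected by mask_a.
    """
    b = eps_b * np.sign(loss_grad(w, x, y)) * mask_b
    a = -eps_a * np.sign(loss_grad(w, x + b, y)) * mask_a
    return a, b

# Toy values, chosen only so that the effect is visible.
w = np.array([1.0, -2.0, 0.5, 1.5])      # fixed classifier weights
x = np.array([0.5, -0.2, 0.4, 0.3])      # clean sample, true label 1
mask_b = np.array([1.0, 1.0, 0.0, 0.0])  # b may touch features 0 and 1
mask_a = np.array([0.0, 0.0, 1.0, 1.0])  # a may touch features 2 and 3

a, b = make_conditional_sample(w, x, 1, mask_b, mask_a, eps_b=0.7, eps_a=0.5)

print(predict(w, x))          # clean sample
print(predict(w, x + b))      # b alone: behaves like an adversarial sample
print(predict(w, x + a + b))  # a and b together: classified normally
```

In this toy setting the combined sample `x + a + b` keeps its original label, but the features that b altered now argue against that label and the features that a altered compensate for them. A real attack would target a trained neural network and would need an iterative optimization rather than a single step.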
