Adversarial sample poisoning attack method for federated learning

A technology concerning adversarial samples and federated learning, applied in the fields of equipment, computing, and platform integrity maintenance, with the stated effect of weakening the attack effect and weakening the toxicity.


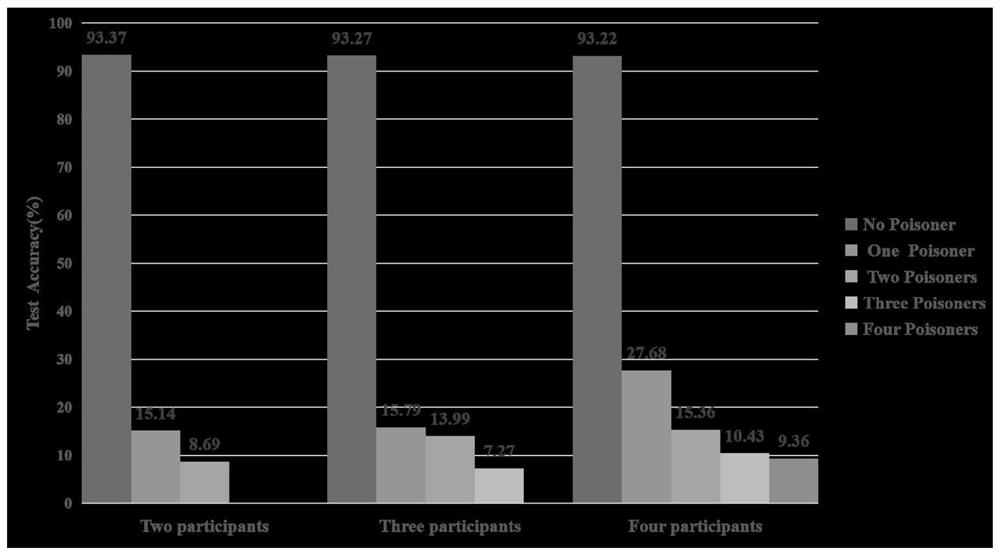


Examples

Embodiment Construction

[0023] An adversarial sample poisoning attack method for federated learning, comprising the following steps:

[0024] S1. The attacker adds adversarial perturbations that are imperceptible to the human eye to its local private training samples, generating "toxic" adversarial samples, and trains locally on these samples;

[0025] S2. To dominate the training process of the global model, the attacker increases the learning rate during local training, accelerating the generation of malicious model parameters;

[0026] S3. The attacker uploads its local model parameters to the server, where they take part in aggregation and thereby influence the global model.
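Steps S1 to S3 can be illustrated with a small simulation. The following is only a minimal sketch under stated assumptions, not the patented procedure: the source does not say how the perturbation is computed, which model is trained, or how the server aggregates. Here the perturbation is an FGSM-style sign step bounded by `eps`, the model is logistic regression, and the server takes an unweighted mean of the uploads. The names `fgsm_perturb`, `local_train`, `fed_avg`, `run_round` and the parameters `eps`, `lr`, `lr_boost` are all hypothetical.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def grad_w(w, X, y):
    """Gradient of the mean logistic loss with respect to the weights."""
    return X.T @ (sigmoid(X @ w) - y) / len(y)


def fgsm_perturb(w, X, y, eps):
    """S1 (assumed FGSM-style): shift every feature by eps along the
    sign of the loss gradient with respect to the input."""
    # For logistic loss, d(loss_i)/d(x_i) = (sigmoid(x_i . w) - y_i) * w
    grad_x = (sigmoid(X @ w) - y)[:, None] * w[None, :]
    return X + eps * np.sign(grad_x)


def local_train(w, X, y, lr, epochs=5):
    """Plain full-batch gradient descent starting from the global weights."""
    w = w.copy()
    for _ in range(epochs):
        w -= lr * grad_w(w, X, y)
    return w


def fed_avg(local_weights):
    """S3 (assumed aggregation rule): unweighted mean of the uploads."""
    return np.mean(local_weights, axis=0)


def run_round(w_global, datasets, attacker_idx, eps=0.1, lr=0.1, lr_boost=10.0):
    """One federated round; participant `attacker_idx` is malicious.
    Pass attacker_idx=None for a round with honest participants only."""
    uploads = []
    for i, (X, y) in enumerate(datasets):
        if i == attacker_idx:
            X_adv = fgsm_perturb(w_global, X, y, eps)              # S1
            w_i = local_train(w_global, X_adv, y, lr * lr_boost)   # S2
        else:
            w_i = local_train(w_global, X, y, lr)
        uploads.append(w_i)
    return fed_avg(uploads)                                        # S3
```

Calling `run_round` twice from the same starting weights, once with `attacker_idx=None` and once with an attacker index, gives a direct comparison of the aggregated parameters with and without the poisoned upload. Note that in this sketch the perturbation vanishes when the global weights are all zero, so the comparison is only meaningful from a non-zero starting point.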

[0027] In step S1, the following scenario is defined: m participants take part in the training, with m>=2, and the k-th participant is the attacker. In the federated learning system, the local training of each participant is regarded as a traditiona...
