Cross-modal retrieval method based on modal relation learning

A cross-modal retrieval technology based on modal relationship learning. It addresses the difficulty of retrieving useful information across modalities, achieves good image-text mutual retrieval performance, and improves retrieval accuracy.

Embodiment Construction

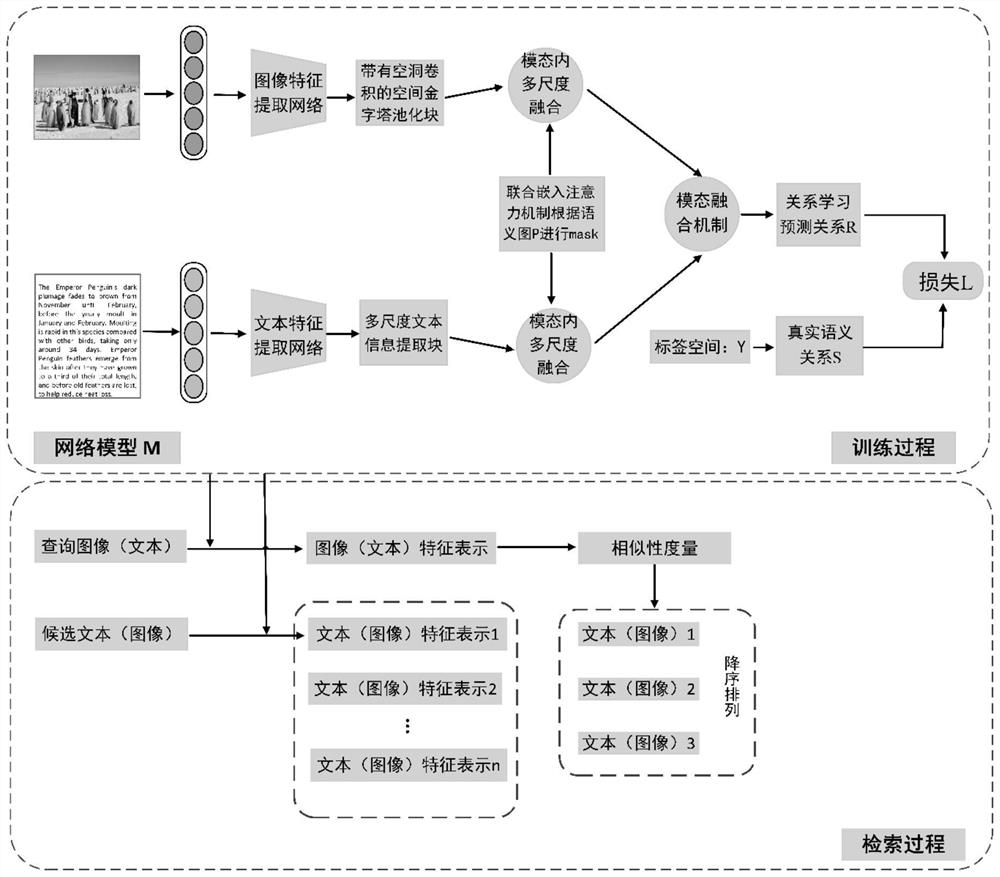

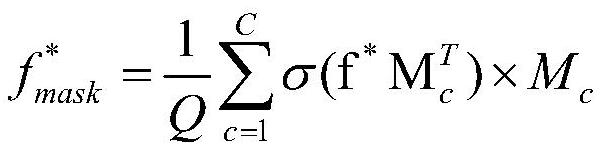

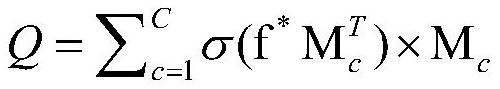

[0052] The present invention proposes a cross-modal retrieval method based on modal relationship learning. It constructs a multi-modal deep learning network dedicated to cross-modal retrieval. That network uses a dual fusion mechanism, operating both within each modality and between modalities, to learn inter-modal relationships. Within each modality, multi-scale features are fused. Between modalities, the relationship information carried by the labels is used to directly learn the complementary relationship between the fused features. In addition, an inter-modal attention mechanism is added for joint feature embedding. This lets the fused features retain as much inter-modal invariance and intra-modal discriminability as possible, which further improves cross-modal retrieval performance.
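
The paragraph above names three ingredients but does not specify layers, weights or losses. Those ingredients are intra-modal multi-scale fusion, label relationship information between modalities, and inter-modal attention for joint embedding. The following is a minimal pure-Python sketch of how such pieces could fit together, under stated assumptions:

- Multi-scale fusion is taken to be a weighted average of per-scale vectors.
- Attention is taken to be scaled dot-product attention over the other modality's local features.
- The label relationship target is taken to be the Jaccard overlap of two items' label sets.

All function names are hypothetical and are not the patent's actual implementation.

```python
import math

def l2_normalize(v):
    """Scale a vector to unit length; a zero vector is returned unchanged."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v] if norm > 0 else list(v)

def fuse_multiscale(scale_features, weights):
    """Intra-modal fusion (assumed form): weighted average of same-length per-scale vectors."""
    total = sum(weights)
    dim = len(scale_features[0])
    fused = [0.0] * dim
    for feat, w in zip(scale_features, weights):
        for i in range(dim):
            fused[i] += (w / total) * feat[i]
    return fused

def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    z = sum(exps)
    return [e / z for e in exps]

def cross_modal_attention(query, keys):
    """Inter-modal attention (assumed form): scaled dot-product attention from one
    modality's fused vector over the other modality's local feature vectors."""
    dim = len(query)
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(dim) for key in keys]
    attn = softmax(scores)
    attended = [sum(a * key[i] for a, key in zip(attn, keys)) for i in range(dim)]
    return attended, attn

def joint_embedding(own_fused, attended_other):
    """Joint embedding (assumed form): average both vectors, then L2-normalize."""
    return l2_normalize([(x + y) / 2 for x, y in zip(own_fused, attended_other)])

def label_affinity(labels_a, labels_b):
    """Label relationship target (assumed form): Jaccard overlap of two label sets."""
    a, b = set(labels_a), set(labels_b)
    return len(a & b) / len(a | b) if a | b else 0.0

if __name__ == "__main__":
    # Hypothetical toy features: two scales of a text vector, three image regions.
    text_fused = fuse_multiscale([[1.0, 0.0, 0.2], [0.8, 0.1, 0.0]], [0.6, 0.4])
    image_regions = [[0.9, 0.1, 0.0], [0.0, 1.0, 0.0], [0.2, 0.0, 0.9]]
    attended, attn = cross_modal_attention(text_fused, image_regions)
    print("attention weights:", attn)
    print("joint embedding:", joint_embedding(text_fused, attended))
    print("label affinity:", label_affinity(["dog", "park"], ["dog", "beach"]))
```

In a trained network, these vectors would come from learned encoders, and the label affinity would serve as a supervision target for the similarity of fused features. The sketch only shows the data flow.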

[0053] As shown in Figure 1, the present invention is a cross-modal retrieval method based on modal relationship learning. The model includes a training process and a retrieval process. Specif...
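
The retrieval process is only named here, not specified. A common way to realize it, offered as an assumption rather than the patent's stated procedure, is as follows. Embed the query in the shared space learned during training. Then rank items of the other modality by cosine similarity. The helper names `cosine` and `retrieve` and the dictionary-based gallery are hypothetical.

```python
import math

def cosine(u, v):
    """Cosine similarity; returns 0.0 if either vector has zero length."""
    dot = sum(a * b for a, b in zip(u, v))
    nu = math.sqrt(sum(a * a for a in u))
    nv = math.sqrt(sum(b * b for b in v))
    return dot / (nu * nv) if nu > 0 and nv > 0 else 0.0

def retrieve(query_emb, gallery, top_k=None):
    """Rank gallery item ids (other modality) by descending cosine similarity to the query.

    gallery: dict mapping item id -> embedding in the shared space (assumed layout).
    """
    scored = [(cosine(query_emb, emb), item_id) for item_id, emb in gallery.items()]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    ranked = [item_id for _, item_id in scored]
    return ranked if top_k is None else ranked[:top_k]

if __name__ == "__main__":
    # Hypothetical image embeddings queried by a hypothetical text embedding.
    images = {"img_a": [1.0, 0.0], "img_b": [0.0, 1.0], "img_c": [1.0, 1.0]}
    print(retrieve([1.0, 0.1], images, top_k=2))
```

The same function serves both directions (text-to-image and image-to-text), because both modalities are embedded in one common space.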