Static gesture intention recognition method and system based on dynamic feature assistance and vehicle

A static gesture intention recognition method assisted by dynamic features, applied in the field of vehicle control. It addresses problems such as increased component cost, inaccuracy, and unsuitability for application, and achieves the effects of improving gesture control performance, reducing misidentification, and improving recognition performance.


Embodiment Construction

[0030] The present invention will be described in detail below with reference to the accompanying drawings.

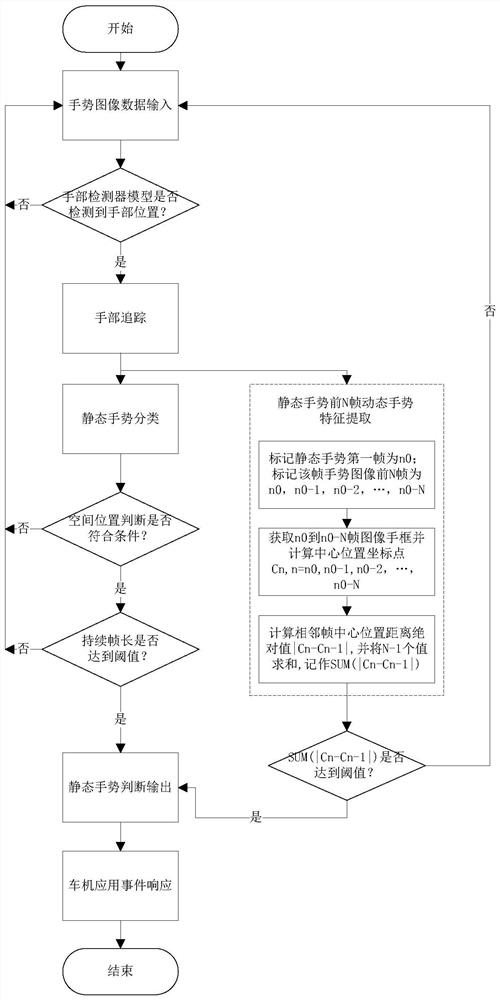

[0031] As shown in Figure 1, in this embodiment, a static gesture intention recognition method based on dynamic feature assistance comprises the following steps:

[0032] Step 1, hand detection stage: acquire gesture image data and run hand detection on it. If a hand is detected, output the hand position and proceed to Step 2; otherwise, repeat Step 1.
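The control flow of Step 1 can be sketched as a loop over incoming frames that exits as soon as a hand is found. This is a minimal sketch, not the patented implementation: the detector callable, the `(x1, y1, x2, y2)` box format, the 0.5 confidence threshold, and picking the highest-scoring box when several hands appear are all hypothetical assumptions.

```python
from dataclasses import dataclass
from typing import Any, Callable, Iterable, Optional, Sequence, Tuple

# Hypothetical box format: (x1, y1, x2, y2) in pixel coordinates.
BBox = Tuple[float, float, float, float]
# Hypothetical detector interface: frame -> list of (hand box, confidence).
Detector = Callable[[Any], Sequence[Tuple[BBox, float]]]


@dataclass
class HandPosition:
    frame_index: int  # index of the frame in which the hand was found
    bbox: BBox        # hand position handed over to Step 2
    score: float      # detector confidence for that box


def hand_detection_stage(
    frames: Iterable[Any], detect: Detector, score_threshold: float = 0.5
) -> Optional[HandPosition]:
    """Step 1: keep acquiring frames until a hand is detected.

    Returns the most confident hand box from the first frame that contains
    one, or None if the frame source runs out without any detection.
    """
    for index, frame in enumerate(frames):
        # Keep only boxes the detector is reasonably confident about.
        hands = [(box, s) for box, s in detect(frame) if s >= score_threshold]
        if hands:
            # Hand found: output its position and proceed to Step 2.
            box, score = max(hands, key=lambda h: h[1])
            return HandPosition(index, box, score)
        # No hand in this frame: repeat Step 1 on the next frame.
    return None
```

In a live system `frames` would be an endless camera stream, so the `None` branch only matters for finite inputs such as recorded test clips.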

[0033] In this embodiment, the original gesture image data is input into the hand detection model. YOLO V3 is selected as the target detection network of the hand detection model, with the hand as the only detection category. The parameters of the convolutional neural network are adjusted using the training data, the samples of false detections and missed detections in actual scenes are analyzed, and model training is completed iteratively. The model detects a...
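The analysis of false and missed detections described above could, for example, be supported by matching predicted hand boxes against annotated ground-truth boxes and collecting the images that contain errors for the next training iteration. The sketch below assumes intersection-over-union (IoU) matching with a 0.5 threshold and greedy one-to-one assignment; the matching rule, the threshold, and the helper names `split_errors` and `mine_hard_samples` are hypothetical and do not come from the source.

```python
from typing import Iterable, List, Sequence, Tuple

# Assumed box format: (x1, y1, x2, y2) corner coordinates.
Box = Tuple[float, float, float, float]


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def split_errors(
    preds: Sequence[Box], truths: Sequence[Box], iou_thr: float = 0.5
) -> Tuple[List[Box], List[Box]]:
    """Greedily match predictions to ground truth.

    Predictions are assumed sorted by descending confidence. Returns
    (false_detections, missed_detections).
    """
    unmatched = list(range(len(truths)))
    false_detections: List[Box] = []
    for p in preds:
        best_j, best_iou = -1, iou_thr
        for j in unmatched:
            v = iou(p, truths[j])
            if v >= best_iou:  # must reach the threshold and beat earlier candidates
                best_j, best_iou = j, v
        if best_j >= 0:
            unmatched.remove(best_j)  # each ground-truth hand is matched at most once
        else:
            false_detections.append(p)  # no hand here: a false detection
    missed = [truths[j] for j in unmatched]  # hands the model never found
    return false_detections, missed


def mine_hard_samples(
    dataset: Iterable[Tuple[str, Sequence[Box], Sequence[Box]]], iou_thr: float = 0.5
) -> List[str]:
    """Collect ids of images with any false or missed detection for retraining."""
    hard: List[str] = []
    for image_id, preds, truths in dataset:
        fd, md = split_errors(preds, truths, iou_thr)
        if fd or md:
            hard.append(image_id)
    return hard
```

Greedy matching in confidence order mirrors common detection-evaluation practice: the most confident box claims a ground-truth hand first, so a lower-scoring duplicate on the same hand is counted as a false detection.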

