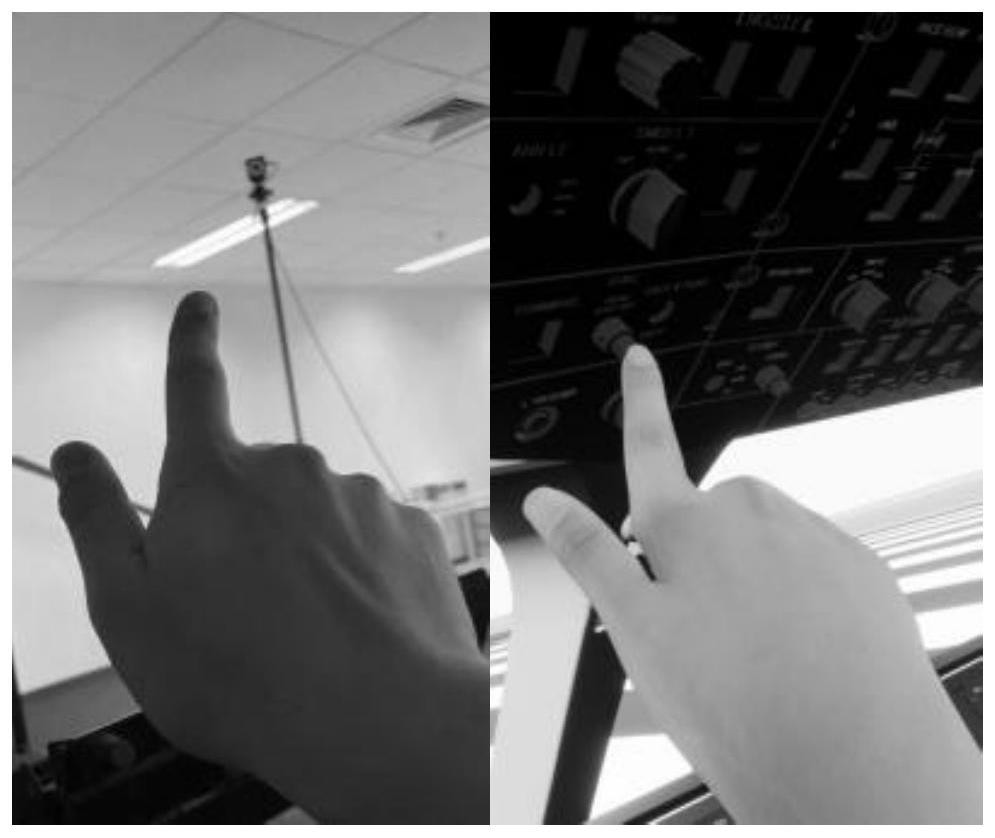

Man-machine interaction virtual-real fusion method and device based on mixed reality head-mounted display

This human-computer interaction and mixed reality technology is applied to user/computer interaction input/output, mechanical mode conversion, computer components, and related fields. It addresses problems such as reduced authenticity of interaction effects and poorly guaranteed modeling accuracy.


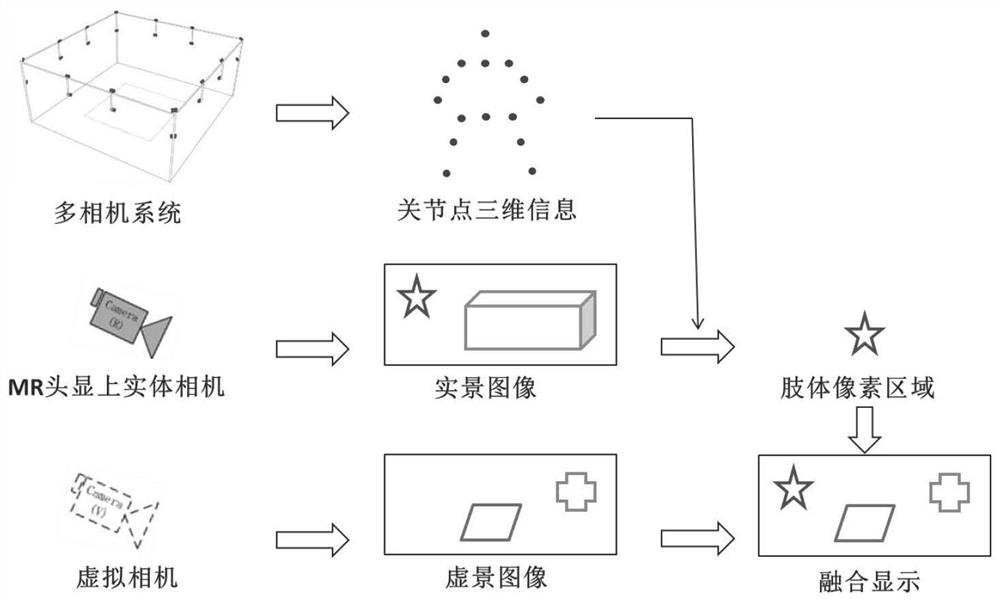


Examples

Embodiment 1

[0036] Figure 7 is a flow chart of a virtual-real fusion method for human-computer interaction based on a mixed reality head-mounted display according to an embodiment of the present invention. As shown in Figure 7, the method includes the following steps:

[0037] Step S702: obtain the intrinsic parameter matrix $M_{\text{int}}$ and the extrinsic parameter matrix $M_{\text{ext}}$ of the camera.

[0038] Step S704: from the intrinsic and extrinsic parameter matrices, obtain the tracking coordinate system information $\{P_{\text{ori}}, R_{\text{ori}}\}$ of the MR device and the pose relationship $t_{\text{related}}$ of the physical camera relative to the tracking origin.

[0039] Step S706: use the MR device to track the pose of the on-device camera and the head-mounted display relative to the tracking origin, and obtain the camera's extrinsic parameters $M'_{\text{ext}}$ from the formula $M'_{\text{ext}} = T(P_{\text{ori}}, R_{\text{ori}}, t_{\text{related}})$, where $T(\cdot)$ means that through $P_{\text{ori}}$, R or...
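The source text truncates the definition of T(*), so the following is only a minimal sketch of one plausible reading, not the patent's actual formula. It assumes that {P ori, R ori} gives the position and rotation of the tracking origin in world coordinates, that t related is a 4x4 rigid transform from camera coordinates to tracking-origin coordinates, and that the resulting extrinsic matrix maps world coordinates to camera coordinates. The helper names (`rigid`, `invert_rigid`, `camera_extrinsic`, `project`) and the numeric values are hypothetical.

```python
def mat_mul(a, b):
    """Multiply two matrices given as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]

def rigid(R, p):
    """Build a 4x4 homogeneous transform from a 3x3 rotation R and a translation p."""
    return [R[0] + [p[0]], R[1] + [p[1]], R[2] + [p[2]], [0.0, 0.0, 0.0, 1.0]]

def invert_rigid(T):
    """Invert a rigid transform: the inverse of [R | p] is [R^T | -R^T p]."""
    Rt = [[T[j][i] for j in range(3)] for i in range(3)]
    p = [T[i][3] for i in range(3)]
    pt = [-sum(Rt[i][k] * p[k] for k in range(3)) for i in range(3)]
    return rigid(Rt, pt)

def camera_extrinsic(P_ori, R_ori, t_related):
    """Assumed form of T(*): compose the tracking-origin pose with the
    camera-to-origin transform, then invert to get world -> camera."""
    world_from_origin = rigid(R_ori, P_ori)
    world_from_camera = mat_mul(world_from_origin, t_related)
    return invert_rigid(world_from_camera)

def project(M_int, M_ext, point_world):
    """Pinhole projection of a 3D world point to pixel coordinates."""
    ph = [[point_world[0]], [point_world[1]], [point_world[2]], [1.0]]
    pc = mat_mul(M_ext, ph)                 # point in camera coordinates
    uvw = mat_mul(M_int, pc[:3])            # apply the 3x3 intrinsic matrix
    return uvw[0][0] / uvw[2][0], uvw[1][0] / uvw[2][0]

# Illustrative values only (not from the source).
I3 = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
M_int = [[500.0, 0.0, 320.0], [0.0, 500.0, 240.0], [0.0, 0.0, 1.0]]
t_related = rigid(I3, [0.0, 0.0, 0.0])      # camera coincides with the tracking origin
M_ext_new = camera_extrinsic([1.0, 0.0, 0.0], I3, t_related)
u, v = project(M_int, M_ext_new, [1.0, 0.0, 2.0])
```

In this configuration the camera sits at world position (1, 0, 0) looking along +z, so a world point on its optical axis projects to the principal point (320, 240). Under these assumptions, only the tracked pose has to be refreshed each frame, while the intrinsic matrix from step S702 stays fixed.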

Embodiment 2

[0069] Figure 8 is a structural block diagram of a virtual-real fusion device for human-computer interaction based on a mixed reality head-mounted display according to an embodiment of the present invention. As shown in Figure 8, the device includes:

[0070] The acquisition module 80 is used to obtain the intrinsic parameter matrix $M_{\text{int}}$ and the extrinsic parameter matrix $M_{\text{ext}}$ of the camera.

[0071] The coordinate module 82 is used to obtain, from the intrinsic and extrinsic parameter matrices, the tracking coordinate system information $\{P_{\text{ori}}, R_{\text{ori}}\}$ of the MR device and the pose relationship $t_{\text{related}}$ of the physical camera relative to the tracking origin.

[0072] The calculation module 84 is used to track, with the MR device, the pose of the on-device camera and the head-mounted display relative to the tracking origin, and to apply the formula $M'_{\text{ext}} = T(P_{\text{ori}}, R_{\text{ori}}, t_{\text{related}})$ to obtain the extrinsic par...
