A hybrid motion detection method combined with a video encoder

The invention relates to a video encoder and hybrid motion detection technology, applicable to digital video signal modification, television, instruments, and similar fields. It addresses the problem of high computational complexity, requiring only a small amount of data calculation and reducing hardware cost.

Embodiment Construction

[0025] Various preferred embodiments of the present invention will be described in more detail below in conjunction with the accompanying drawings.

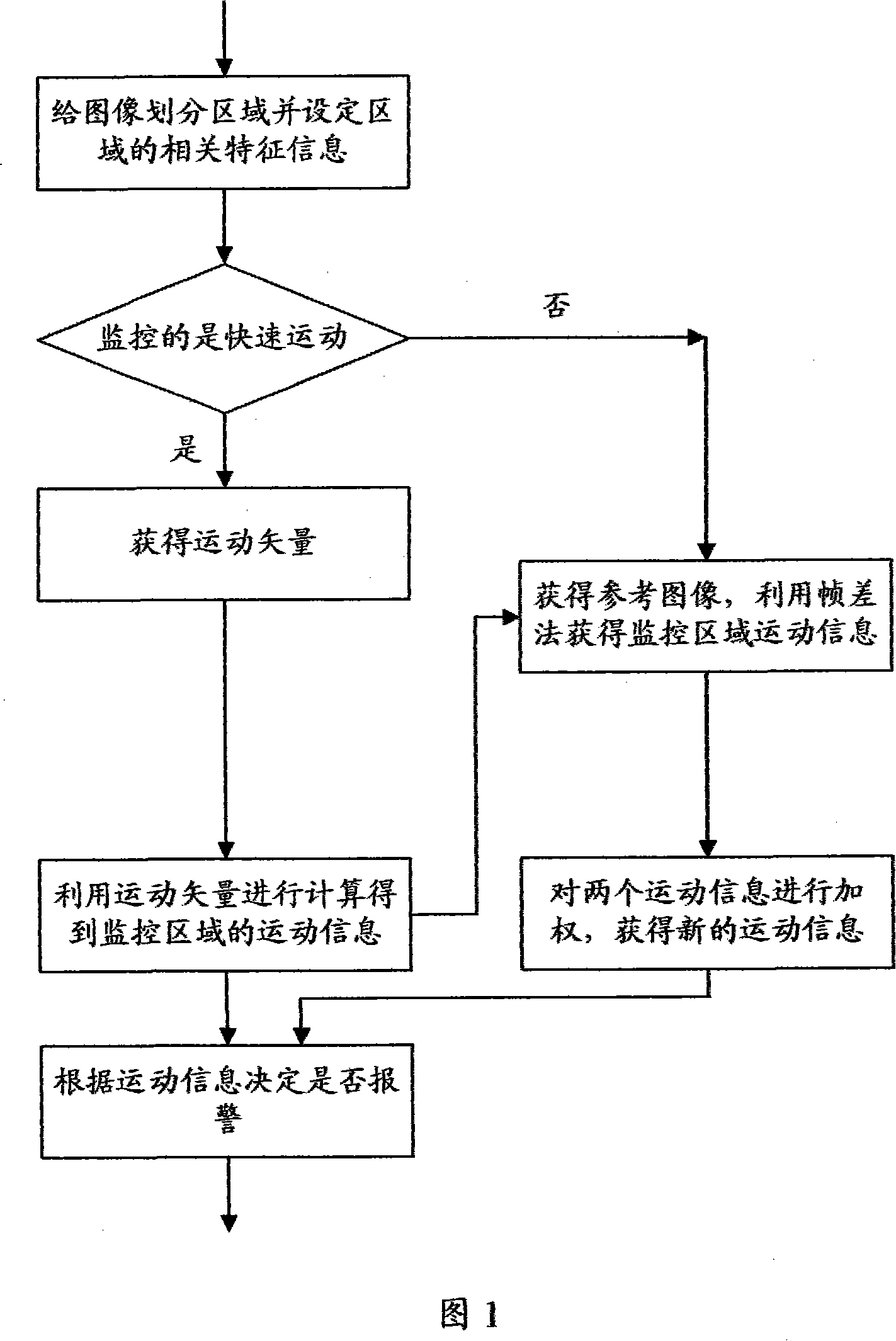

[0026] As shown in Figure 1, the hybrid motion detection method combined with a video encoder of the present invention comprises the following steps:

[0027] The first step is to divide the image of the video surveillance area into several regions and to set the relevant feature information for each region. The division is determined by the application program, for example by manually demarcating the extent of each region to be monitored and the number of such regions. The regions may overlap. The motion feature information includes thresholds for the various monitoring parameters, such as motion information thresholds for monitoring slow targets, fast targets, or targets exceeding a specific speed.
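The region setup described in the first step can be sketched roughly as follows. This is only an illustrative sketch, not the patent's implementation: the names `Region`, `TargetKind`, and `regions_at`, the rectangular region shape, and the numeric threshold values are all assumptions introduced here. The example shows that regions carry their own thresholds and that a single image position may fall inside more than one region when regions overlap.

```python
from dataclasses import dataclass
from enum import Enum


class TargetKind(Enum):
    """Kinds of target a region may monitor (hypothetical naming)."""
    SLOW = "slow"
    FAST = "fast"
    SPEED_LIMIT = "speed_limit"  # targets exceeding a specific speed


@dataclass(frozen=True)
class Region:
    """A rectangular monitored region with its feature information.

    Rectangles are an assumption; the source only says regions are
    demarcated by the application and may overlap.
    """
    name: str
    x: int
    y: int
    width: int
    height: int
    target: TargetKind
    motion_threshold: float  # illustrative unit, not specified in the source

    def contains(self, px: int, py: int) -> bool:
        """Return True if pixel (px, py) lies inside this region."""
        return (self.x <= px < self.x + self.width
                and self.y <= py < self.y + self.height)


def regions_at(regions, px, py):
    """Return every region covering (px, py); overlaps yield several."""
    return [r for r in regions if r.contains(px, py)]


# Two overlapping regions with different monitoring goals.
regions = [
    Region("gate", 0, 0, 320, 240, TargetKind.SLOW, 4.0),
    Region("road", 160, 120, 320, 240, TargetKind.FAST, 16.0),
]
```

Calling `regions_at(regions, 200, 150)` returns both regions because the point lies in their overlap, so each region's own threshold would then be applied independently.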

[0028] The second step is to determine whether fast motion is being monitored and, if so, obtain the motion vect...
