Intelligent video monitoring method and system thereof

An intelligent video surveillance and frame-image technology, applied to closed-circuit television systems, image data processing, instruments, and related fields. It addresses problems such as false alarms, images that are not vivid enough, and poor real-time interactivity, and it achieves higher alarm accuracy and faster response.

Embodiment Construction

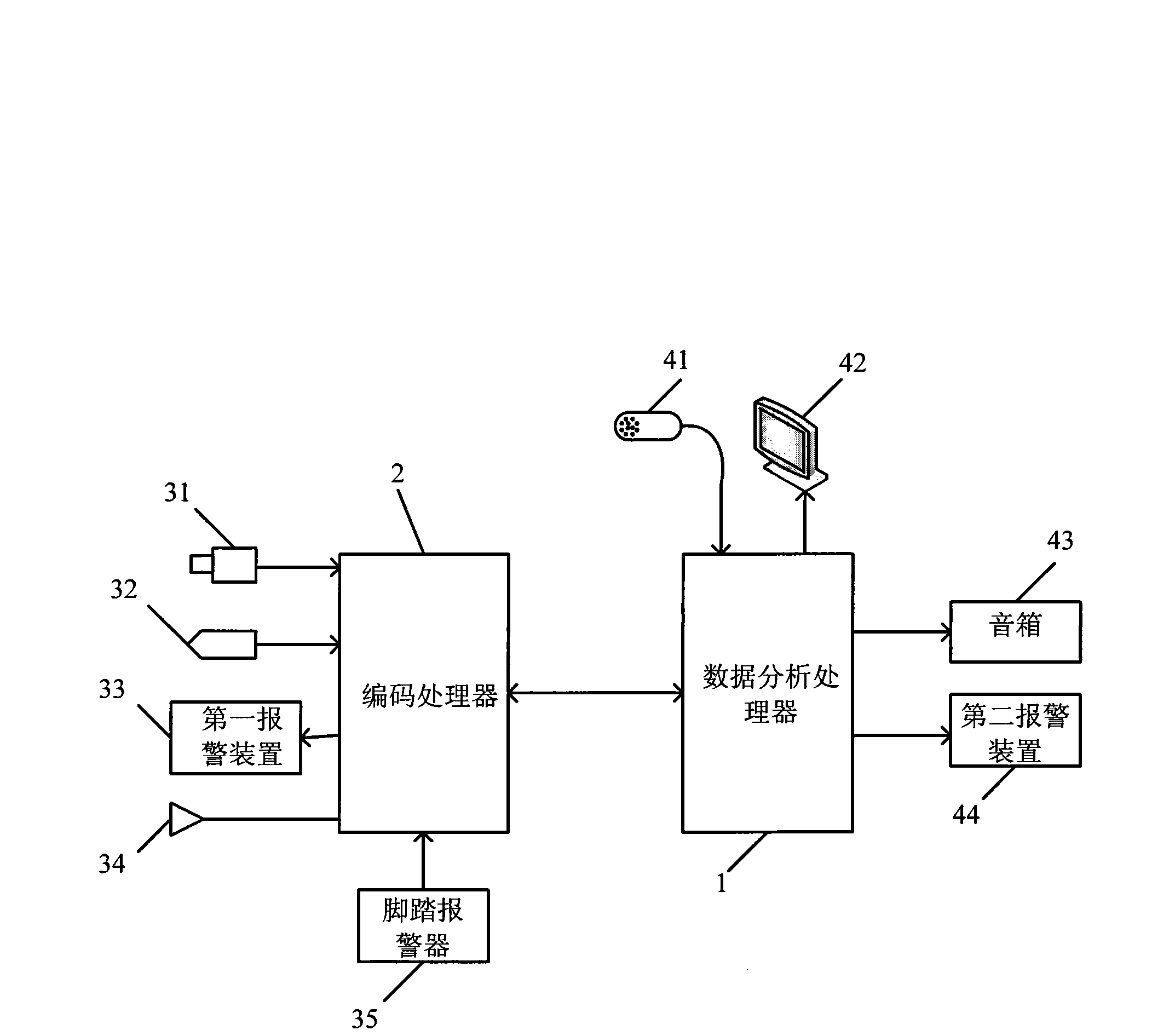

[0066] As shown in Figure 1, the intelligent video surveillance system of the present invention comprises a camera 31, a first alarm device 33, an encoding processor 2, a data analysis processor 1, and a display 42. The camera 31 and the first alarm device 33 are each connected to the encoding processor 2, and the encoding processor 2 and the display 42 are each connected to the data analysis processor 1. The encoding processor 2 compresses and encodes the video stream sent by the camera 31 into a video signal; in particular, it compresses and encodes the image signal using MPEG4, H.263, H.264, or M-JPEG. The data analysis processor 1 sends the video signal received from the encoding processor 2 to the display 42 for display, and also analyzes and processes it. The encoding processor 2 sends an alarm signal to the first alarm device 33, which raises the alarm. In addition, the number of the camera 31, the first alarm device 33 and...
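The connections described in paragraph [0066] can be illustrated with a minimal Python sketch. It is not the patented implementation: the class names, the string-based "frames", and the `is_abnormal` rule are all hypothetical, and the excerpt does not state what condition triggers the alarm, so the sketch takes that condition as a caller-supplied assumption. Only the topology (camera to encoding processor to data analysis processor to display, with the alarm signal routed through the encoding processor) and the four codec names come from the text.

```python
from dataclasses import dataclass, field
from typing import Callable, Iterable, List

# Codecs named in paragraph [0066].
CODECS = ("MPEG4", "H.263", "H.264", "M-JPEG")


@dataclass
class AlarmDevice:
    """Stands in for the first alarm device 33; records received alarm signals."""
    received: List[str] = field(default_factory=list)

    def alarm(self, signal: str) -> None:
        self.received.append(signal)


@dataclass
class Display:
    """Stands in for the display 42; records the video signals it shows."""
    shown: List[str] = field(default_factory=list)

    def show(self, video_signal: str) -> None:
        self.shown.append(video_signal)


@dataclass
class EncodingProcessor:
    """Stands in for the encoding processor 2, which is wired to the alarm device."""
    alarm_device: AlarmDevice
    codec: str = "H.264"

    def __post_init__(self) -> None:
        if self.codec not in CODECS:
            raise ValueError(f"unsupported codec: {self.codec}")

    def encode(self, raw_frame: str) -> str:
        # Placeholder for real compression: only tags the frame with the codec name.
        return f"{self.codec}:{raw_frame}"

    def send_alarm(self, signal: str) -> None:
        self.alarm_device.alarm(signal)


@dataclass
class DataAnalysisProcessor:
    """Stands in for the data analysis processor 1."""
    encoder: EncodingProcessor
    display: Display
    is_abnormal: Callable[[str], bool]  # hypothetical rule, not given in the excerpt

    def receive(self, video_signal: str) -> None:
        self.display.show(video_signal)       # forward to the display
        if self.is_abnormal(video_signal):    # assumed trigger condition
            self.encoder.send_alarm(f"alarm: {video_signal}")


def run(frames: Iterable[str], encoder: EncodingProcessor,
        analyzer: DataAnalysisProcessor) -> None:
    """Push camera frames through the encoder and on to the analyzer."""
    for frame in frames:
        analyzer.receive(encoder.encode(frame))


# Usage with made-up frames and a made-up detection rule.
alarm = AlarmDevice()
display = Display()
encoder = EncodingProcessor(alarm_device=alarm, codec="H.264")
analyzer = DataAnalysisProcessor(encoder, display,
                                 is_abnormal=lambda s: "intruder" in s)
run(["empty hallway", "intruder at door"], encoder, analyzer)
```

In this sketch every frame reaches the display, while only frames matching the assumed rule produce an alarm signal, and that signal always passes through the encoding processor on its way to the alarm device, mirroring the wiring in the paragraph.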
