Multi-source image fusion method

A multi-source image fusion method in the field of image fusion. It addresses problems such as long processing time, high memory consumption, and neglect of the overall structure of the image, and it improves the visual quality of the fused image while reducing "scratch" artifacts.

Detailed Description of the Embodiments

[0036] Preferred embodiments of the present invention will be described in detail below.

[0037] Overall steps:

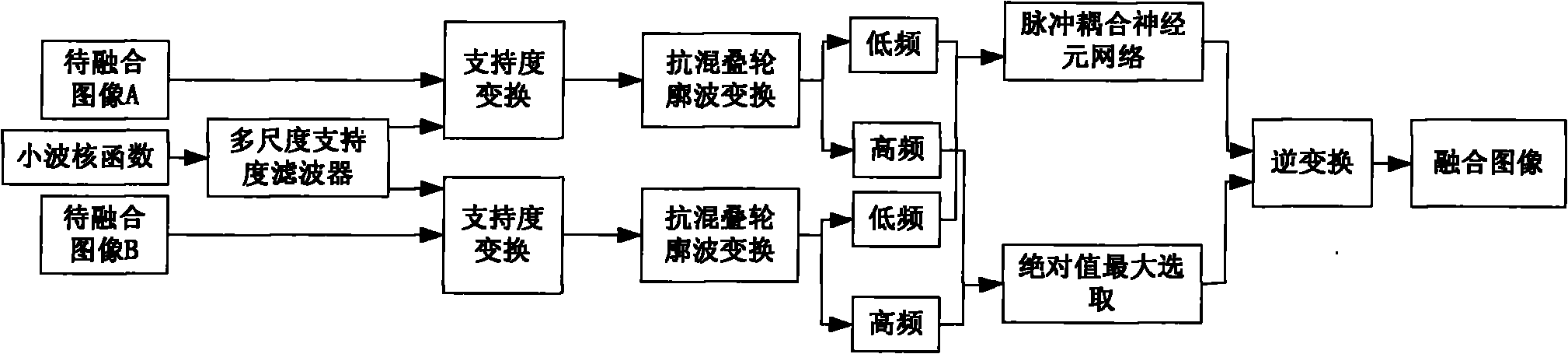

[0038] As shown in Figure 1, the multi-source image fusion method comprises the following steps:

[0039] In the image feature extraction stage:

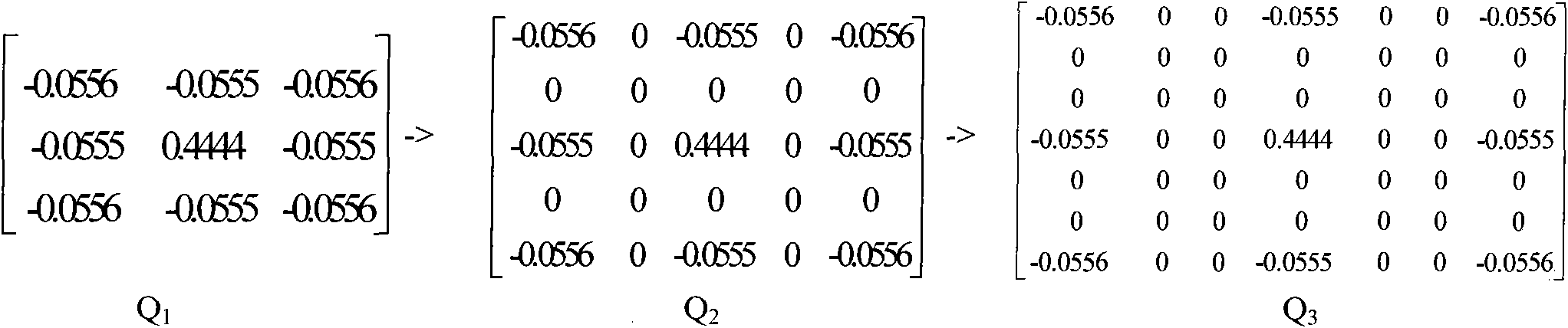

[0040] 1) Use a wavelet kernel function to build a multi-scale support filter for the images to be fused, image A and image B;

[0041] 2) Use the multi-scale support filter to apply a support transform (i.e., the SVT) to each image to be fused, yielding high-frequency and low-frequency information;

[0042] 3) Process the high-frequency and low-frequency information with the anti-aliasing contourlet transform (i.e., the NACT) to obtain high-frequency and low-frequency anti-aliasing information;
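Steps 1) and 2) split each image into one low-frequency layer and several high-frequency layers. The sketch below illustrates that kind of undecimated multi-scale split only. It is not the patent's filter: a fixed 5-tap B-spline kernel is substituted for the wavelet-kernel support filter, the NACT of step 3) is not covered, and the names `filter_rows_cols`, `dilate`, and `decompose` are hypothetical.

```python
import numpy as np


def filter_rows_cols(img, kernel):
    """Separable 2-D filtering with edge-replicated padding (output keeps img's shape)."""
    pad = len(kernel) // 2
    padded = np.pad(img, pad, mode="edge")
    # Filter every row, then every column, with the same 1-D kernel.
    rows = np.apply_along_axis(lambda r: np.convolve(r, kernel, mode="valid"), 1, padded)
    return np.apply_along_axis(lambda c: np.convolve(c, kernel, mode="valid"), 0, rows)


def dilate(kernel, level):
    """Insert 2**level - 1 zeros between taps so the filter widens at coarser scales."""
    step = 2 ** level
    out = np.zeros((len(kernel) - 1) * step + 1)
    out[::step] = kernel
    return out


def decompose(img, levels=3):
    """Return (low_frequency, [high_frequency per scale]) for one image.

    Assumption: a B-spline smoothing kernel stands in for the support filter.
    """
    base = np.array([1.0, 4.0, 6.0, 4.0, 1.0]) / 16.0
    approx = np.asarray(img, dtype=float)
    details = []
    for level in range(levels):
        smooth = filter_rows_cols(approx, dilate(base, level))
        details.append(approx - smooth)  # high-frequency layer at this scale
        approx = smooth                  # low-frequency input to the next scale
    return approx, details


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    image_a = rng.random((16, 16))
    low, highs = decompose(image_a, levels=3)
    # The split is lossless: low + all high layers rebuilds the input.
    print(np.allclose(low + sum(highs), image_a))
```

Because every layer keeps the full image size, the layers of image A and image B line up pixel for pixel, which is what a per-coefficient fusion rule needs.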

[0043] In the image fusion stage:

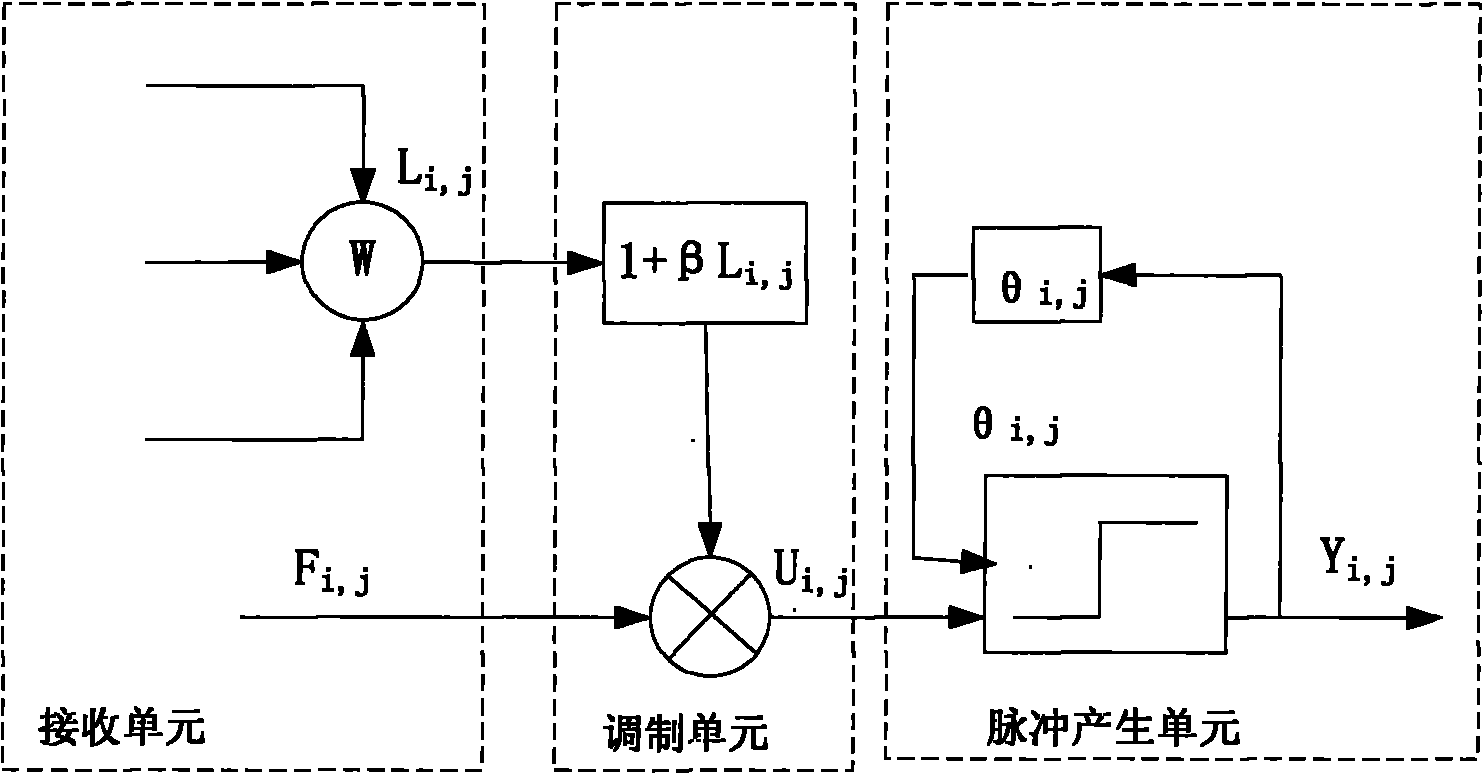

[0044] 4) For the low-frequency anti-aliasing information of each image to be fused, a pulse-coupled neural network (PCNN) fusion rule is used to select the low-frequency anti-aliasing inf...
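A PCNN-based selection rule of the kind named in step 4) can be sketched as follows. This is a simplified PCNN under stated assumptions, not the patent's rule: the stimulus is the coefficient magnitude scaled by a common maximum, the coefficient whose neuron fires more often is kept, and the parameter values (`beta`, `alpha_theta`, `v_theta`, `iterations`) and the names `pcnn_fire_counts` and `fuse_by_pcnn` are hypothetical.

```python
import numpy as np


def pcnn_fire_counts(stimulus, iterations=30, beta=0.2, alpha_theta=0.2, v_theta=20.0):
    """Run a simplified PCNN and count how often each neuron fires.

    stimulus: non-negative 2-D array, expected to lie in [0, 1].
    """
    s = np.asarray(stimulus, dtype=float)
    fired = np.zeros_like(s)   # firing map from the previous iteration
    theta = np.ones_like(s)    # dynamic threshold
    counts = np.zeros_like(s)
    h, w = s.shape
    for _ in range(iterations):
        # Linking input: number of 8-connected neighbours that fired last time.
        p = np.pad(fired, 1, mode="constant")
        link = sum(
            p[1 + dy:1 + dy + h, 1 + dx:1 + dx + w]
            for dy in (-1, 0, 1) for dx in (-1, 0, 1)
            if (dy, dx) != (0, 0)
        )
        activity = s * (1.0 + beta * link)
        fired = (activity > theta).astype(float)
        # Threshold decays each step and jumps by v_theta wherever a neuron fired.
        theta = np.exp(-alpha_theta) * theta + v_theta * fired
        counts += fired
    return counts


def fuse_by_pcnn(coeff_a, coeff_b, **pcnn_kwargs):
    """Keep, per position, the coefficient whose neuron fired more often (ties keep A)."""
    a = np.asarray(coeff_a, dtype=float)
    b = np.asarray(coeff_b, dtype=float)
    scale = max(np.abs(a).max(), np.abs(b).max()) or 1.0  # common normalisation
    counts_a = pcnn_fire_counts(np.abs(a) / scale, **pcnn_kwargs)
    counts_b = pcnn_fire_counts(np.abs(b) / scale, **pcnn_kwargs)
    return np.where(counts_a >= counts_b, a, b)


if __name__ == "__main__":
    strong = np.full((6, 6), 1.0)
    weak = np.full((6, 6), 0.2)
    print(np.array_equal(fuse_by_pcnn(strong, weak), strong))
```

Scaling both inputs by the same maximum keeps the firing counts comparable between the two images; normalising each image separately would hide which one actually carries the stronger coefficient.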
