Distribution transform-based multi-sensor image fusion method

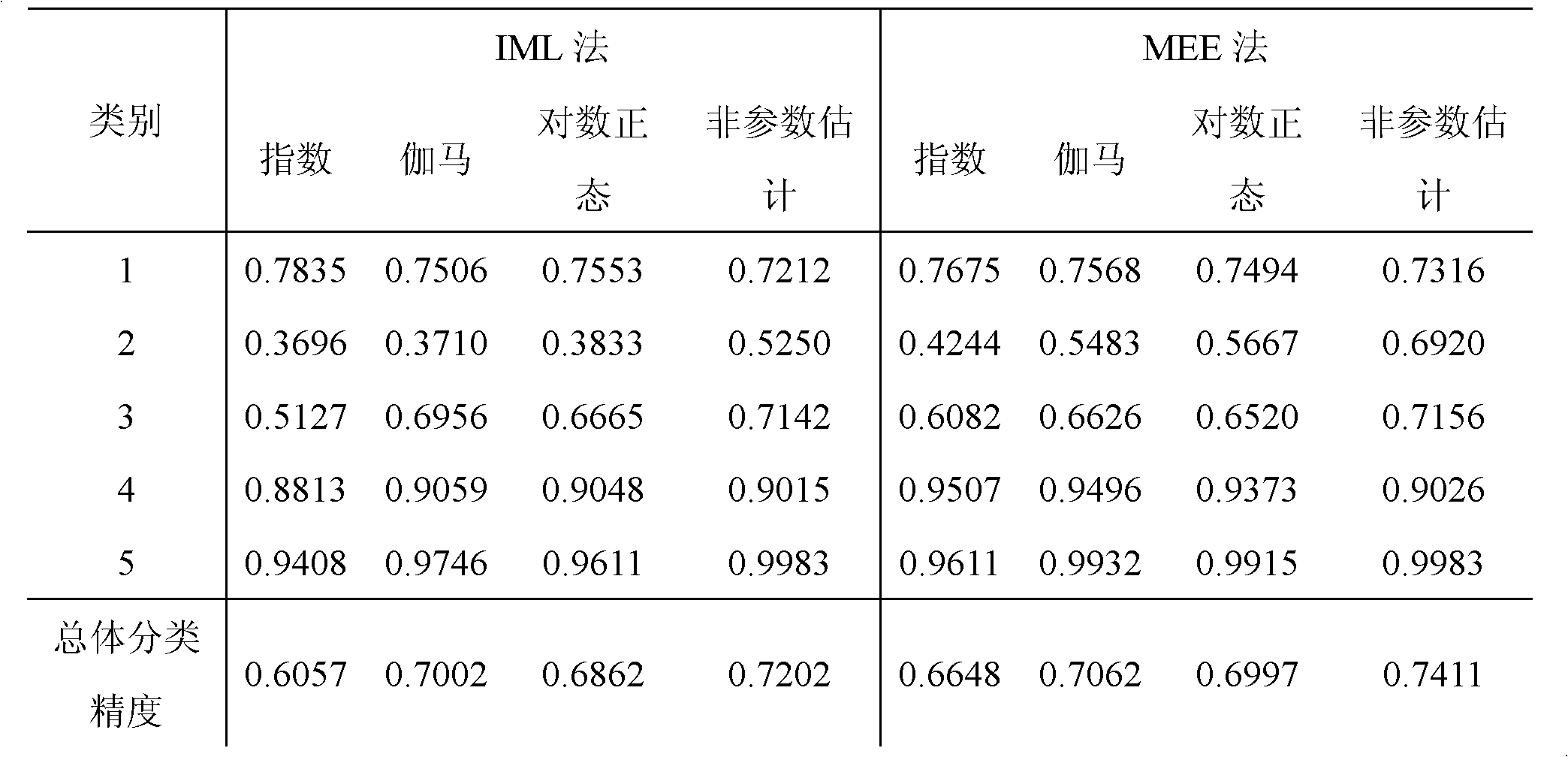

A multi-sensor image fusion technique, applied in image enhancement, image data processing, instruments, and related fields. It addresses problems such as the difficulty of establishing a unified data distribution model and the lack of data, with the stated effects of avoiding overfitting, reducing instability, and improving classification accuracy.

Specific Embodiment 1

[0031] Specific Embodiment 1: This embodiment is carried out in the following steps:

[0032] Step 1: Convert the data format of the synthetic aperture radar (SAR) image and multispectral image files to be fused, so that the gray values of each image are represented in vector form;

[0033] Step 2: Analyze the characteristics of each converted image, and establish a probability density function (PDF) model for the SAR image and a PDF model for the multispectral image;

[0034] Step 3: Apply distribution transformation theory to establish a joint PDF model of the multi-source data according to the correlation between the sources;

[0035] Step 4: Estimate the scale parameters and shape parameters of the joint PDF model obtained in Step 3;

[0036] Step 5: Substitute the parameters obtained in Step 4 into the joint probability density model of Step...
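The steps above can be illustrated with a minimal Python sketch. It is not the patented procedure: the excerpt does not name the marginal distributions or the exact transform, so the sketch assumes a Weibull marginal for the SAR data (which has a shape and a scale parameter), a Gaussian marginal for the multispectral band, and a Gaussian-copula style construction in which each marginal is mapped through its CDF to a standard normal variable. All function names (`flatten`, `fit_weibull`, `fit_joint_model`, and so on) are hypothetical, and SAR values are assumed to be strictly positive.

```python
import math
from statistics import NormalDist, fmean, stdev

STD_NORMAL = NormalDist()

def flatten(image):
    """Step 1: turn a 2-D grid of gray values into a flat vector."""
    return [float(v) for row in image for v in row]

def fit_weibull(x, lo=0.05, hi=50.0, iters=60):
    """Step 4 (assumed marginal): maximum-likelihood Weibull shape and scale."""
    mean_log = fmean(math.log(v) for v in x)

    def g(k):
        # Left-hand side of the Weibull likelihood equation for the shape k.
        num = sum(v ** k * math.log(v) for v in x)
        den = sum(v ** k for v in x)
        return num / den - 1.0 / k - mean_log

    for _ in range(iters):  # bisection works because g is increasing in k
        mid = 0.5 * (lo + hi)
        if g(mid) > 0:
            hi = mid
        else:
            lo = mid
    k = 0.5 * (lo + hi)
    lam = fmean(v ** k for v in x) ** (1.0 / k)
    return k, lam

def weibull_cdf(v, k, lam):
    return 1.0 - math.exp(-((v / lam) ** k))

def weibull_pdf(v, k, lam):
    return (k / lam) * (v / lam) ** (k - 1) * math.exp(-((v / lam) ** k))

def to_gaussian(u, eps=1e-9):
    """Assumed distribution transform: CDF value -> standard normal score."""
    return STD_NORMAL.inv_cdf(min(max(u, eps), 1.0 - eps))

def pearson(a, b):
    ma, mb = fmean(a), fmean(b)
    cov = sum((p - ma) * (q - mb) for p, q in zip(a, b))
    va = sum((p - ma) ** 2 for p in a)
    vb = sum((q - mb) ** 2 for q in b)
    return cov / math.sqrt(va * vb)

def fit_joint_model(sar, ms):
    """Steps 2-5: fit marginals, transform, estimate correlation, build joint PDF."""
    k, lam = fit_weibull(sar)                 # SAR marginal (assumption: Weibull)
    ms_dist = NormalDist(fmean(ms), stdev(ms))  # multispectral marginal (assumption: Gaussian)
    z1 = [to_gaussian(weibull_cdf(v, k, lam)) for v in sar]
    z2 = [to_gaussian(ms_dist.cdf(v)) for v in ms]
    rho = pearson(z1, z2)                     # correlation between the two sources

    def joint_pdf(x, y):
        a = to_gaussian(weibull_cdf(x, k, lam))
        b = to_gaussian(ms_dist.cdf(y))
        # Gaussian copula density times the two marginal densities.
        expo = -(rho * rho * (a * a + b * b) - 2 * rho * a * b) / (2 * (1 - rho * rho))
        copula = math.exp(expo) / math.sqrt(1 - rho * rho)
        return copula * weibull_pdf(x, k, lam) * ms_dist.pdf(y)

    params = {"shape": k, "scale": lam, "mu": ms_dist.mean,
              "sigma": ms_dist.stdev, "rho": rho}
    return params, joint_pdf
```

Calling `fit_joint_model(flatten(sar_image), flatten(ms_band))` on two co-registered images of equal size returns the estimated parameters and a callable joint density. The sketch does not handle perfectly correlated inputs (`rho` equal to 1 or -1), and a different marginal family would need its own CDF, PDF, and estimator.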
