Cache management method of single-carrier multi-target cache system

A cache system and cache management technique, applicable to memory systems, input/output to record carriers, electrical digital data processing, and related fields. It addresses two problems: wasted cache space, and disks that are accessed at different frequencies. It achieves good IO performance.


Embodiment Construction

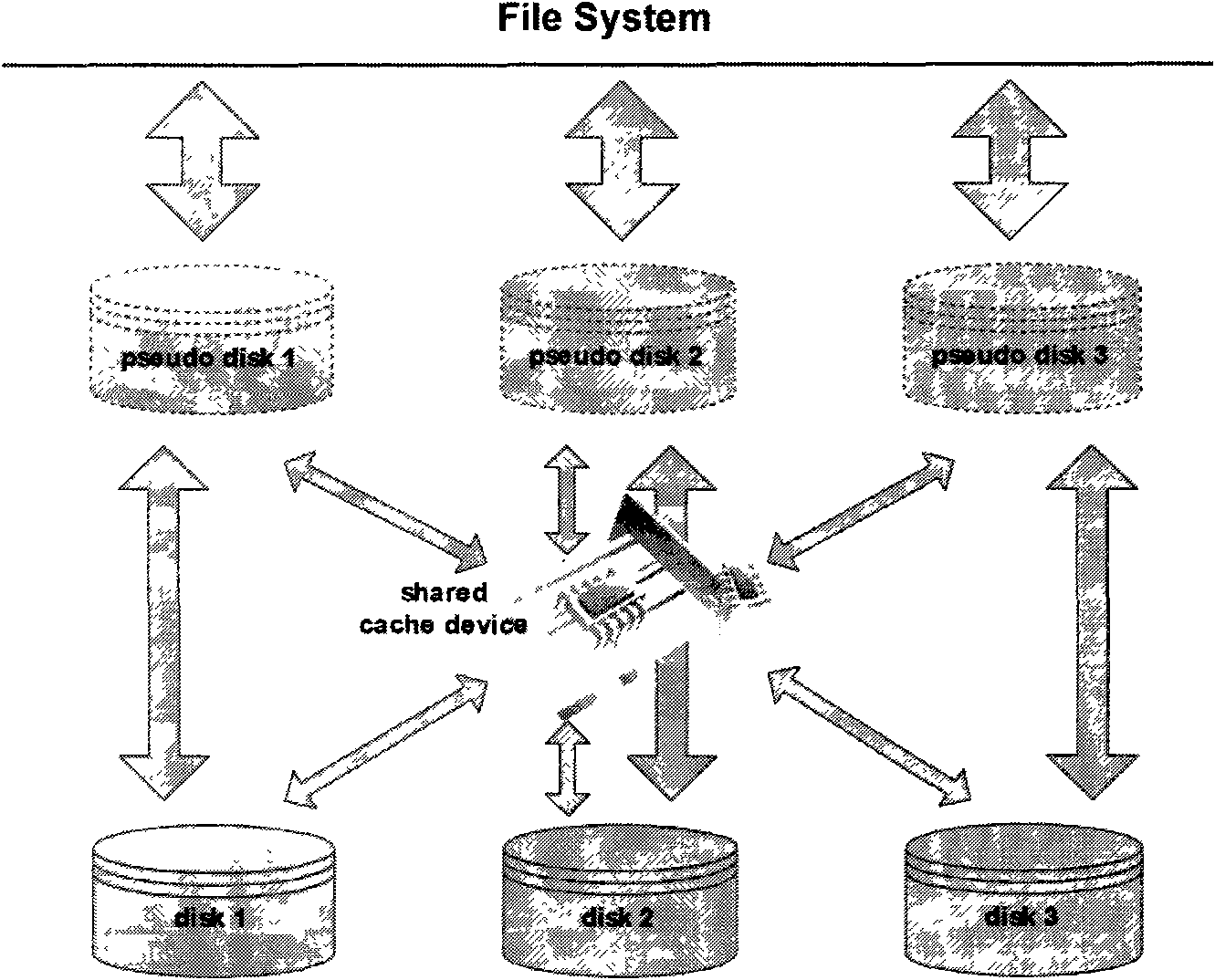

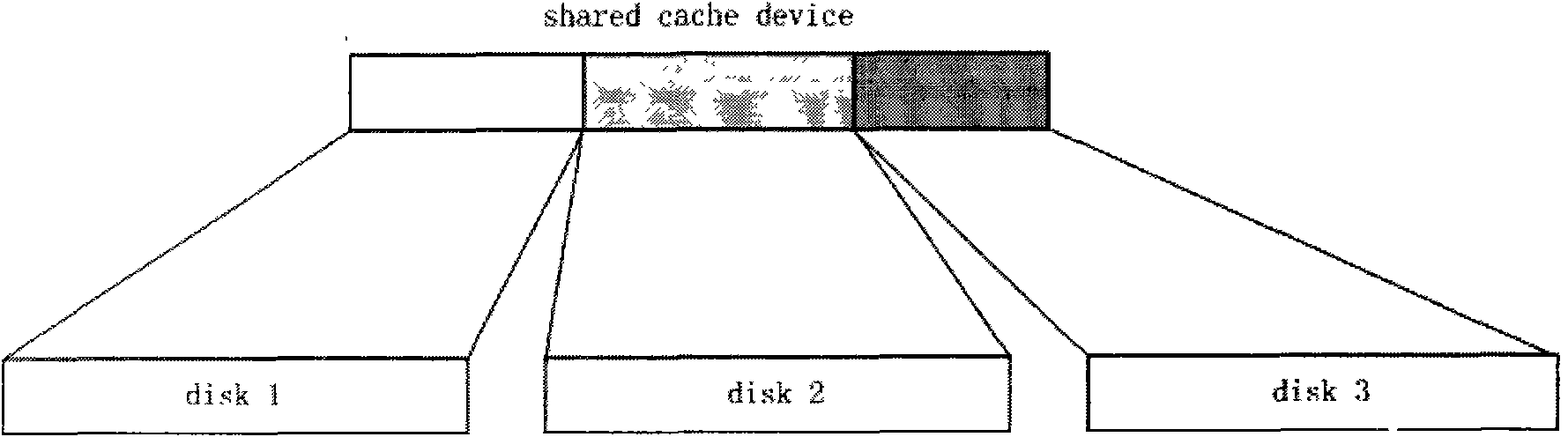

[0021] In the implementation, the single cache device is effectively divided into multiple shares, and each disk device is assigned one of them. The number of shares and the size of each share are determined by the number and capacity of the disk devices to be cached. The system stores all of these mappings.

When an IO request arrives, the system proceeds in three steps:

1. It determines which disk device the IO belongs to.
2. From the mapping between that disk device and the cache device, it finds the offset of the corresponding share on the cache device.
3. The size of the cache space used by that disk is already fixed. The IO's address can therefore be mapped into the cache device's address space using set-associative mapping rules.

The read or write is then performed at the resulting cache device address.
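The partitioning and address mapping described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the patented implementation.

The following are all hypothetical choices made for the example:

* the block size and associativity;
* the function names `partition_cache` and `map_io`;
* the rule that each share gets a number of sets proportional to its disk's capacity.

The source only says that share count and size follow from the number and capacity of the disks.

```python
from dataclasses import dataclass

BLOCK_SIZE = 4096  # assumed cache block size in bytes
WAYS = 4           # assumed associativity: blocks per set


@dataclass(frozen=True)
class Share:
    offset: int    # start of this disk's share on the cache device, in bytes
    num_sets: int  # number of sets allotted to this disk


def partition_cache(cache_bytes, disk_capacities):
    """Divide one cache device into per-disk shares.

    Assumption: each disk gets a number of sets proportional to its capacity.
    """
    total_capacity = sum(disk_capacities.values())
    set_bytes = BLOCK_SIZE * WAYS
    total_sets = cache_bytes // set_bytes
    shares = {}
    next_set = 0
    for disk, capacity in disk_capacities.items():
        n = total_sets * capacity // total_capacity
        shares[disk] = Share(offset=next_set * set_bytes, num_sets=n)
        next_set += n  # shares are laid out back to back on the cache device
    return shares


def map_io(shares, disk, disk_addr):
    """Map a byte address on `disk` to (set_index, cache set address, tag)."""
    share = shares[disk]                   # mapping: disk device -> its share
    block = disk_addr // BLOCK_SIZE
    set_index = block % share.num_sets     # set-associative placement within the share
    tag = block // share.num_sets          # identifies which disk block occupies the set
    set_addr = share.offset + set_index * BLOCK_SIZE * WAYS
    return set_index, set_addr, tag
```

For example, consider a 64 MiB cache shared by two disks whose capacities are in a 1:3 ratio, using `partition_cache(64 * 2**20, {"sda": 1000, "sdb": 3000})`. This gives `sda` 1024 sets starting at offset 0. It gives `sdb` 3072 sets starting at byte 16777216.

Because every disk's address space is folded only into its own share, a busy disk cannot evict another disk's blocks.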

[0022] The whole process is shown in Figure 4.
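The per-request flow (identify the disk, locate its share, map the address set-associatively, then access the cache device) can be sketched end to end as below. This is a hedged illustration, not the method shown in Figure 4.

The following elements are assumptions not stated in the text:

* the class name `PartitionedCache`;
* the `(offset, num_sets)` share tuples;
* the per-set tag store;
* LRU replacement within a set.

Filling a block from disk on a miss, and write-back policy, are left out. The sketch only returns whether the lookup hit and the byte address on the cache device where the read or write would go.

```python
from collections import OrderedDict

BLOCK_SIZE = 4096  # assumed cache block size in bytes
WAYS = 2           # assumed associativity


class PartitionedCache:
    def __init__(self, shares):
        # shares: disk name -> (byte offset of its share, number of sets); assumed layout
        self.shares = shares
        # Tag store per (disk, set): OrderedDict mapping tag -> way, oldest first (LRU order)
        self.sets = {}

    def access(self, disk, disk_addr):
        """Return (hit, cache_addr) for an IO on `disk` at byte `disk_addr`."""
        offset, num_sets = self.shares[disk]   # find this disk's share on the cache device
        block = disk_addr // BLOCK_SIZE
        set_index, tag = block % num_sets, block // num_sets  # set-associative mapping
        ways = self.sets.setdefault((disk, set_index), OrderedDict())
        if tag in ways:
            ways.move_to_end(tag)              # mark as most recently used
            hit, way = True, ways[tag]
        else:
            hit = False
            if len(ways) < WAYS:
                way = len(ways)                # use the next free way in the set
            else:
                _, way = ways.popitem(last=False)  # evict the LRU tag and reuse its way
            ways[tag] = way
        cache_addr = (offset + (set_index * WAYS + way) * BLOCK_SIZE
                      + disk_addr % BLOCK_SIZE)
        return hit, cache_addr
```

For example, give each disk 8 sets, using `PartitionedCache({"sda": (0, 8), "sdb": (65536, 8)})`. Accesses then behave as follows:

* Accessing `("sda", 0)` misses.
* Accessing `("sda", 100)` then hits at cache address 100.
* Accessing `("sdb", 0)` lands at 65536, inside `sdb`'s own share, so it cannot displace `sda`'s blocks.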

[0023] Wh...
