A Recognition Method of Remote Sensing Artificial Objects Based on Object Semantic Tree Model

A technology relating to artificial ground objects and semantic trees, applied in character and pattern recognition, image data processing, instruments, and related fields.

Embodiment Construction

[0067] The present invention is further described below in conjunction with embodiment and accompanying drawing.

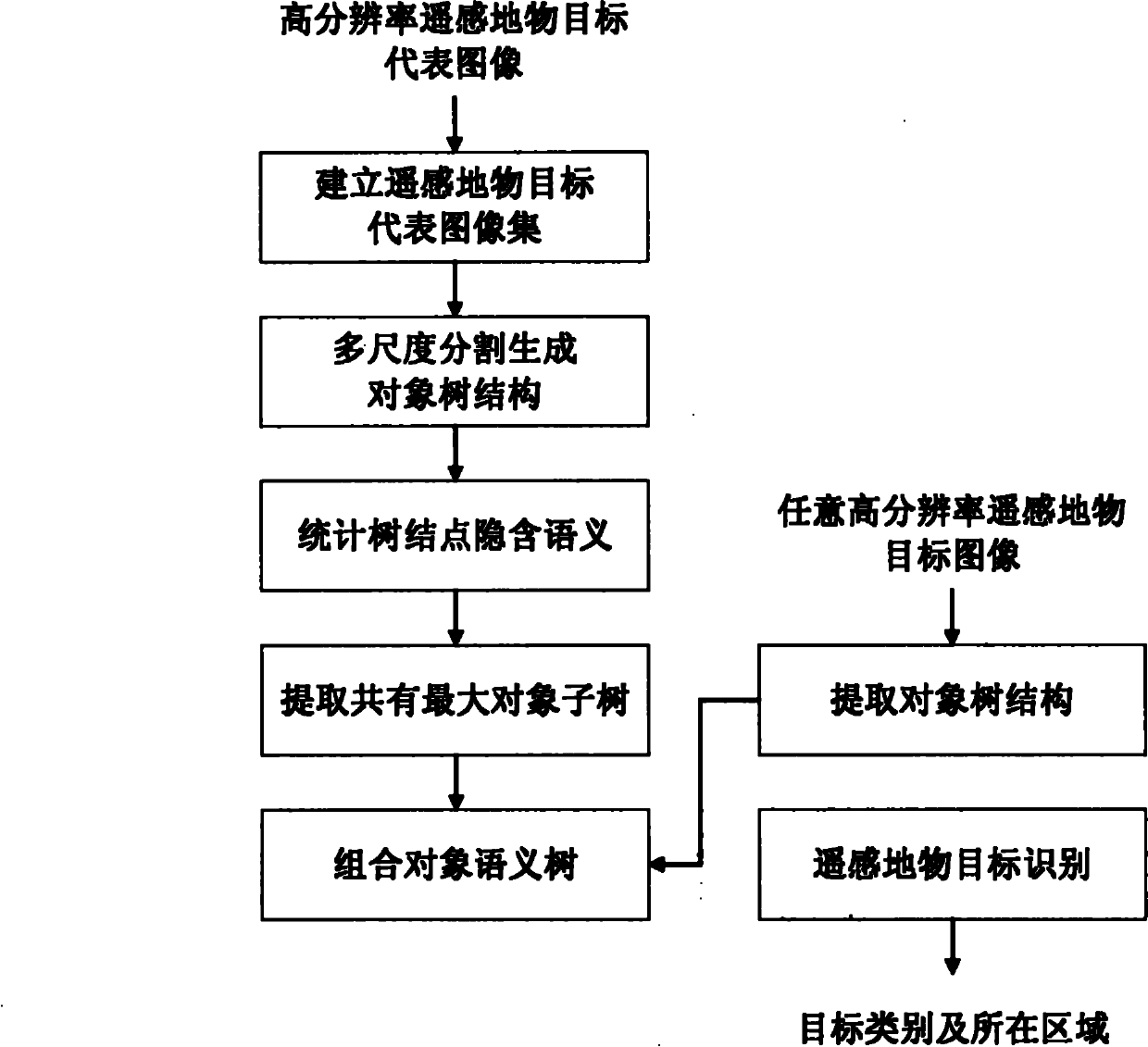

[0068] Figure 1 is a schematic flow chart of the man-made object target recognition method based on the object semantic tree model of the present invention. The specific steps are as follows:

[0069] Step 1: establish a representative image set of high-resolution remote sensing ground objects.

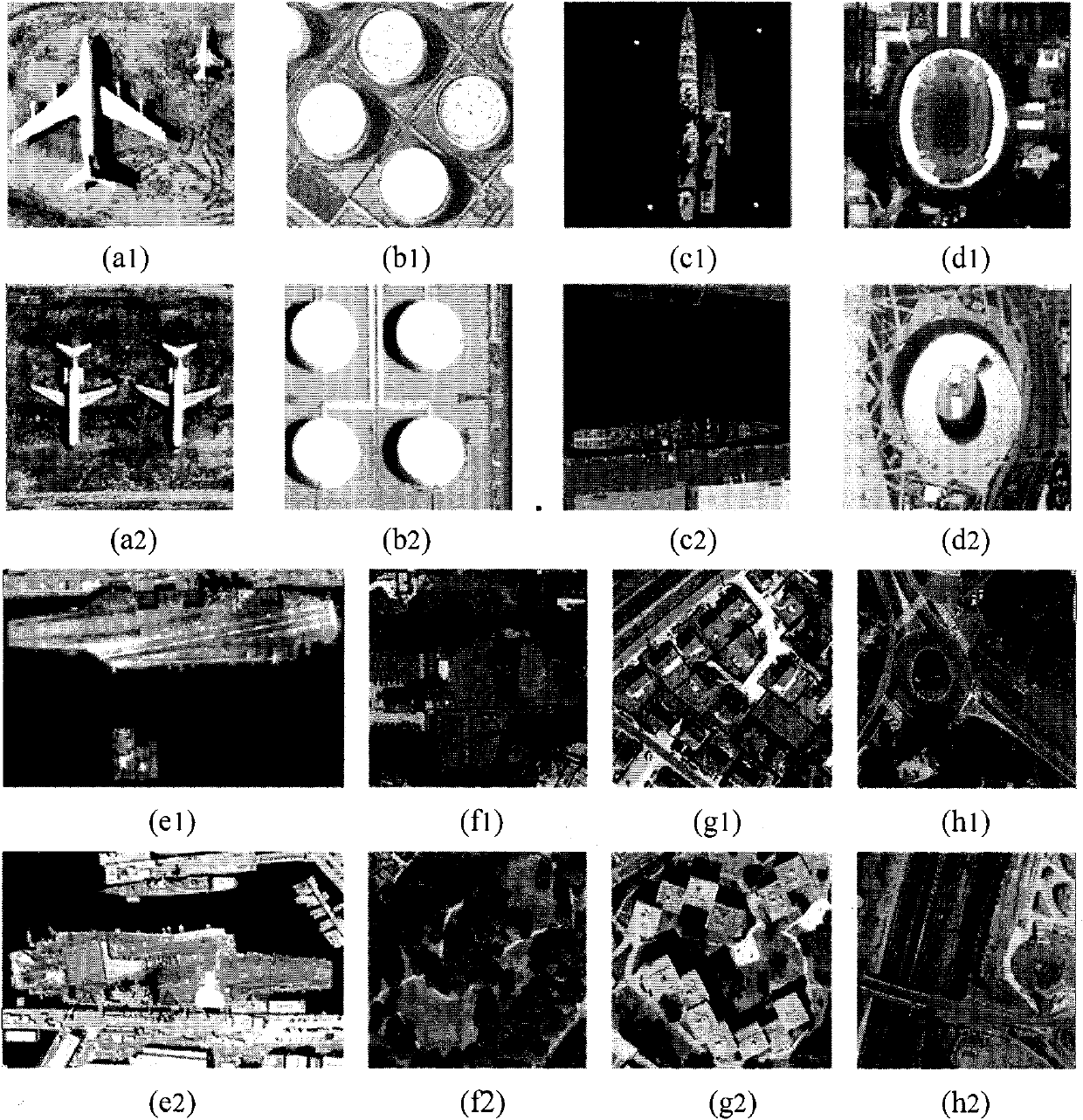

[0070] The images in the remote sensing man-made object dataset were collected from the Internet and have a resolution of about 1 meter. The dataset covers eight target categories (aircraft, oil tanks, ships, stadiums, aircraft carriers, buildings, roads, and vegetation), with 200 images per category. Typical image sizes are approximately 300×300 and 300×450 pixels, as shown in Figure 3.
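The dataset in [0070] can be summarized as a simple manifest. The sketch below is a hypothetical illustration, not the patent's implementation. Only the eight categories, the 200 images per category and the roughly 1 m resolution come from the text. The directory layout, file names, the `ImageRecord` type and the integer label IDs are all assumptions. The sketch also shows the footprint arithmetic: at about 1 m per pixel, a 300×300-pixel image covers roughly 300 m × 300 m of ground.

```python
from dataclasses import dataclass

# Categories and per-class count as stated in paragraph [0070].
CATEGORIES = [
    "aircraft", "oil tank", "ship", "stadium",
    "aircraft carrier", "building", "road", "vegetation",
]
IMAGES_PER_CATEGORY = 200
GSD_METERS = 1.0  # approximate ground sample distance: about 1 m per pixel

# Typical image sizes in pixels, taken from [0070].
TYPICAL_SIZES = [(300, 300), (300, 450)]


@dataclass
class ImageRecord:
    path: str       # hypothetical file path
    category: str   # ground object category name
    label_id: int   # hypothetical integer class index


def build_manifest(root="dataset"):
    """Build a hypothetical manifest of image records.

    Assumes a layout of root/<category>/<index>.png, which the
    patent text does not specify.
    """
    records = []
    for label_id, category in enumerate(CATEGORIES):
        folder = category.replace(" ", "_")
        for i in range(IMAGES_PER_CATEGORY):
            path = f"{root}/{folder}/{i:03d}.png"
            records.append(ImageRecord(path, category, label_id))
    return records


def ground_footprint(width_px, height_px, gsd=GSD_METERS):
    """Return the ground extent (in meters) covered by an image."""
    return width_px * gsd, height_px * gsd


manifest = build_manifest()
print(len(manifest))  # 1600
for w, h in TYPICAL_SIZES:
    print((w, h), "->", ground_footprint(w, h), "m")
```

With 8 categories × 200 images, the manifest holds 1,600 images in total. Assigning label IDs by list position is just a convenient way to keep category names and numeric classes in sync.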

[0071] When preparing the dataset images, each image must be annotated with its actual ground object category (Gro...