Method for extracting and modeling Chinese speech emotion by combining glottal excitation and vocal tract modulation information

An extraction and modeling method, applied in the information field, that addresses problems such as the complexity of the speaker's emotional state and the inability to completely separate glottal excitation from vocal tract modulation information, with the effect of improving robustness.


Embodiment Construction

[0043] The technical solution of the present invention will be further elaborated below in conjunction with the accompanying drawings.

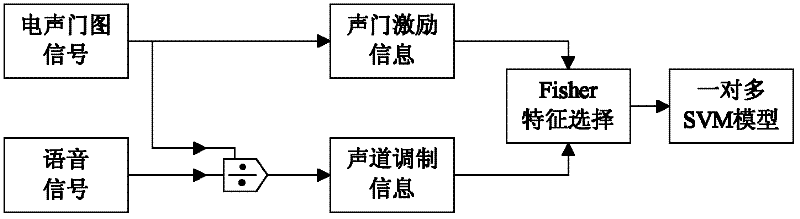

[0044] Figure 1 shows the flow chart of the method for extracting and recognizing Chinese speech emotion points by combining glottal excitation and vocal tract modulation. The method is divided into two main parts: the extraction of Chinese speech emotion points and the recognition of Chinese speech emotion points.

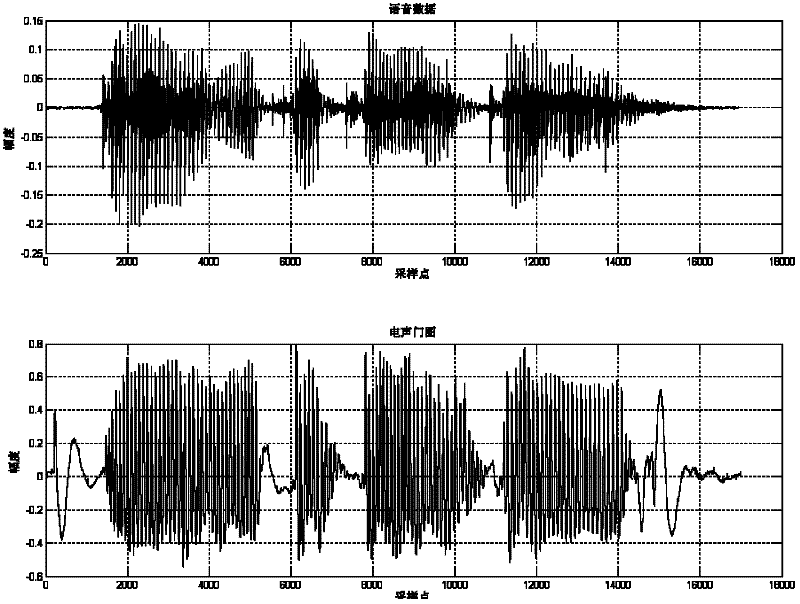

[0045] Part 1: the method for extracting Chinese glottal excitation information. The steps are as follows:

[0046] Step 1. Formulate the specification for the electroglottogram (EGG) emotional speech database;

[0047] Every step in the production of the speech database should comply with a defined specification. These cover the speakers, the design of the recording script, the recording itself, the naming of audio files, and the experiment records. The production specific...
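As a minimal sketch of how the five specification categories listed in [0047] could be tracked during database production, the following Python snippet checks which categories still lack a written specification. The class name `DatabaseSpec`, the category keys, and the placeholder texts are hypothetical and are not taken from the patent; the content of each specification is not given in the source and is left as free text.

```python
from dataclasses import dataclass, field

# The five specification categories named in paragraph [0047].
# The key spellings are illustrative assumptions, not patent terminology.
REQUIRED_SPECS = (
    "speaker",
    "recording_script_design",
    "recording",
    "audio_file_naming",
    "experiment_record",
)


@dataclass
class DatabaseSpec:
    """Hypothetical container mapping a category name to its specification text."""

    documents: dict = field(default_factory=dict)

    def missing(self):
        """Return required categories that have no non-empty specification yet."""
        return [
            name
            for name in REQUIRED_SPECS
            if not self.documents.get(name, "").strip()
        ]

    def is_complete(self):
        """True once every required category has a specification."""
        return not self.missing()


if __name__ == "__main__":
    # Placeholder texts only; the real specifications are defined by the patent.
    spec = DatabaseSpec({"speaker": "placeholder", "recording": "placeholder"})
    print(spec.missing())
```

A checklist of this kind only verifies that each category has been documented before recording starts; it does not validate the content of any individual specification.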
