Facial expression synthesis method based on feature points

A facial expression synthesis method in the field of image processing. It addresses the problem that existing approaches do not fully consider the special structure of the face mesh, and it achieves a small computational load, lower cost, and performance that meets the needs of real-time animation.

Embodiment Construction

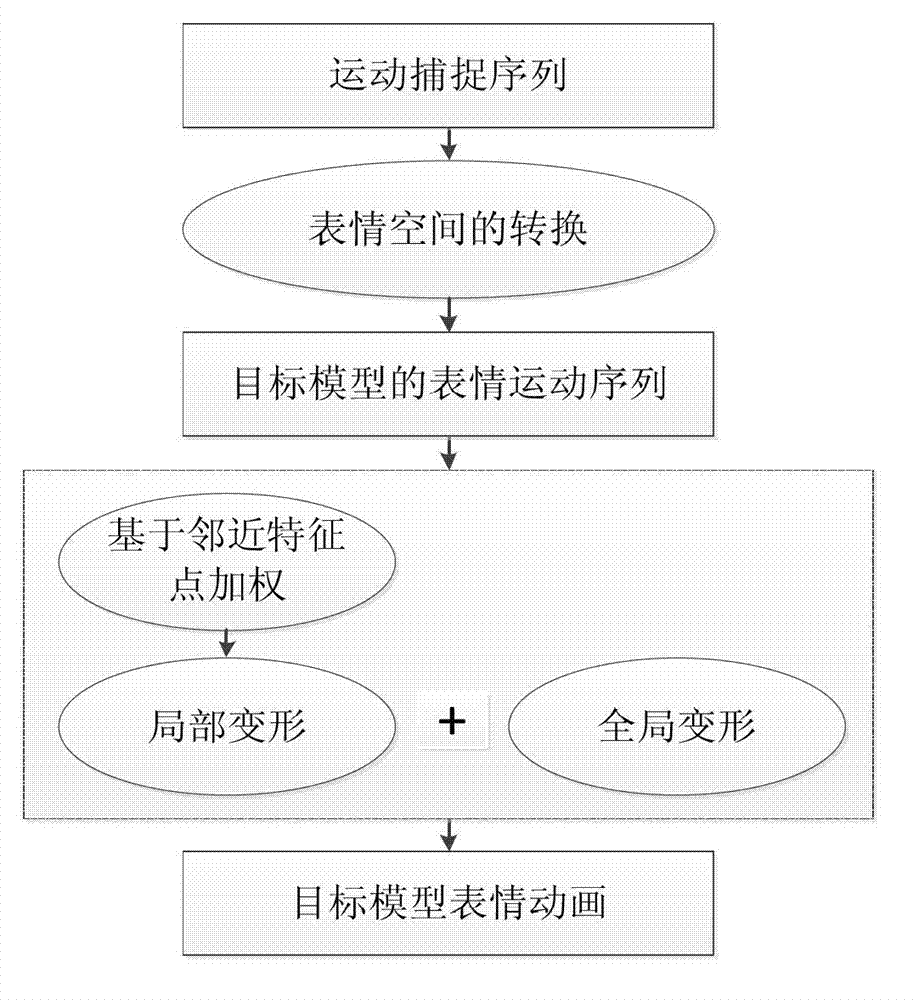

[0025] Figure 1 shows the algorithm flowchart of the present invention, which comprises the following steps:

[0026] Step 1: conversion of the expression space

[0027] Establish the mapping relationship between the first frame of the motion capture data and the marker points of the target face model. The mapping relationship can be expressed as follows:

[0028]

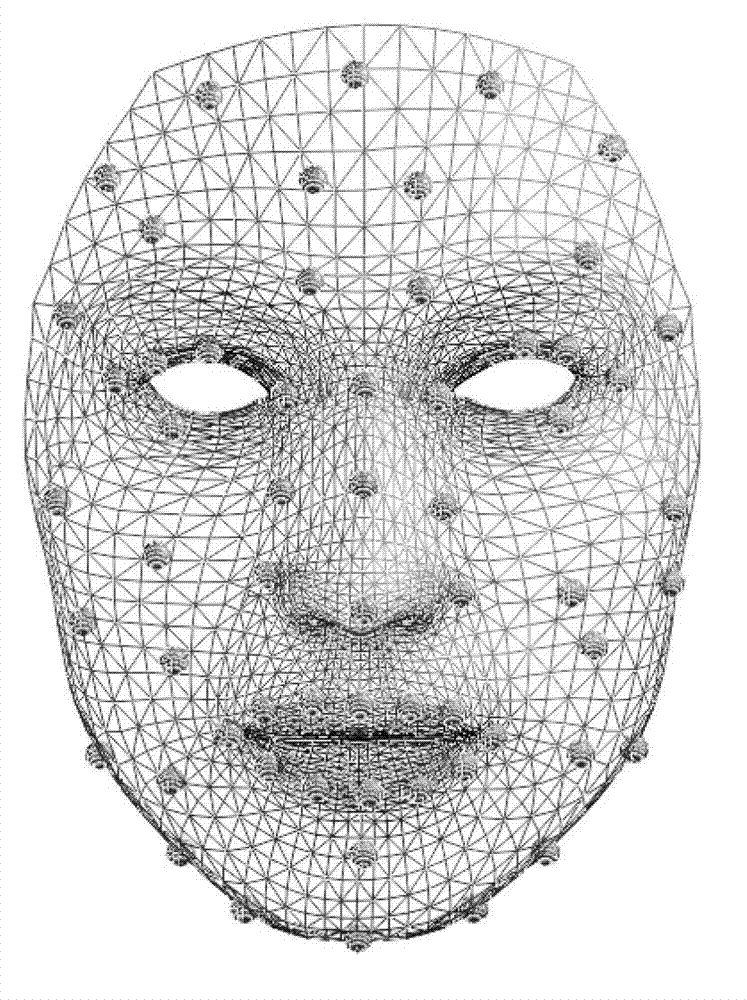

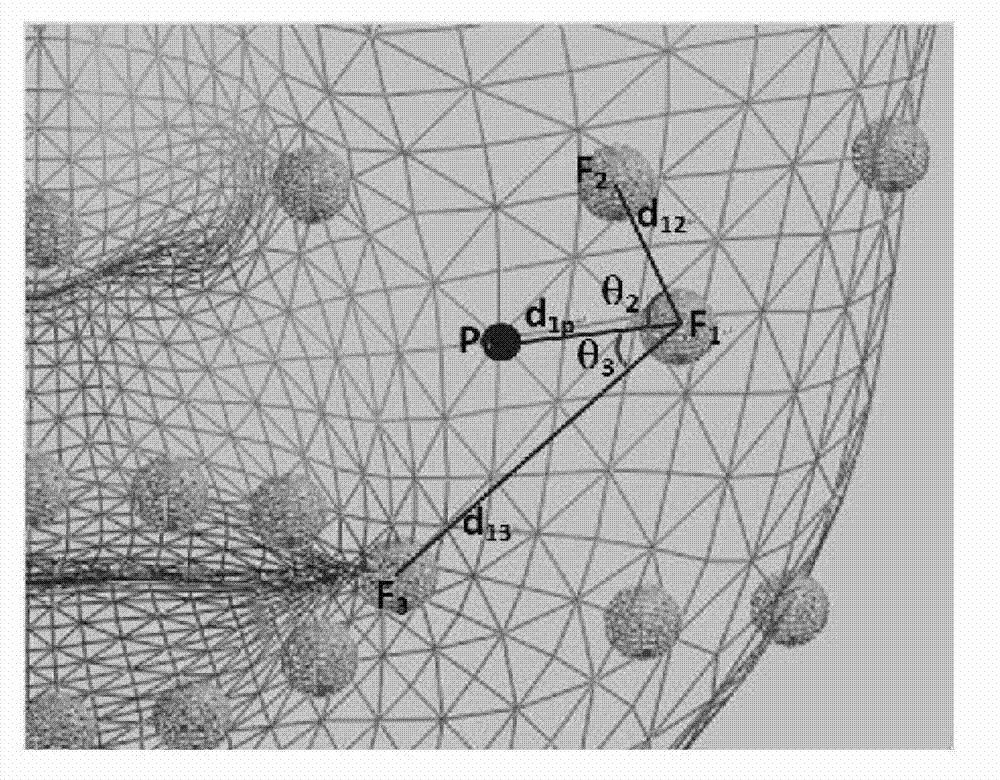

[0029] In this mapping, the source position is the spatial coordinate (x_i, y_i, z_i) of the i-th marker point in the first frame of the motion capture sequence, with x_i, y_i and z_i in millimeters; the distance term is the geodesic distance between the i-th and the j-th marker points in the first frame, also in millimeters; w_j is the weight coefficient to be solved for; n is the number of marker points, an integer whose value is 60 according to the initial marker layout; the target position is the spatial coordinate (x_i, y_i, z_i) of the i-th marker point on the target face model; the x_i, ...
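The mapping described in [0027] to [0029] can be illustrated with a minimal sketch of how weights w_j might be solved for from n = 60 marker correspondences. Several parts are assumptions rather than the patent's stated method: the kernel (a multiquadric, `sqrt(d^2 + c^2)`) is chosen only for illustration because the actual formula is not reproduced in the text; Euclidean distances stand in for the geodesic distances the patent specifies; the function names `pairwise_distances`, `fit_rbf_weights` and `apply_rbf` are hypothetical; and the marker coordinates are synthetic.

```python
import numpy as np


def pairwise_distances(points):
    """Euclidean distance matrix (assumption: stand-in for geodesic distances)."""
    diff = points[:, None, :] - points[None, :, :]
    return np.linalg.norm(diff, axis=-1)


def fit_rbf_weights(dist, targets, c=1.0):
    """Solve phi @ W = targets for W, one weight row per marker.

    Assumption: multiquadric kernel phi(d) = sqrt(d^2 + c^2).
    """
    phi = np.sqrt(dist ** 2 + c ** 2)
    return np.linalg.solve(phi, targets)


def apply_rbf(dist_to_markers, weights, c=1.0):
    """Map points to the target space given their distances to the markers."""
    phi = np.sqrt(dist_to_markers ** 2 + c ** 2)
    return phi @ weights


# Synthetic data: 60 markers in millimeters, target is a scaled, shifted copy.
rng = np.random.default_rng(0)
n = 60
source = rng.uniform(-80.0, 80.0, size=(n, 3))
target = 1.1 * source + np.array([5.0, -3.0, 2.0])

dist = pairwise_distances(source)
weights = fit_rbf_weights(dist, target)

# Evaluating the mapping at the markers themselves should reproduce the targets.
mapped = apply_rbf(dist, weights)
max_error = float(np.abs(mapped - target).max())
```

Once the weights are known, the same `apply_rbf` call could be fed the distances from any later-frame marker position to the first-frame markers, which is one plausible way such a mapping would carry captured motion into the target model's space.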
