Indoor three-dimensional scene reconstruction method based on a double-layer registration method

A 3D scene reconstruction and registration technology, applied to the reconstruction of large-scale indoor scenes and the 3D reconstruction of indoor environments. It addresses problems such as GPU hardware configuration requirements that cannot be met, and aims to improve reconstruction accuracy while resolving cost and real-time constraints.

Detailed Description of the Embodiments

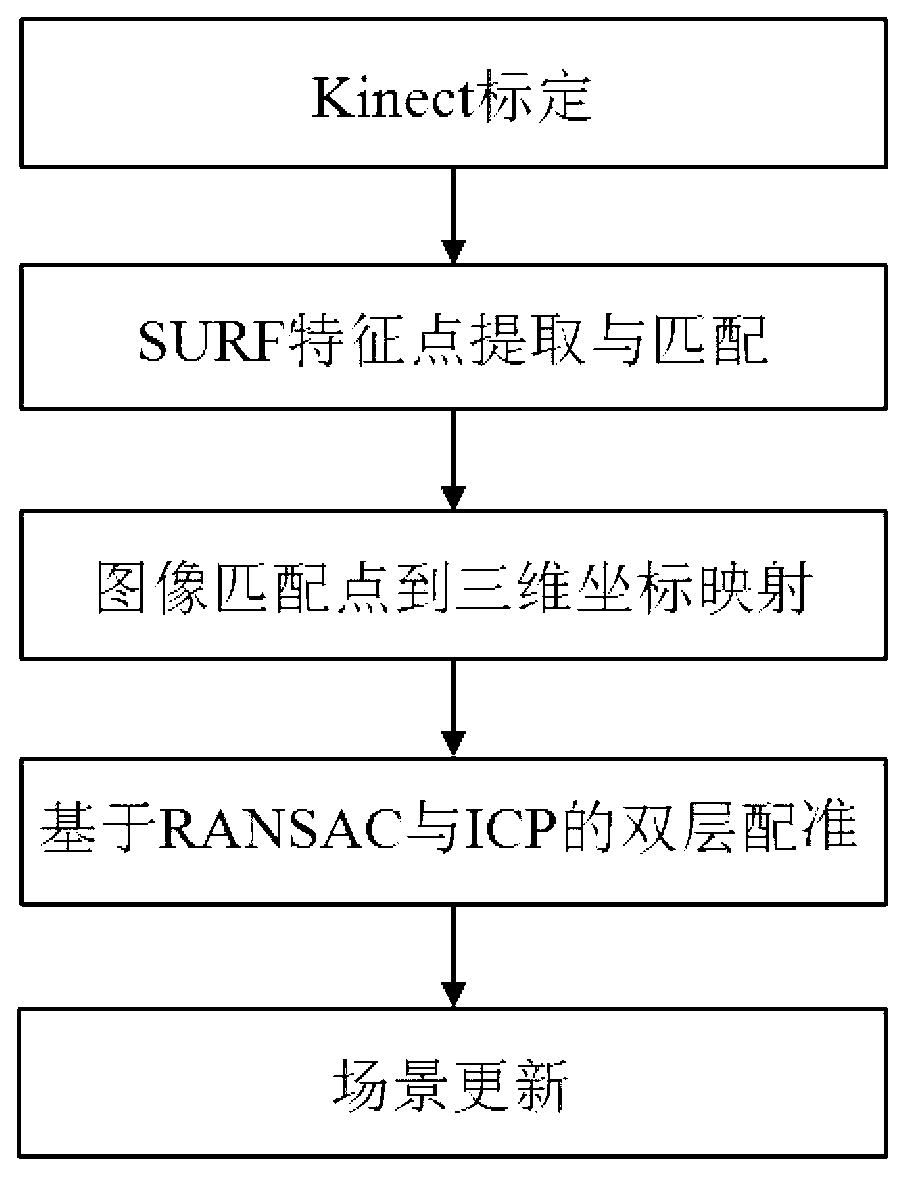

[0036] The present invention is described in further detail below with reference to the accompanying drawings. As shown in Figure 1, the present invention comprises the following steps:

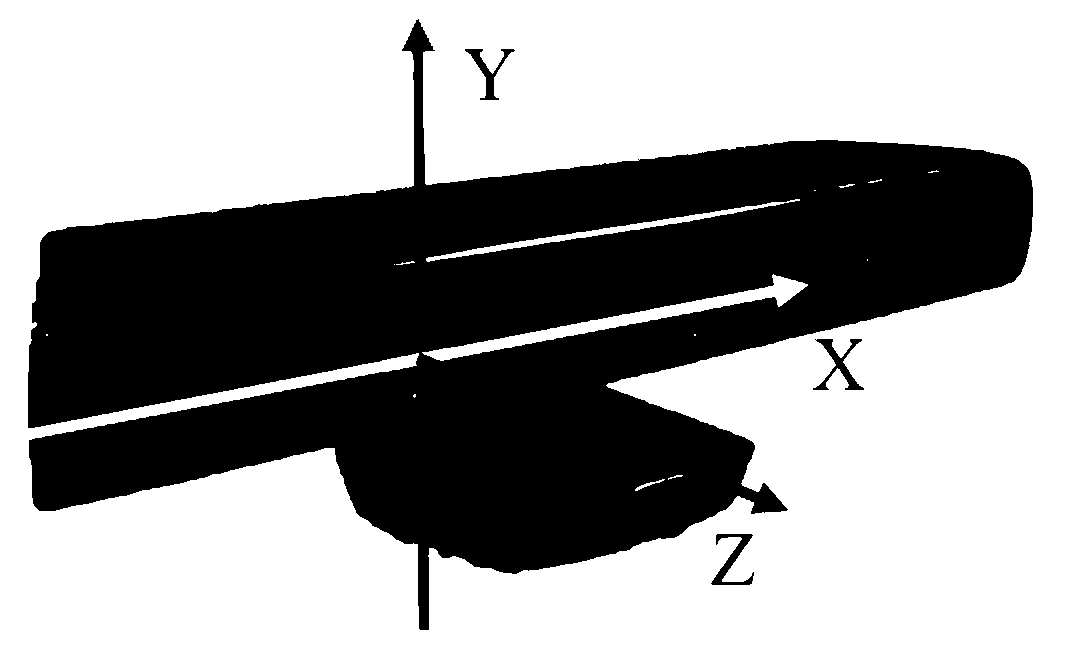

[0037] Step 1: Calibrate the Kinect. The specific method is as follows:

[0038] (1) Print a chessboard template. The present invention uses a sheet of A4 paper, with a chessboard square spacing of 0.25 cm.

[0039] (2) Photograph the chessboard from multiple angles. When shooting, make the chessboard fill the frame as much as possible while keeping every corner of the chessboard in view. A total of 8 template images are captured.

[0040] (3) Detect the feature points in each image, that is, the corner points where the black squares of the chessboard meet.

[0041] (4) Calculate the Kinect calibration parameters.

[0042] The intrinsic parameter matrix $K_{ir}$ of the infrared camera:

[0043] $K_{ir}$ = ...
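To illustrate the role an intrinsic matrix plays after the calibration steps above, here is a minimal sketch in Python with NumPy. It builds the planar coordinates of the chessboard corners from the 0.25 cm spacing and projects them through a pinhole intrinsic matrix. Everything beyond the spacing is an assumption made for illustration: the function names `chessboard_object_points` and `project`, the 9 x 6 corner layout, the 50 cm board distance, and above all the values in `K_example`, which are placeholders and not the patent's $K_{ir}$ (that matrix is elided in the source).

```python
import numpy as np


def chessboard_object_points(cols, rows, square_size):
    """Return a (rows*cols, 3) array of corner coordinates on the board plane Z = 0."""
    grid = np.zeros((rows * cols, 3), dtype=np.float64)
    # Corners are ordered row by row: (0,0), (1,0), ..., (cols-1,0), (0,1), ...
    grid[:, :2] = np.mgrid[0:cols, 0:rows].T.reshape(-1, 2) * square_size
    return grid


def project(points_cam, K):
    """Project (N, 3) points given in the camera frame to (N, 2) pixel coordinates."""
    uvw = points_cam @ K.T            # homogeneous image coordinates
    return uvw[:, :2] / uvw[:, 2:3]   # divide by depth


# Placeholder intrinsics (fx, fy, cx, cy); NOT the values from the patent.
K_example = np.array([
    [580.0,   0.0, 320.0],
    [  0.0, 580.0, 240.0],
    [  0.0,   0.0,   1.0],
])

# Assumed 9 x 6 corner layout; the 0.25 cm spacing is taken from step (1).
board = chessboard_object_points(cols=9, rows=6, square_size=0.25)

# Assumed pose: board parallel to the image plane, 50 cm in front of the camera.
board_cam = board + np.array([0.0, 0.0, 50.0])
pixels = project(board_cam, K_example)
```

With these placeholder values the first corner lies on the optical axis and lands at the principal point (320, 240). Calibration works in the opposite direction: given the detected pixel positions of the corners in the 8 images and the known board coordinates, it estimates the intrinsic matrix that best explains them.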
