Calibration method for 3D (three-dimensional) acquisition system

A calibration method for a 3D acquisition system, applied in the field of system calibration, which addresses problems such as low efficiency, slow speed, and calibration accuracy that cannot be guaranteed.


Embodiment Construction

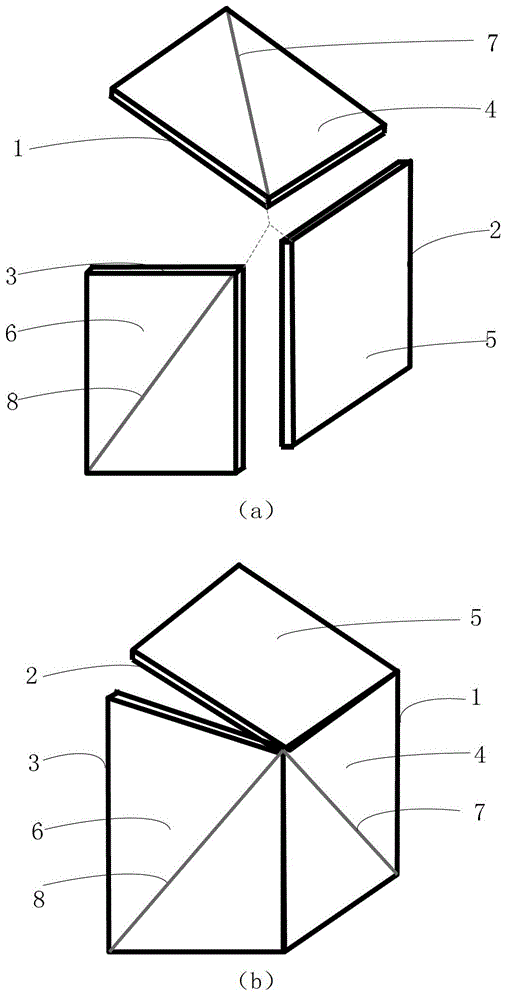

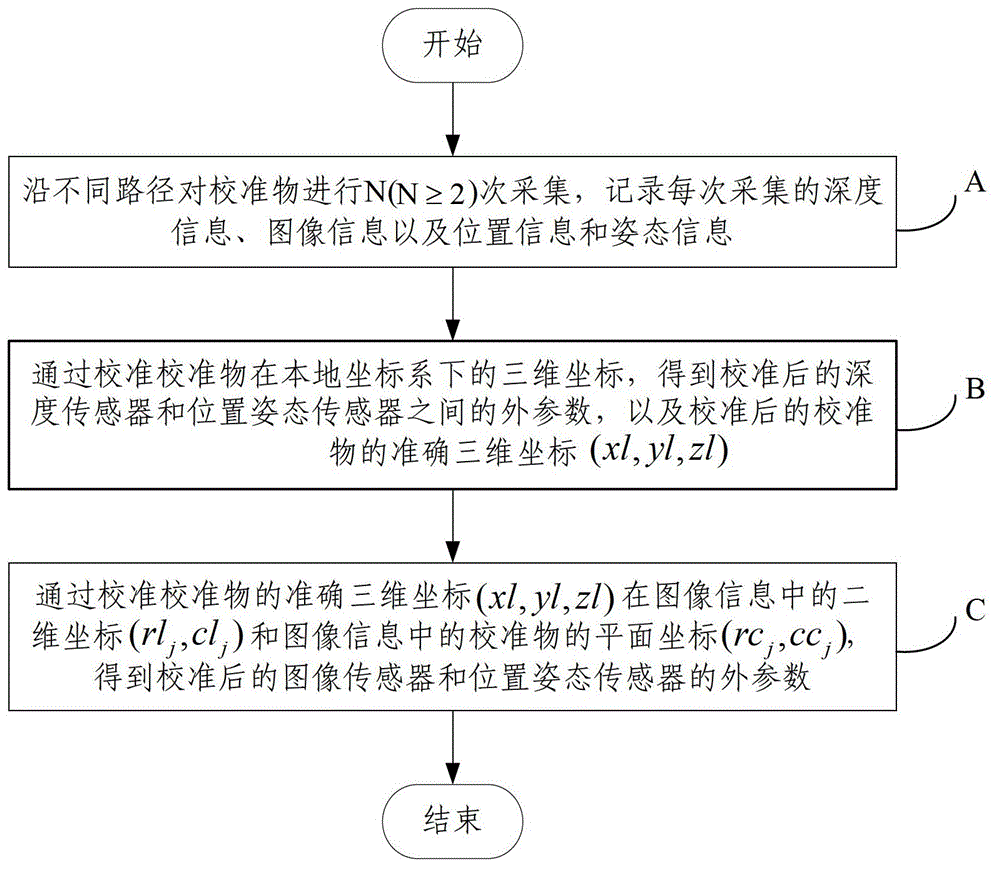

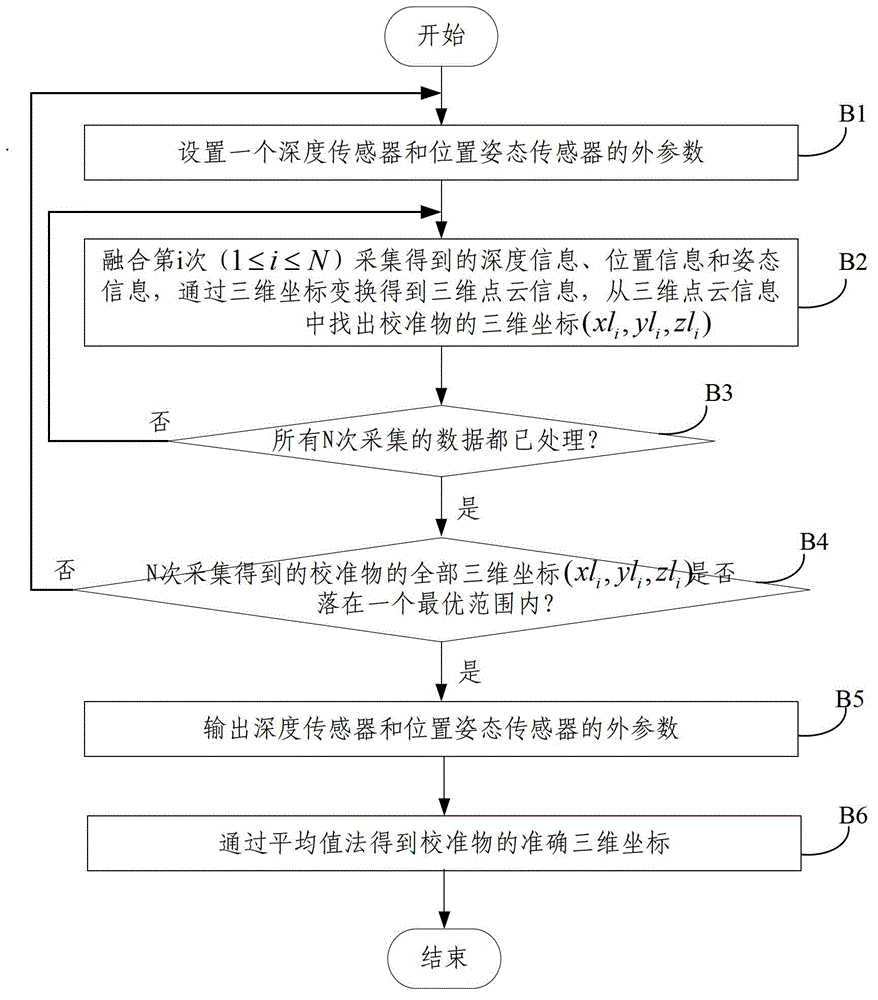

[0060] Specific embodiments of the present invention are described in further detail below with reference to the accompanying drawings and examples. The following examples illustrate the present invention but are not intended to limit its scope.

[0061] In Embodiment 1, the depth sensor is a two-dimensional laser radar (2D LiDAR); the image sensor is a color CMOS or color CCD image sensor; and the position and attitude sensor is an integrated navigation system composed of a GPS receiver and an IMU.
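For readers unfamiliar with this sensor combination, the following minimal sketch shows how a single 2D laser scan is commonly placed into world coordinates: each return is first expressed in the scanner frame, then moved into the platform (body) frame by a laser-to-body extrinsic transform, then into the world frame by the GPS/IMU pose. The function names, the Z-Y-X (yaw-pitch-roll) angle convention, and the frame labels are assumptions for illustration; the patent text shown here does not specify them.

```python
import numpy as np


def rot_zyx(roll: float, pitch: float, yaw: float) -> np.ndarray:
    """Rotation matrix R = Rz(yaw) @ Ry(pitch) @ Rx(roll); angles in radians.

    The Z-Y-X convention is an assumption; navigation systems differ.
    """
    cr, sr = np.cos(roll), np.sin(roll)
    cp, sp = np.cos(pitch), np.sin(pitch)
    cy, sy = np.cos(yaw), np.sin(yaw)
    rx = np.array([[1.0, 0.0, 0.0], [0.0, cr, -sr], [0.0, sr, cr]])
    ry = np.array([[cp, 0.0, sp], [0.0, 1.0, 0.0], [-sp, 0.0, cp]])
    rz = np.array([[cy, -sy, 0.0], [sy, cy, 0.0], [0.0, 0.0, 1.0]])
    return rz @ ry @ rx


def scan_to_world(ranges, angles, R_bl, t_bl, R_wb, t_wb) -> np.ndarray:
    """Map 2D laser returns (range, bearing) into the world frame.

    R_bl, t_bl: hypothetical laser-to-body extrinsics (what a calibration would estimate).
    R_wb, t_wb: body pose in the world frame, as reported by the GPS/IMU.
    Returns an (n, 3) array of world-frame points.
    """
    ranges = np.asarray(ranges, dtype=float)
    angles = np.asarray(angles, dtype=float)
    # A 2D scanner measures in its own x-y plane, so z = 0 in the laser frame.
    p_l = np.stack([ranges * np.cos(angles),
                    ranges * np.sin(angles),
                    np.zeros_like(ranges)], axis=1)
    p_b = p_l @ R_bl.T + t_bl  # laser frame -> body frame
    p_w = p_b @ R_wb.T + t_wb  # body frame -> world frame
    return p_w
```

For example, with identity extrinsics and the platform at (10, 5, 0) heading 90°, a 2 m return straight ahead of the scanner lands at approximately (10, 7, 0) in the world frame. Any error in `R_bl` or `t_bl` shifts every georeferenced point, which is why these extrinsics need calibrating.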

[0062] Step A: Use the 3D acquisition system to acquire the calibration object N times (N ≥ 2) along different paths; that is, the depth sensor, image sensor, and position and attitude sensor mounted on the mobile platform perform N (N ≥ 2) acquisitions of the calibration object, each along a different path. Each acquisition records a set of depth information of the calibration object obtained by the depth sensor, records one ...
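One plausible reason for requiring N ≥ 2 acquisitions along different paths is that an error in the laser-to-platform extrinsics displaces the same calibration-object feature differently on each pass, so the passes disagree in world coordinates. The sketch below illustrates that idea with a hypothetical per-acquisition record (`Acquisition`) and a cross-path consistency residual (`cross_path_residual`). Both names, the choice of a single 3D feature point per pass, and the RMS residual are assumptions for illustration, not the calibration procedure of the patent, whose remaining steps are truncated here.

```python
from dataclasses import dataclass

import numpy as np


@dataclass
class Acquisition:
    """One pass over the calibration object (hypothetical record layout)."""
    feature_laser: np.ndarray  # 3-vector: a calibration-object feature in the laser frame
    R_wb: np.ndarray           # 3x3 body-to-world rotation from the GPS/IMU
    t_wb: np.ndarray           # 3-vector body position in the world frame
    image_path: str            # image captured by the image sensor during this pass


def cross_path_residual(acqs, R_bl, t_bl) -> float:
    """RMS distance of each pass's world-frame feature from their common mean.

    Near zero when the candidate laser-to-body extrinsics (R_bl, t_bl) make all
    passes agree; requires at least two acquisitions.
    """
    if len(acqs) < 2:
        raise ValueError("need N >= 2 acquisitions along different paths")
    # laser frame -> body frame -> world frame, one point per acquisition
    pts = np.array([a.R_wb @ (R_bl @ a.feature_laser + t_bl) + a.t_wb for a in acqs])
    centroid = pts.mean(axis=0)
    return float(np.sqrt(np.mean(np.sum((pts - centroid) ** 2, axis=1))))
```

For example, with two passes at opposite headings, a 0.5 m error in the assumed laser offset places the feature 1 m apart in the world frame (residual 0.5 m), while the correct offset gives a residual of zero. A single pass, or several passes with the same heading, could hide such an error, which is consistent with the text's requirement of different paths.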
