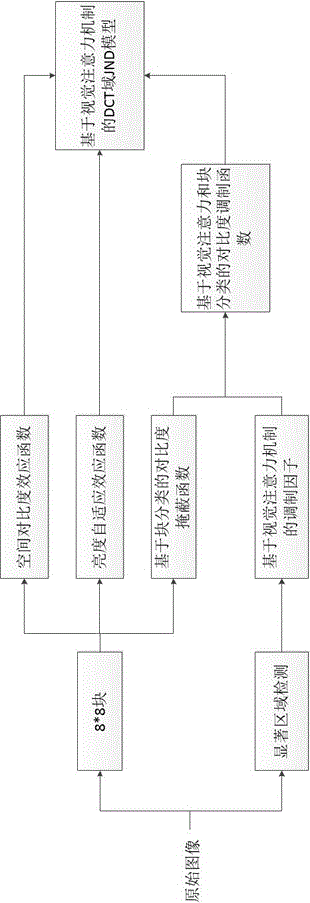

Image JND Threshold Calculation Method Based on Visual Attention Mechanism in DCT Domain

A visual attention and image technology, applied in the field of image/video coding, that addresses the problem of the JND model not being considered and achieves fine and accurate segmentation.

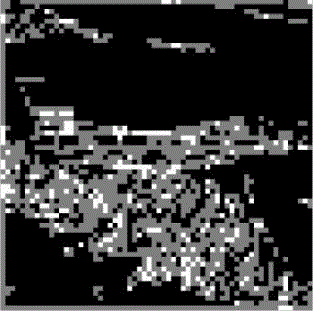

Embodiment Construction

[0029] The present invention is further described below with reference to the accompanying drawing and a specific example:

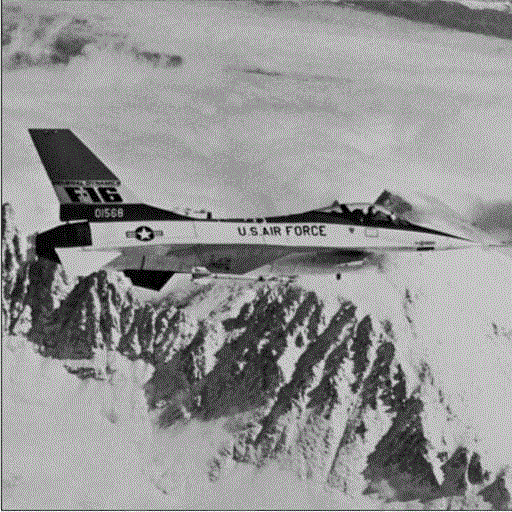

[0030] The example provided by the present invention uses MATLAB 7 as the simulation platform and the 512 × 512 BMP grayscale image Airplane as the test image. Each step of the example is described in detail below:

[0031] Step (1): select a 512 × 512 BMP grayscale image as the input test image and apply an 8 × 8 block DCT to it, transforming it from the spatial domain into the DCT domain;
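The block transform in step (1) can be sketched as follows. This is a minimal pure-Python illustration, not the patent's MATLAB code: the function names (`dct_matrix`, `dct2_block`, `blockwise_dct`) are hypothetical, and the orthonormal DCT-II scaling is an assumption, since the source does not state which normalization it uses.

```python
import math

N = 8  # block size from step (1)

def dct_matrix(n=N):
    """Orthonormal DCT-II basis matrix C (assumed scaling), so coeffs = C * B * C^T."""
    rows = []
    for u in range(n):
        scale = math.sqrt(1.0 / n) if u == 0 else math.sqrt(2.0 / n)
        rows.append([scale * math.cos((2 * x + 1) * u * math.pi / (2 * n))
                     for x in range(n)])
    return rows

def matmul(a, b):
    """Plain matrix product of two lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]

def transpose(m):
    return [list(col) for col in zip(*m)]

def dct2_block(block, c=None):
    """2-D DCT of one square block."""
    if c is None:
        c = dct_matrix(len(block))
    return matmul(matmul(c, block), transpose(c))

def blockwise_dct(image, n=N):
    """Split a grayscale image (list of rows) into n x n blocks and transform each.

    Returns a dict mapping (block_row, block_col) to that block's coefficients.
    """
    height, width = len(image), len(image[0])
    if height % n or width % n:
        raise ValueError("image dimensions must be multiples of the block size")
    c = dct_matrix(n)
    out = {}
    for by in range(0, height, n):
        for bx in range(0, width, n):
            block = [row[bx:bx + n] for row in image[by:by + n]]
            out[(by // n, bx // n)] = dct2_block(block, c)
    return out
```

For a 512 × 512 input this yields 64 × 64 = 4096 blocks, each holding 64 coefficients indexed by (i, j), which matches the (n, i, j) indexing used in the later formulas.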

[0032] Step (2): in the DCT domain, the just-noticeable distortion (JND) value is obtained as the product of the basic spatial contrast sensitivity threshold and the luminance adaptation modulation factor, calculated as follows:

[0033] $T_{JND}(n,i,j) = T_{Basic}(n,i,j) \times F_{lum}(n)$ (1)

[0034] where $T_{Basic}(n,i,j)$ denotes the spatial contrast sensitivity threshold, F l...
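Formula (1) is an element-wise product: every coefficient threshold in block n is scaled by that block's single luminance factor. The sketch below shows only this combination step. The function name `jnd_threshold` and the numeric inputs are hypothetical placeholders, because the source text available here does not give the expressions for the basic threshold or the luminance factor.

```python
def jnd_threshold(t_basic, f_lum):
    """Apply formula (1): T_JND(n,i,j) = T_Basic(n,i,j) * F_lum(n).

    t_basic: list over blocks n of 2-D lists indexed [i][j]
    f_lum:   list with one luminance modulation factor per block n
    """
    if len(t_basic) != len(f_lum):
        raise ValueError("need exactly one luminance factor per block")
    return [[[t * f for t in row] for row in block]
            for block, f in zip(t_basic, f_lum)]

# Placeholder inputs for two 8 x 8 blocks (arbitrary values, not from the patent).
t_basic = [[[2.0] * 8 for _ in range(8)],
           [[4.0] * 8 for _ in range(8)]]
f_lum = [1.5, 0.5]

t_jnd = jnd_threshold(t_basic, f_lum)
```

Because the luminance factor depends only on n, it is computed once per block and shared across all 64 coefficient positions of that block.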
