A Video Summarization Method Based on Spatial-Temporal Recombination of Events

A video summarization technique built around activity events, applicable to special-purpose data processing, instruments, electrical digital data processing, and similar fields. It addresses the problems that existing approaches cannot be applied to surveillance-video scenes, express the semantic information of a video poorly, and lose activity information.

Embodiment Construction

[0042] In order to make the objectives, technical solutions and advantages of the present invention clearer, the present invention will be further described in detail below with reference to the accompanying drawings.

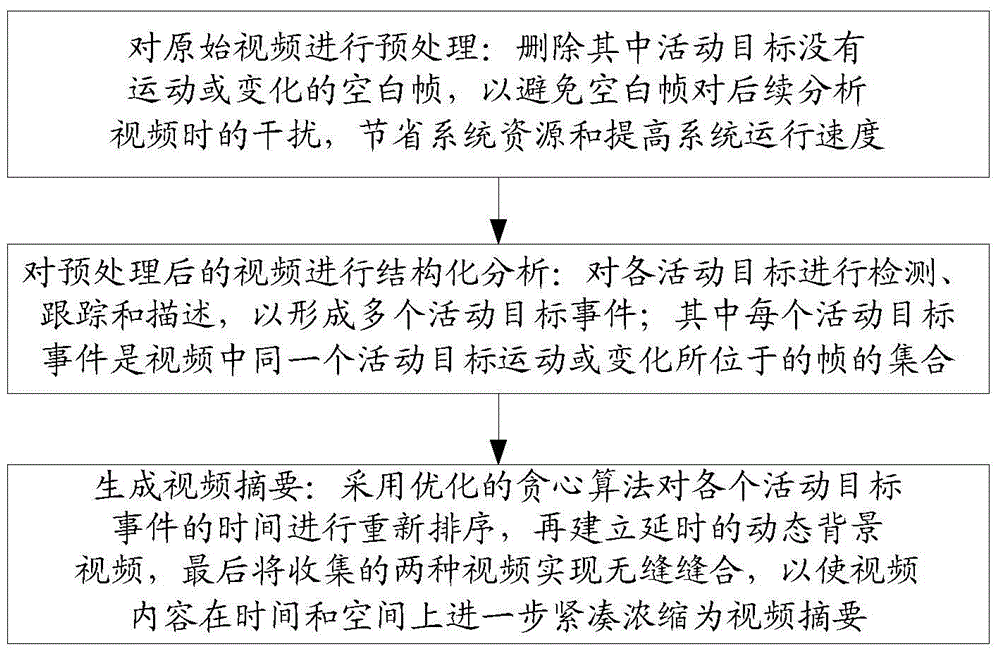

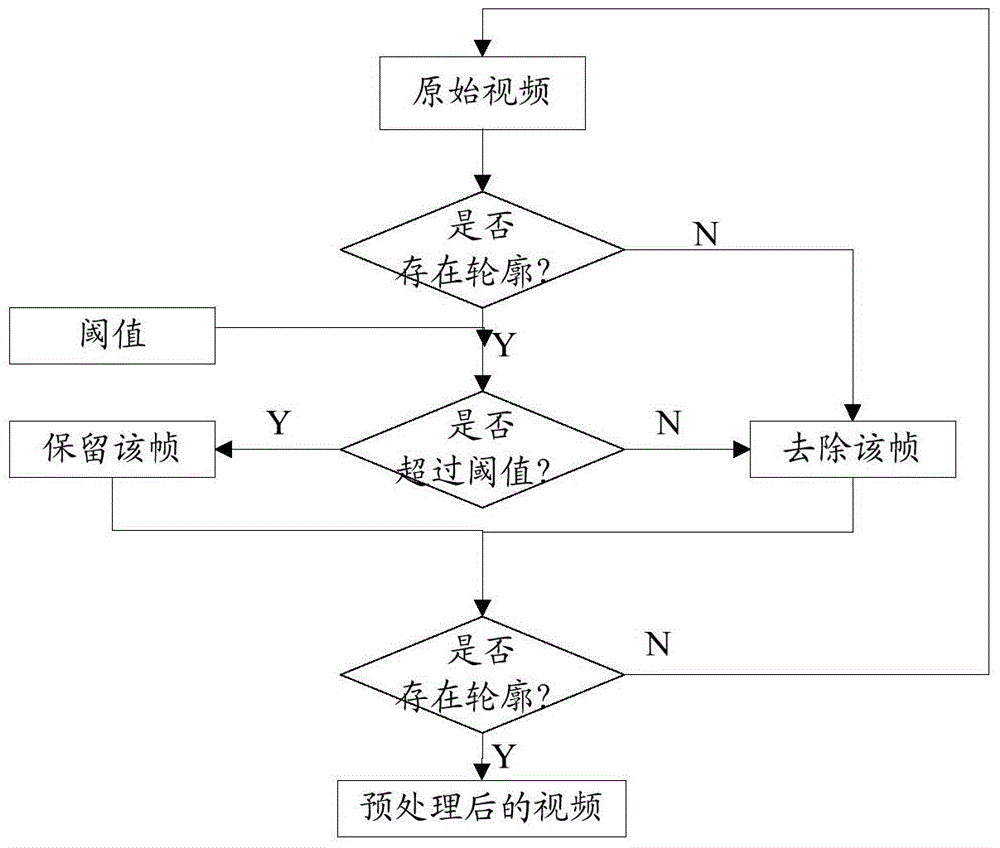

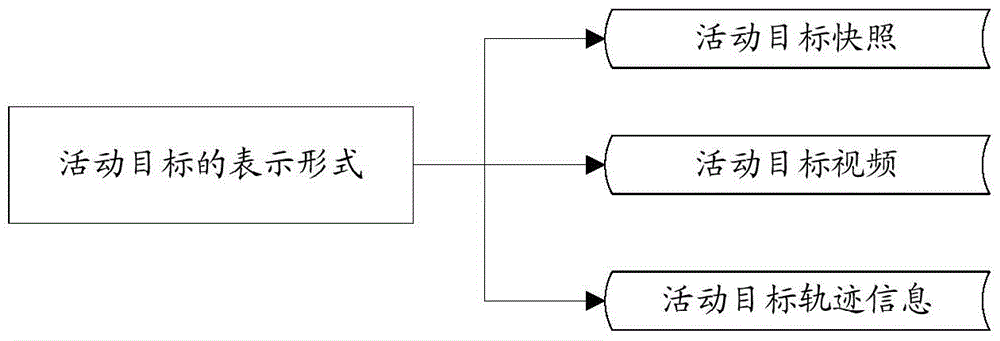

[0043] The video summary generation method of the present invention, based on the spatio-temporal recombination of activity events, proceeds as follows. First, the original video is preprocessed to remove blank frames. The preprocessed video is then analyzed structurally: taking the moving targets in the original video as the objects, the event videos of all key activity targets are extracted, the temporal correlation among these activity-target events is weakened, and the events are rearranged in time according to the principle that their activity ranges must not conflict. At the same time, background images are extracted in a manner suited to the user's visual experience, and a time-lapse dynamic background video is generated. Finally, these activity-target events and the time-lapse dynamic background video are seamle...
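The temporal rearrangement step described in [0043] can be illustrated with a minimal sketch. The excerpt does not specify how the non-conflict principle is enforced, so everything below is an assumption made for illustration: each event is reduced to a single bounding box covering its activity range, and a greedy rule places each event at the earliest summary frame where it does not coincide in time with an already placed event whose range overlaps in space. The names `Event`, `scopes_overlap`, and `recombine` are hypothetical and do not come from the patent.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple

# Assumed representation of an activity range: (x_min, y_min, x_max, y_max)
Box = Tuple[int, int, int, int]


@dataclass
class Event:
    name: str
    start: int   # first frame of the event in the original video
    length: int  # duration of the event in frames
    scope: Box   # bounding box covering the target's whole activity range


def scopes_overlap(a: Box, b: Box) -> bool:
    """Return True if two axis-aligned boxes share any area."""
    return a[0] < b[2] and b[0] < a[2] and a[1] < b[3] and b[1] < a[3]


def recombine(events: List[Event]) -> Dict[str, int]:
    """Greedy placement (an assumed strategy, not the patent's own).

    Events are visited in their original order. Each one is given the
    earliest start frame in the summary at which it does not overlap in
    time with any already placed event whose activity range overlaps
    its own in space.
    """
    placed: List[Tuple[Event, int]] = []
    schedule: Dict[str, int] = {}
    for ev in sorted(events, key=lambda e: e.start):
        new_start = 0
        moved = True
        while moved:  # repeat until no placed event forces a further shift
            moved = False
            for other, other_start in placed:
                if not scopes_overlap(ev.scope, other.scope):
                    continue
                other_end = other_start + other.length
                if new_start < other_end and other_start < new_start + ev.length:
                    new_start = other_end  # push past the conflicting event
                    moved = True
        placed.append((ev, new_start))
        schedule[ev.name] = new_start
    return schedule


if __name__ == "__main__":
    events = [
        Event("A", start=0, length=100, scope=(0, 0, 50, 50)),
        Event("B", start=500, length=80, scope=(100, 100, 200, 200)),
        Event("C", start=900, length=60, scope=(40, 40, 120, 120)),
    ]
    print(recombine(events))
```

In this example, events A and B occupy disjoint regions and can therefore play simultaneously from the first summary frame, while C overlaps both in space and is delayed until A has finished. A real implementation would likely compare per-frame target positions rather than a single box per event, which would allow tighter packing.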
