Water flow velocity monitoring method based on moving target identification in videos

A moving-target-based speed monitoring technology, applied in the fields of fluid velocity measurement, velocity/acceleration/impact measurement, measurement devices, etc. It solves the problems of ship-based measurement methods: low safety, high risk, and high cost.

Embodiment Construction

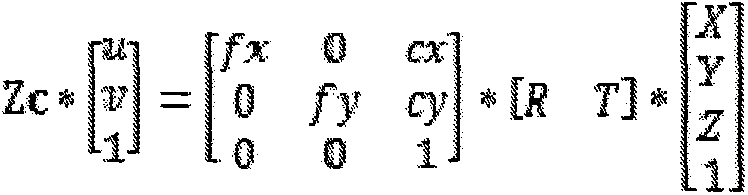

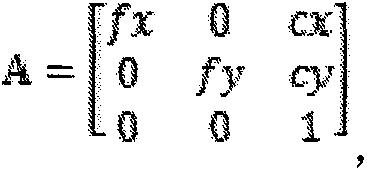

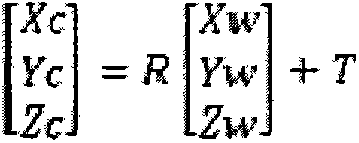

[0029] The present invention is described in detail below in conjunction with examples. This technology uses video monitoring to record the flow in a river section as a real-time image sequence, automatically detects and tracks the buoys on the water surface in that sequence, and uses the principles of solid geometry and photogrammetry to establish a conversion model between image coordinates and actual water-surface coordinates. From this model, the time the buoys take to move through the field of view and the distance they travel are obtained, and the flood flow velocity is calculated from them. Floating objects are placed in the river as buoys, the camera's field of view is selected, and the camera is fixed in place for recording. The implementation of the present invention mainly comprises the following aspects: video-based moving target detection and tracking, camera calibration, and solving for the water velocity from the parameters obtained in the previous steps.
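The description above does not give its detection, calibration, or velocity formulas, so the following is only a minimal pure-Python sketch of the three stages under stated assumptions. The buoy is located by simple background subtraction against a reference frame (a simplification; a real water surface flickers and needs a more robust detector). The image-to-water-surface conversion is modelled as a planar homography fitted from four surveyed control points, which is one common photogrammetric choice and not necessarily the model the patent uses. Velocity is taken as the tracked path length divided by the elapsed time at a known frame rate. All function names (`detect_centroid`, `fit_homography`, `pixel_to_world`, `surface_velocity`) are hypothetical.

```python
from math import hypot


def solve_linear(a, b):
    """Solve the square system a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        s = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - s) / m[r][r]
    return x


def fit_homography(pixel_pts, world_pts):
    """Assumed calibration model: 3x3 image-to-water-plane homography (h33 = 1)
    fitted from four pixel/world control-point pairs."""
    a, b = [], []
    for (u, v), (x, y) in zip(pixel_pts, world_pts):
        a.append([u, v, 1, 0, 0, 0, -u * x, -v * x])
        b.append(x)
        a.append([0, 0, 0, u, v, 1, -u * y, -v * y])
        b.append(y)
    h = solve_linear(a, b)
    return [h[0:3], h[3:6], h[6:8] + [1.0]]


def pixel_to_world(h, u, v):
    """Map an image point (u, v) to water-surface coordinates (x, y) in metres."""
    w = h[2][0] * u + h[2][1] * v + h[2][2]
    return ((h[0][0] * u + h[0][1] * v + h[0][2]) / w,
            (h[1][0] * u + h[1][1] * v + h[1][2]) / w)


def detect_centroid(background, frame, threshold=30):
    """Hypothetical detector: centroid (u, v) of pixels differing from the
    background by more than `threshold` gray levels, or None if none do."""
    pts = [(u, v)
           for v, (r0, r1) in enumerate(zip(background, frame))
           for u, (p0, p1) in enumerate(zip(r0, r1))
           if abs(p1 - p0) > threshold]
    if not pts:
        return None
    return (sum(p[0] for p in pts) / len(pts), sum(p[1] for p in pts) / len(pts))


def surface_velocity(frames, background, h, fps):
    """Track one buoy through the frames and return path length / elapsed time (m/s)."""
    track = []  # (frame index, world x, world y) for every frame with a detection
    for i, frame in enumerate(frames):
        c = detect_centroid(background, frame)
        if c is not None:
            track.append((i, *pixel_to_world(h, *c)))
    if len(track) < 2:
        raise ValueError("buoy detected in fewer than two frames")
    dist = sum(hypot(x1 - x0, y1 - y0)
               for (_, x0, y0), (_, x1, y1) in zip(track, track[1:]))
    elapsed = (track[-1][0] - track[0][0]) / fps
    return dist / elapsed


if __name__ == "__main__":
    # Synthetic example: 20x10 px view covering 2 m x 1 m; one bright buoy pixel
    # drifts 3 px (0.3 m) per frame at 10 frames per second.
    bg = [[0] * 20 for _ in range(10)]
    frames = []
    for i in range(5):
        f = [row[:] for row in bg]
        f[5][2 + 3 * i] = 255
        frames.append(f)
    H = fit_homography([(0, 0), (20, 0), (20, 10), (0, 10)],
                       [(0, 0), (2, 0), (2, 1), (0, 1)])
    print(f"surface velocity: {surface_velocity(frames, bg, H, fps=10):.2f} m/s")
```

In the synthetic run the buoy covers 12 px (1.2 m) over 4 frame intervals (0.4 s), so the sketch reports about 3 m/s. Fitting a homography needs at least four control points on the water plane with no three collinear; the patent's own calibration, based on solid geometry and photogrammetry, may instead use camera height, tilt, and focal length.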

[0030] 1. V...
