GPU (Graphics Processing Unit)-based HEVC (High Efficiency Video Coding) parallel decoding method

A decoding method with parallel algorithms, applied to GPU-based HEVC parallel decoding, that improves decoding speed and decoding efficiency.

Examples

Embodiment 1

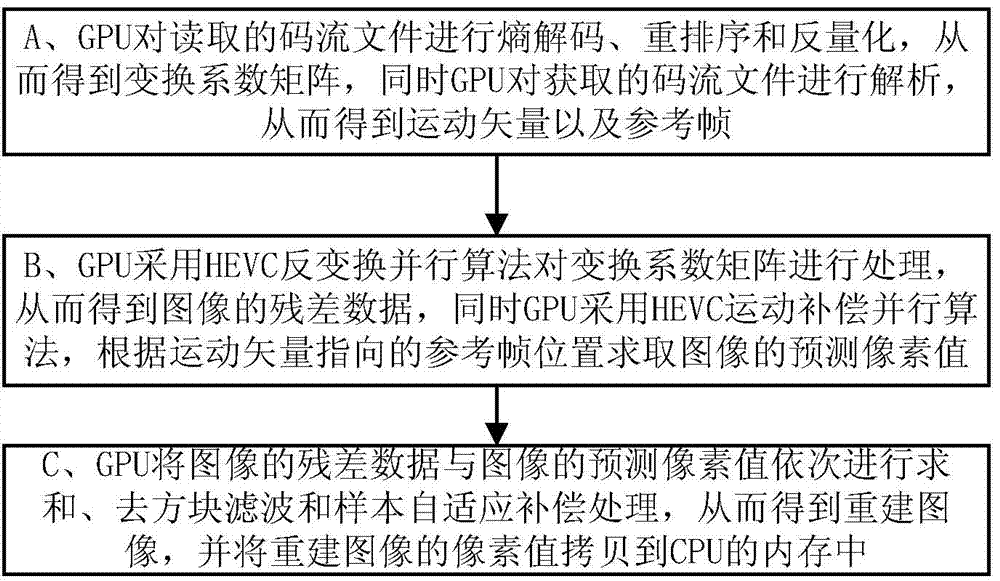

[0064] The HEVC decoding framework is shown in Figure 5. The HEVC decoding process is the reverse of the encoding process. The decoder reads the code stream file and obtains the bit stream from the NAL (Network Abstraction Layer). Decoding proceeds frame by frame. Each frame is divided into several largest coding units (LCUs); in raster-scan order, entropy decoding is performed with the LCU as the basic unit, and the result is reordered to obtain the residual coefficients of the corresponding coding unit. The residual coefficients are then dequantized and inverse transformed to obtain the image residual data. At the same time, the decoder generates a prediction block based on the header information decoded from the code stream: in inter-frame prediction mode, it generates the prediction block from the motion vector and the reference frame; in intra-frame prediction mode, it generates the prediction block from the ...
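The raster-scan traversal of LCUs described above can be sketched as follows. This is a minimal illustration, not the patent's implementation: the function name `lcu_raster_order` and the default LCU size of 64 are assumptions chosen for the example, and partial LCUs at the right and bottom frame edges are simply cropped to the frame.

```python
def lcu_raster_order(frame_width, frame_height, lcu_size=64):
    """Return (x, y, width, height) for each LCU in raster-scan order.

    Hypothetical helper: lcu_size=64 is an assumed default, and edge
    LCUs are cropped so they never extend past the frame boundary.
    """
    lcus = []
    for y in range(0, frame_height, lcu_size):       # top to bottom
        for x in range(0, frame_width, lcu_size):    # left to right within a row
            w = min(lcu_size, frame_width - x)
            h = min(lcu_size, frame_height - y)
            lcus.append((x, y, w, h))
    return lcus


if __name__ == "__main__":
    order = lcu_raster_order(1920, 1080)
    print(len(order), order[0], order[-1])
```

For a 1920x1080 frame this yields 30 LCUs per row and 17 rows, with the bottom row cropped to 56 lines.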

Embodiment 2

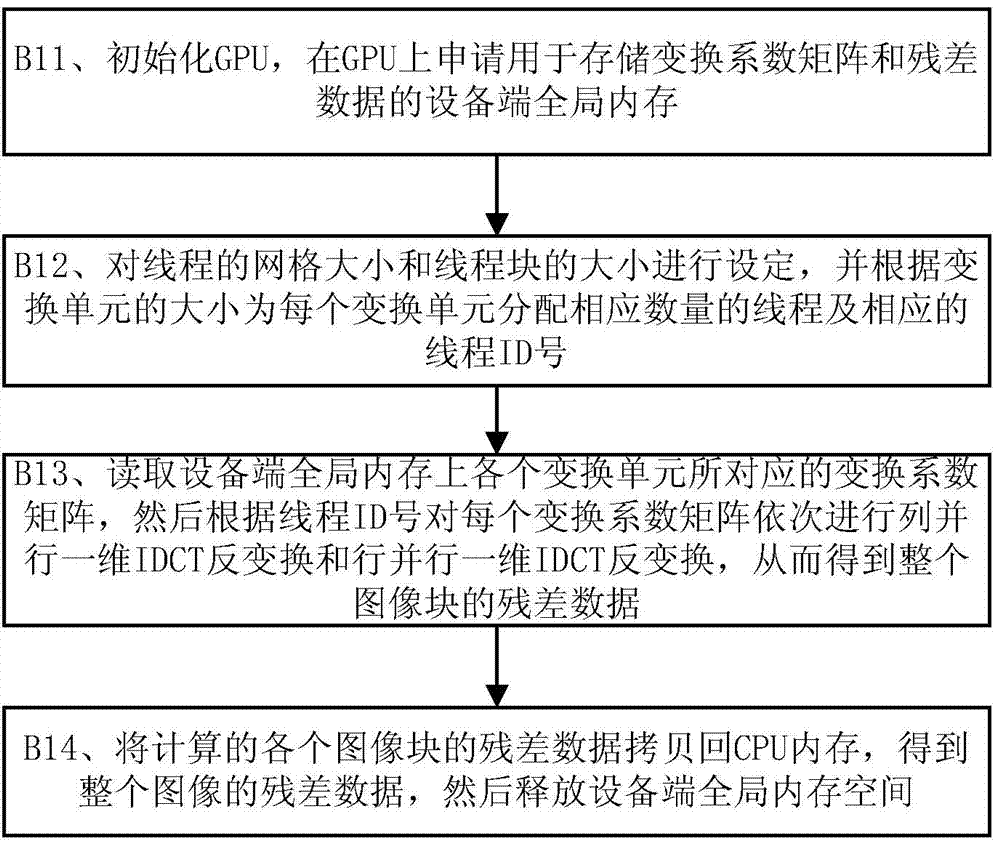

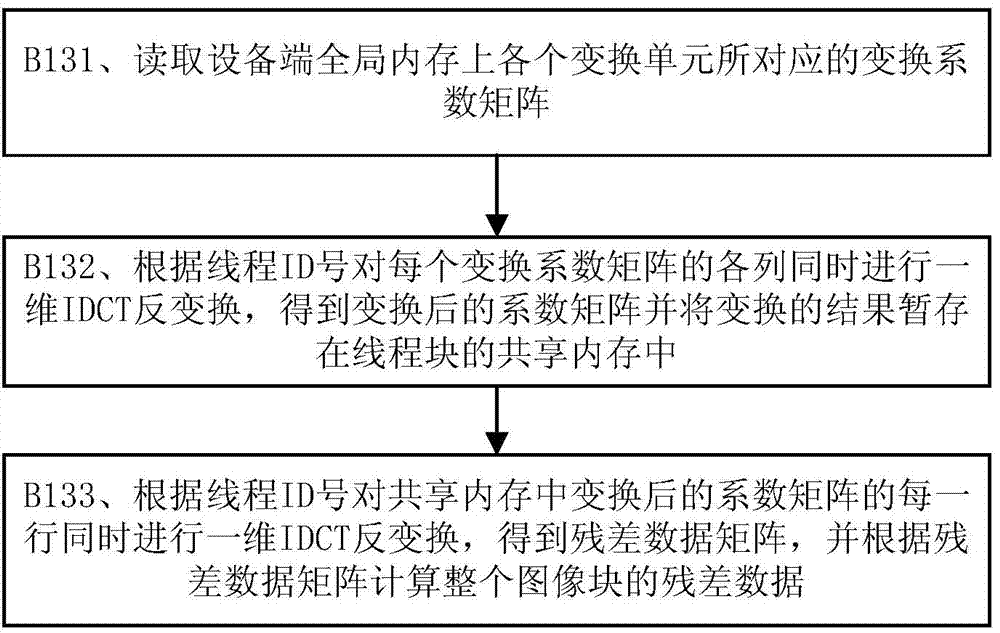

[0068] This embodiment describes the parallel HEVC inverse transform algorithm of the present invention.

[0069] The inverse transform module converts the transform coefficient matrix of the current block into a residual sample matrix in preparation for subsequent reconstruction. The inverse transform is performed after inverse quantization, also uses the transform unit (TU) as its basic processing unit, and takes the inverse quantization result as its source data. When the HEVC decoder of the present invention performs the two-dimensional IDCT (inverse discrete cosine transform), it first performs a one-dimensional IDCT in the horizontal direction and then a one-dimensional IDCT in the vertical direction; through these matrix multiplications, the transform coefficient matrix is converted into a residual data matrix of the same size, thereby completing t...
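The horizontal-then-vertical decomposition can be illustrated with a small sketch. It makes several assumptions not taken from the patent: it uses a floating-point orthonormal DCT basis rather than HEVC's integer transform matrices, it omits the intermediate shifts and clipping, and the names `idct_1d` and `idct_2d` are hypothetical.

```python
import math


def idct_1d(coeffs):
    """Inverse of the orthonormal DCT-II for one row or column.

    Assumption: floating-point basis, not HEVC's integer matrices.
    """
    n = len(coeffs)
    out = []
    for i in range(n):
        s = 0.0
        for k in range(n):
            scale = math.sqrt(1.0 / n) if k == 0 else math.sqrt(2.0 / n)
            s += scale * coeffs[k] * math.cos(math.pi * (2 * i + 1) * k / (2 * n))
        out.append(s)
    return out


def idct_2d(block):
    """Separable 2D IDCT: horizontal pass first, then vertical pass."""
    rows = [idct_1d(row) for row in block]              # horizontal: each row independently
    cols = [idct_1d(list(col)) for col in zip(*rows)]   # vertical: each column independently
    return [list(r) for r in zip(*cols)]                # transpose back to row-major order


if __name__ == "__main__":
    dc_only = [[16.0, 0, 0, 0], [0, 0, 0, 0], [0, 0, 0, 0], [0, 0, 0, 0]]
    for row in idct_2d(dc_only):
        print([round(v, 6) for v in row])
```

Within each pass, every row (or column) is computed independently of the others, which is the property a parallel implementation can exploit; how the patent assigns this work to GPU threads is not shown in this excerpt.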

Embodiment 3

[0079] This embodiment describes the parallel HEVC motion compensation algorithm of the present invention.

[0080] Put simply, inter-frame motion compensation works as follows: the motion vector parsed from the code stream points to a position on the reference frame, and the predicted value is obtained from that position. If the position is an integer-pixel position, the reference frame sample is read directly. If it is a sub-pixel position, the sub-pixel predicted value must be obtained through pixel interpolation. The predicted value is then added to the image residual value obtained through inverse quantization and inverse transformation to give the image reconstruction value. In the motion compensation module, pixel interpolation and filtering account for about 70% of the computational load. Therefore, the implementation of motion compensation on the GPU in the present invention is mainly to perform pix...
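The integer-pixel versus sub-pixel distinction, followed by reconstruction, can be sketched in one dimension. The following is an illustrative simplification under stated assumptions, not the patent's GPU kernel: the tap values are the 8-tap luma interpolation coefficients from the HEVC standard (this excerpt does not list them), samples are assumed to be 8-bit, reference positions outside the row are clamped to the border, and only a single horizontal pass is shown, whereas a full decoder also filters vertically at higher intermediate precision. The names `LUMA_FILTERS`, `predict_row`, and `reconstruct_row` are hypothetical.

```python
# Quarter-sample luma filter taps, indexed by fractional offset (0..3).
# Entry 0 is the pass-through case: an integer position is read directly.
LUMA_FILTERS = {
    0: (0, 0, 0, 64, 0, 0, 0, 0),
    1: (-1, 4, -10, 58, 17, -5, 1, 0),
    2: (-1, 4, -11, 40, 40, -11, 4, -1),
    3: (0, 1, -5, 17, 58, -10, 4, -1),
}


def clip8(value):
    """Clamp to the 8-bit sample range (assumed bit depth)."""
    return max(0, min(255, value))


def predict_row(ref_row, x_int, frac, width):
    """Predict `width` samples starting at integer offset x_int plus frac/4."""
    taps = LUMA_FILTERS[frac]
    last = len(ref_row) - 1
    pred = []
    for i in range(width):
        acc = 0
        for t, coeff in enumerate(taps):
            pos = min(max(x_int + i + t - 3, 0), last)  # clamp at row borders
            acc += coeff * ref_row[pos]
        pred.append(clip8((acc + 32) >> 6))             # round and divide by 64
    return pred


def reconstruct_row(pred, residual):
    """Reconstruction value = prediction + residual, clipped to 8 bits."""
    return [clip8(p + r) for p, r in zip(pred, residual)]


if __name__ == "__main__":
    ref = [10, 20, 30, 40, 50, 60, 70, 80, 90, 100]
    pred = predict_row(ref, x_int=3, frac=2, width=4)
    print(pred, reconstruct_row(pred, [1, -1, 2, -2]))
```

Each output sample depends only on a fixed window of reference samples, so the samples of a block can be interpolated independently of one another.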
