483 results about how to "Improve decoding speed": patented technology
Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

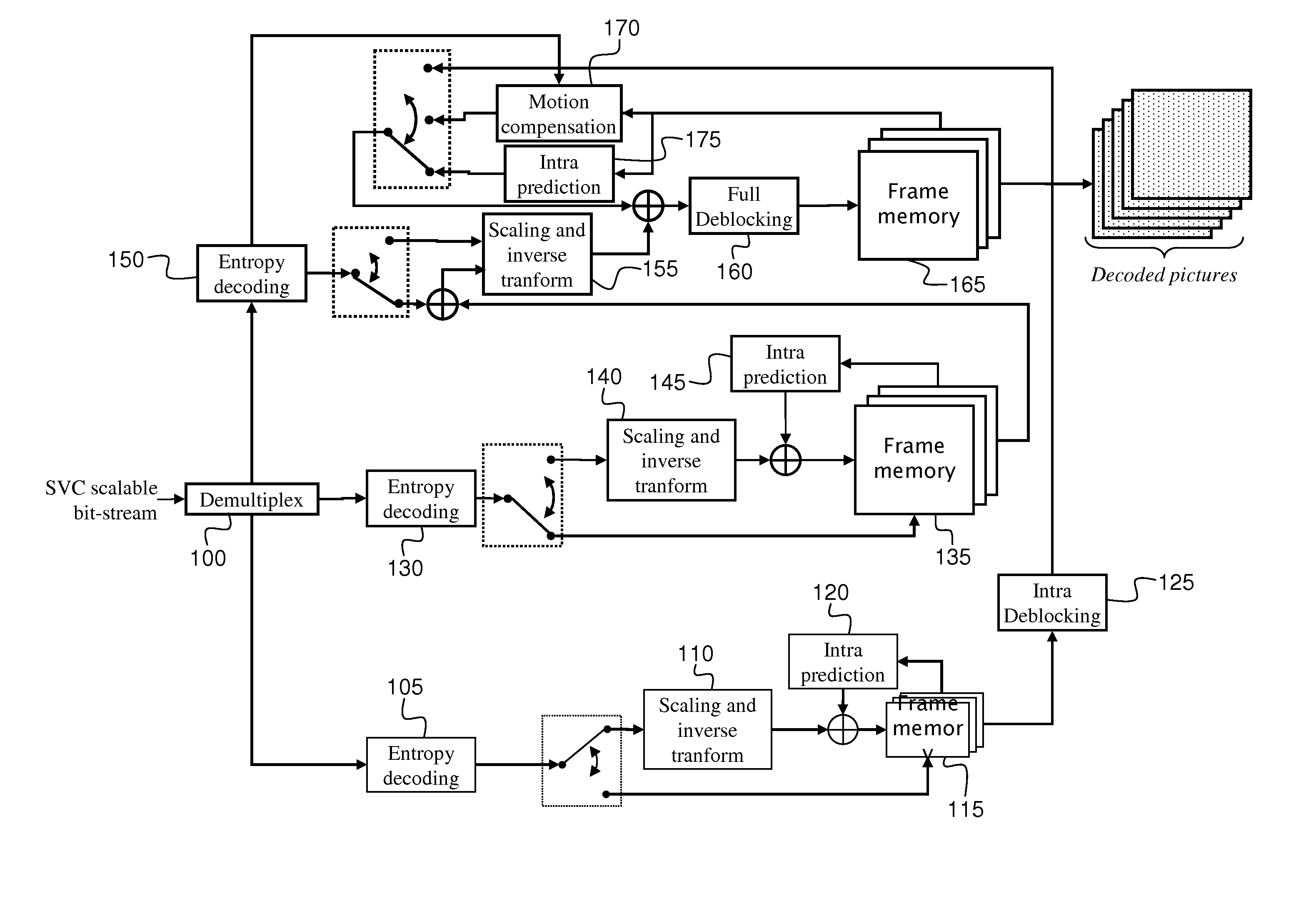

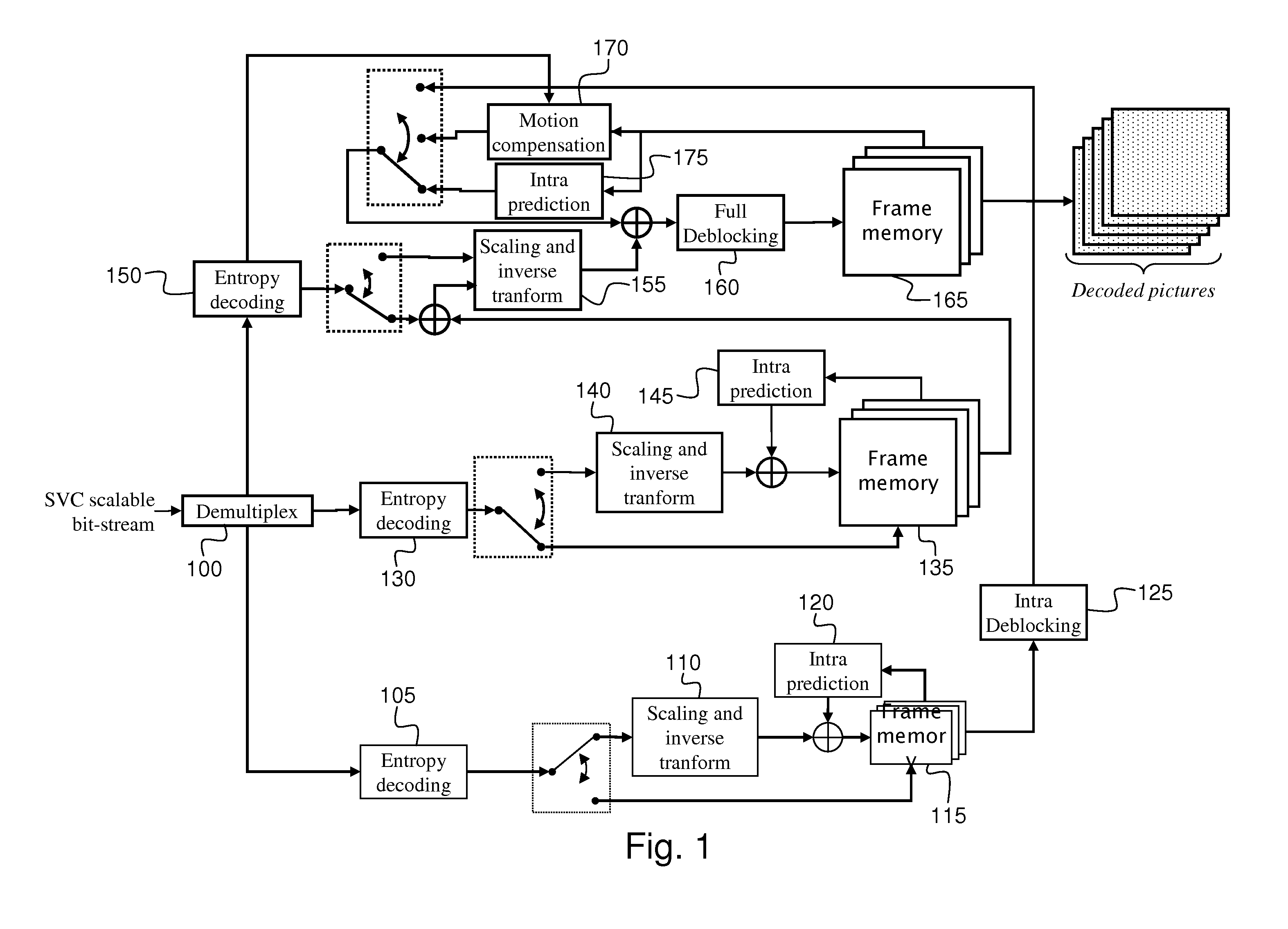

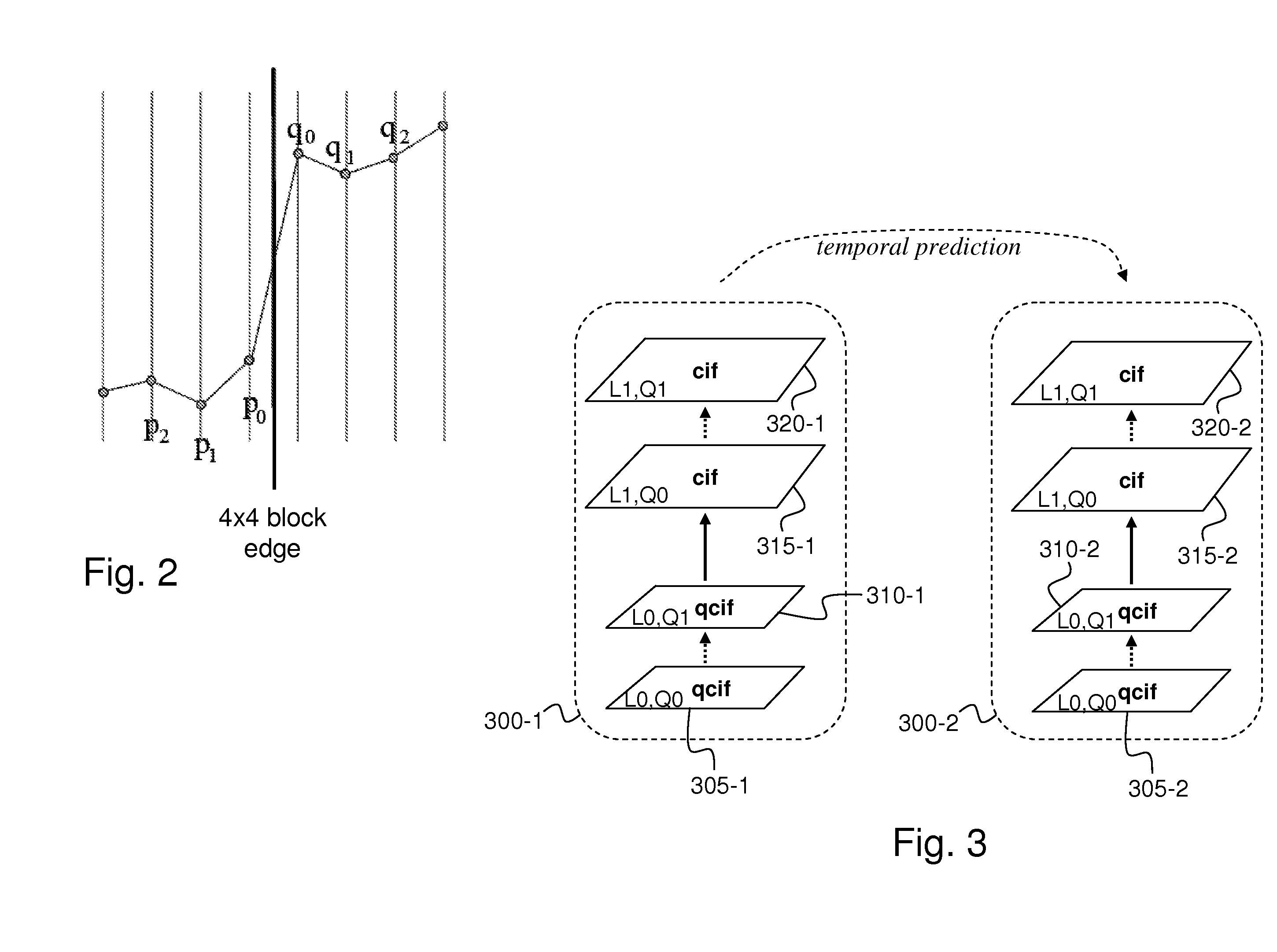

Method and device for deblocking filtering of scalable bitstream during decoding

Inactive | US20100316139A1 | Improve decoding speed; Simple steps; Color television with pulse code modulation; Color television with bandwidth reduction; Deblocking filter; Data element

A method and device for deblocking filtering of a scalable bitstream during decoding are disclosed. The bitstream encodes a picture in a base layer and at least one enhancement layer and comprises at least one access unit representing the picture; each access unit comprises a plurality of data elements, each belonging to one of the layers. According to the invention, decoding at least part of the picture comprises receiving (600) at least one data element of the access unit and, if that data element belongs to an access unit of a predetermined type, decoding (715, 820) its data and applying (720, 825) a full deblocking filter to at least part of the decoded data, the full deblocking filter being applied to all of the…

Owner: CANON KK

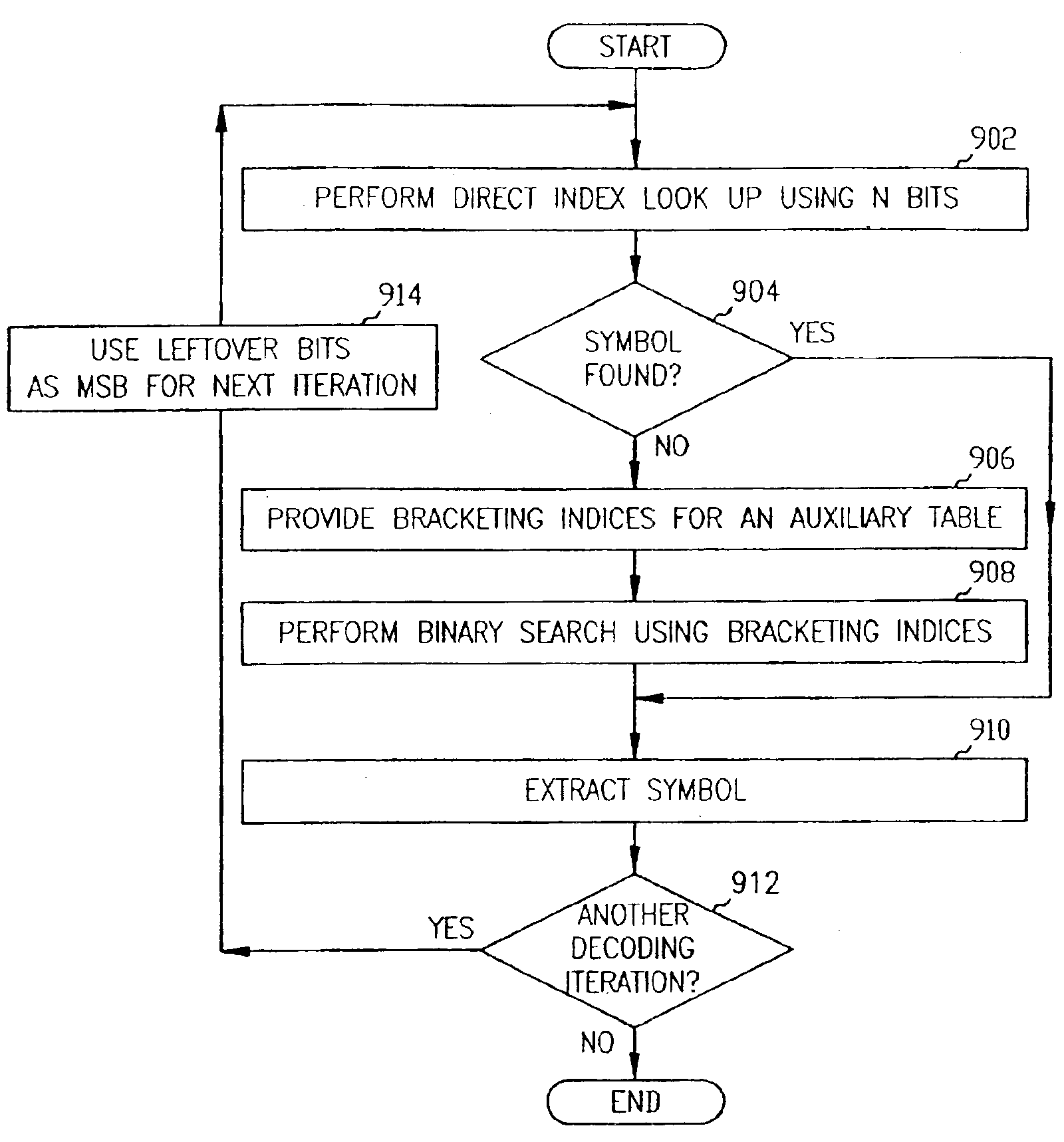

System and method for compressing data

Inactive | US6839624B1 | Improve decoding speed; Save memory space; Navigational calculation instruments; Position fixation; Theoretical computer science; Subject matter

Systems, devices and methods are provided to compress data, and in particular to code and decode data. One aspect of the present subject matter is a data structure. The data structure includes a field representing a decoding structure for decoding canonical Huffman encoded data, and a field representing a symbol table. The decoding structure includes a field representing an accelerator table that provides a 2^N-deep direct-index lookup, returning symbols directly for high-frequency codes and bracketing indices for low-frequency codes. The decoding structure also includes a field for a binary search table that provides a low-frequency symbol index using a binary search bounded by the bracketing indices from the accelerator table. The symbol table is adapted to provide the symbol associated with the low-frequency index.

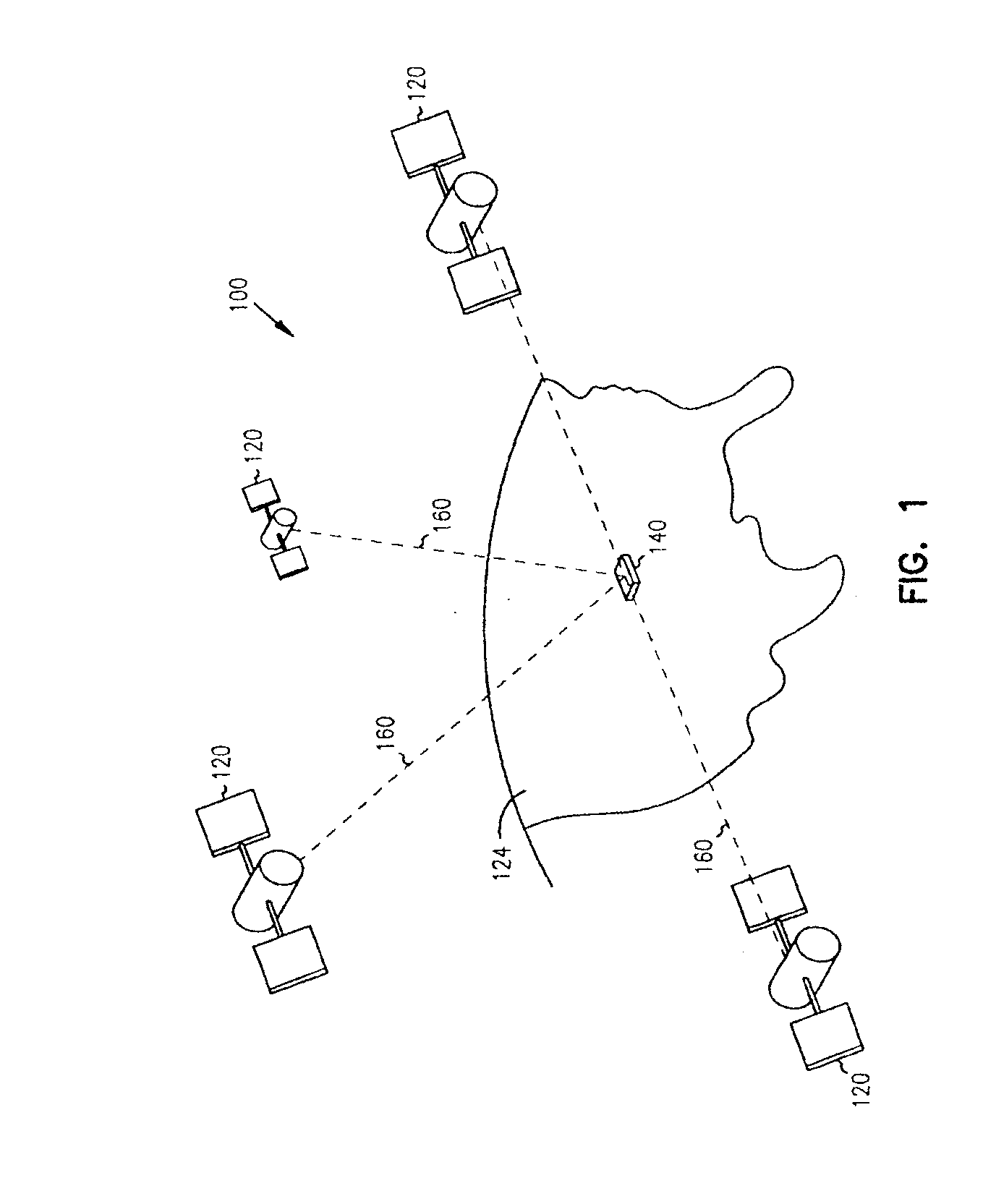

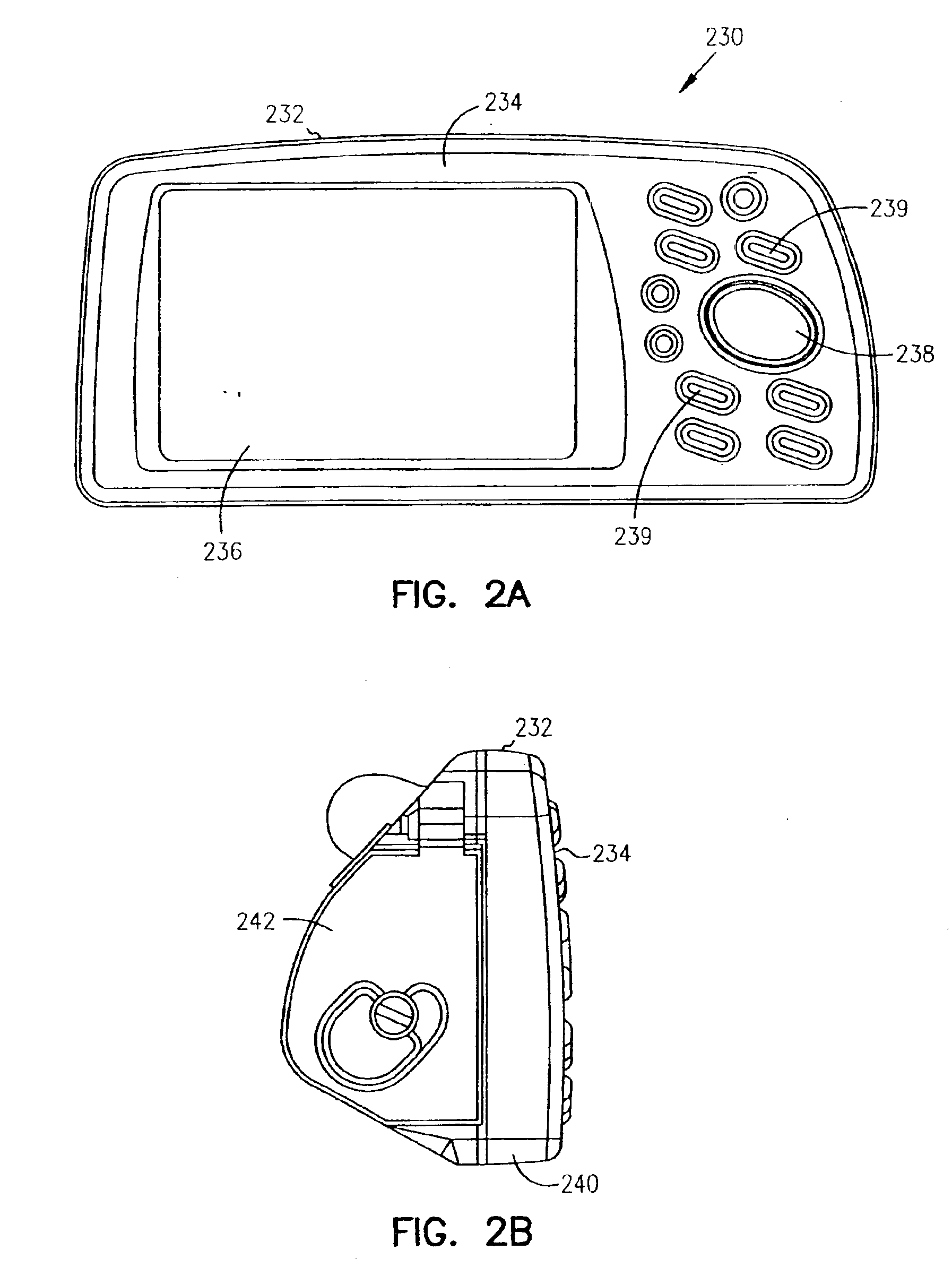

Owner: GARMIN
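
The accelerator-plus-binary-search structure described above can be sketched in Python. This is a minimal sketch under assumptions, not Garmin's implementation: canonical codes are built from a hypothetical table of code lengths, the bitstream is a string of `'0'`/`'1'` characters, and the code is assumed complete (true of any Huffman code with two or more symbols). Codes of at most N bits resolve with one table lookup; longer codes receive a bracketing index range that bounds a binary search over the left-justified long codes.

```python
from bisect import bisect_left, bisect_right


def canonical_codes(lengths):
    """Assign canonical Huffman codes; `lengths` maps symbol -> code length in bits."""
    items = sorted((length, sym) for sym, length in lengths.items())
    code, prev_len, table = 0, items[0][0], []
    for length, sym in items:
        code <<= length - prev_len        # append zeros whenever the code length grows
        table.append((code, length, sym))
        code += 1
        prev_len = length
    return table


def build_decoder(lengths, n):
    """Build the 2**n-entry accelerator table and the sorted table of long codes."""
    table = canonical_codes(lengths)
    max_len = max(length for _, length, _ in table)   # assumes n <= max_len
    # Long (low-frequency) codes, left-justified to max_len bits; canonical order keeps them sorted.
    long_codes = [(code << (max_len - length), length, sym)
                  for code, length, sym in table if length > n]
    long_keys = [key for key, _, _ in long_codes]
    accel = []
    for prefix in range(1 << n):
        for code, length, sym in table:
            if length <= n and prefix >> (n - length) == code:
                accel.append(("sym", sym, length))      # high-frequency symbol: direct hit
                break
        else:                                           # no short code matches this prefix
            lo = bisect_left(long_keys, prefix << (max_len - n))
            hi = bisect_left(long_keys, (prefix + 1) << (max_len - n))
            accel.append(("range", lo, hi))             # bracketing indices for the search
    return accel, long_codes, long_keys, max_len


def decode(bits, count, lengths, n=2):
    """Decode `count` symbols from a string of '0'/'1' characters."""
    accel, long_codes, long_keys, max_len = build_decoder(lengths, n)
    padded = bits + "0" * max_len                       # lets us peek past the final code
    pos, out = 0, []
    for _ in range(count):
        entry = accel[int(padded[pos:pos + n], 2)]
        if entry[0] == "sym":
            _, sym, length = entry
        else:
            _, lo, hi = entry
            window = int(padded[pos:pos + max_len], 2)
            # Binary search bounded by the bracket: greatest left-justified code <= window.
            _, length, sym = long_codes[bisect_right(long_keys, window, lo, hi) - 1]
        out.append(sym)
        pos += length
    return out
```

For example, with lengths `{"a": 1, "b": 2, "c": 3, "d": 3}` and N = 2, the bitstring `010110111` decodes to `a`, `b`, `c`, `d`. The table costs 2^N entries, so N trades memory against the share of symbols that skip the search.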

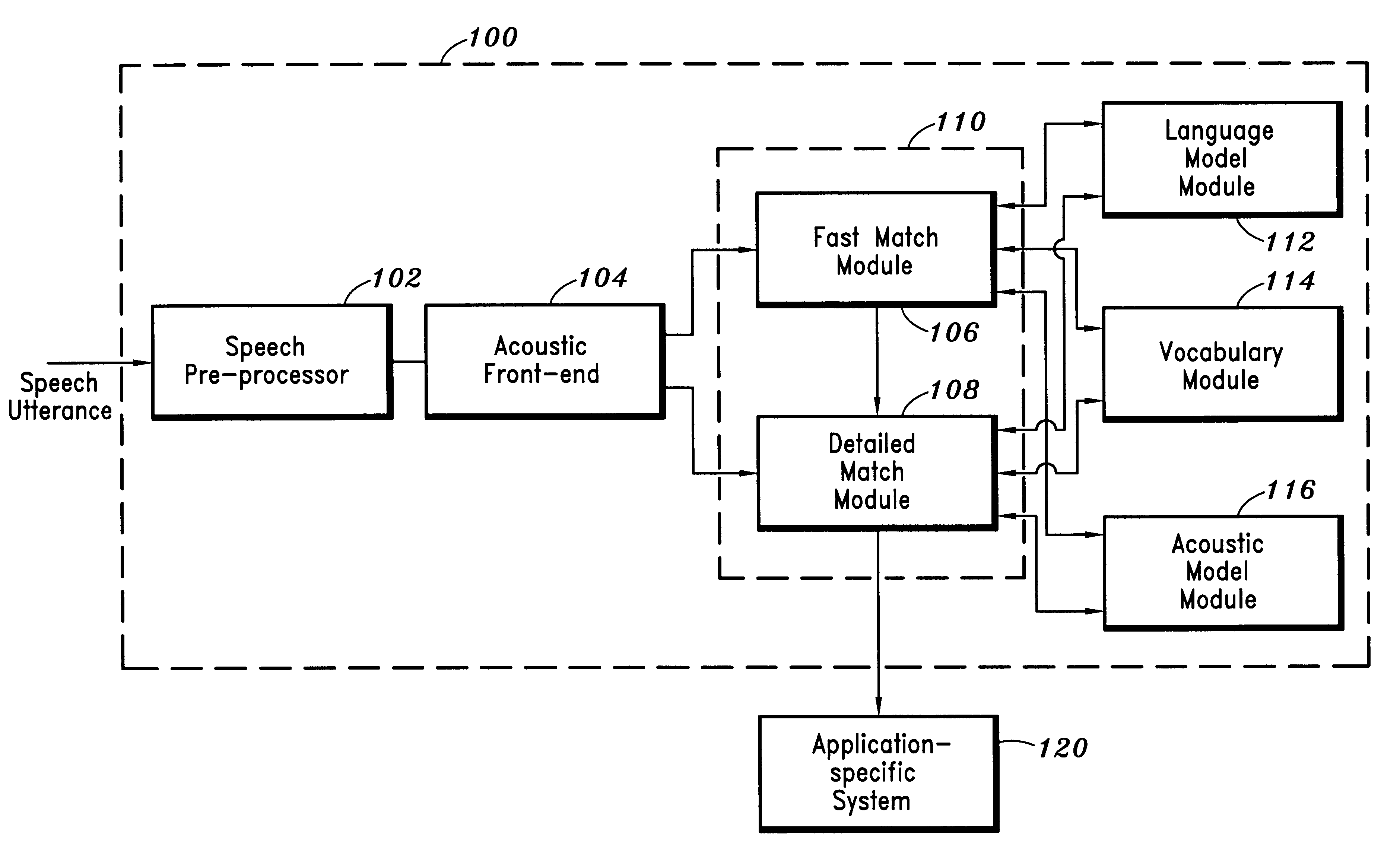

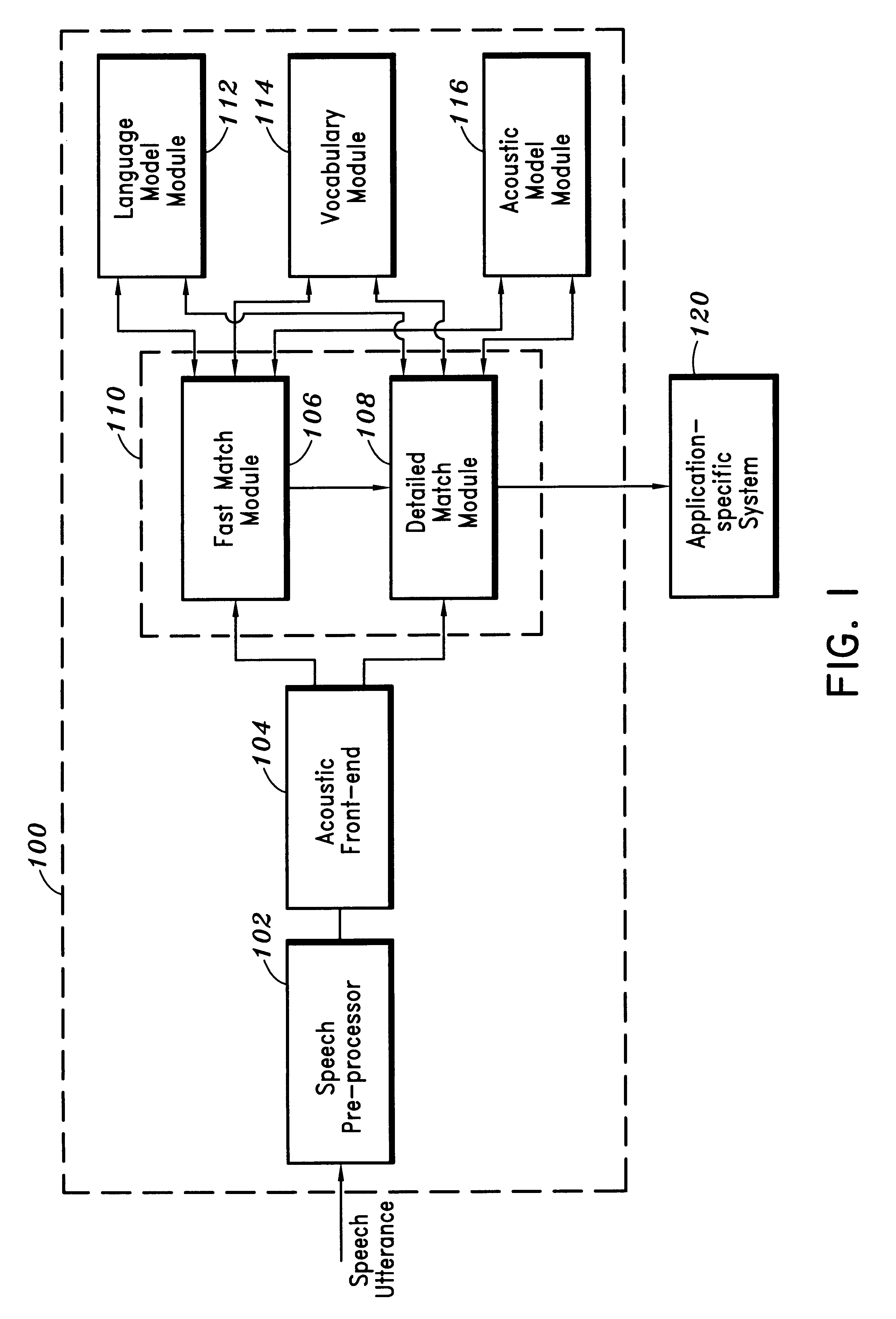

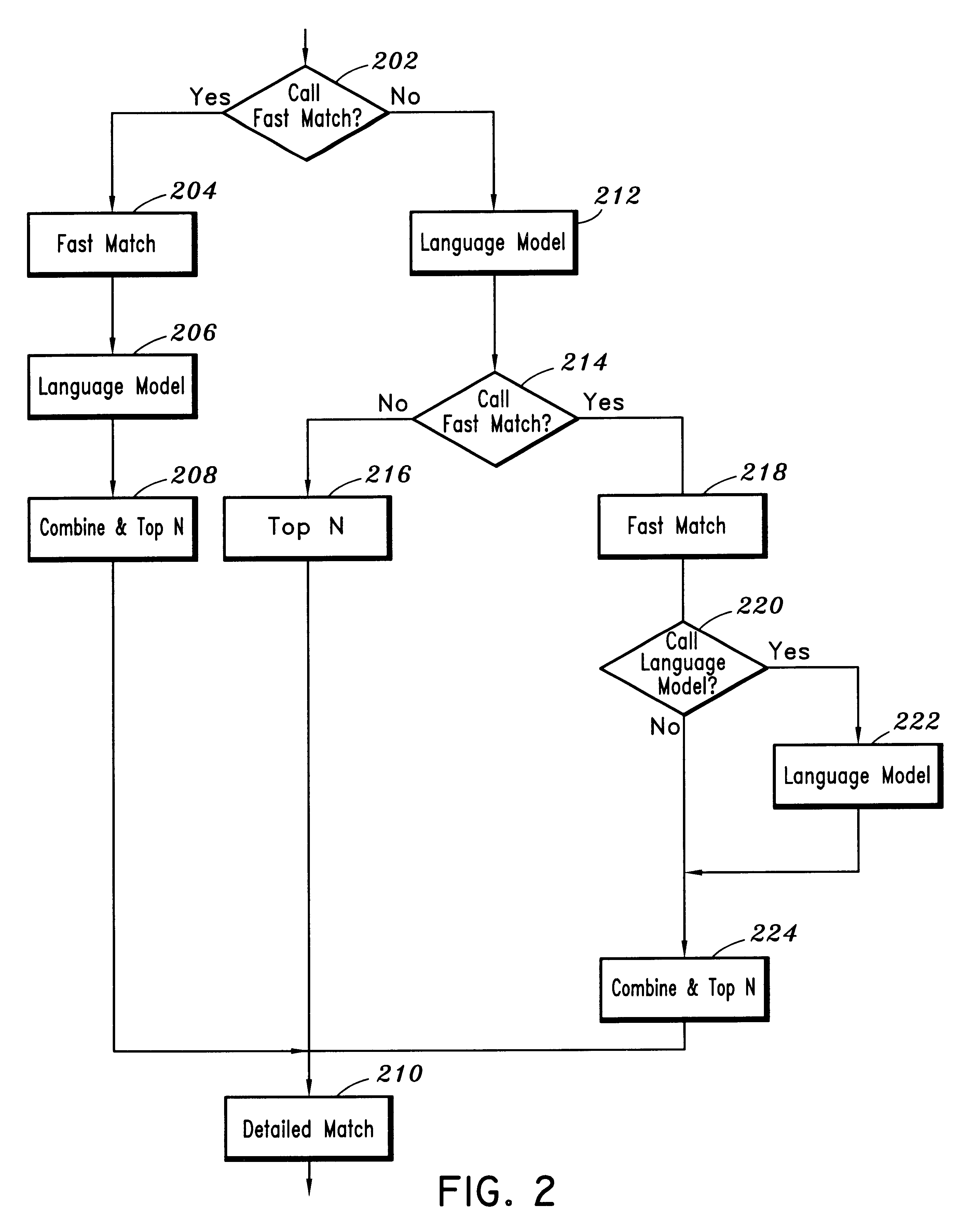

Method for reducing search complexity in a speech recognition system

Inactive | US6178401B1 | Improve decoding speed; Reduce in quantity; Speech recognition; Speech identification; Speech sound

A method is provided for reducing search complexity in a speech recognition system having a fast match, a detailed match, and a language model. Based on at least one predetermined variable, the fast match is optionally employed to generate candidate words and acoustic scores corresponding to the candidate words. The language model is employed to generate language model scores. The acoustic scores are combined with the language model scores and the combined scores are ranked to determine top ranking candidate words to be later processed by the detailed match, when the fast match is employed. The detailed match is employed to generate detailed match scores for the top ranking candidate words.

Owner: NUANCE COMM INC
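
The candidate-pruning step can be sketched as follows, assuming log-domain scores (higher is better) stored in dictionaries keyed by word, a language-model weight of 1.0, and a hypothetical caller-supplied `detailed_match` scoring function. The `use_fast_match` flag stands in for the abstract's "predetermined variable"; when it is off, the detailed match scores every word the language model knows.

```python
import heapq


def fast_match_candidates(acoustic, lm, lm_weight=1.0, top_k=3):
    """Combine fast-match acoustic scores with LM scores and keep the top_k words."""
    combined = {w: acoustic[w] + lm_weight * lm.get(w, float("-inf")) for w in acoustic}
    return heapq.nlargest(top_k, combined, key=combined.get)


def recognize(acoustic, lm, detailed_match, use_fast_match=True, top_k=3):
    """Run the expensive detailed match only on the surviving candidates."""
    if use_fast_match:
        candidates = fast_match_candidates(acoustic, lm, top_k=top_k)
    else:
        candidates = list(lm)                 # no pruning: score the whole vocabulary
    scores = {w: detailed_match(w) for w in candidates}
    return max(scores, key=scores.get)
```

The saving comes from `top_k` being much smaller than the vocabulary, so the detailed match runs only a handful of times per word position.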

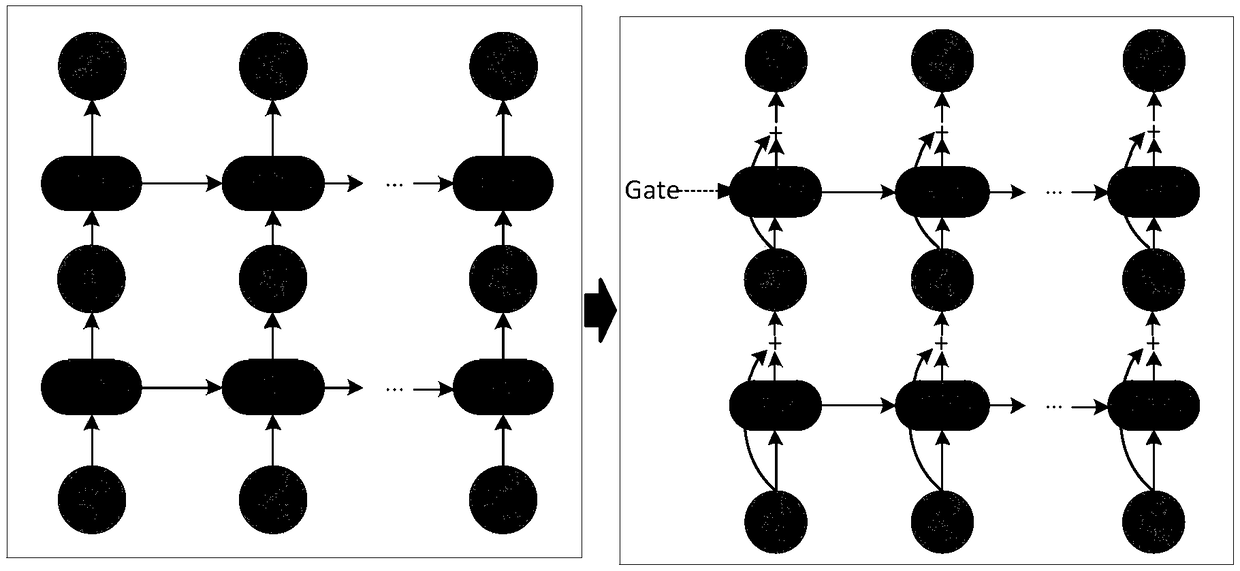

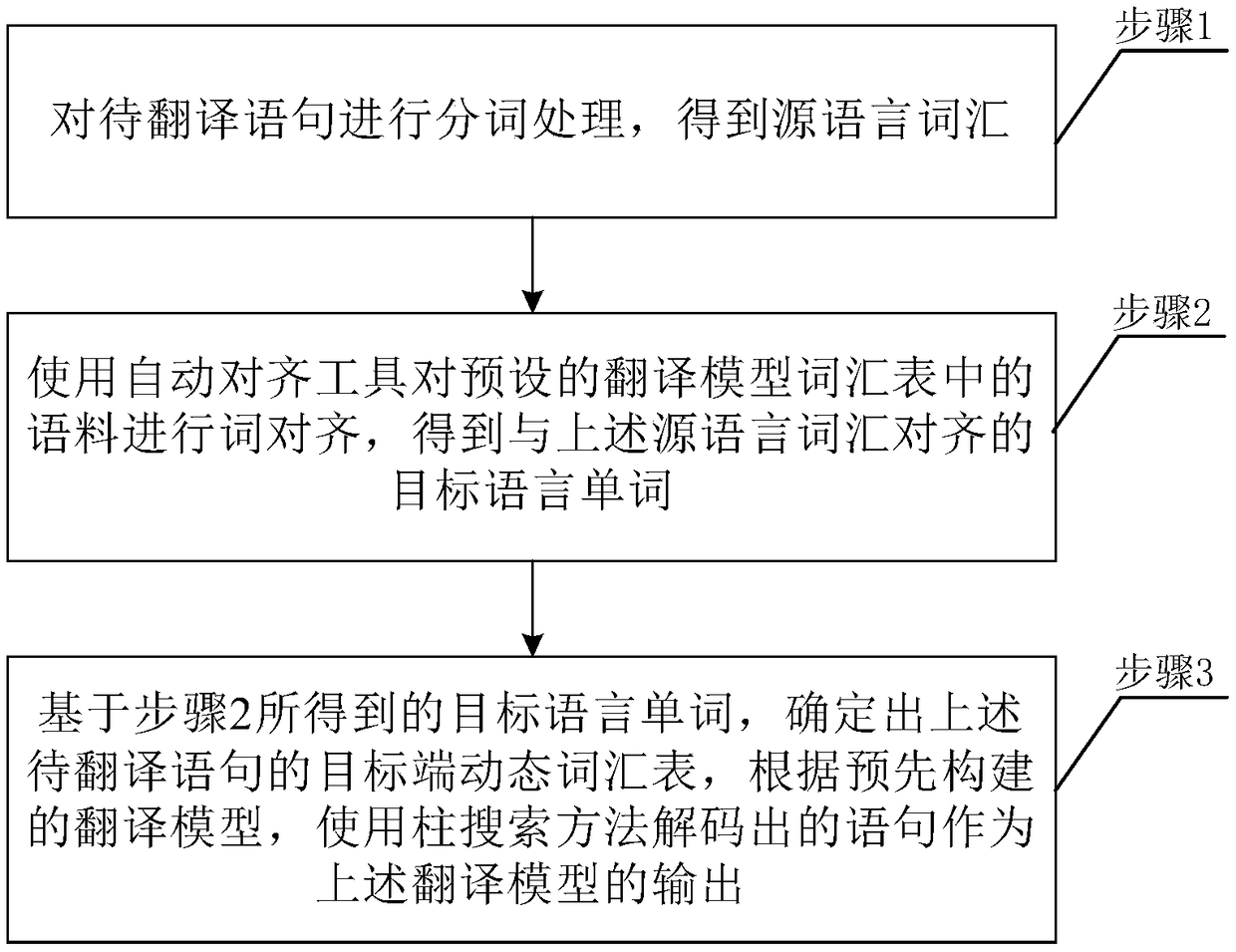

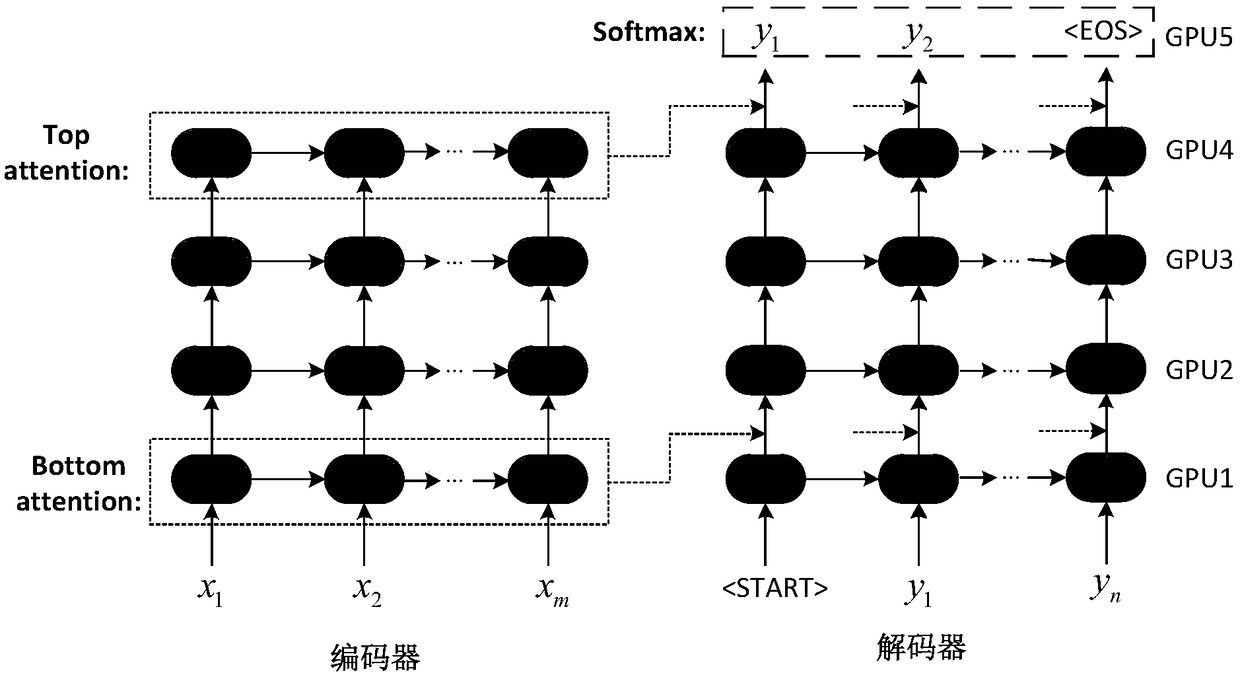

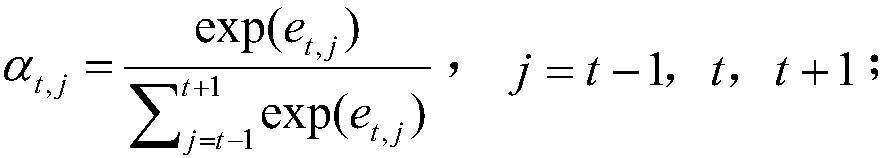

Decoding method based on deep neural network translation model

Active | CN108647214A | Solve complexity; Solve difficulty; Natural language translation; Neural architectures; Decoding methods; Model translation

The invention relates to the field of language processing and provides a decoding method based on a deep neural network translation model. It addresses the high training complexity, training difficulty, and slow decoding speed of machine translation models. The method comprises the following steps: step one, performing word segmentation on the sentence to be translated to obtain the source-language vocabulary; step two, performing word alignment on the corpus of a preset translation-model glossary with an automatic alignment tool to obtain the target-language words aligned to the source-language vocabulary; and step three, determining a target-side dynamic glossary for the sentence to be translated from the target-language words obtained in step two, and, using the pre-constructed translation model, taking the sentence decoded by beam search as the output of the translation model. The translation model is a deep neural network based on a threshold residual mechanism and a parallel attention mechanism. The disclosed decoding method improves both translation quality and decoding speed.

Owner: INST OF AUTOMATION CHINESE ACAD OF SCI +1
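
Step three's target-side dynamic glossary can be sketched as a set union over an alignment table. The `alignment_table` contents, the always-included special tokens, and the per-step score filter are illustrative assumptions; the patent's neural model and its beam search are not reproduced here.

```python
def dynamic_vocabulary(source_tokens, alignment_table, always=("<s>", "</s>", "<unk>")):
    """Collect the target words aligned to any source token, plus special tokens."""
    vocab = set(always)
    for token in source_tokens:
        vocab.update(alignment_table.get(token, ()))
    return vocab


def restrict_scores(scores, vocab):
    """Keep only the candidate target words inside the dynamic vocabulary at one decoding step."""
    return {w: s for w, s in scores.items() if w in vocab}
```

Restricting each decoding step to this per-sentence vocabulary shrinks the output-layer work, since only words in the set need to be scored.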

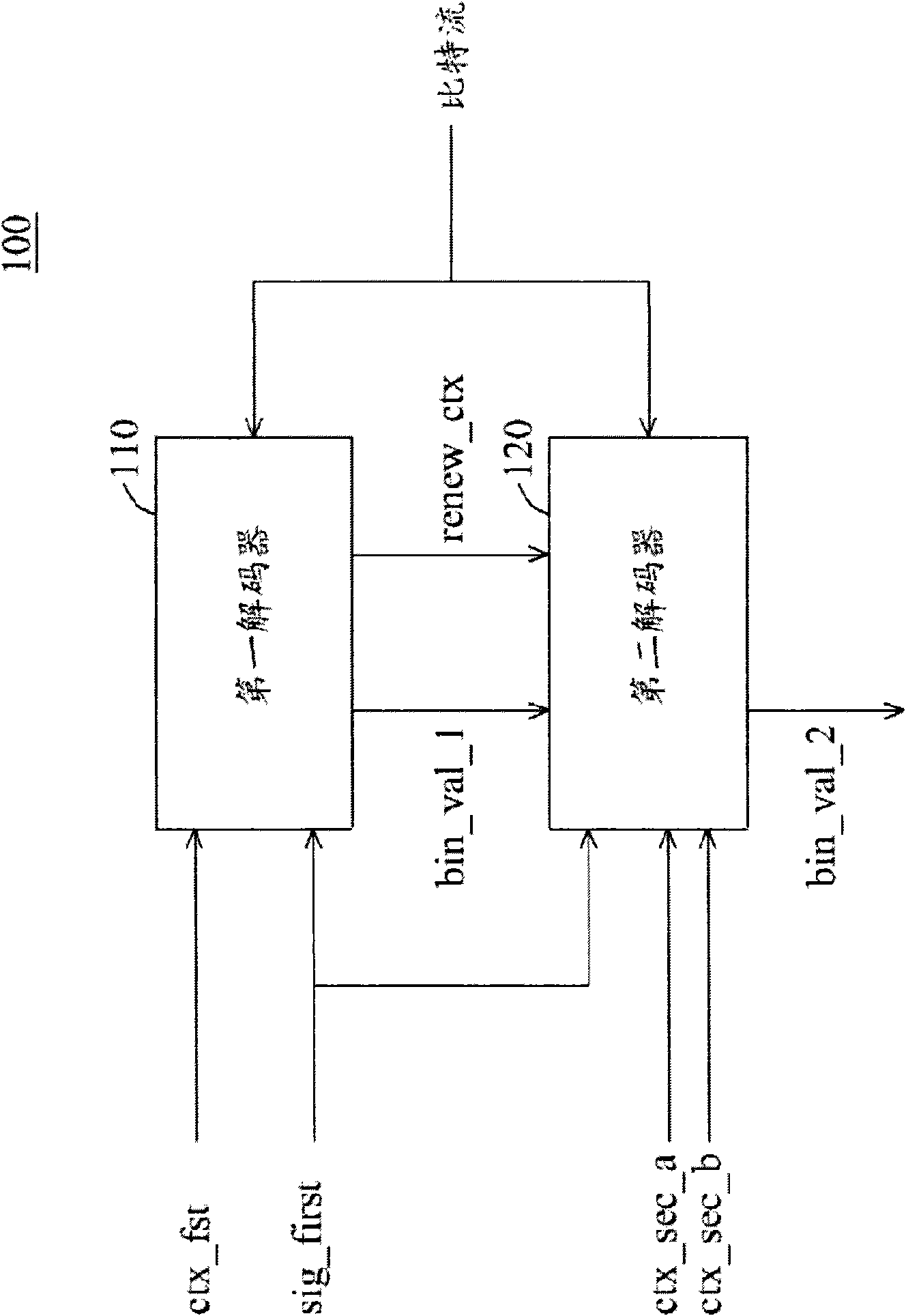

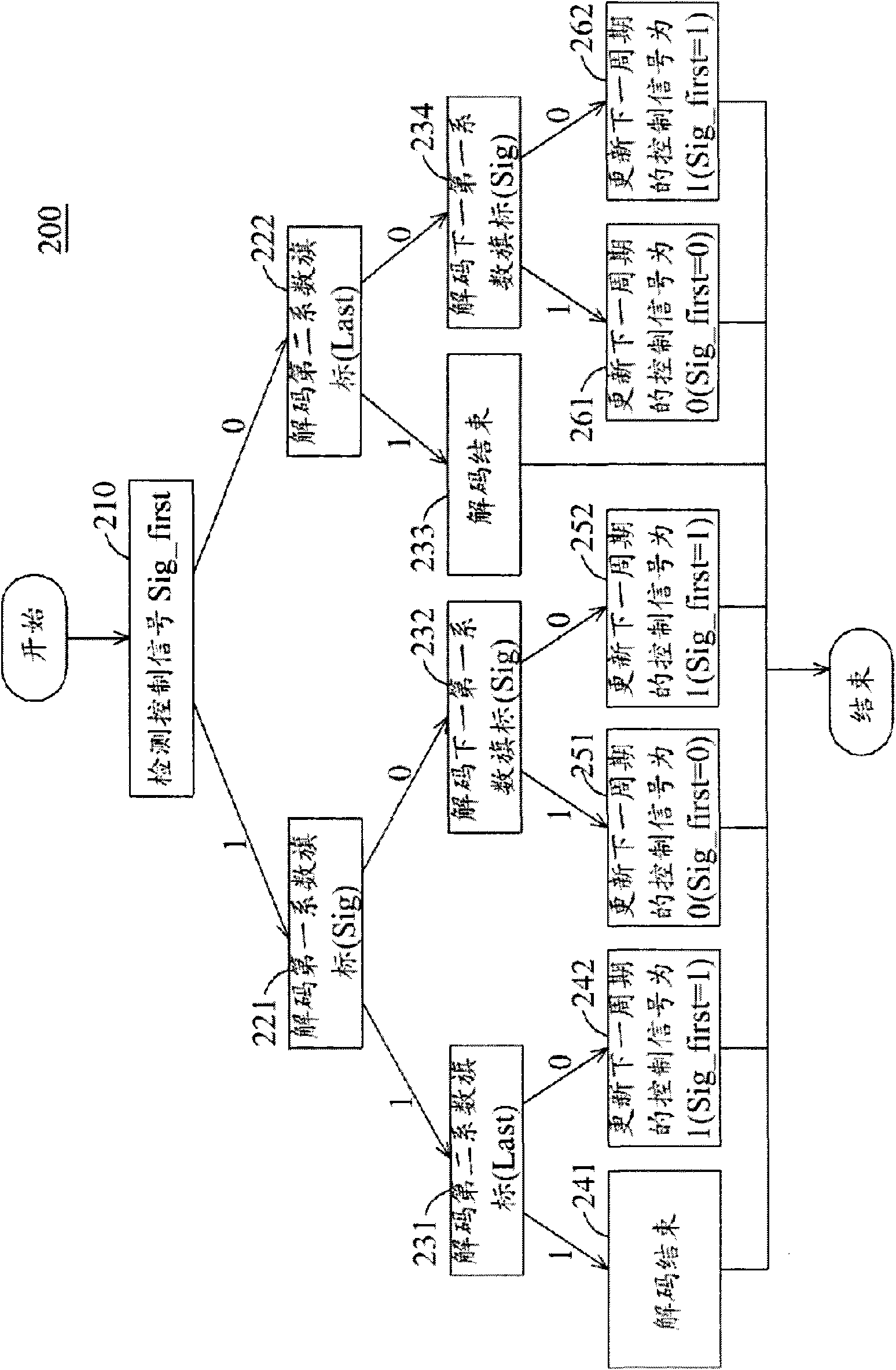

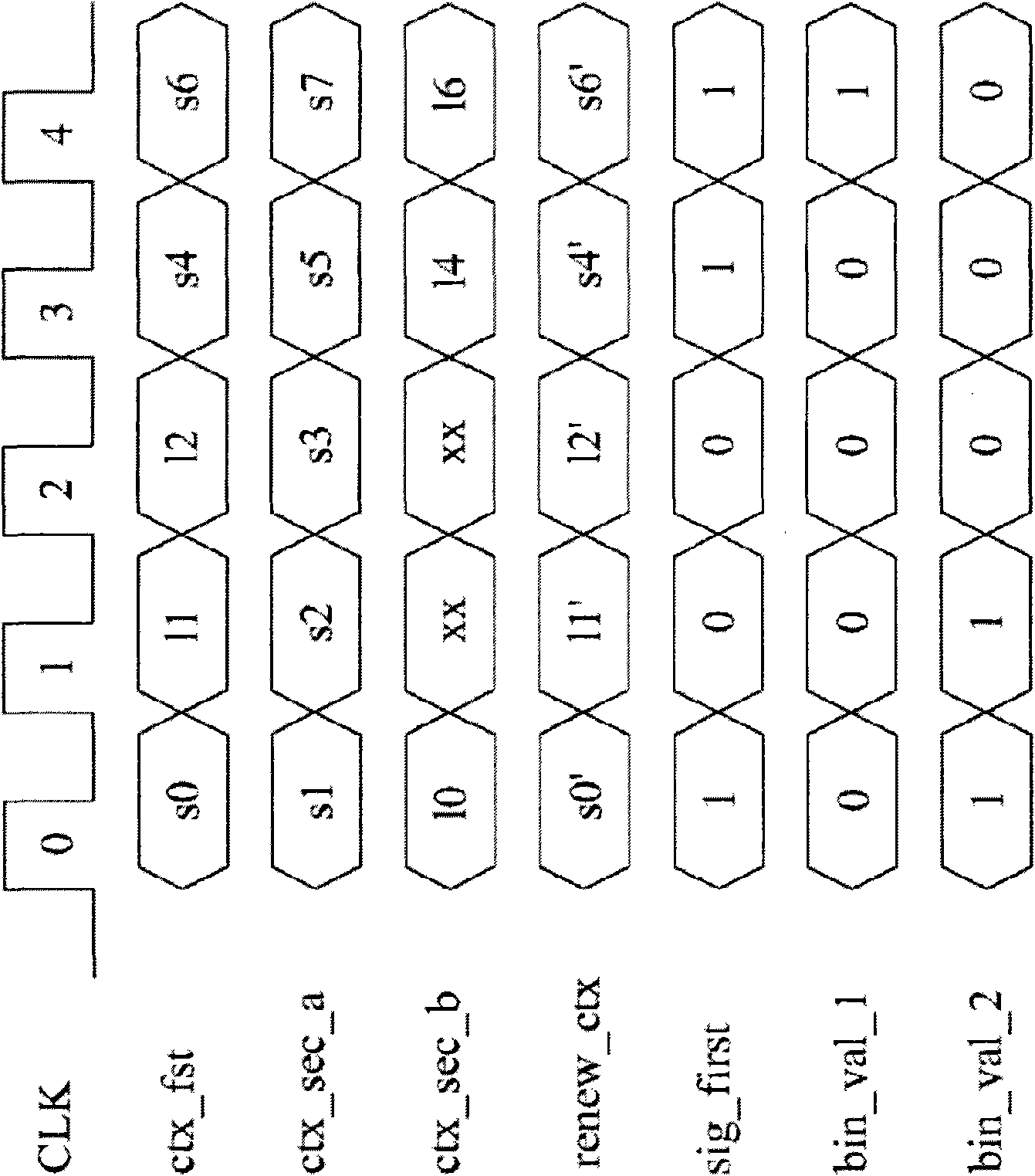

CABAC decoding unit and method

Active | CN101600104A | Improve decoding efficiency; Improve decoding speed; Code conversion; Television systems; Computer architecture; Control signal

A context-based adaptive binary arithmetic coding (CABAC) method. The CABAC method for a bitstream comprises: detecting a control signal (Sig_first); decoding a first bin representing one of a first coefficient flag (Sig) and a second coefficient flag (Last) from the bitstream according to the control signal (Sig_first); decoding a second bin representing one of the second coefficient flag (Last) and a next first coefficient flag (Sig) from the bitstream according to the decoded first bin and the control signal (Sig_first); and updating the control signal (Sig_first) according to the decoded first and second bins. The first and second bins are decoded in one clock cycle, the first coefficient flag (Sig) indicates whether a corresponding coefficient value is zero, and the second coefficient flag (Last) indicates whether a coefficient map decoding process is finished.

Owner: MEDIATEK INC
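
To show what the Sig and Last flags mean, here is a sketch of how already-decoded bins map onto an H.264-style significance map. It assumes the arithmetic decoding itself (contexts, range updates) has already produced the bins and that the block is known to contain at least one non-zero coefficient; the patent's two-bins-per-cycle hardware and its `Sig_first` control signal are not modeled. The sketch makes the dependency visible: whether the next bin is a Sig or a Last flag depends on the value of the previous bin.

```python
def parse_significance_map(bins, num_coeff=16):
    """Interpret already-decoded CABAC bins as Sig/Last flags.

    Returns a list marking which coefficient positions are non-zero.
    """
    significant = [False] * num_coeff
    stream = iter(bins)
    for i in range(num_coeff - 1):
        if next(stream):              # Sig == 1: coefficient i is non-zero ...
            significant[i] = True
            if next(stream):          # ... so the next bin is Last; 1 ends the map
                return significant
        # Sig == 0: the next bin is the Sig flag of position i + 1
    # No Last flag was set: the final position is inferred to be significant.
    significant[num_coeff - 1] = True
    return significant
```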

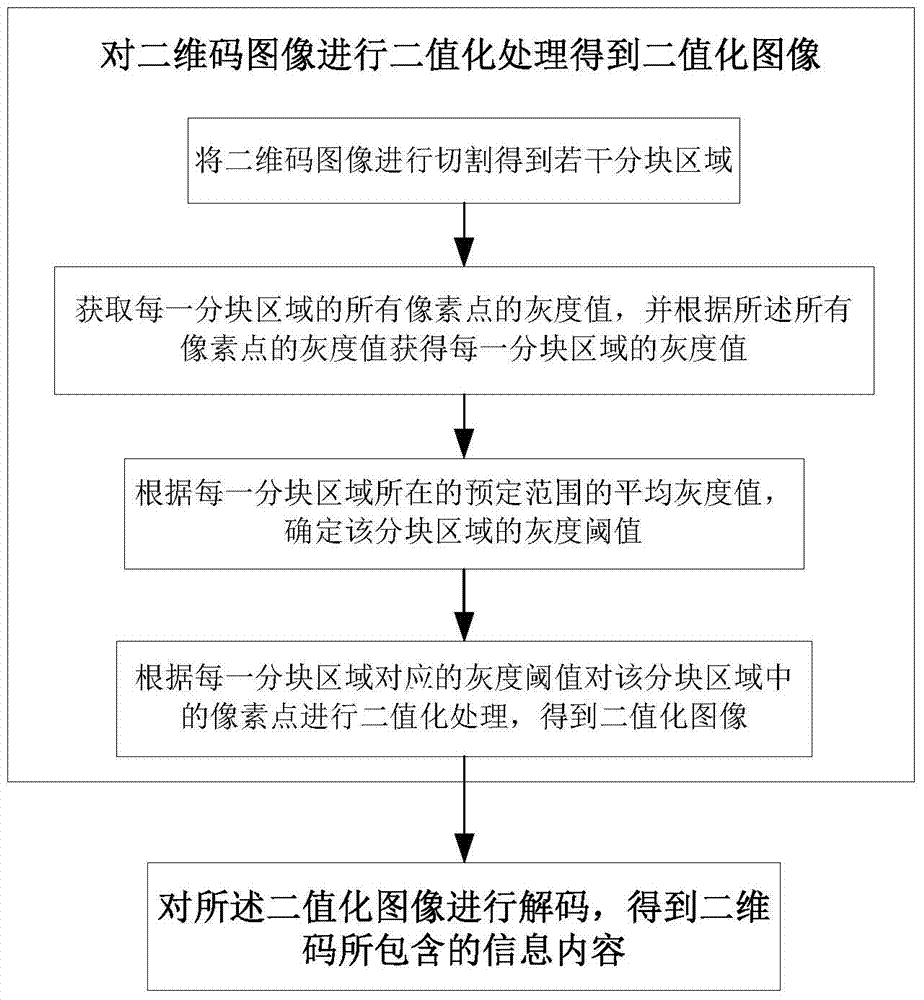

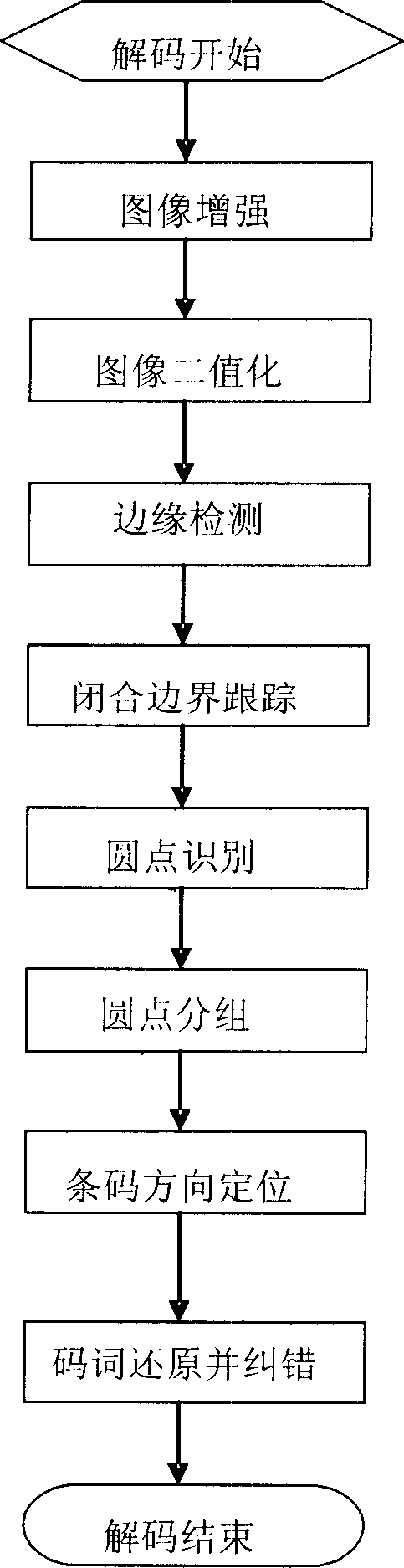

Two-dimensional code decoding system and method

Inactive | CN104517089A | Eliminate distractions; Guaranteed accuracy; Sensing by electromagnetic radiation; Parallel computing; Coding decoding

A method and system for decoding a two-dimensional code are disclosed. During binarization of the two-dimensional code image, the image is divided into block regions, and each block region has its own grayscale threshold. For each block region, whether a pixel is classified as black or white depends not only on the pixel's own grayscale value but also on the average grayscale value of a predetermined area set for the block region containing the pixel. When that predetermined area is brighter overall (has a larger average grayscale value), the threshold for the block region becomes larger, and vice versa.

Owner: PEKING UNIV FOUNDER GRP CO LTD +1
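
A minimal sketch of block-wise adaptive thresholding in the spirit of this abstract. Assumptions: the image is a list of rows of 8-bit grayscale values, the "predetermined area" is the square of blocks within `radius` blocks of the current one, and its average is taken as the mean of the block means; the patent's exact area and averaging rule are not given.

```python
def binarize(image, block=4, radius=1):
    """Return a 0/1 mask (1 = black) using one threshold per block region.

    image is a list of rows of grayscale values (0 = black, 255 = white).
    """
    h, w = len(image), len(image[0])
    rows, cols = -(-h // block), -(-w // block)          # ceiling division

    def cells(by, bx):
        """Pixel coordinates belonging to block (by, bx)."""
        return [(y, x) for y in range(by * block, min((by + 1) * block, h))
                for x in range(bx * block, min((bx + 1) * block, w))]

    means = [[sum(image[y][x] for y, x in cells(by, bx)) / len(cells(by, bx))
              for bx in range(cols)] for by in range(rows)]
    mask = [[0] * w for _ in range(h)]
    for by in range(rows):
        for bx in range(cols):
            area = [means[j][i]
                    for j in range(max(0, by - radius), min(rows, by + radius + 1))
                    for i in range(max(0, bx - radius), min(cols, bx + radius + 1))]
            threshold = sum(area) / len(area)   # brighter surroundings -> higher threshold
            for y, x in cells(by, bx):
                mask[y][x] = 1 if image[y][x] < threshold else 0
    return mask
```

In the test image below the left half is lit at about 200 and the right half at about 100; a single global threshold of 128 would turn the whole right half black, while per-block thresholds (here with `radius=0`) isolate only the two dark pixels.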

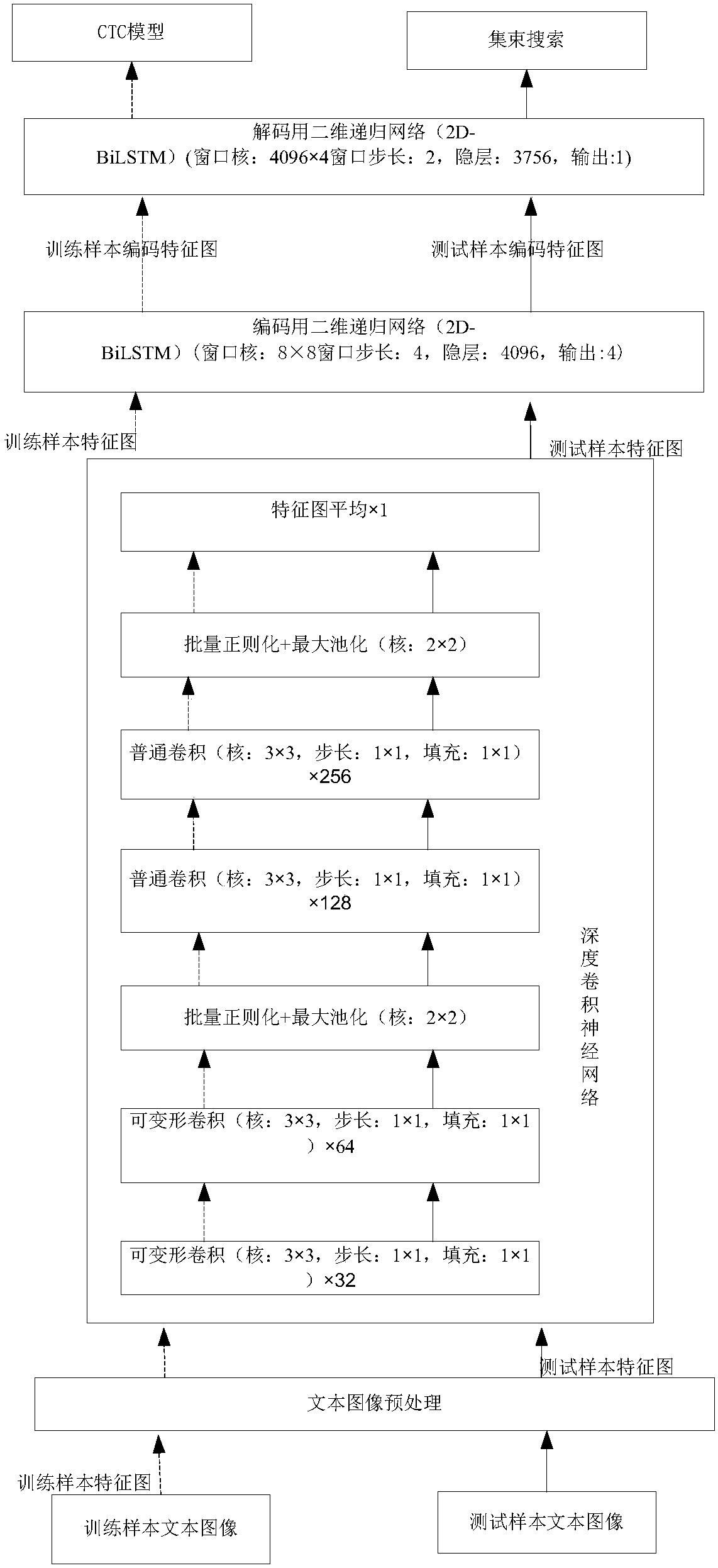

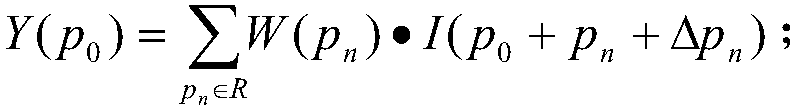

Two-dimensional recursive network-based recognition method of Chinese text in natural scene images

Active | CN108399419A | Overcoming the problem of low recognition rate; Improve recognition accuracy; Neural architectures; Neural learning methods; Time information; Pattern recognition

The invention discloses a method, based on two-dimensional recursive networks, for recognizing Chinese text in natural scene images. First, a training sample set is acquired, and a neural network formed by sequentially connecting a deep convolutional network, a two-dimensional recursive network for encoding, a two-dimensional recursive network for decoding, and a CTC model is trained. Test samples are input into the trained deep convolutional network to obtain their feature maps; these feature maps are input into the trained encoding network to obtain encoded feature maps; the encoded feature maps are input into the trained decoding network to obtain, for each image, a probability for each commonly used Chinese character; finally, beam search is applied and the complete Chinese text in the test samples is recognized. The method makes full use of the spatial/temporal and contextual information in text images, avoids the need to pre-segment text images, and improves recognition accuracy.

Owner: SOUTH CHINA UNIV OF TECH
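
The last stage maps per-frame character probabilities to text. The abstract uses beam search; the sketch below substitutes the simpler CTC best-path (greedy) rule to show how CTC output is collapsed: take the most likely label in each frame, merge consecutive repeats, then delete blanks. The three-symbol alphabet and the probabilities are made up for illustration.

```python
def ctc_best_path(probs, alphabet, blank=0):
    """Greedy CTC decoding: per-frame argmax, merge consecutive repeats, drop blanks."""
    best = [max(range(len(frame)), key=frame.__getitem__) for frame in probs]
    out, prev = [], None
    for k in best:
        if k != prev and k != blank:   # a new, non-blank label starts here
            out.append(alphabet[k])
        prev = k
    return "".join(out)
```

With per-frame argmax labels `中 中 <blank> 中 文 文`, the result is `中中文`: the blank is what separates the two runs of `中`, letting CTC emit a doubled character.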

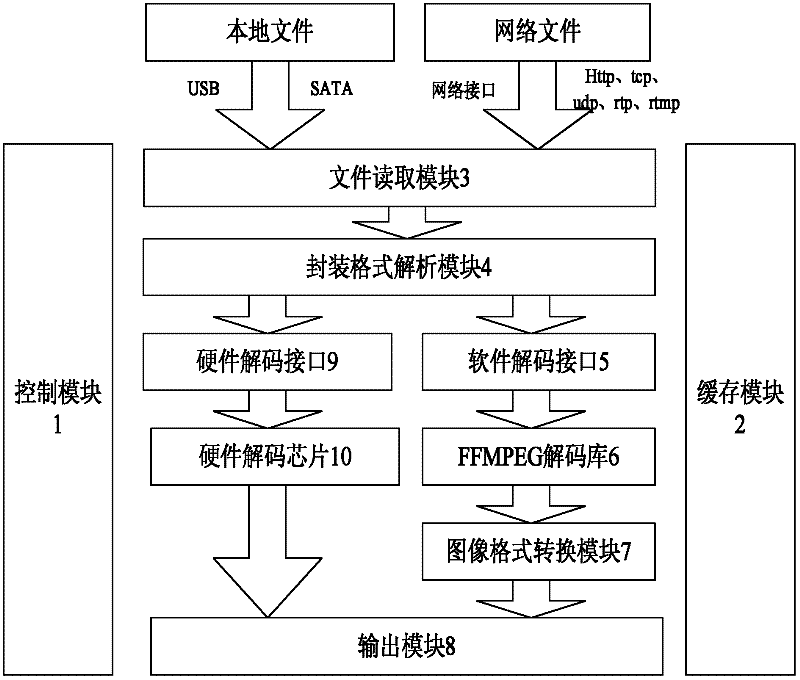

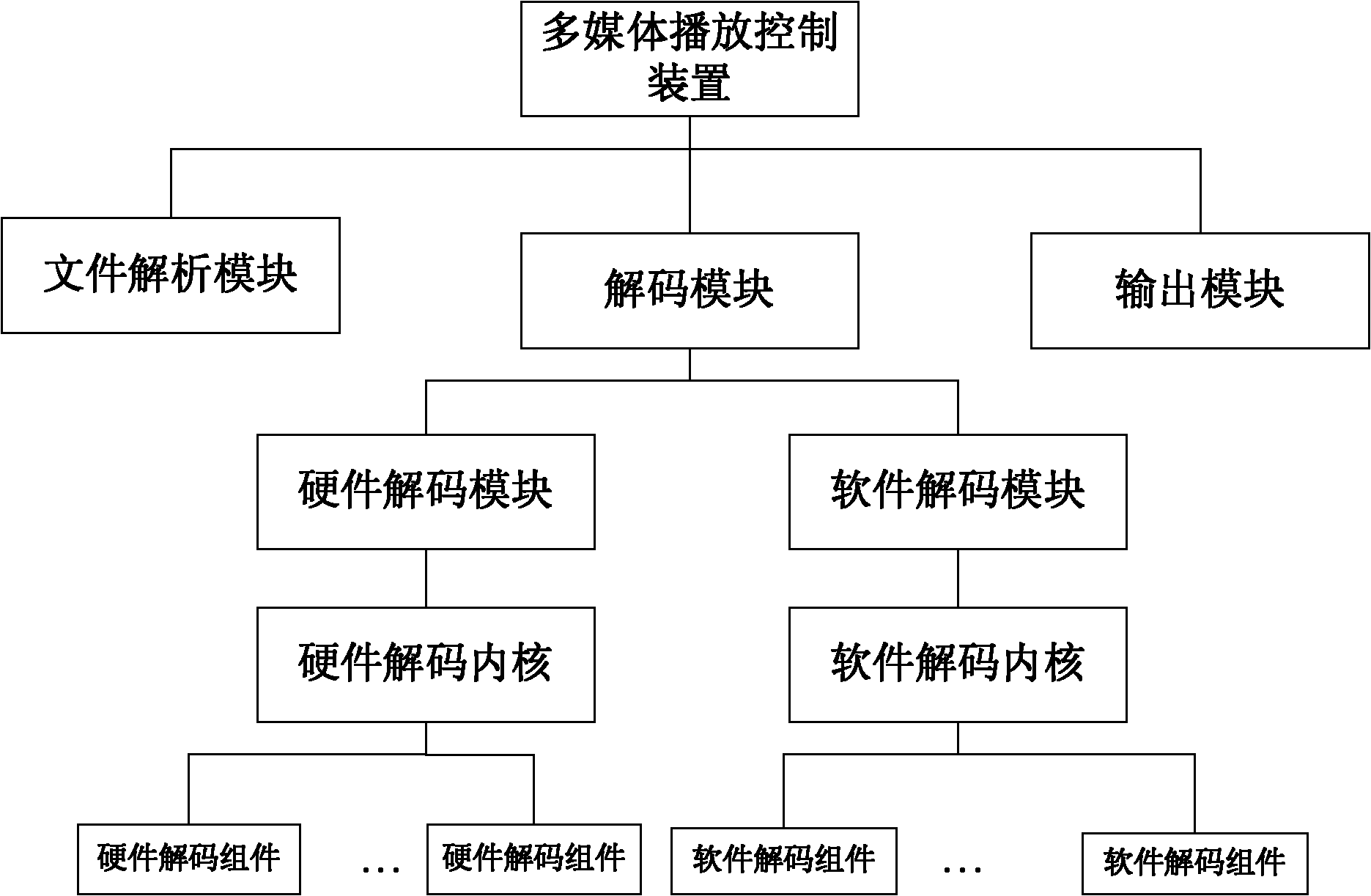

All-format media player capable of supporting hardware decoding for digital STB (Set Top Box)

Active | CN102404624A | Improve scalability; Versatile; Selective content distribution; External storage; Computer terminal

The invention discloses an all-format media player for digital set-top boxes (STBs) that supports hardware decoding. A hardware decoding interface, and a hardware decoding chip connected to it, are placed between the container-format parsing module and the output module; when hardware decoding is required, demultiplexed PES or ES data are sent through the hardware decoding interface to the hardware decoding chip and decoded into audio/video data. The player supports coexisting software and hardware decoding, hardware acceleration, and multiple channel types; its software is highly extensible, and its source code is generic and portable across hardware platforms. It can play media files on local storage media, network media files, and files on external storage media, in all container formats. This overcomes the limitation of existing digital television terminals, which can play media files in only a few specific formats, saving resources and providing users with high-quality high-definition video playback.

Owner: AVIT

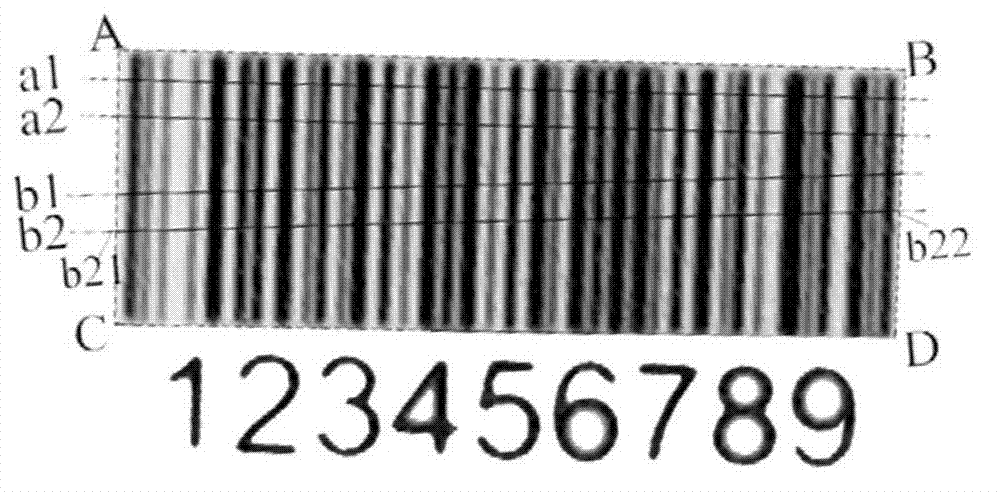

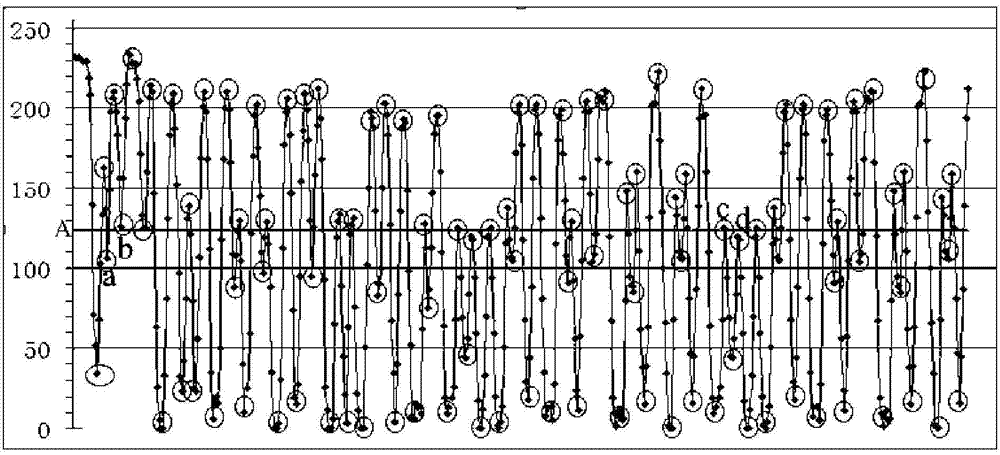

One-dimensional barcode identification method based on image sampling line grey scale information analysis

Active | CN104732183A | Simple process; Improve decoding speed; Sensing by electromagnetic radiation; Information analysis; Barcode

The invention provides a one-dimensional barcode identification method based on analyzing the grayscale information along an image sampling line. The method comprises the following steps: 1) locating the precise region of the one-dimensional barcode through image segmentation and calculation to form a sampling interval ABCD; 2) placing a sampling curve b2 that crosses all the black bars and white spaces of the barcode, oriented according to the barcode's placement in the image or along another direction close to it; 3) reading the grayscale values along the sampling curve b2 from point b21 to point b22 within the sampling interval ABCD to form a grayscale curve, in which the crests correspond to the black bars of the barcode and the troughs to the spaces; 4) processing the sampled grayscale curve to obtain a binary pulse curve corresponding to black and white; 5) extracting the bar/space boundary points from the binary pulse curve, calculating the widths of the bar/space sequence and converting them into widths in unit modules, and looking up the code character set of the corresponding one-dimensional barcode to obtain the corresponding codewords.

Owner: HANGZHOU SYNOCHIP DATA SECURITY TECH CO LTD
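
Steps 4 and 5 (binarizing the sampled curve and converting bar and space widths into unit modules) can be sketched as below. Assumptions: dark pixels have low grayscale values and are treated as bars (if the curve is inverted, as the abstract's crest-equals-bar convention suggests, flip the comparison), the threshold defaults to the midpoint between the darkest and brightest samples, and the total module count of the symbology is known (95 for EAN-13, for example). Looking up the module pattern in the code character set is left out.

```python
def scanline_runs(gray, threshold=None):
    """Binarize a grayscale scan line (1 = dark bar, 0 = light space) and run-length encode it."""
    if threshold is None:
        threshold = (min(gray) + max(gray)) / 2
    runs = []
    for value in gray:
        bit = 1 if value < threshold else 0
        if runs and runs[-1][0] == bit:
            runs[-1][1] += 1
        else:
            runs.append([bit, 1])
    return [tuple(r) for r in runs]


def runs_to_modules(runs, total_modules):
    """Drop the quiet zones, then express each bar/space width in unit modules."""
    start, end = 0, len(runs)
    while start < end and runs[start][0] == 0:
        start += 1
    while end > start and runs[end - 1][0] == 0:
        end -= 1
    symbol = runs[start:end]
    module = sum(width for _, width in symbol) / total_modules   # pixels per module
    return [(bit, max(1, round(width / module))) for bit, width in symbol]
```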

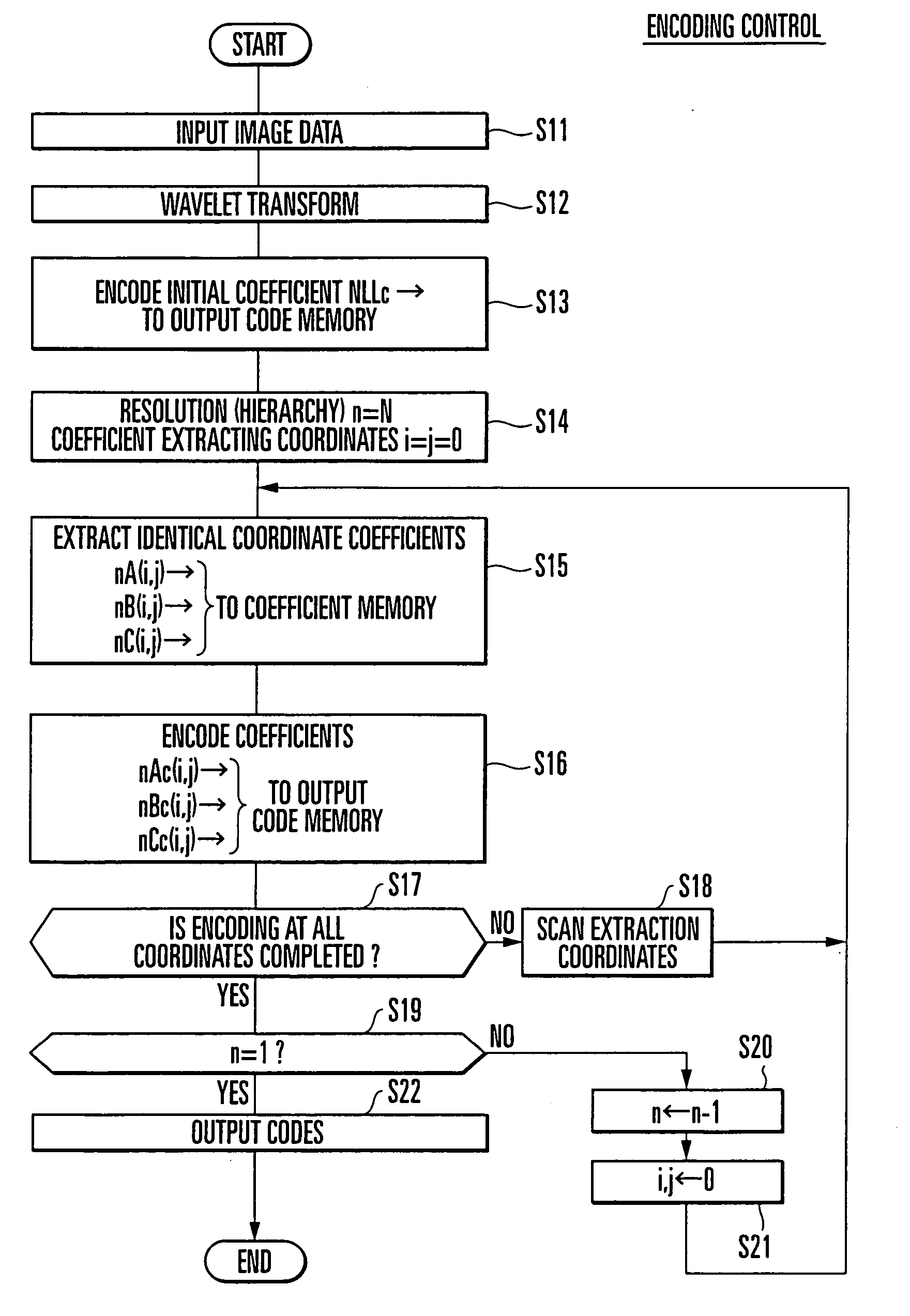

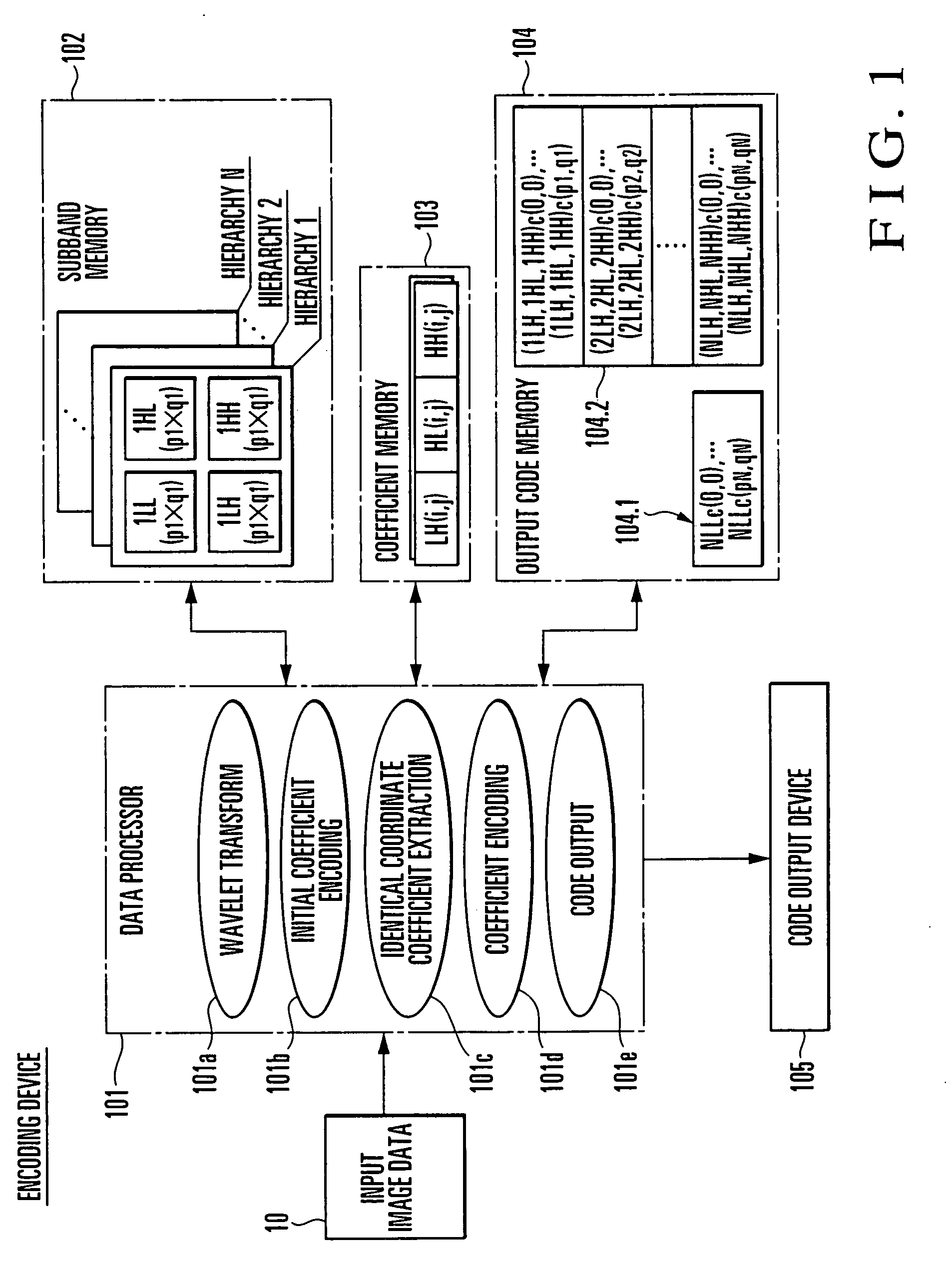

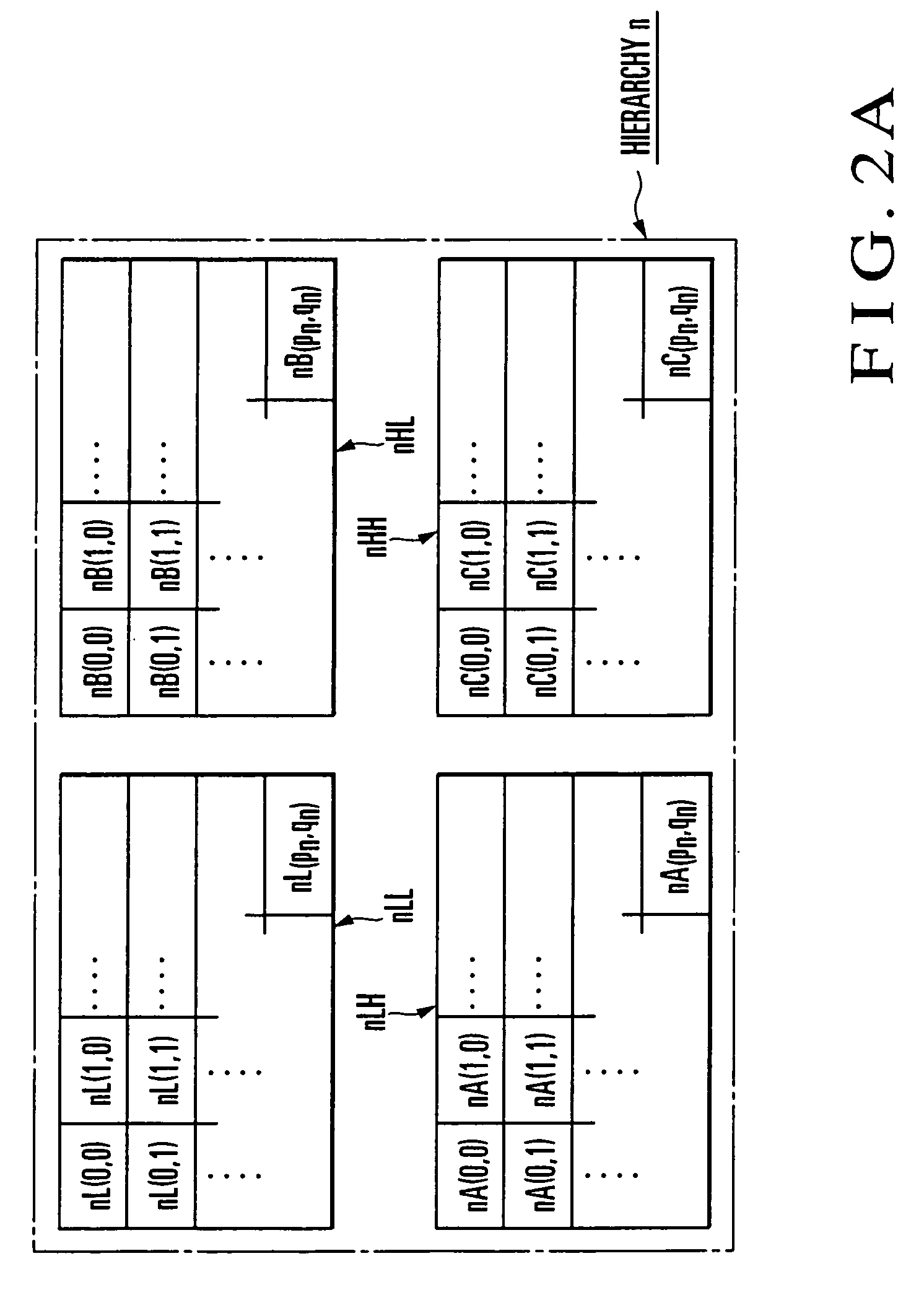

2-Dimensional signal encoding/decoding method and device

Inactive | US20070165959A1 | Reduce the number of times; Shorten the time; Code conversion; Character and pattern recognition; Wavelet decomposition; Haar functions

An image is divided into subbands by wavelet transform using the Haar function as the base, and the lowest-frequency LL subband is entirely encoded. LH, HL, and HH subband coefficients which belong to the wavelet decomposition level of each hierarchy are then encoded such that coefficients at the same spatial position are encoded at once. The decoding side first decompresses the lowest-frequency LL subband, and then decodes sets of the LH, HL, and HH coefficients at the same spatial position in the subband of each wavelet decomposition level one by one. The decoding side immediately performs inverse wavelet transform by using the coefficient values, thereby obtaining the LL coefficient value of the next wavelet decomposition level. This makes it possible to sufficiently increase the processing speed even when the wavelet encoding / decoding is performed using a sequential CPU.

Owner: NEC CORP
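
The decoding order described above can be sketched with the averaging form of the Haar transform on a 2x2 block (a, b / c, d): LL = (a+b+c+d)/4, LH = (a+b-c-d)/4, HL = (a-b+c-d)/4, HH = (a-b-c+d)/4. The LH/HL naming and the averaging normalization are assumptions, since the abstract fixes neither. `haar_inverse` consumes one co-located (LH, HL, HH) triple at a time and immediately writes the four reconstructed samples, so each level's LL band is produced in a single sequential pass.

```python
def haar_forward(img):
    """One Haar level: returns the LL band and (LH, HL, HH) triples in raster order."""
    ll, triples = [], []
    for i in range(0, len(img), 2):
        row = []
        for j in range(0, len(img[0]), 2):
            a, b = img[i][j], img[i][j + 1]
            c, d = img[i + 1][j], img[i + 1][j + 1]
            row.append((a + b + c + d) / 4)
            triples.append(((a + b - c - d) / 4,    # LH: vertical detail
                            (a - b + c - d) / 4,    # HL: horizontal detail
                            (a - b - c + d) / 4))   # HH: diagonal detail
        ll.append(row)
    return ll, triples


def haar_inverse(ll, triples):
    """Rebuild the next finer LL band; each 2x2 block is written as soon as its
    co-located (LH, HL, HH) triple is read, with no full-subband buffering."""
    it = iter(triples)
    h, w = len(ll), len(ll[0])
    out = [[0.0] * (2 * w) for _ in range(2 * h)]
    for i in range(h):
        for j in range(w):
            lh, hl, hh = next(it)
            s = ll[i][j]
            out[2 * i][2 * j] = s + hl + lh + hh
            out[2 * i][2 * j + 1] = s - hl + lh - hh
            out[2 * i + 1][2 * j] = s + hl - lh - hh
            out[2 * i + 1][2 * j + 1] = s - hl - lh + hh
    return out
```

Decoding a two-level image starts from the coarsest LL band and applies `haar_inverse` once per level, finest triples last.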

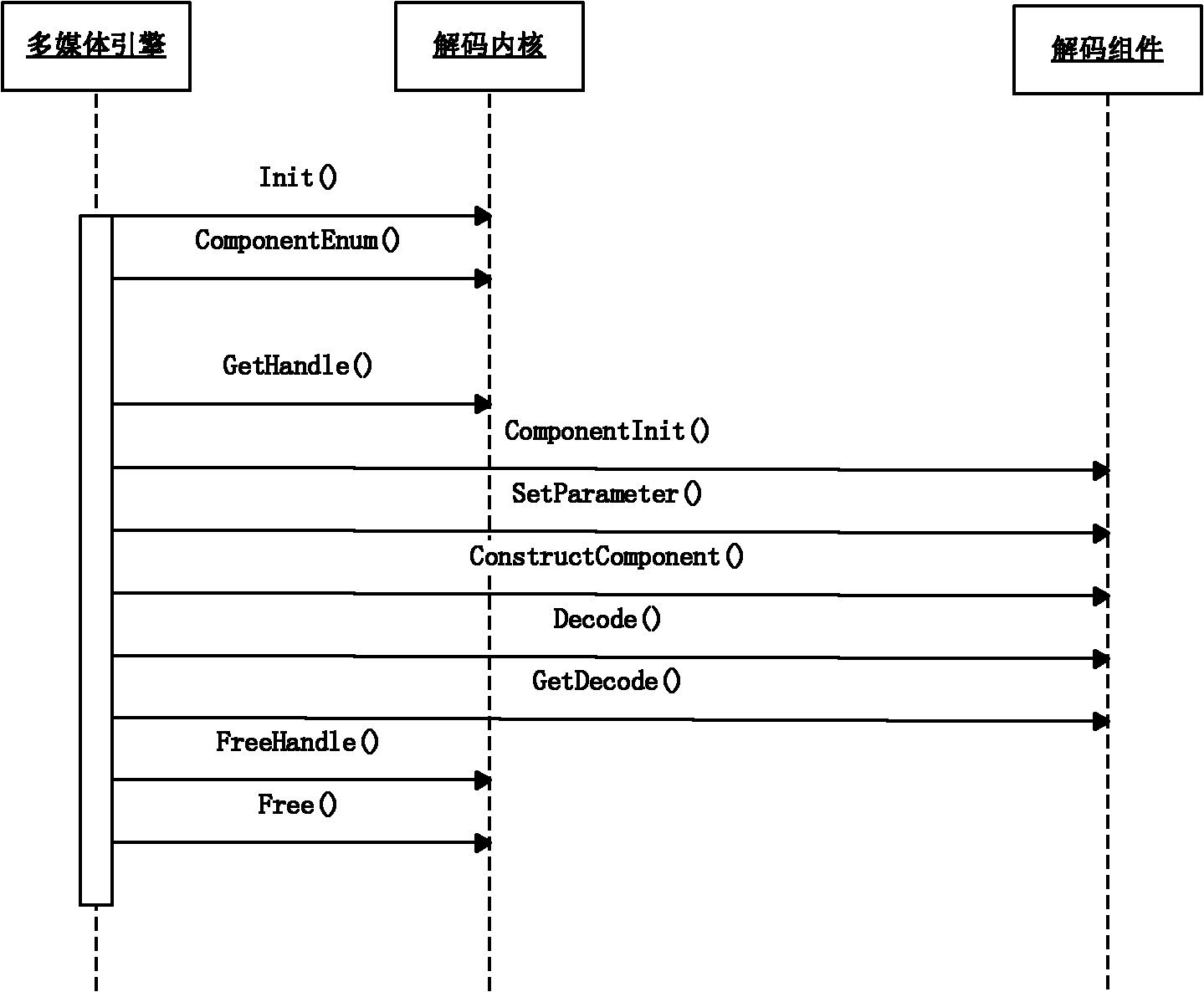

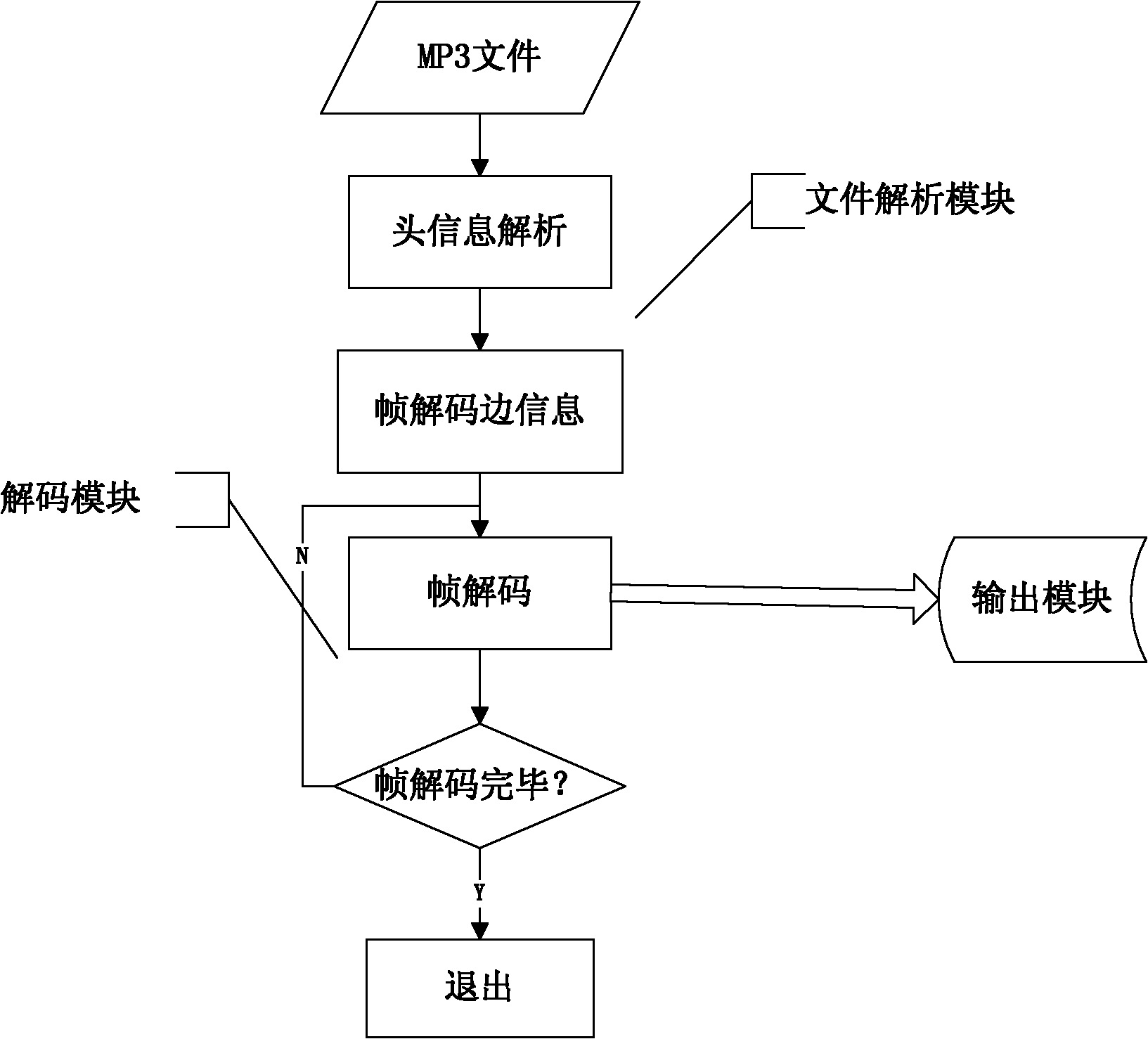

General-purpose multimedia playback equipment supporting software and hardware decoding, and playback method thereof

Inactive | CN102074257A | Portable; Improve decoding speed; Digital recording/reproducing; Display device; Information acquisition

The invention discloses general-purpose multimedia playback equipment supporting both software and hardware decoding, comprising a multimedia information acquisition device, a multimedia processing device, and a multimedia display device. The multimedia processing device comprises a file parsing module that parses multimedia information according to the type of multimedia file, a decoding module that decodes the parsed audio/video streams, and an output module that receives the decoded multimedia information and outputs it to the multimedia display device for display. The equipment makes maximal use of the processing capacity of the hardware platform and improves multimedia processing capacity.

Owner: 博视联(苏州)信息科技有限公司 (Boshilian (Suzhou) Information Technology Co., Ltd.)

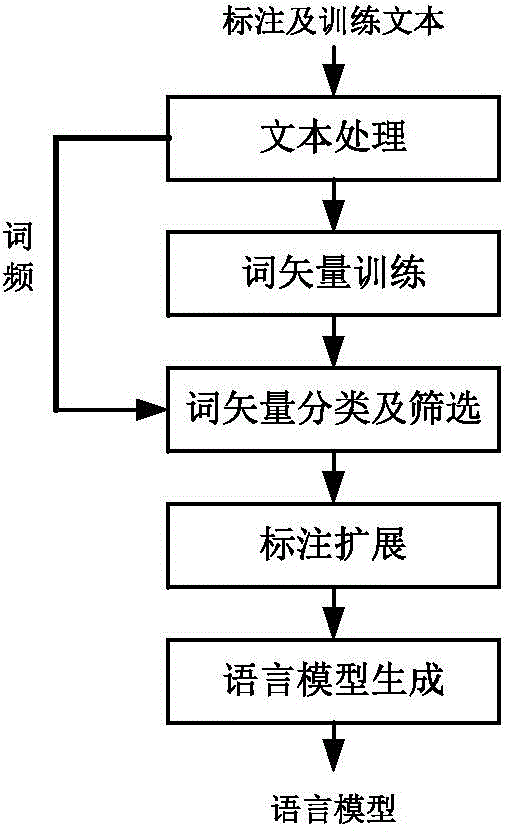

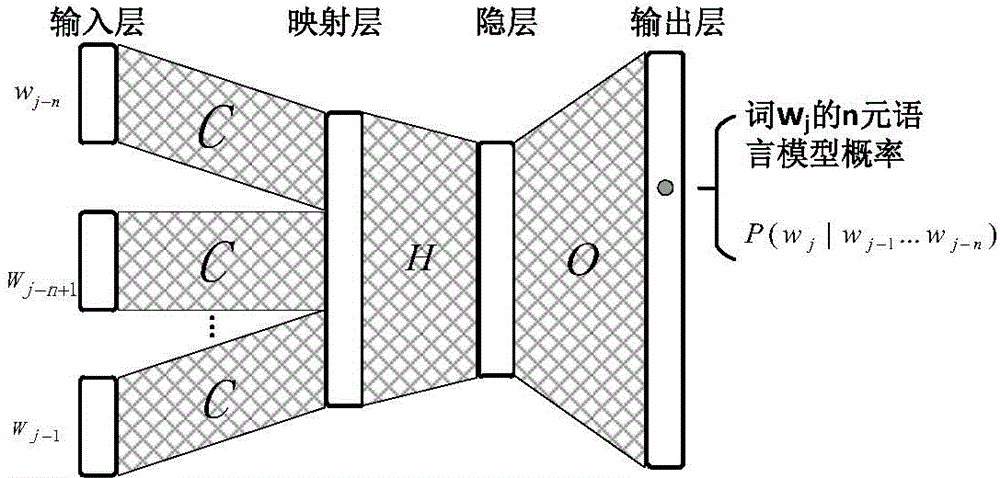

Method for constructing an n-gram grammar model for speech recognition, and speech recognition system

Inactive | CN105261358A | Reduce sparsity; Controlling the Search Path; Speech recognition; Part of speech; Speech identification

The invention provides a method for constructing an n-gram grammar model for speech recognition, and a speech recognition system. The method comprises: step (101), training a neural network language model to obtain word vectors, then classifying the word vectors and screening them over multiple layers to obtain parts of speech; step (102), expanding the manual annotation with a direct word-frequency statistics method: when words are replaced by words of the same class, the 1-gram to n-gram combination units that change relative to the original sentence are counted directly, yielding an n-gram grammar model for the expanded part; step (103), generating a preliminary n-gram grammar model from the manual annotation and interpolating it with the n-gram grammar model of the expanded part to obtain the final n-gram grammar model. Step (101) further includes: step (101-1), inputting the annotation and the training text; step (101-2), training the neural network language model to obtain the word vectors of the words in the dictionary; step (101-3), classifying the word vectors with the k-means method; and step (101-4), performing multi-layer screening on the classification result to obtain the final parts of speech.

Owner: INST OF ACOUSTICS CHINESE ACAD OF SCI +1
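
Step (103)'s final model is obtained by interpolating the preliminary model with the model of the expanded part. A minimal sketch of linear interpolation, assuming both models are given as explicit probability tables keyed by `(history, word)` and an illustrative weight `lam`; a real system would tune the weight on held-out data and handle back-off weights, which this sketch omits.

```python
def interpolate_models(p_base, p_expanded, lam=0.7):
    """Linearly interpolate two n-gram models given as {(history, word): probability}.

    P(w | h) = lam * P_base(w | h) + (1 - lam) * P_expanded(w | h)
    An entry missing from one model contributes probability 0 from that model.
    """
    keys = set(p_base) | set(p_expanded)
    return {k: lam * p_base.get(k, 0.0) + (1 - lam) * p_expanded.get(k, 0.0) for k in keys}
```

Because each input distribution sums to 1 for a given history, the interpolated one does too, for any `lam` between 0 and 1.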

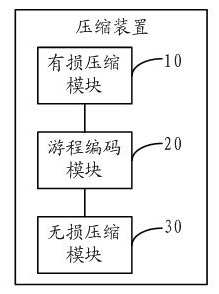

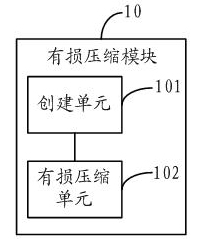

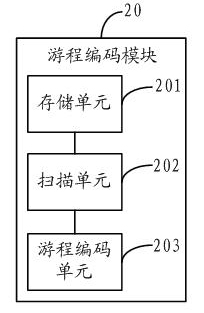

Method and device for compressing film thumbnails

Active | CN102088604A | Reduce digits; Smooth display; Television systems; Digital video signal modification; Thumbnail; Lossless compression

An embodiment of the invention discloses a method for compressing film thumbnails, comprising the following steps: applying lossy compression to the film thumbnails to reduce them to a specific bit depth; applying run-length coding to the reduced thumbnails; and applying lossless compression to the run-length-coded thumbnails to obtain the compressed pictures. An embodiment also provides a device for compressing film thumbnails. By combining lossy compression, run-length coding, and lossless compression, the invention achieves a high compression ratio, high compression efficiency, low distortion, and fast decoding.

Owner: SHENZHEN SKYWORTH DIGITAL TECH CO LTD
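
The three-stage pipeline can be sketched on a flat list of 8-bit pixel values. Assumptions: the lossy stage simply drops low-order bits (`keep_bits` is a hypothetical parameter), runs are stored as (length, value) byte pairs capped at 255, and Python's `zlib` (DEFLATE) stands in for the unspecified lossless coder.

```python
import zlib


def compress_thumbnail(pixels, keep_bits=4):
    """Lossy bit-depth reduction, then run-length coding, then lossless zlib (DEFLATE)."""
    shift = 8 - keep_bits
    reduced = [p >> shift for p in pixels]          # lossy: drop low-order bits
    rle = bytearray()
    i = 0
    while i < len(reduced):
        j = i
        while j < len(reduced) and reduced[j] == reduced[i] and j - i < 255:
            j += 1
        rle += bytes((j - i, reduced[i]))            # (run length, value) pair
        i = j
    return zlib.compress(bytes(rle))


def decompress_thumbnail(blob, keep_bits=4):
    """Invert the lossless and run-length stages; the dropped bits come back as zeros."""
    shift = 8 - keep_bits
    rle = zlib.decompress(blob)
    pixels = []
    for k in range(0, len(rle), 2):
        pixels.extend([rle[k + 1] << shift] * rle[k])
    return pixels
```

Reducing the bit depth first lengthens the runs, which is what makes the run-length and lossless stages effective; the reconstruction error is at most 2^(8 - keep_bits) - 1 per pixel.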

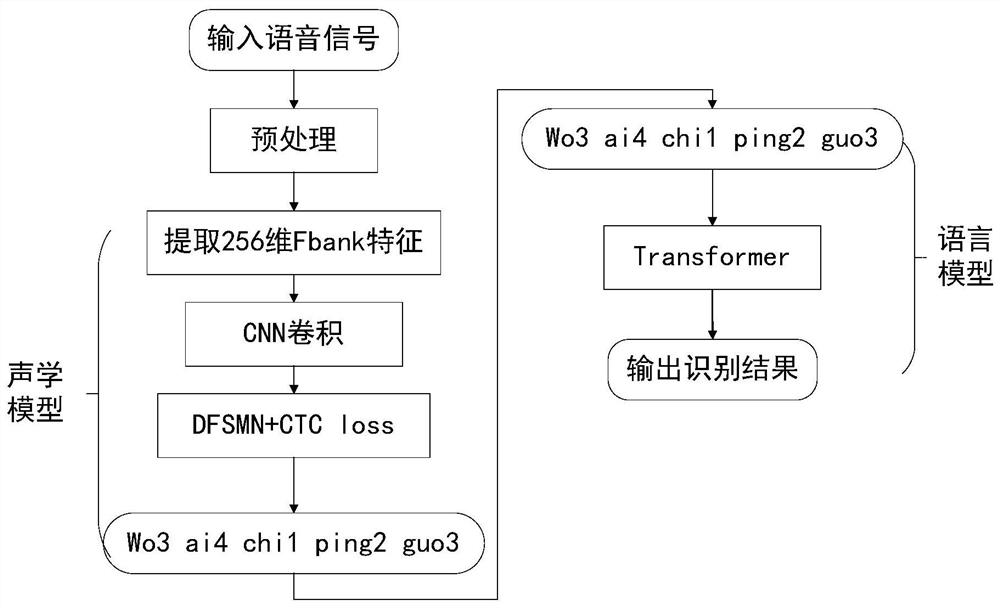

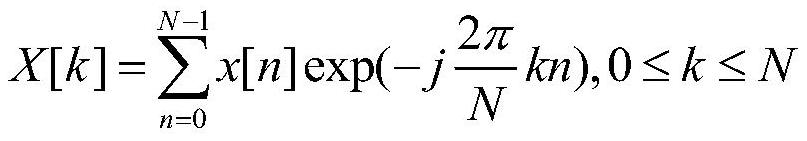

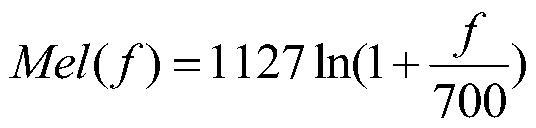

Chinese speech recognition method combining Transformer and CNN-DFSMN-CTC

Inactive | CN111968629A | Increase training speed; Improve recognition accuracy; Speech recognition; Data set; Beam search

The invention claims a Chinese speech recognition method combining a Transformer with CNN-DFSMN-CTC. The method comprises the steps of: S1, preprocessing the speech signal and extracting 80-dimensional log-Mel filterbank (Fbank) features; S2, convolving the extracted 80-dimensional Fbank features with a CNN; S3, feeding the features into a DFSMN network; S4, using the CTC loss as the loss function of the acoustic model, predicting with a beam search algorithm, and optimizing with the Adam optimizer; S5, introducing a strong Transformer language model and training iteratively until an optimal model structure is reached; and S6, combining the Transformer with the CNN-DFSMN-CTC acoustic model for adaptation and verifying on multiple data sets to obtain the best recognition result. The method achieves higher recognition accuracy and faster decoding: after verification on several data sets the character error rate reaches 11.8%, and the best character error rate, 7.8%, is obtained on the Aidatang data set.

Owner: CHONGQING UNIV OF POSTS & TELECOMM

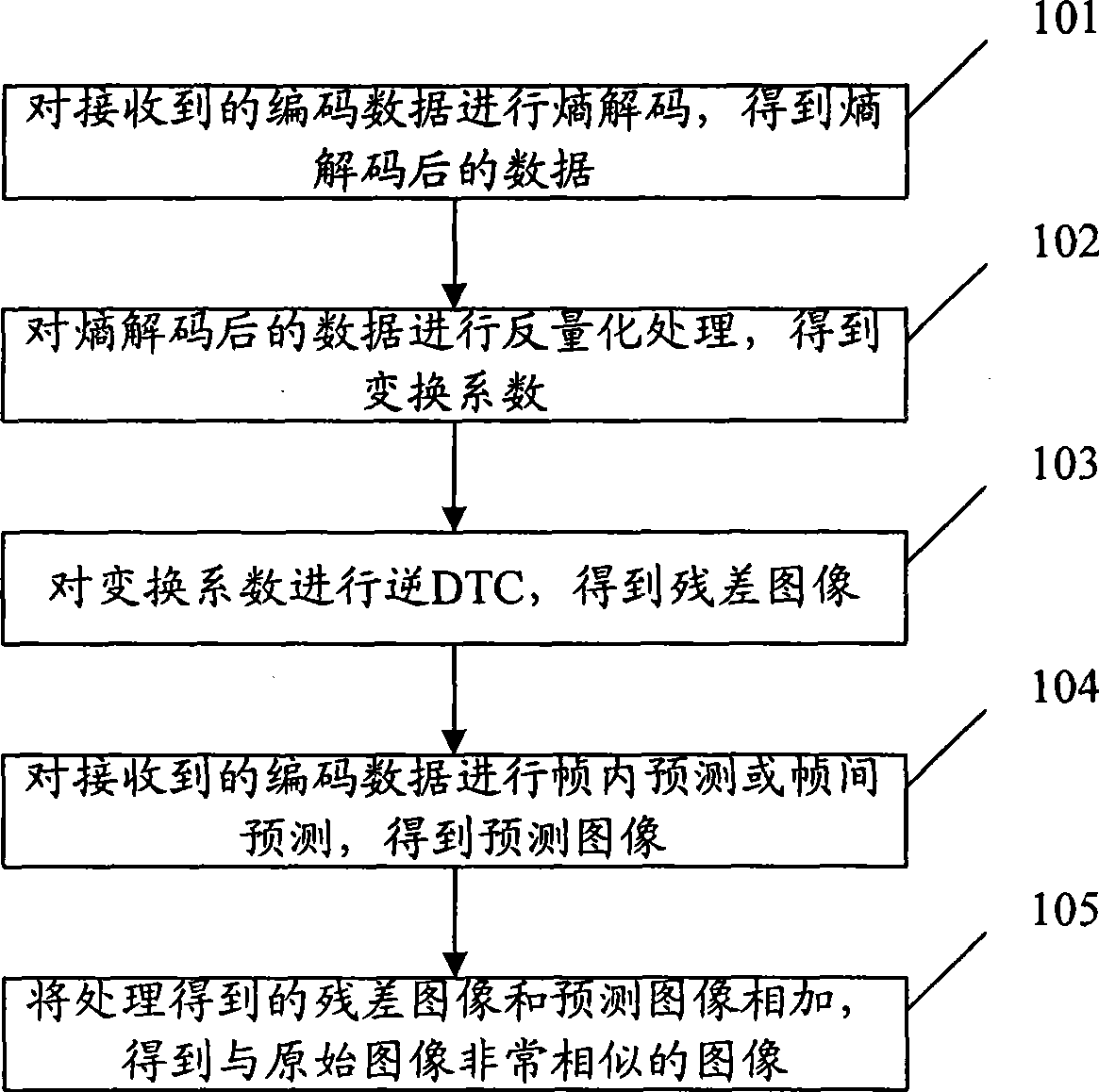

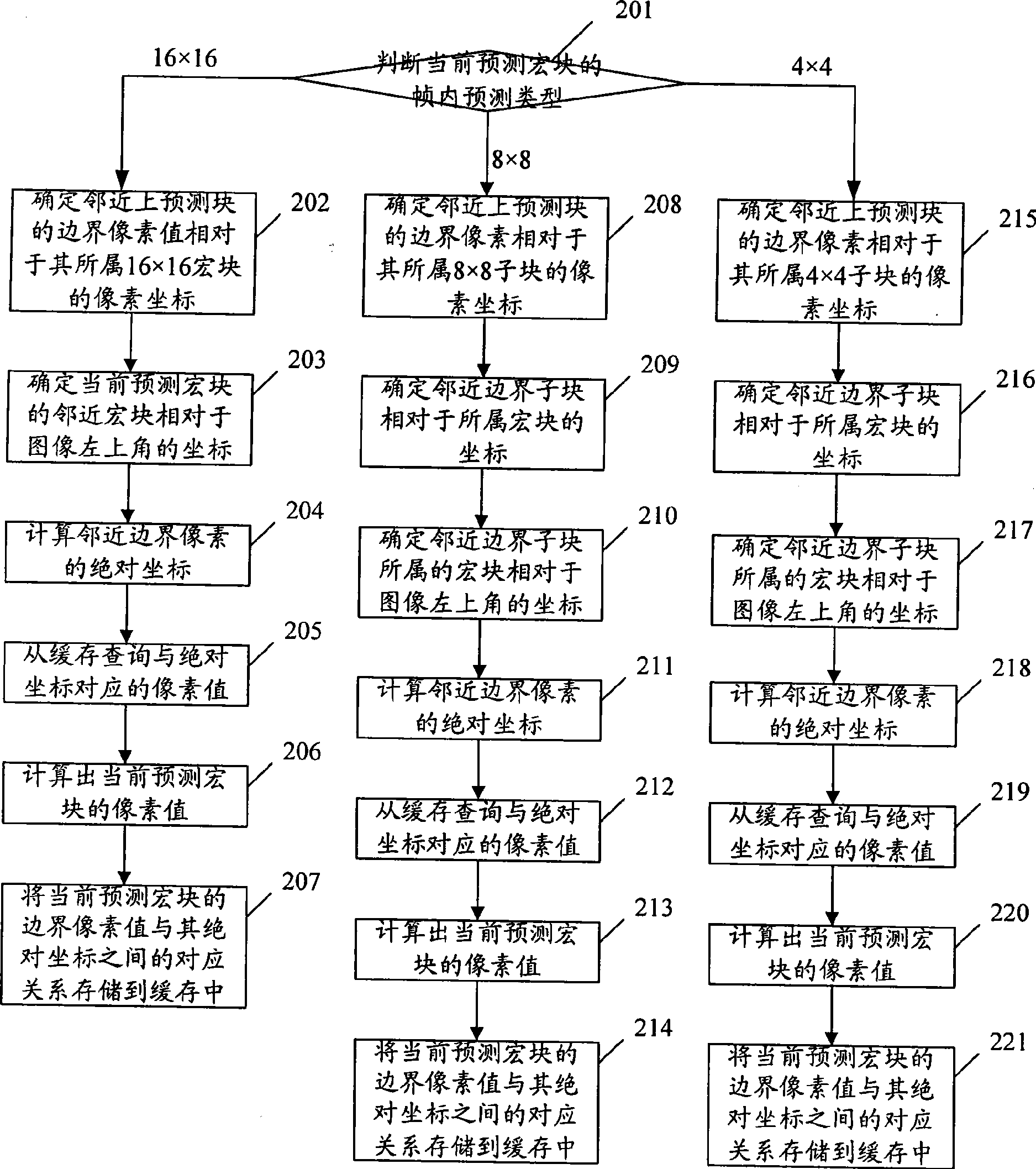

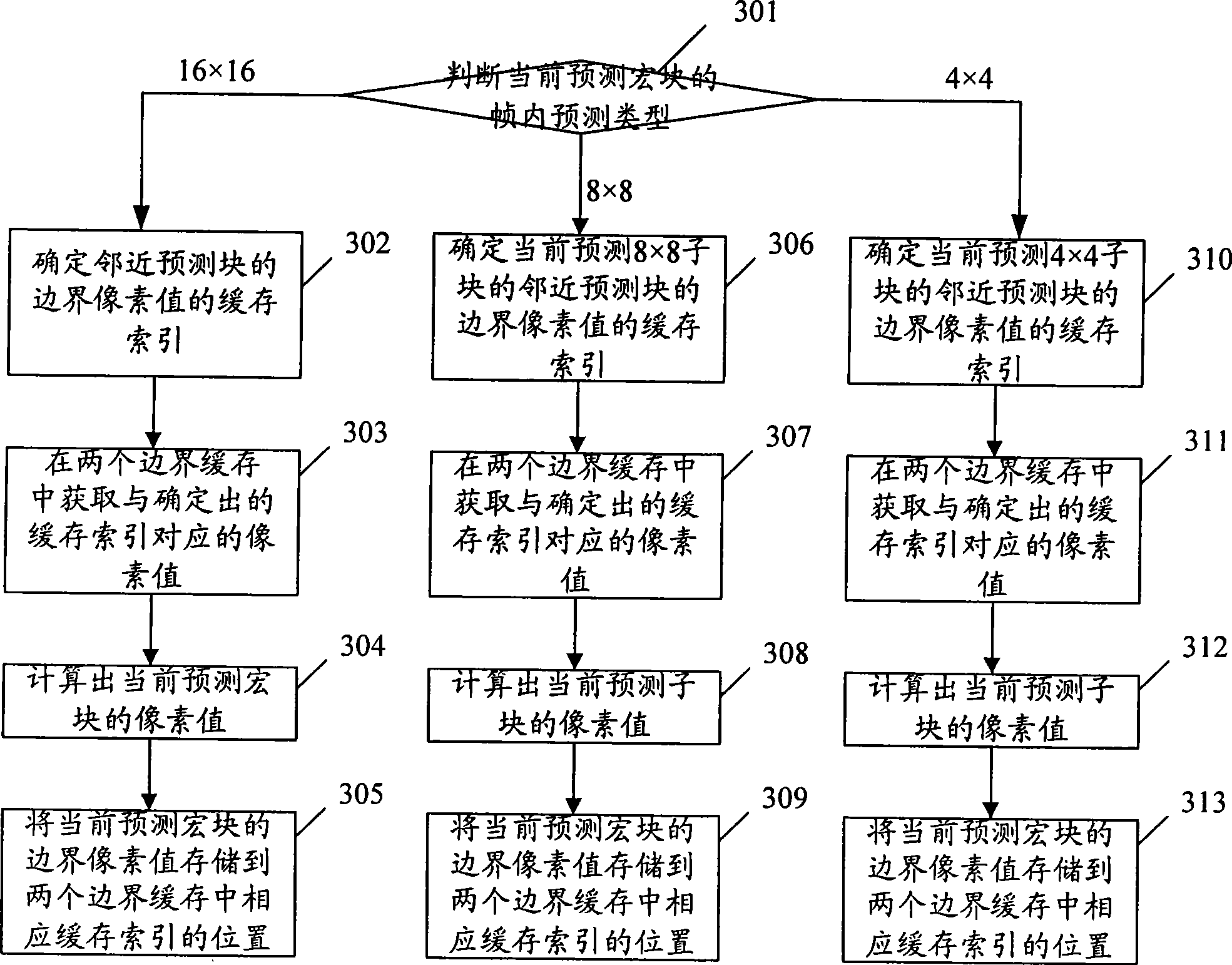

Method and apparatus for intra-frame prediction

Inactive | CN101365136A | Shorten forecast time; Improve decoding speed; Television systems; Digital video signal modification; Algorithm; Intra-frame

Owner: SHENZHEN COSHIP ELECTRONICS CO LTD

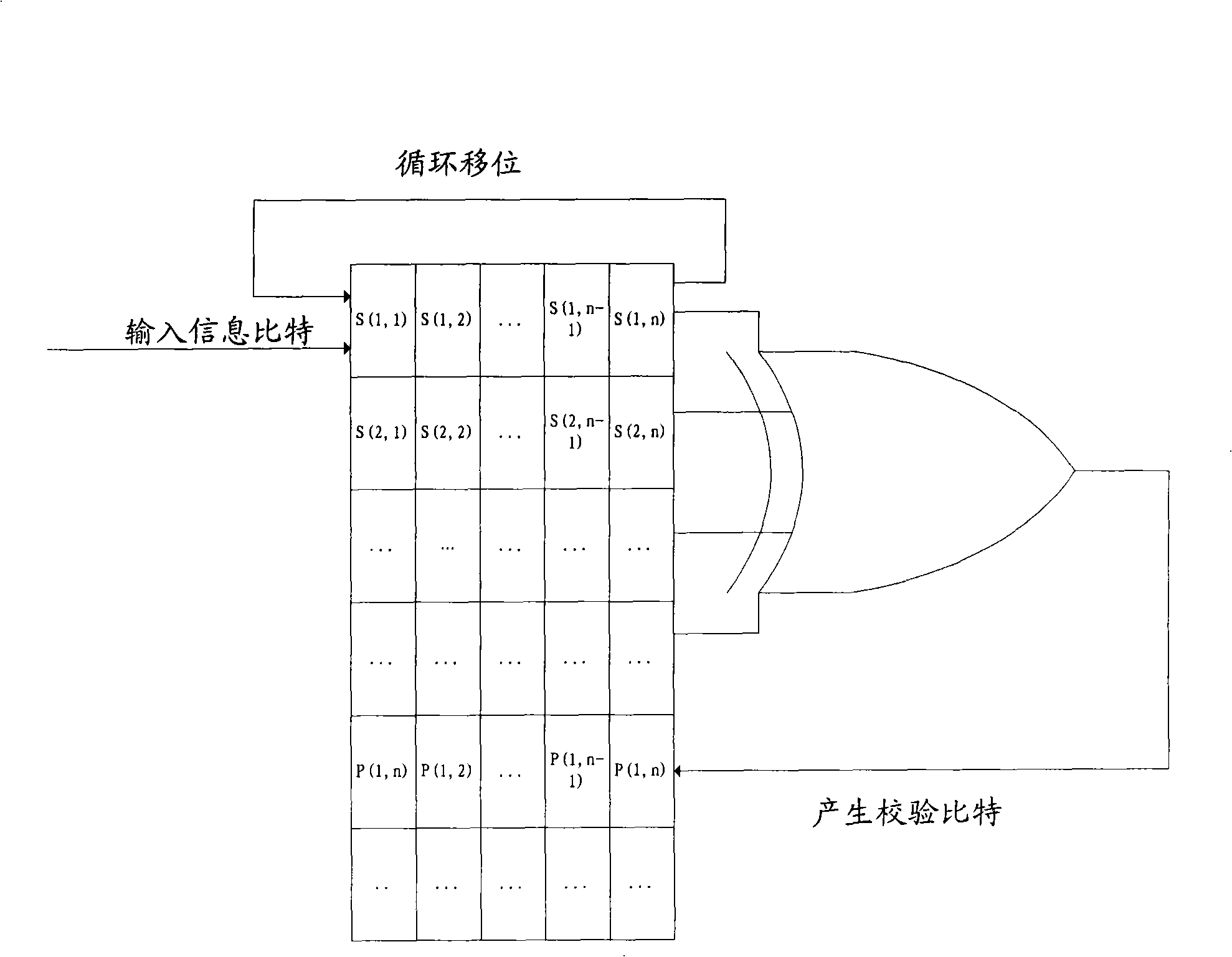

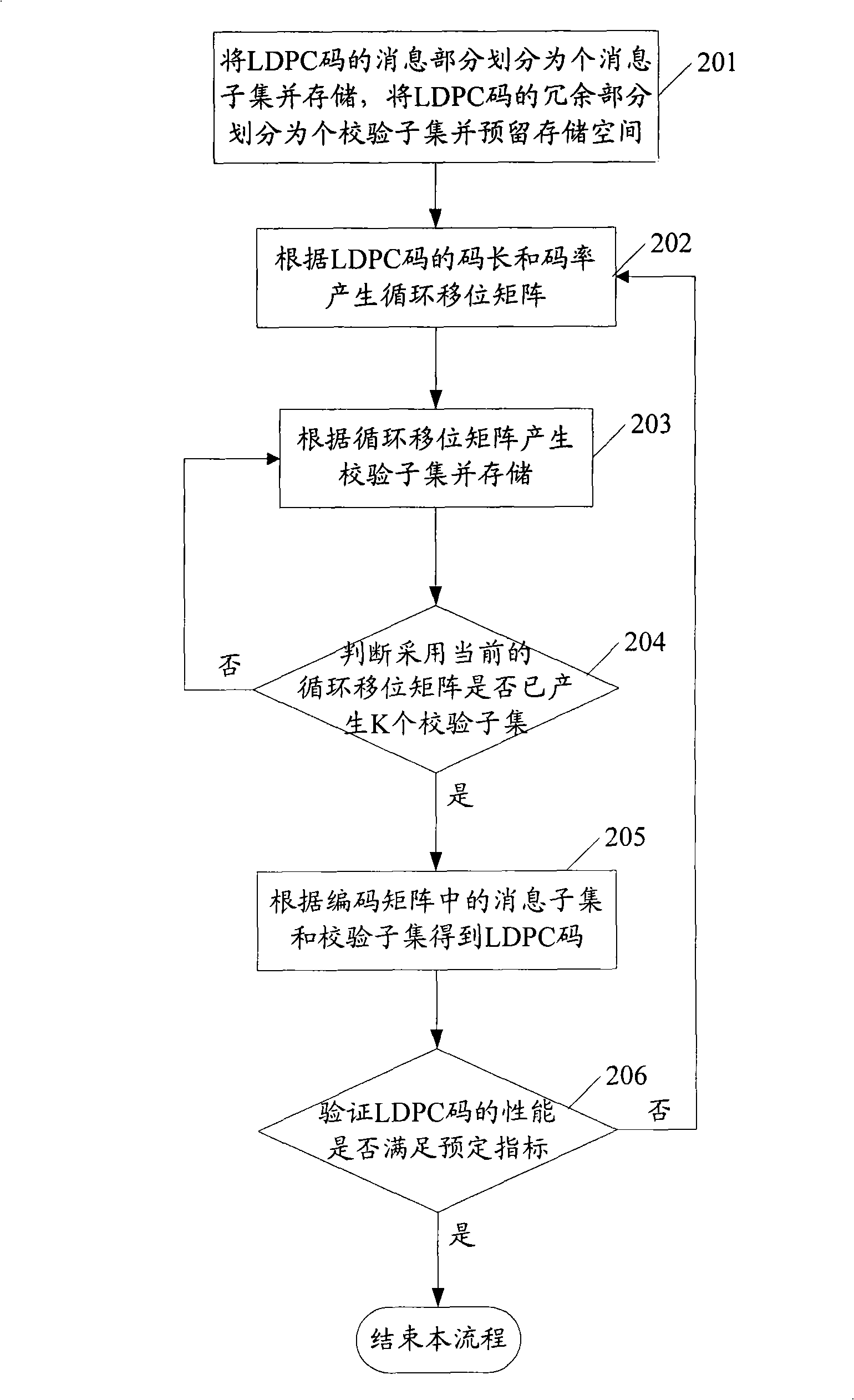

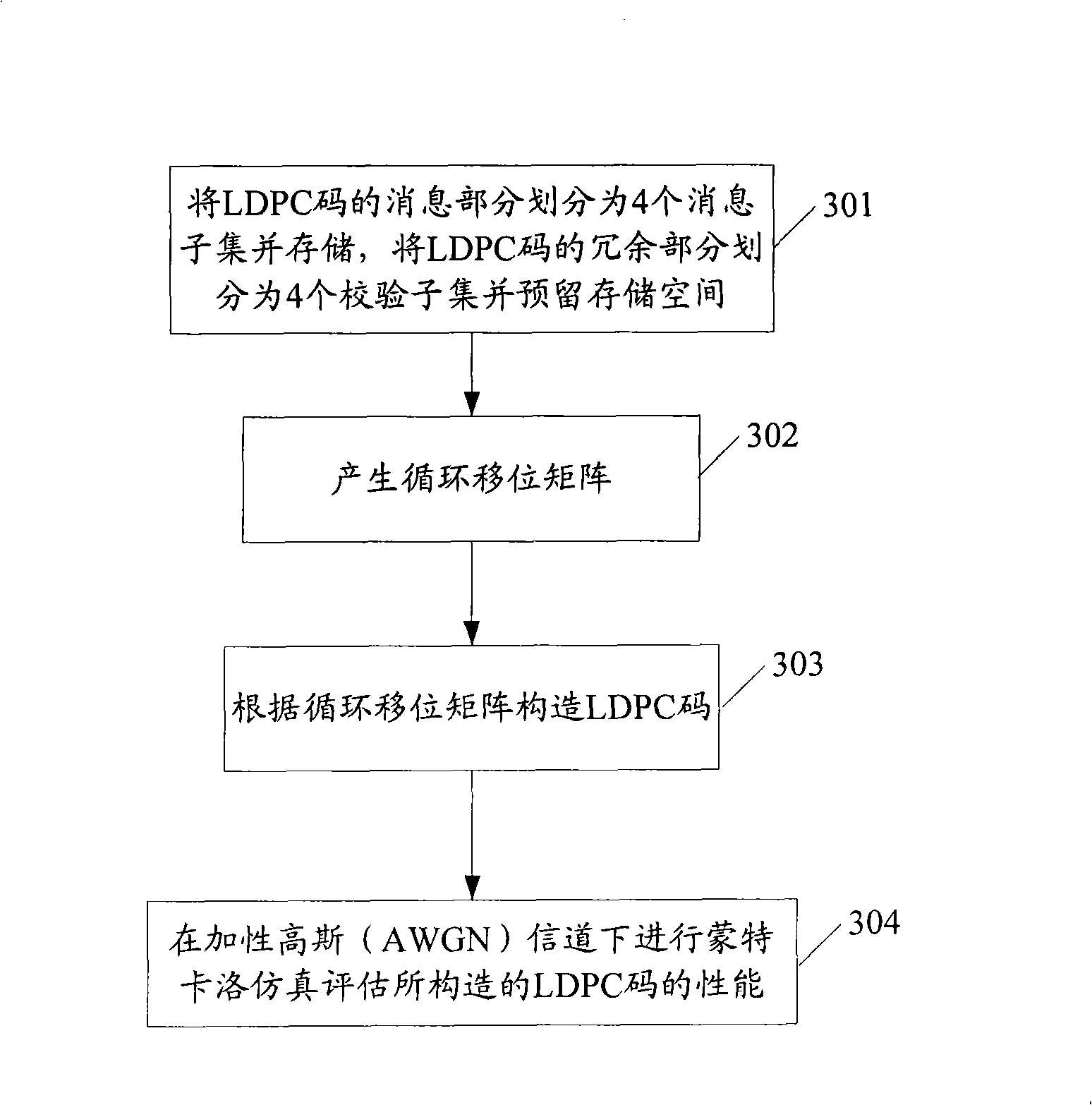

Construction method, encoder, and decoder for low-density parity-check (LDPC) codes

Inactive | CN101340193A | Reduce complexity; Improve encoding speed; Error correction/detection using multiple parity bits; Shift matrix; Cyclic shift

An embodiment of the invention discloses a construction method, an encoder, and a decoder for low-density parity-check (LDPC) codes. The message part and the redundant part of the LDPC code to be constructed are divided into M message subsets and K check subsets, respectively, and stored in different rows of an encoding matrix. A cyclic shift matrix is generated according to the code length and code rate of the LDPC code to be constructed. Before each row-wise XOR of the encoding matrix elements, the elements are cyclically shifted according to the cyclic shift matrix, so that the information bits and check bits in the same row that take part in the XOR differ each time, producing different check bits. The embodiment can therefore construct LDPC codes by searching for a cyclic shift matrix; compared with the large number of matrix operations required in the prior art, this reduces the complexity of constructing, encoding, and decoding LDPC codes.

Owner: POTEVIO INFORMATION TECH

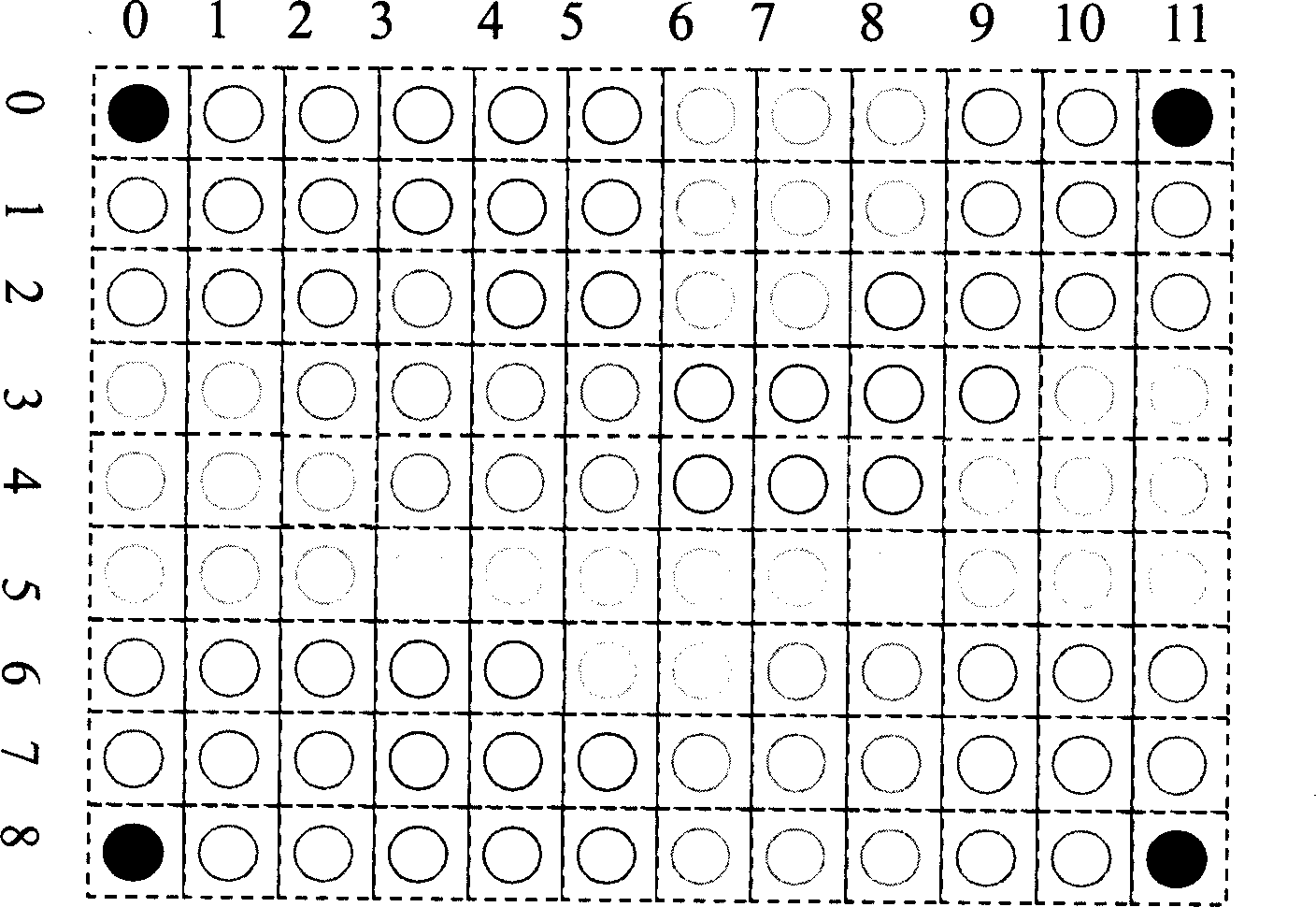

Two-dimensional code, encoding and decoding method thereof

Inactive | CN1885311A | Reduce adhesion; Lower requirement; Co-operative working arrangements; Character and pattern recognition; Decoding methods; Imaging processing

Owner: SHENZHEN SYSCAN TECHC CO LTD

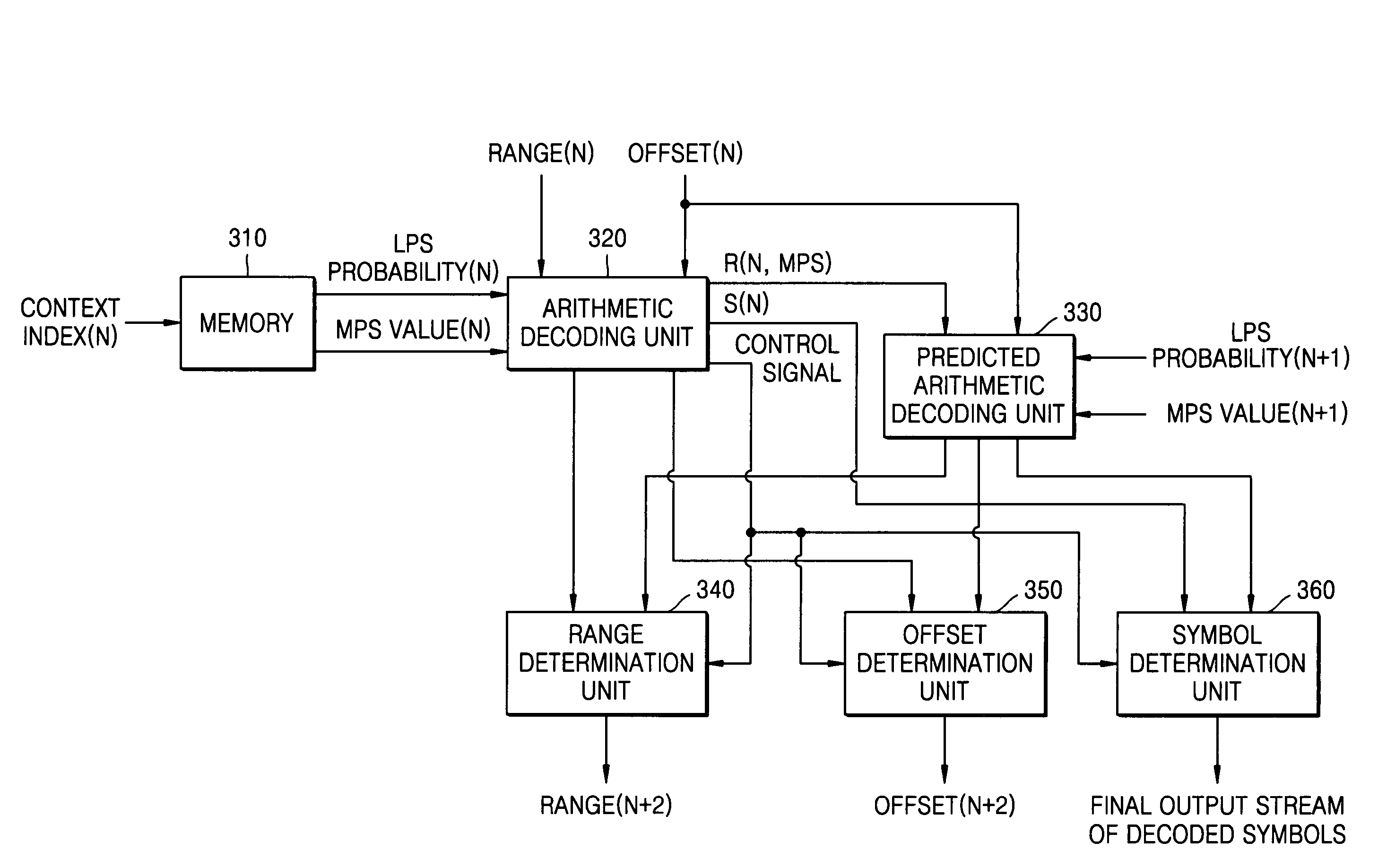

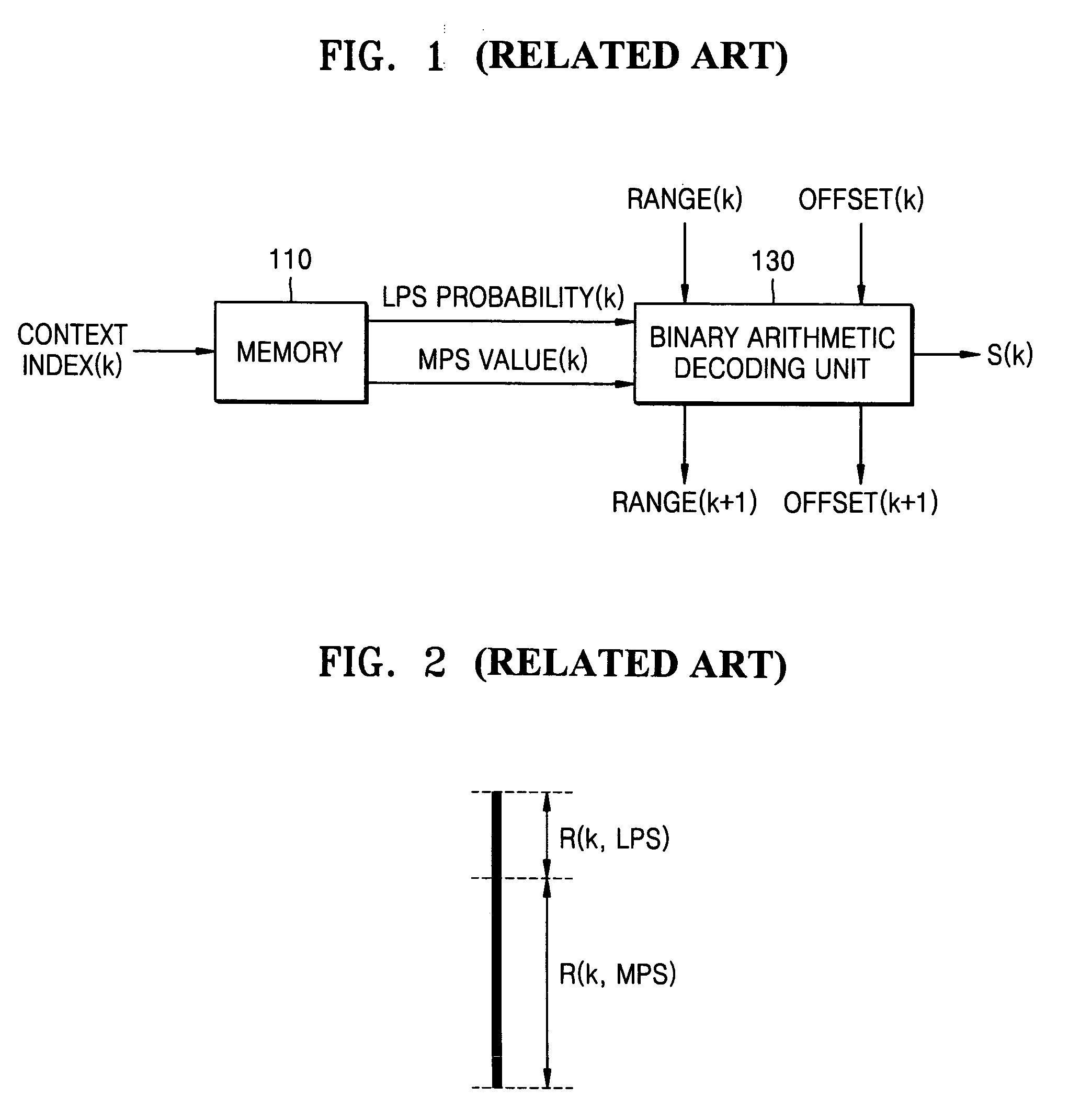

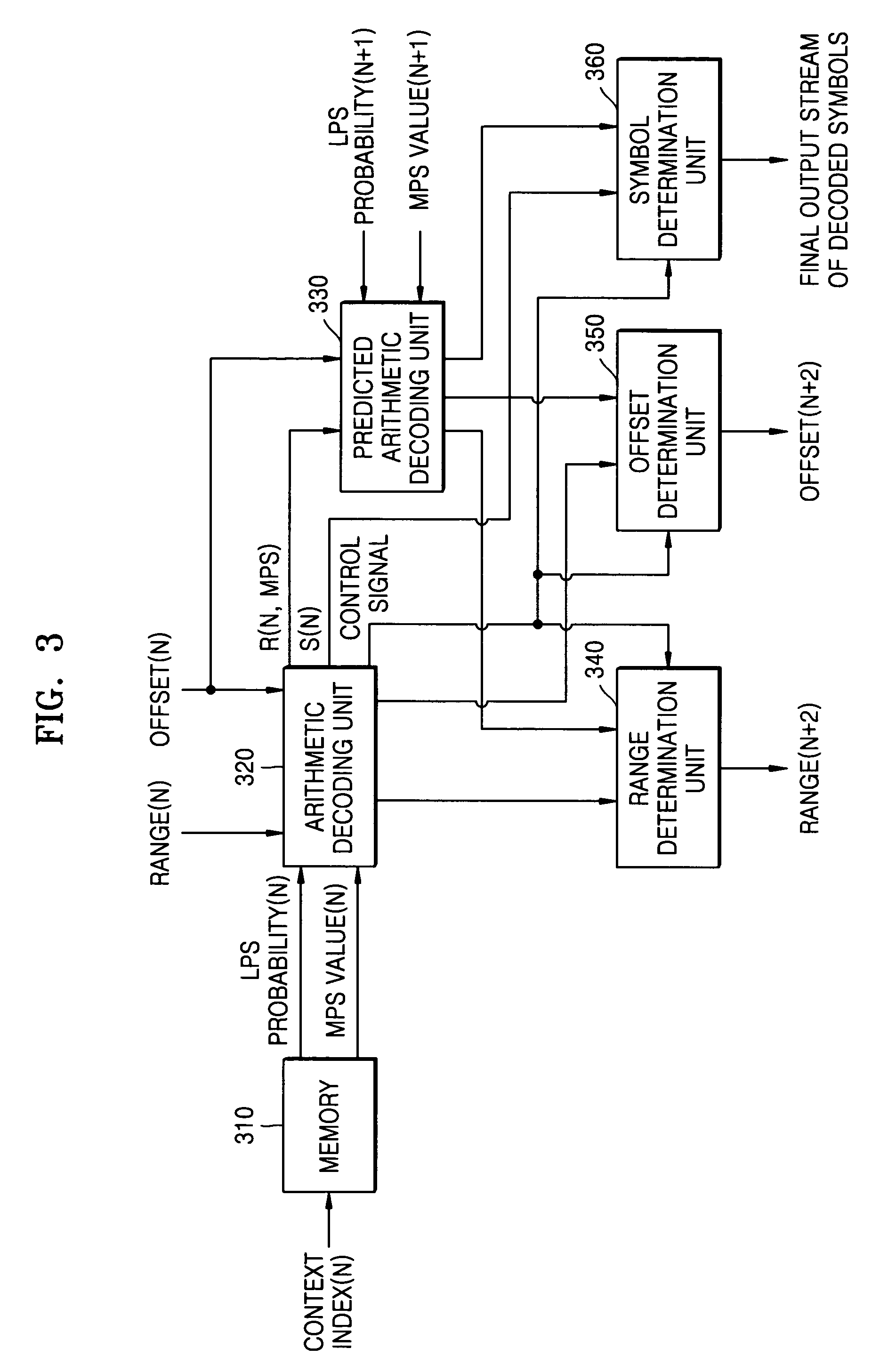

Arithmetic decoding apparatus and method

Inactive | US7304590B2 | Improve decoding speed; Code conversion; Character and pattern recognition; Decoding methods

Owner: KOREA ADVANCED INST OF SCI & TECH +1

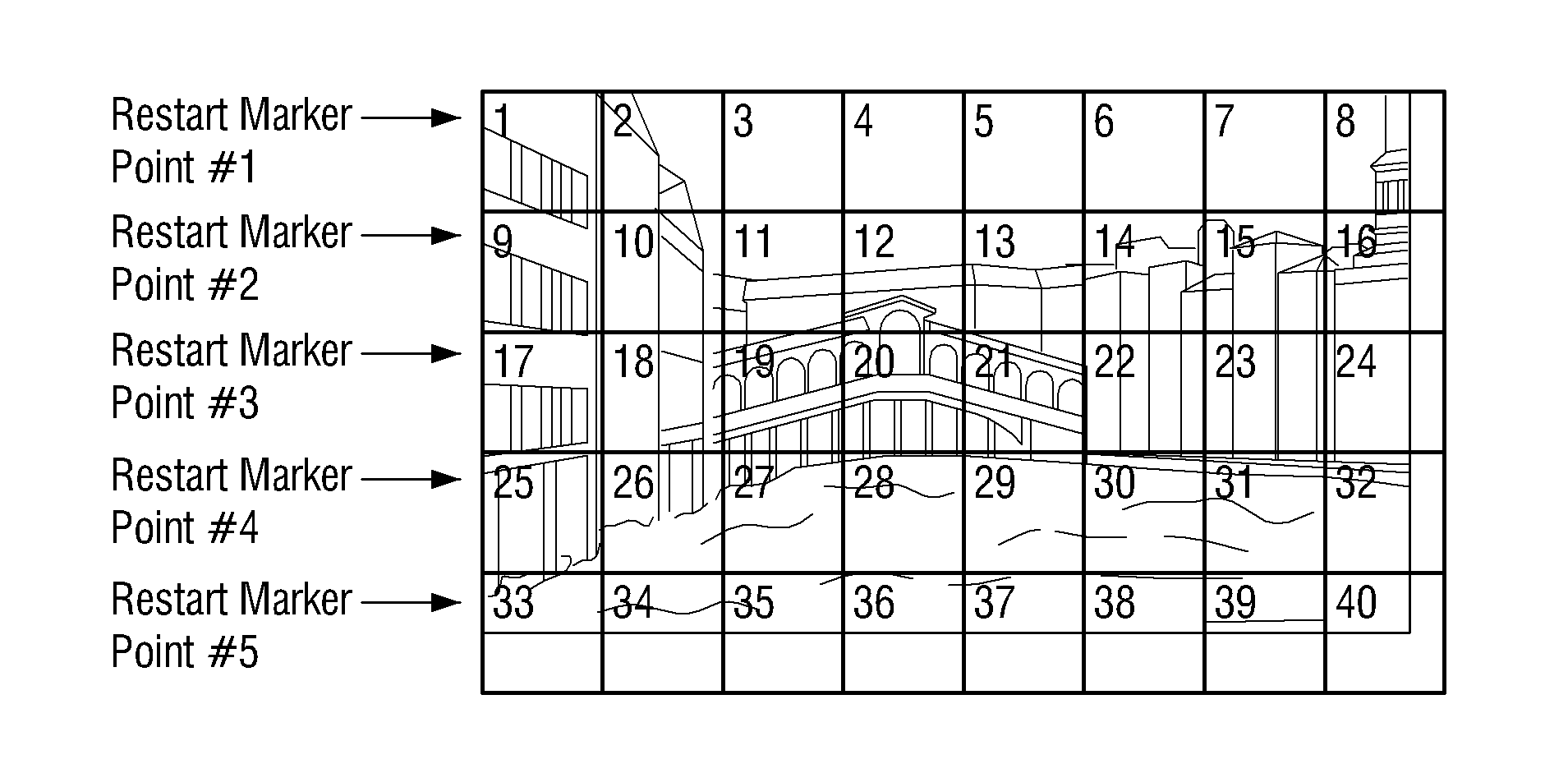

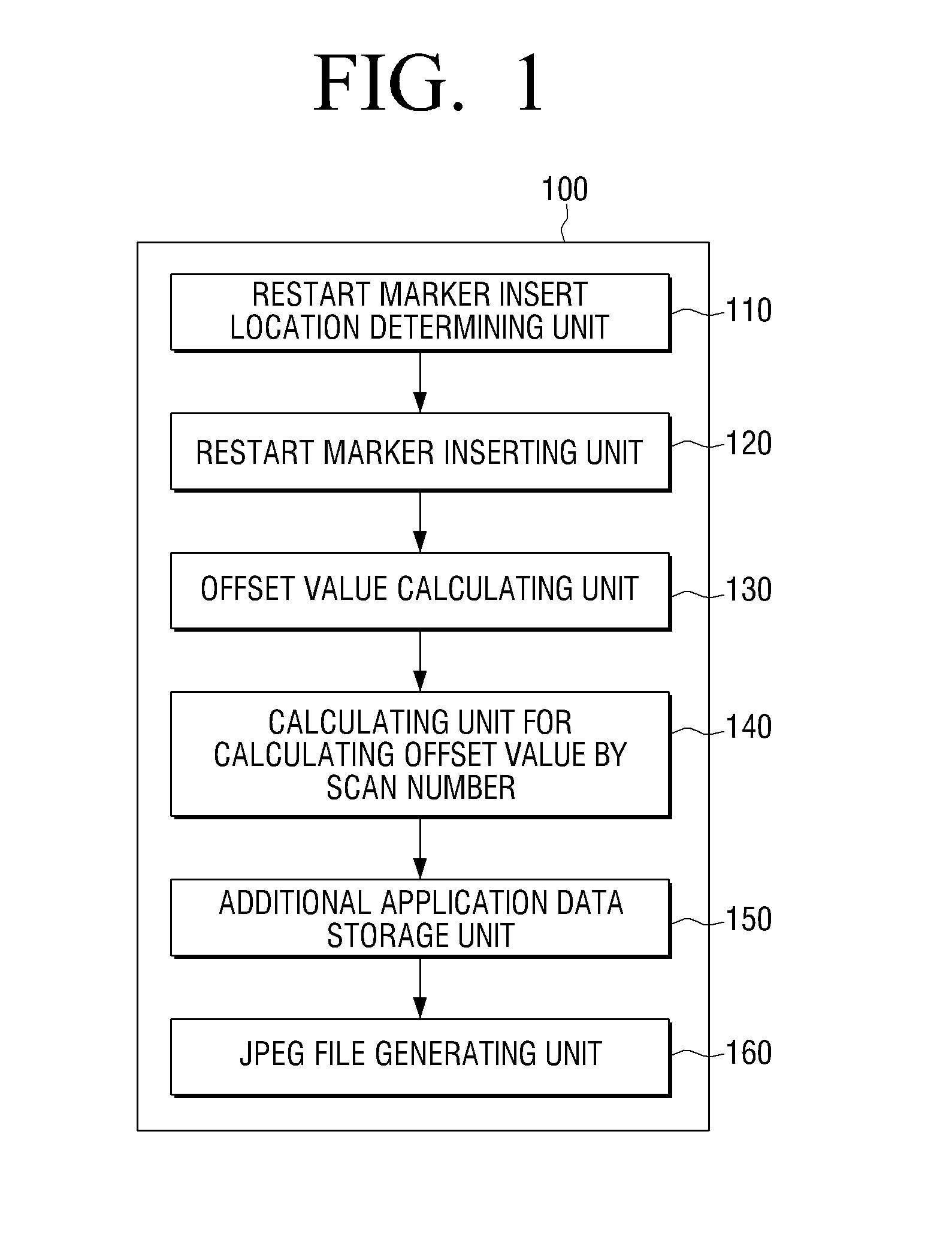

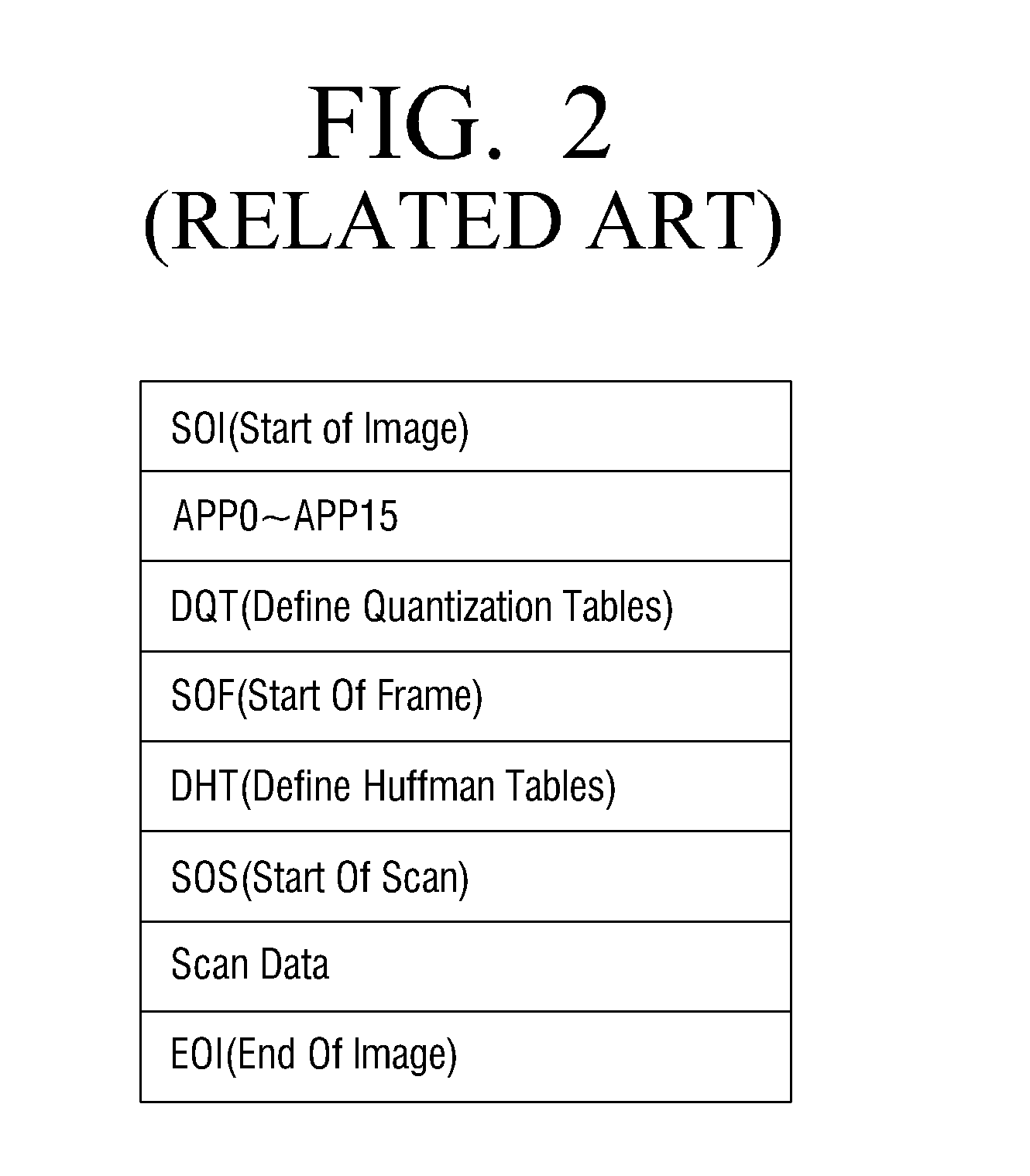

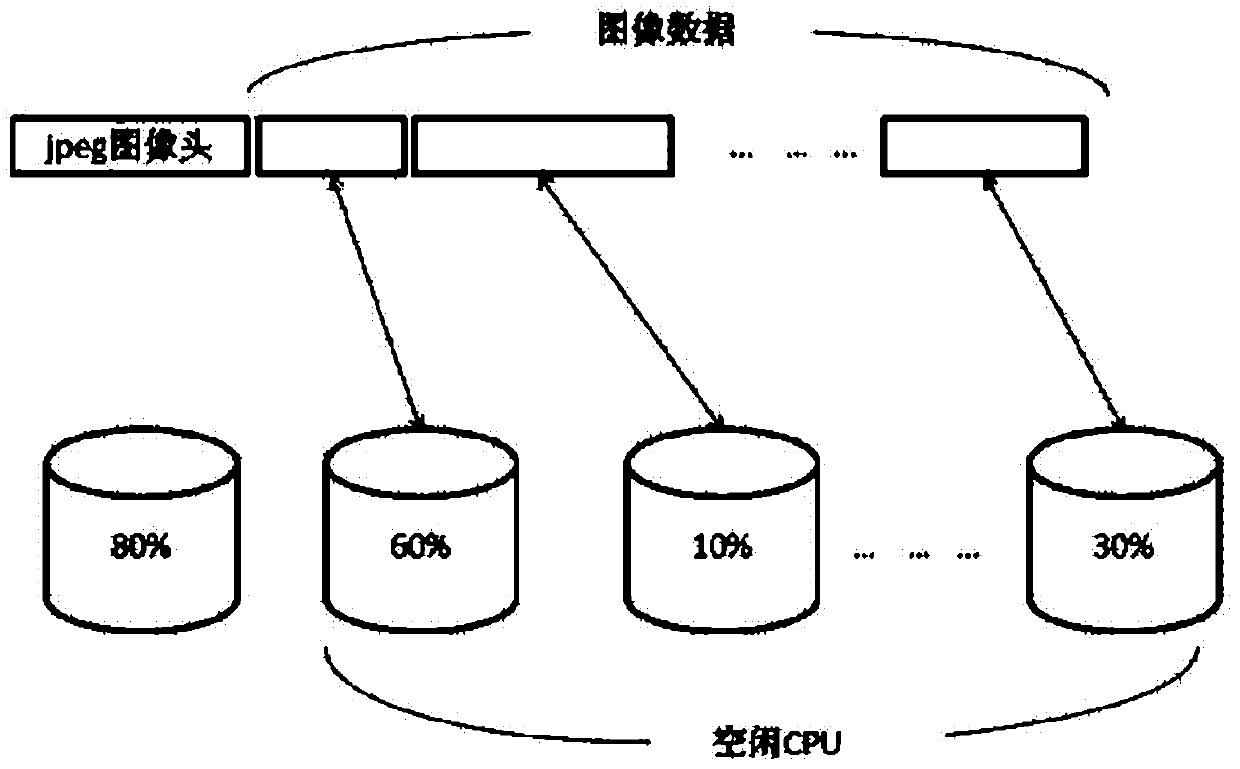

Method and apparatus for generating JPEG files suitable for parallel decoding

Inactive | US20120155767A1 | Improve speed; Valid confirmation; Character and pattern recognition; Digital video signal modification; JPEG; Entropy encoding

Owner: SAMSUNG ELECTRONICS CO LTD

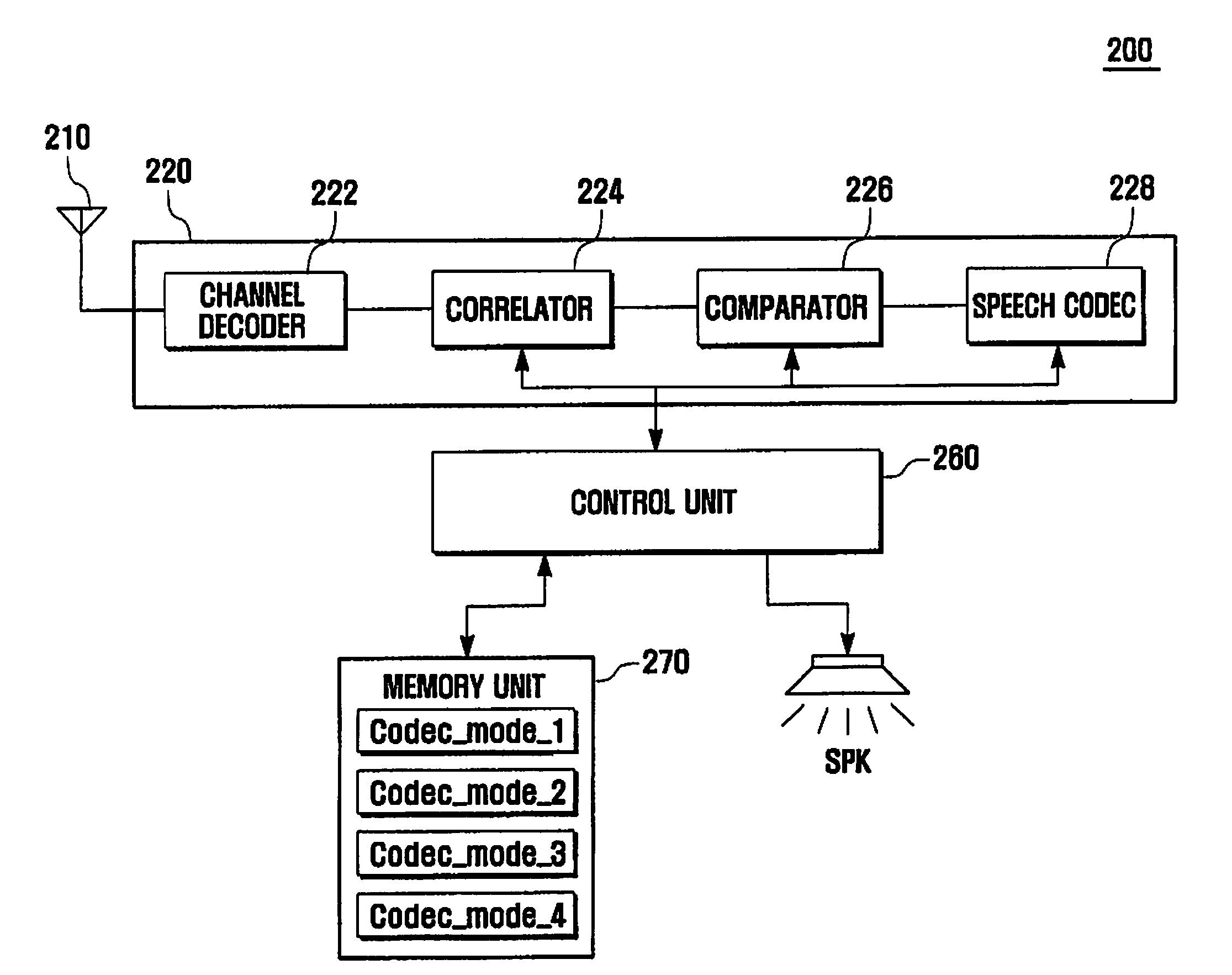

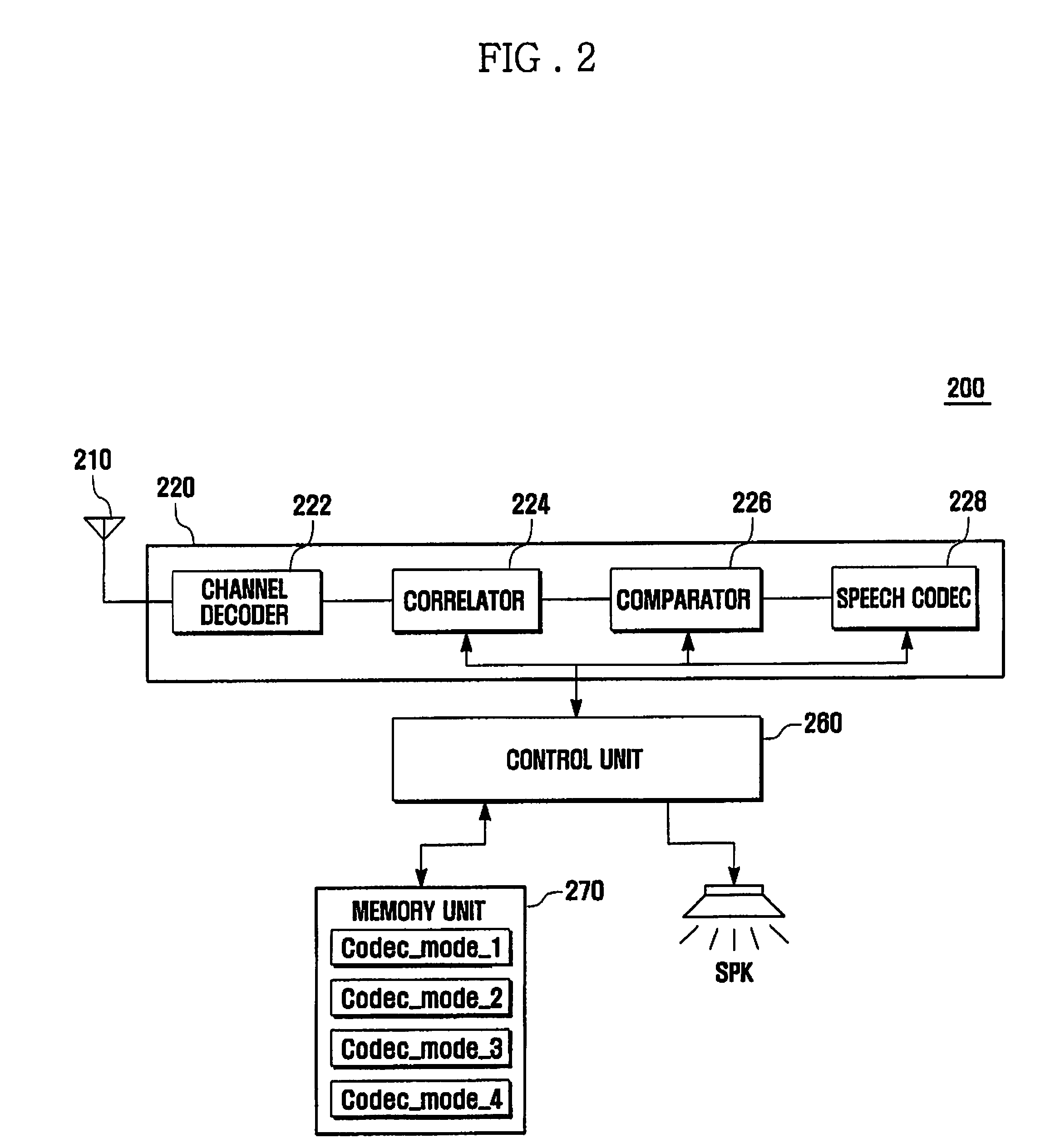

Codec mode decoding method and apparatus for adaptive multi-rate system

Inactive | US20080140392A1 | Improve decoding speed; Memory utilization; Receiver specific arrangements; Speech analysis; Decoding methods; Communications system

A codec mode decoding apparatus and method for an adaptive multi-rate (AMR) communication system that increase decoding speed and optimize memory utilization. The codec mode decoding method includes: receiving data encoded with an adaptive multi-rate scheme; extracting a bit value from an informative region of the data through channel decoding; computing correlation values between the bit value and at least two codec modes; selecting, as the adapted codec mode, the codec mode whose correlation value is the maximum-likelihood value; activating a first codec corresponding to the adapted codec mode; and decoding the data with the first codec.

Owner: SAMSUNG ELECTRONICS CO LTD
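
The mode-selection step can be sketched as a maximum-correlation search. Assumptions: the channel decoder outputs soft values with a BPSK-style mapping (positive means bit 0), each codec mode is identified by a short codeword, and the codewords and codec callables used with it are hypothetical rather than the AMR standard's actual tables.

```python
def select_codec_mode(soft_bits, codewords):
    """Pick the codec mode whose codeword correlates best with the received soft bits.

    soft_bits: channel-decoder outputs, positive ~ bit 0, negative ~ bit 1.
    codewords: {mode: tuple of bits} (hypothetical values in this sketch).
    """
    def correlation(bits):
        # Map bit 0 -> +1 and bit 1 -> -1, then take the dot product with the soft values.
        return sum(r * (1 - 2 * b) for r, b in zip(soft_bits, bits))
    return max(codewords, key=lambda mode: correlation(codewords[mode]))


def decode_frame(soft_bits, payload, codewords, codecs):
    """Activate the codec for the detected mode and decode the payload with it."""
    mode = select_codec_mode(soft_bits, codewords)
    return mode, codecs[mode](payload)
```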

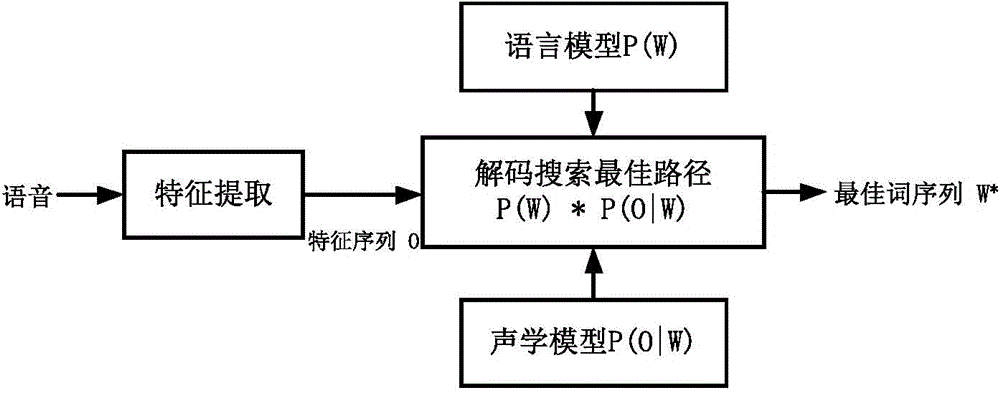

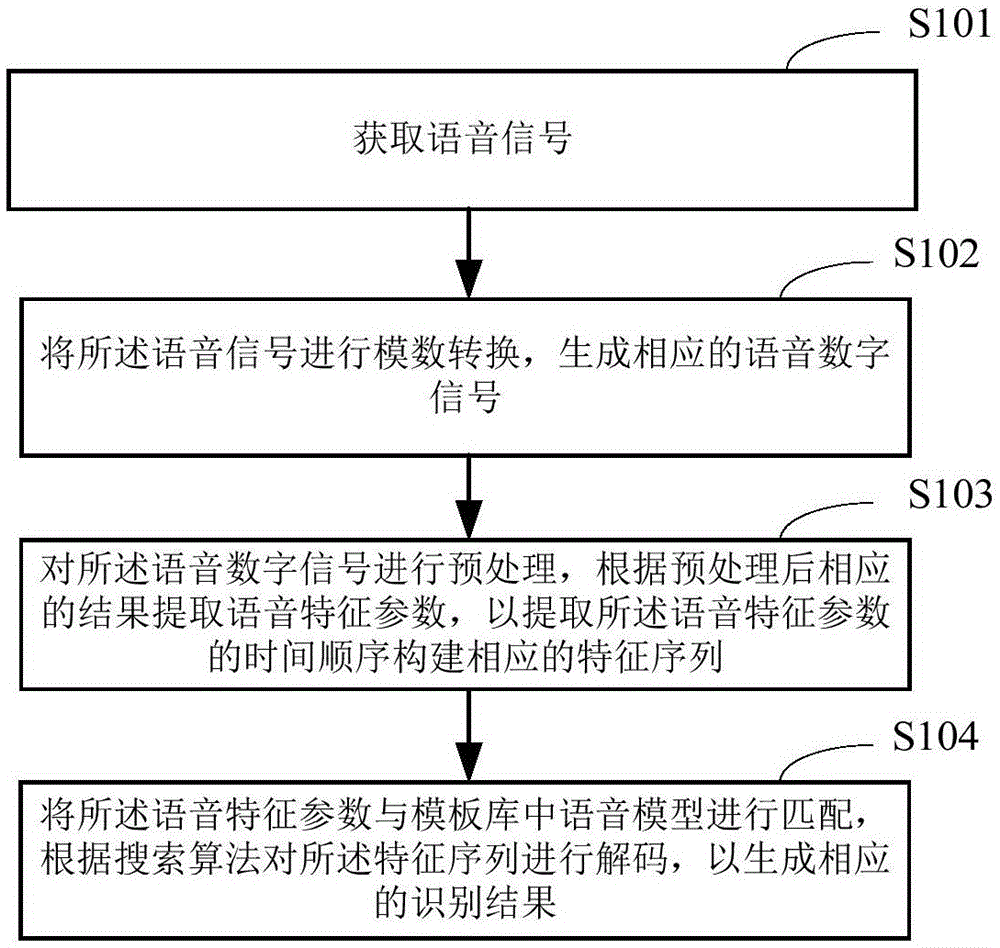

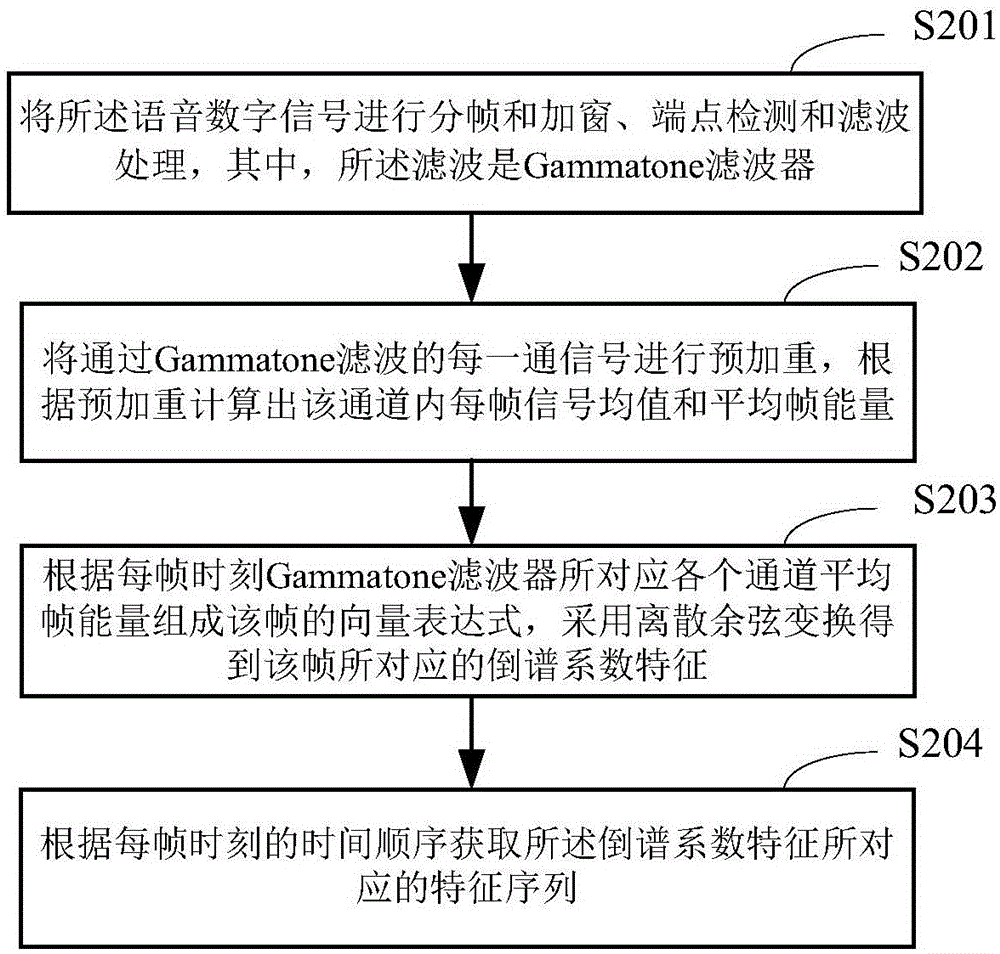

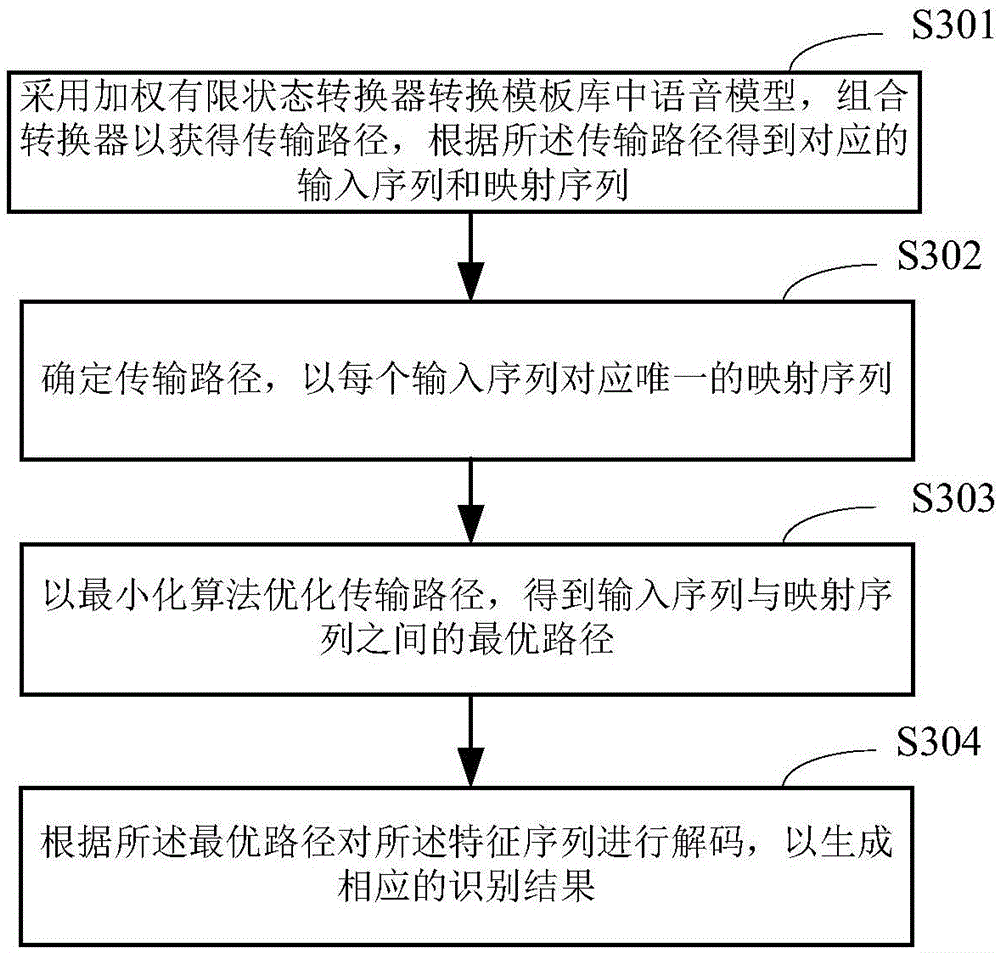

Speech recognition method and system

Inactive | CN105118501A | Improve Noise Robustness; Small amount of calculation; Speech recognition; Chronological time; Speech identification

The invention belongs to the field of speech recognition technology and relates to a speech recognition method and system. The method includes the following steps: acquiring speech signals; performing analog-to-digital conversion on the speech signals to generate corresponding digital speech signals; preprocessing the digital speech signals, extracting speech feature parameters from the preprocessing results, and constructing a feature sequence from the speech feature parameters in the time order in which they were extracted; and matching the speech feature parameters against the speech models in a template library and decoding the feature sequence with a search algorithm to generate a recognition result. The method extracts time-domain GFCC (gammatone frequency cepstral coefficient) features instead of frequency-domain MFCC (mel-frequency cepstral coefficient) features and applies a DCT, which reduces computation and improves computation speed and robustness; it also builds the decoding model with a weighted finite-state transducer and additionally smooths and compresses the model, which increases decoding speed.

Owner: 徐洋 (Xu Yang)
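
The DCT step mentioned above turns log filterbank energies into cepstral coefficients. A minimal sketch of the (unnormalized) DCT-II, assuming the gammatone filterbank energies for one frame have already been computed; the filterbank itself and the patent's time-domain GFCC extraction are not shown.

```python
import math


def cepstral_coefficients(band_energies, num_coeffs=13):
    """Unnormalized DCT-II of the log filterbank energies for one frame.

    c[n] = sum_k log(E_k) * cos(pi * n * (k + 0.5) / K)
    """
    logs = [math.log(max(e, 1e-10)) for e in band_energies]   # floor avoids log(0)
    k_total = len(logs)
    return [sum(v * math.cos(math.pi * n * (k + 0.5) / k_total) for k, v in enumerate(logs))
            for n in range(num_coeffs)]
```

A flat spectrum puts all its energy in `c[0]` (the sum of the log energies), and the higher coefficients describe the spectral envelope's shape, which is why a few of them suffice as features.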

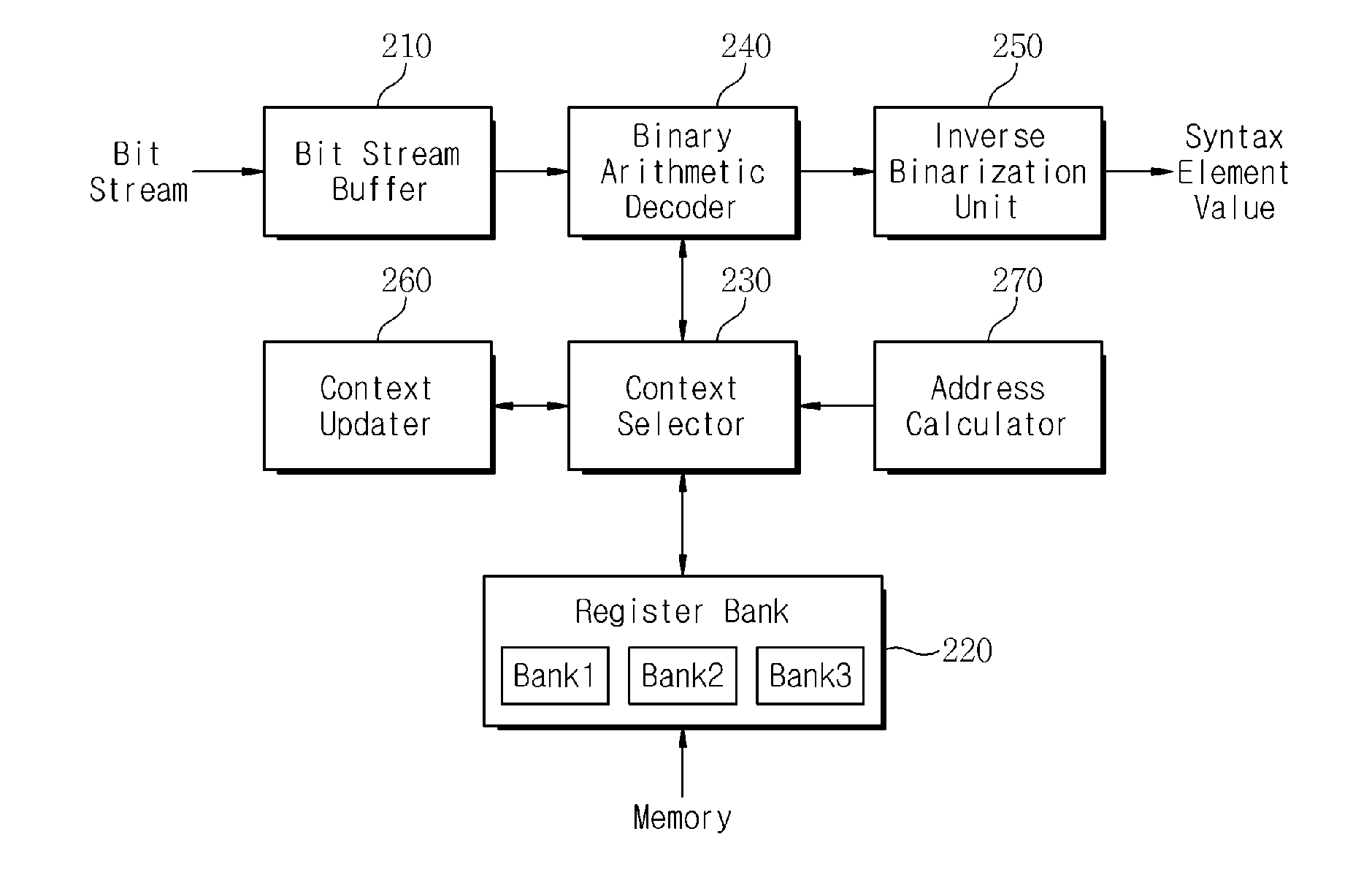

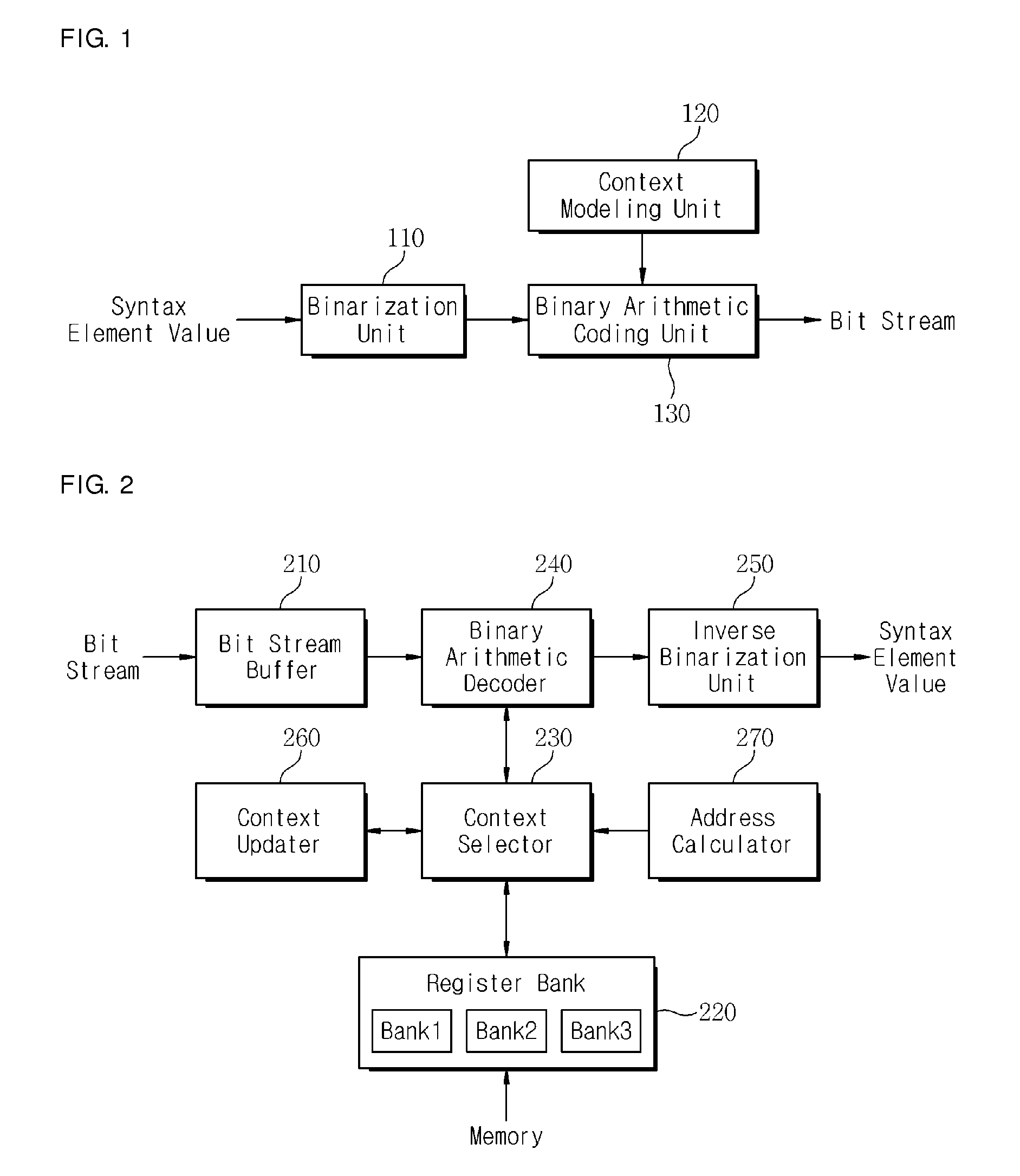

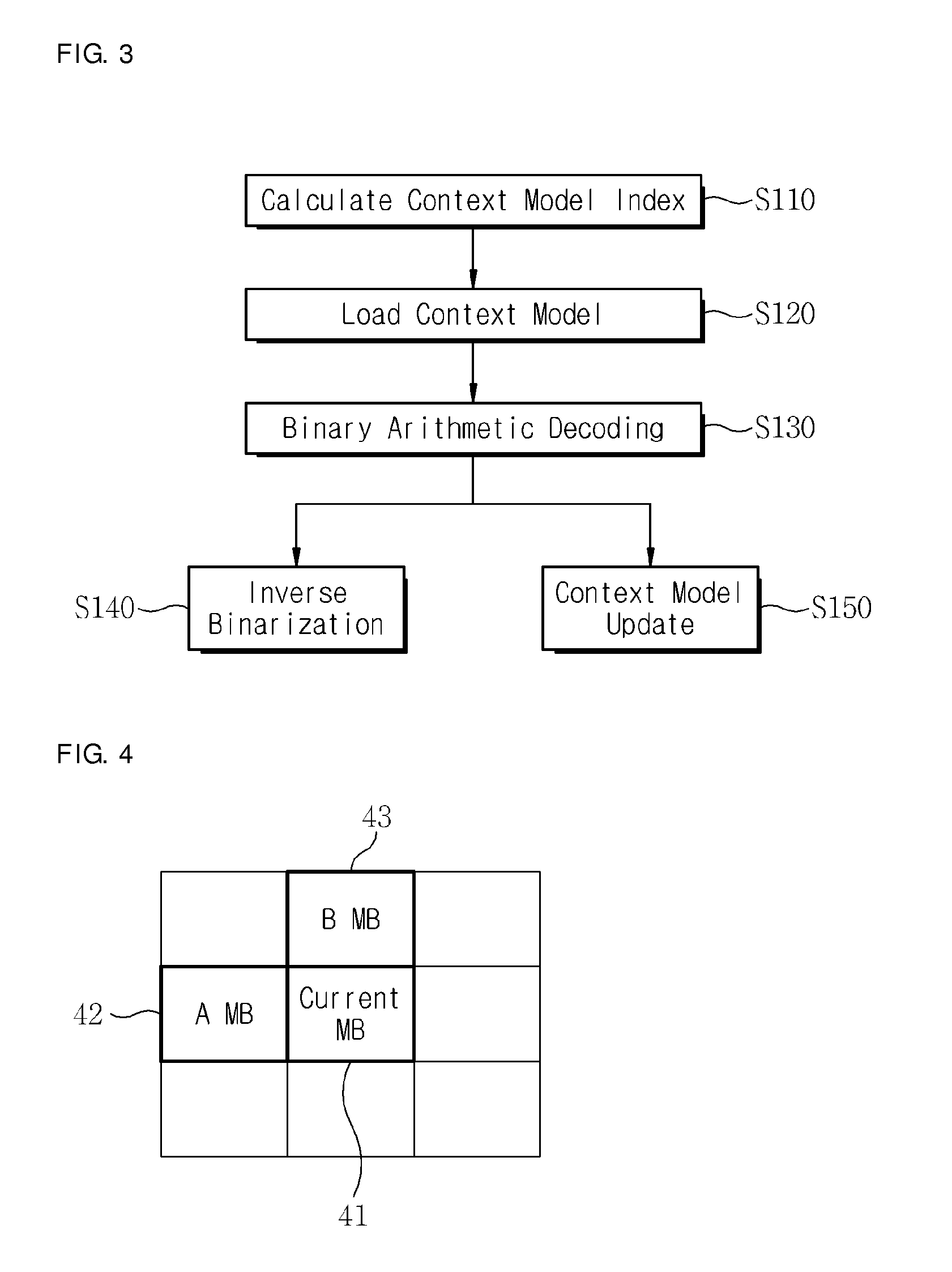

Decoding device for context-based adaptive binary arithmetic coding (CABAC) technique

Active | US8319672B2 | Improve decoding speed; Improve performance; Code conversion; Arithmetic coding; Context based

A decoding device for the context-based adaptive binary arithmetic coding (CABAC) technique that allows real-time decoding of high-definition video. When the device is implemented in hardware, decisions that depend on multiple conditions are made by logic circuits, so the conditions can be evaluated simultaneously. In addition, when decoding of a macroblock starts, the device reads all the information about the neighboring macroblocks from memory at once and stores it. The device therefore does not need to access memory for every operation, which improves the overall decoding speed.

Owner: KOREA ELECTRONICS TECH INST

Fast multivariate (non-binary) LDPC code decoding method with low decoding complexity

Active | CN107863972A | Save storage space; Good effect; Error correction/detection using multiple parity bits; Code conversion; Fast Fourier transform; Round complexity

The invention discloses a fast, low-complexity decoding method for multivariate (non-binary) LDPC codes, in the technical field of channel coding for mobile communication. When storing decoding messages, the method stores only the messages corresponding to the positions of the non-zero elements of the check matrix H; because H is sparse, this greatly reduces the storage occupied by decoding messages in both software and hardware implementations. During decoding, pre-computed information about the non-zero elements of H (their row numbers, column numbers, and element values) speeds up addressing of the decoding messages; in each decoding iteration, the fast Fourier transform is also used to reduce the complexity of check node message processing, which further accelerates decoding. The method thus has relatively low decoding complexity, requires little storage, and can substantially increase decoding speed.

Owner: SHANDONG UNIV
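
The storage scheme can be sketched by keeping only the non-zero entries of H as an edge list with precomputed row and column indices; decoding messages then live in arrays indexed by edge, one slot per non-zero entry instead of one per matrix cell. The sketch works over GF(4) with a hand-written multiplication table and only performs a syndrome check; the FFT-based check-node update is not shown, and the example matrix is hypothetical.

```python
# Multiplication table for GF(4) = {0, 1, a, a+1} encoded as 0..3, with a^2 = a + 1.
# Addition in this encoding is bitwise XOR.
GF4_MUL = [[0, 0, 0, 0],
           [0, 1, 2, 3],
           [0, 2, 3, 1],
           [0, 3, 1, 2]]


def sparse_check_matrix(H):
    """Keep only non-zero entries of H as edges (row, col, value), plus per-row and
    per-column edge indices for fast message addressing."""
    edges = [(r, c, v) for r, row in enumerate(H) for c, v in enumerate(row) if v]
    row_edges = [[] for _ in H]
    col_edges = [[] for _ in H[0]]
    for e, (r, c, _) in enumerate(edges):
        row_edges[r].append(e)
        col_edges[c].append(e)
    return edges, row_edges, col_edges


def satisfies_checks(word, edges, row_edges):
    """Syndrome test over GF(4), touching only the stored non-zero entries."""
    for edge_ids in row_edges:
        acc = 0
        for e in edge_ids:
            _, c, v = edges[e]
            acc ^= GF4_MUL[v][word[c]]
        if acc:
            return False
    return True
```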

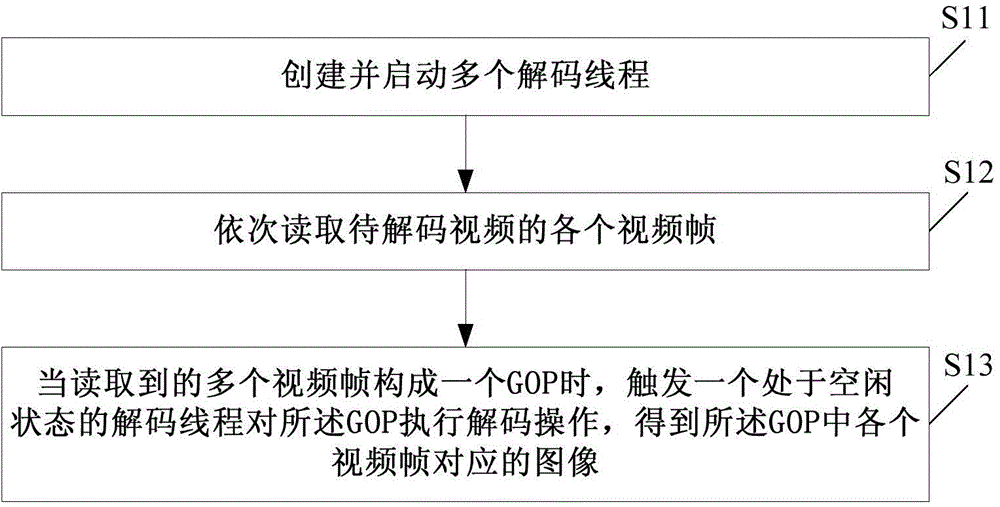

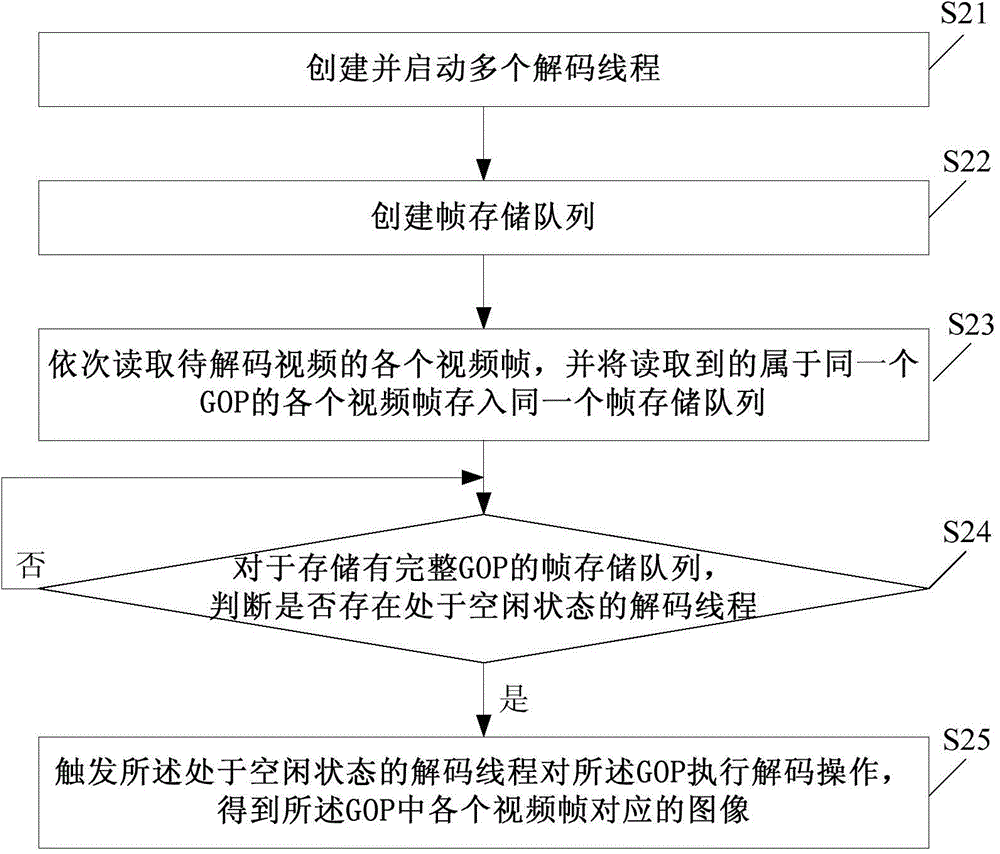

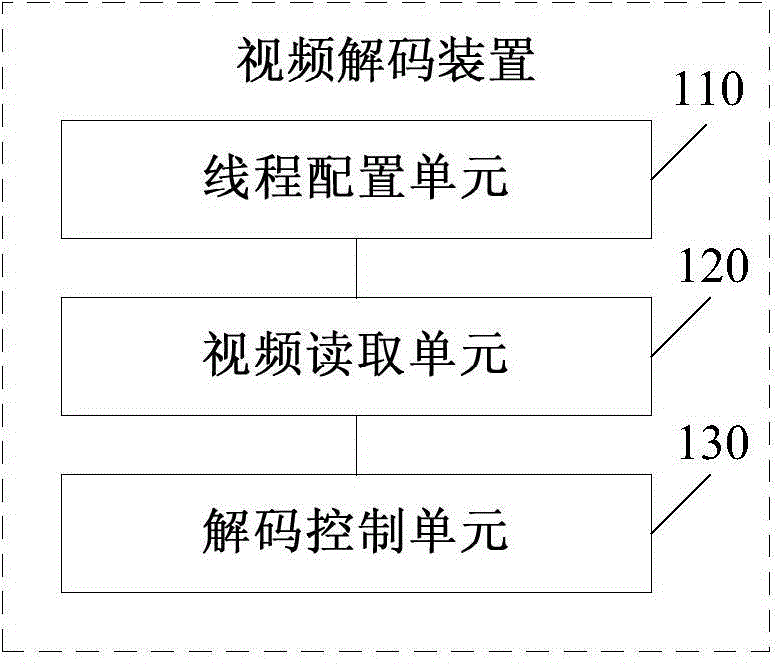

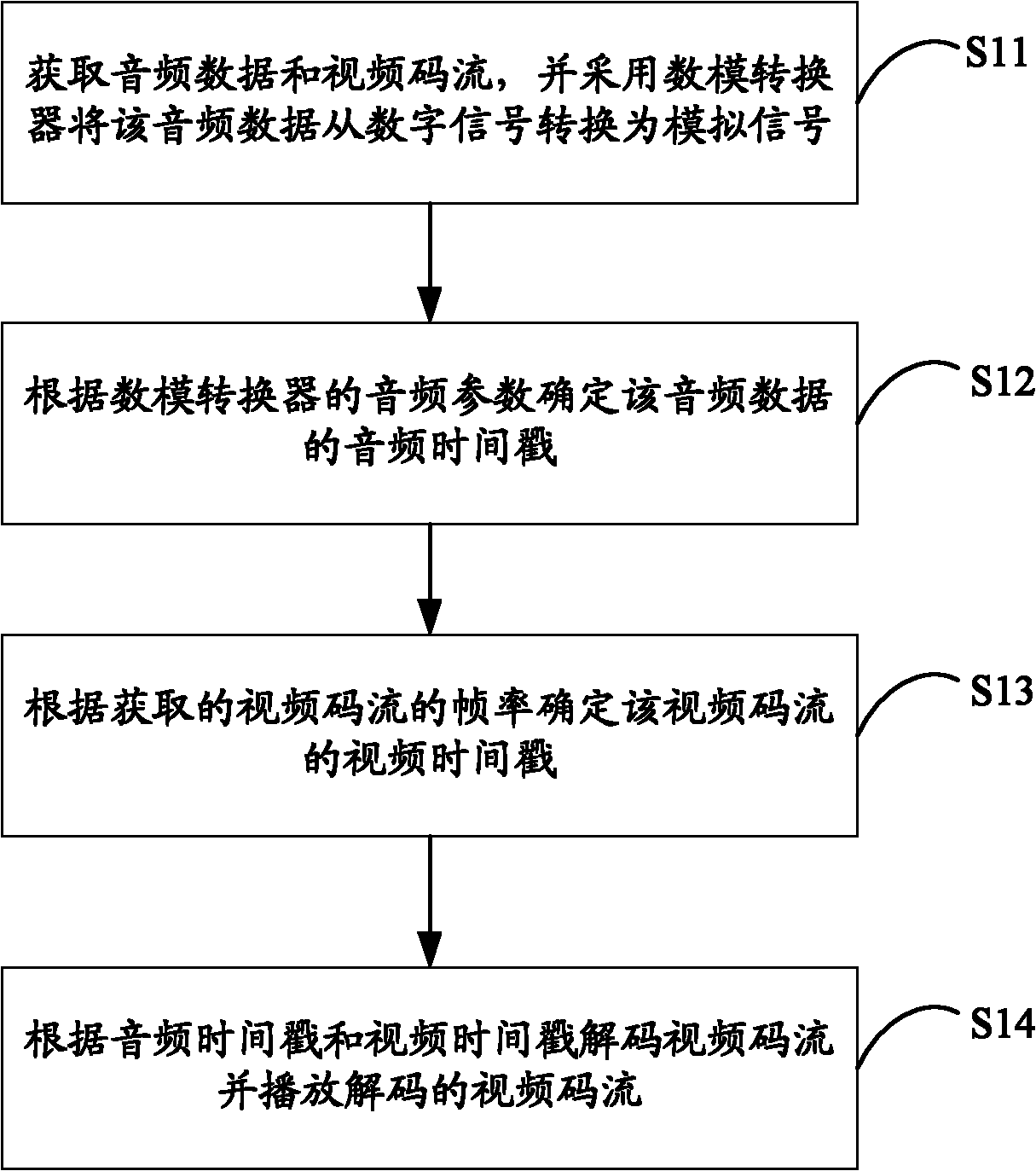

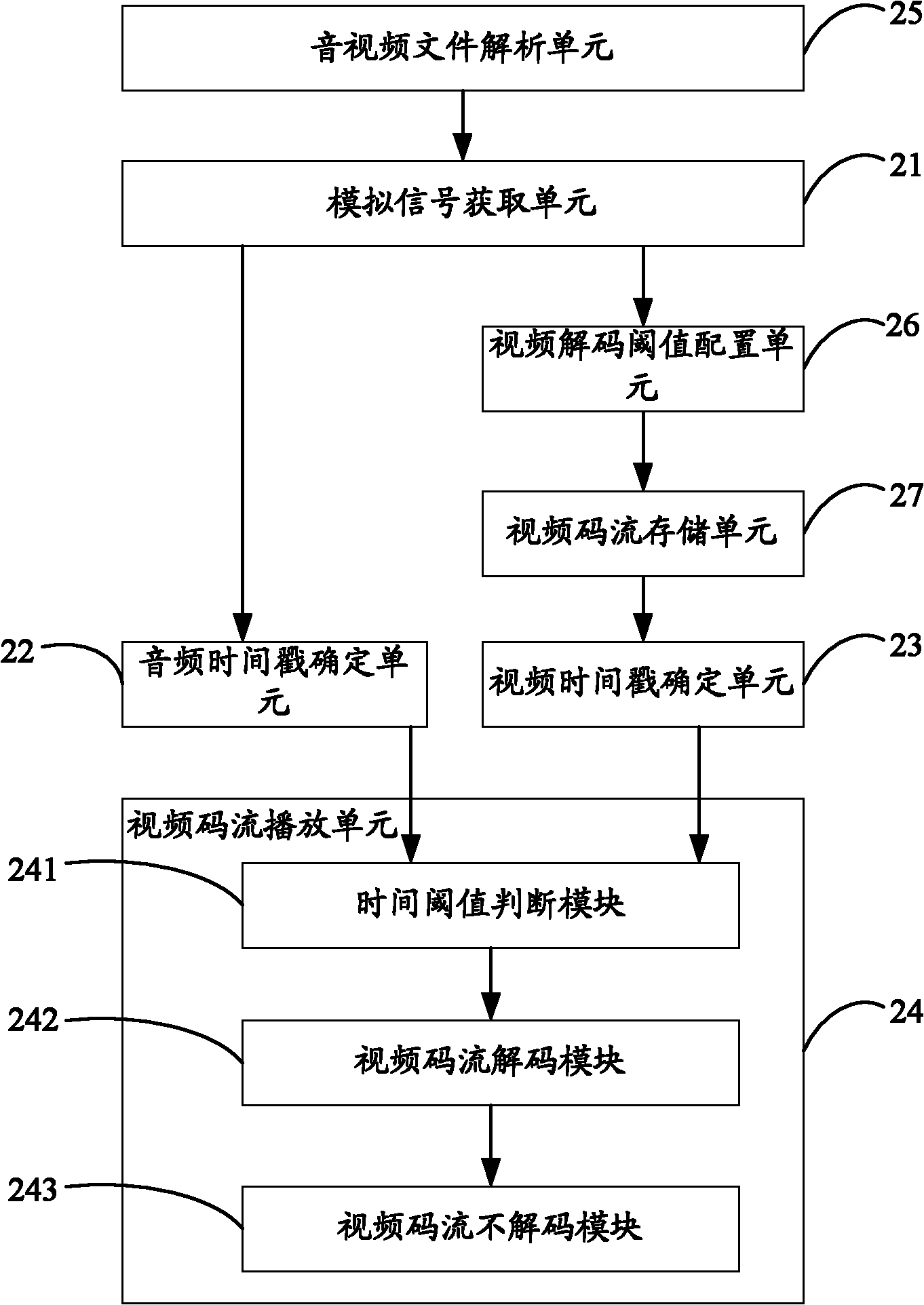

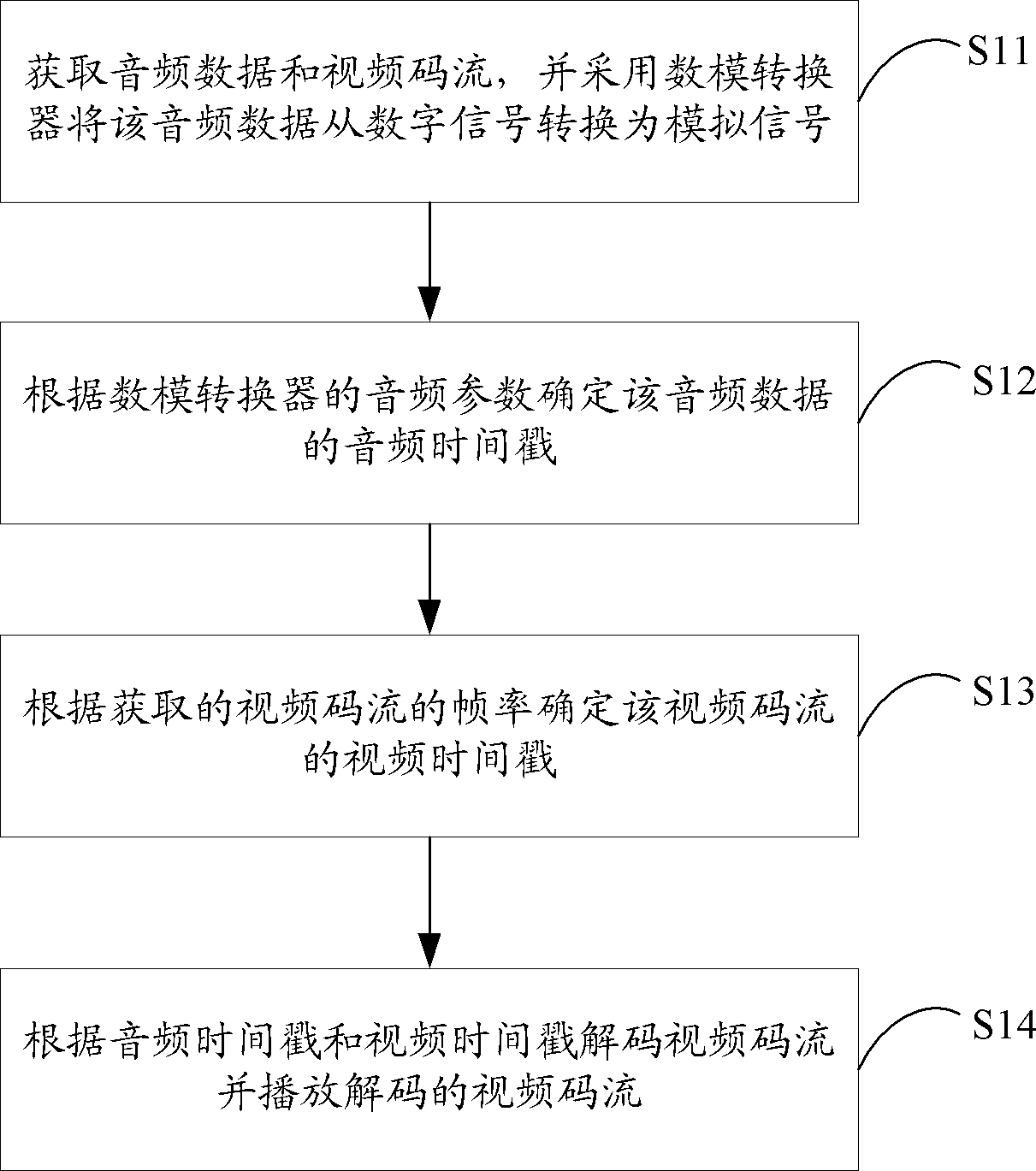

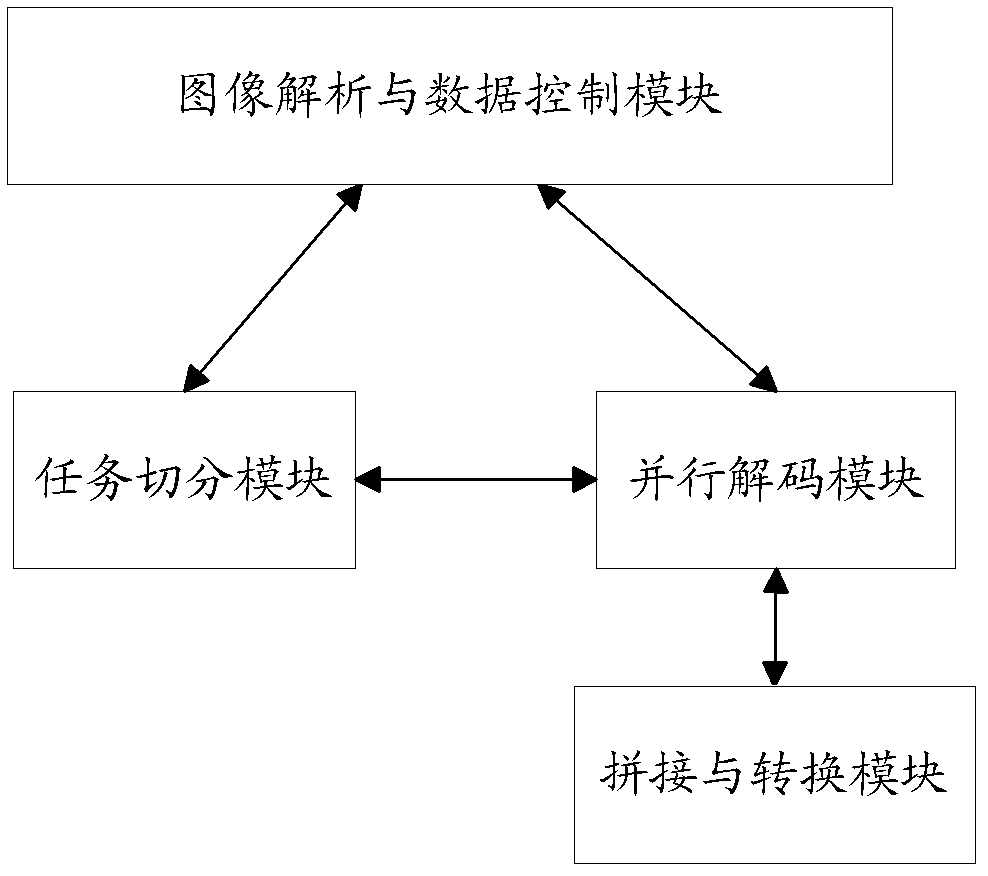

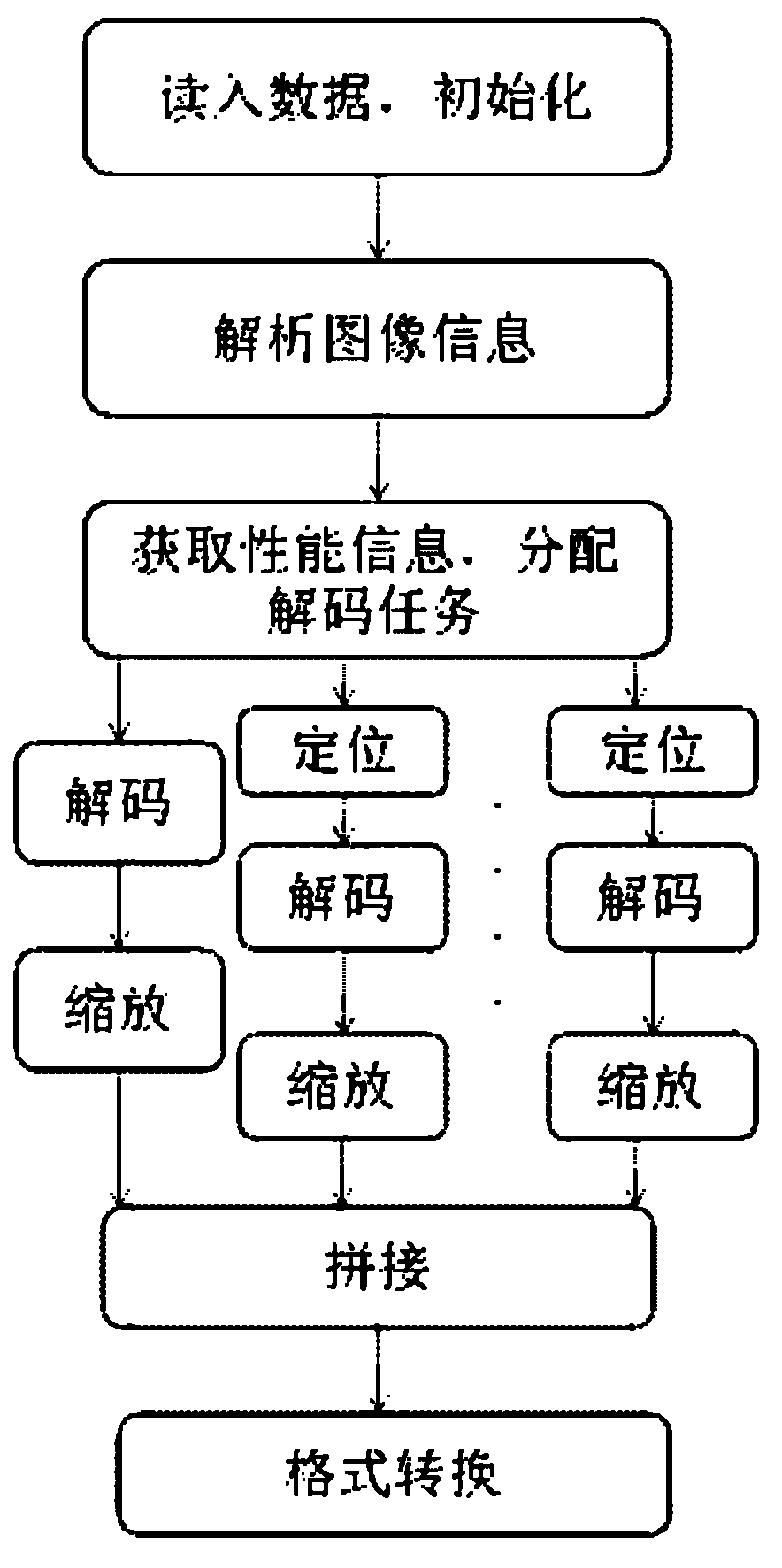

Video decoding method and device and terminal device

Inactive | CN105992005A | Realize online play; Improve decoding speed; Digital video signal modification; Selective content distribution; Terminal equipment; Video decoding

The invention discloses a video decoding method and device and a terminal device. Multiple decoding threads are created and started; the threads are mutually independent and run concurrently, so multiple groups of pictures (GOPs) can be decoded at the same time. Compared with existing serial decoding, decoding speed is increased several-fold, so large high-definition video files can be played online without stuttering.

Owner: GUANGZHOU UCWEB COMP TECH CO LTD
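
GOP-level parallel decoding can be sketched with a thread pool. Assumptions: GOPs are closed (no frame references across GOP boundaries), and `decode_gop` is a hypothetical stand-in for a real decoder. In CPython, threads only decode in parallel if that work happens in native code that releases the GIL; otherwise a process pool is the usual substitute.

```python
from concurrent.futures import ThreadPoolExecutor


def decode_gop(gop):
    """Hypothetical placeholder for a real GOP decoder; here it only tags each frame."""
    return [f"decoded:{frame}" for frame in gop]


def decode_parallel(gops, workers=4):
    """Decode independent GOPs concurrently; pool.map returns results in input order."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        decoded = list(pool.map(decode_gop, gops))
    return [frame for gop in decoded for frame in gop]   # restore display order
```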

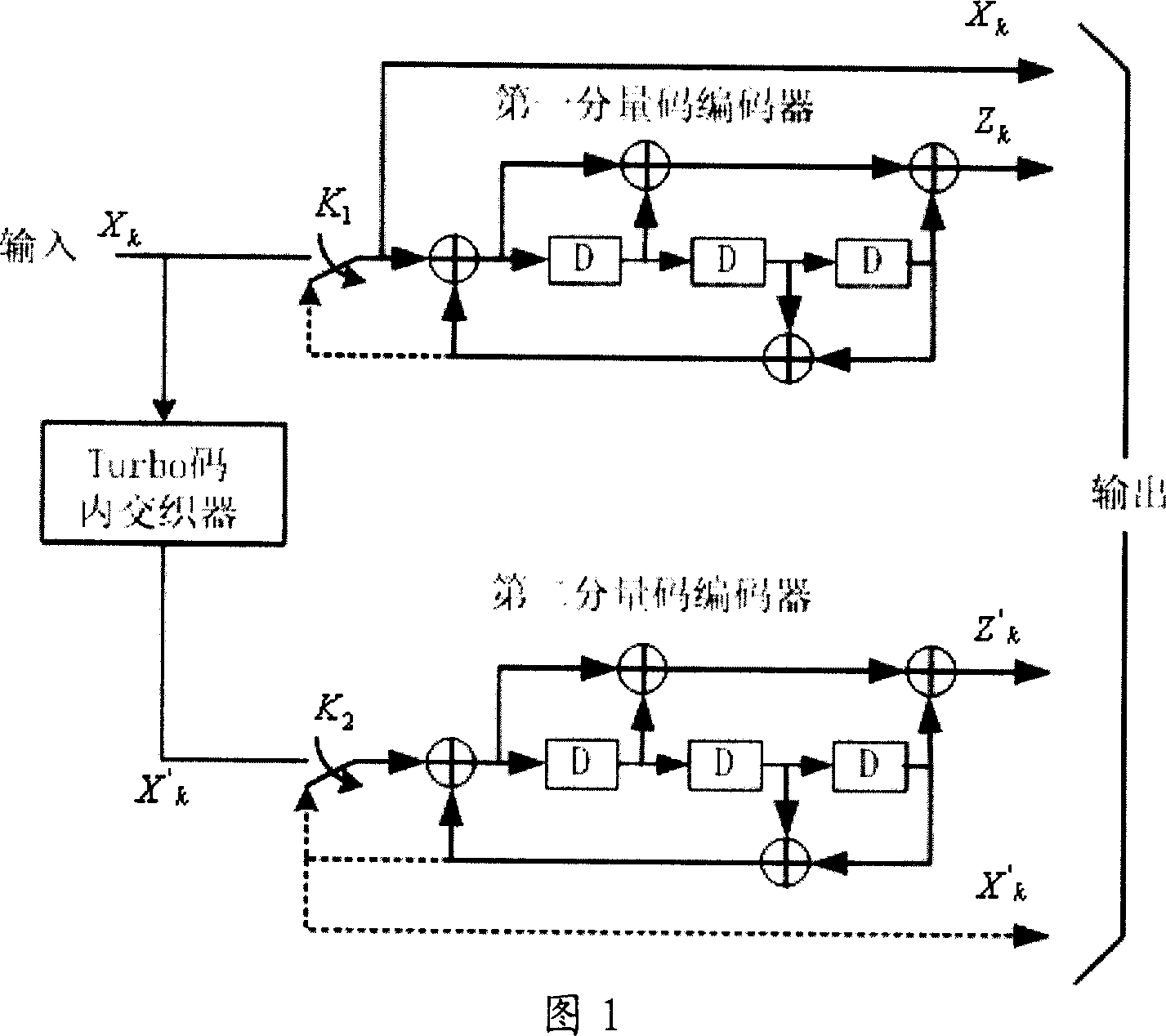

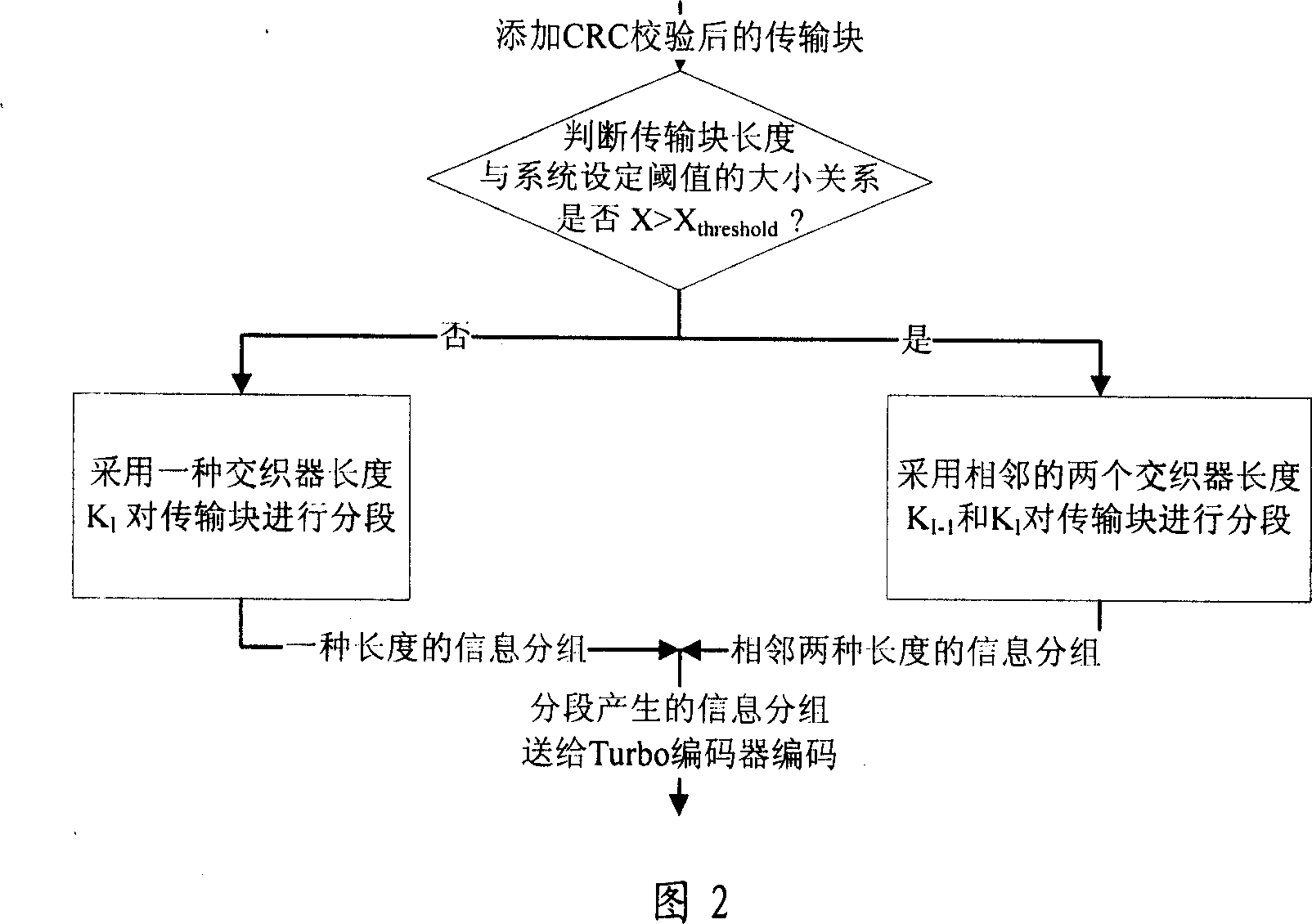

A Turbo code transmission block segmenting method

Inactive | CN101060481A | Reduce the number; Reduce in quantity; Error prevention; Error correction/detection using interleaving techniques; Round complexity; Turbo coded

The disclosed Turbo code segmentation method comprises: determining whether the transmission block length exceeds a system-configured threshold; if it is equal to or less than the threshold, segmenting with interleaver length K_I; otherwise, segmenting with the adjacent interleaver lengths K_(I-1) and K_I. The invention maintains system performance while reducing the number of filler bits, and lowers complexity and latency.

Owner: ZTE CORP
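
The abstract does not give its threshold or interleaver-size table, so the sketch below substitutes the code block segmentation rule of 3GPP TS 36.212 (LTE), which has the same shape: one interleaver size when a single block suffices, otherwise a mix of two adjacent sizes K+ and K- chosen to keep filler bits small. It illustrates the idea and is not the patent's exact rule.

```python
from bisect import bisect_left

Z = 6144          # maximum code block size in TS 36.212
CRC_BITS = 24     # per-code-block CRC added when a block is split


def turbo_interleaver_sizes():
    """The 188 QPP interleaver sizes K of 3GPP TS 36.212 Table 5.1.3-3."""
    return (list(range(40, 513, 8)) + list(range(528, 1025, 16))
            + list(range(1056, 2049, 32)) + list(range(2112, 6145, 64)))


def segment(b):
    """Return (C_plus, K_plus, C_minus, K_minus, filler_bits) for a b-bit block."""
    sizes = turbo_interleaver_sizes()
    if b <= Z:
        c, b_prime = 1, b                            # one block, no extra CRC
    else:
        c = -(-b // (Z - CRC_BITS))                  # ceiling division
        b_prime = b + c * CRC_BITS
    k_plus = sizes[bisect_left(sizes, -(-b_prime // c))]   # smallest K with c*K >= b'
    if c == 1:
        return 1, k_plus, 0, 0, k_plus - b_prime
    k_minus = sizes[sizes.index(k_plus) - 1]         # adjacent smaller interleaver size
    c_minus = (c * k_plus - b_prime) // (k_plus - k_minus)
    c_plus = c - c_minus
    return c_plus, k_plus, c_minus, k_minus, c_plus * k_plus + c_minus * k_minus - b_prime
```

For a 6145-bit block, two code blocks are needed; with a 24-bit CRC each, they are coded as one block of 3136 bits and one of 3072 bits, with 15 filler bits instead of the 79 that two 3136-bit blocks would need.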

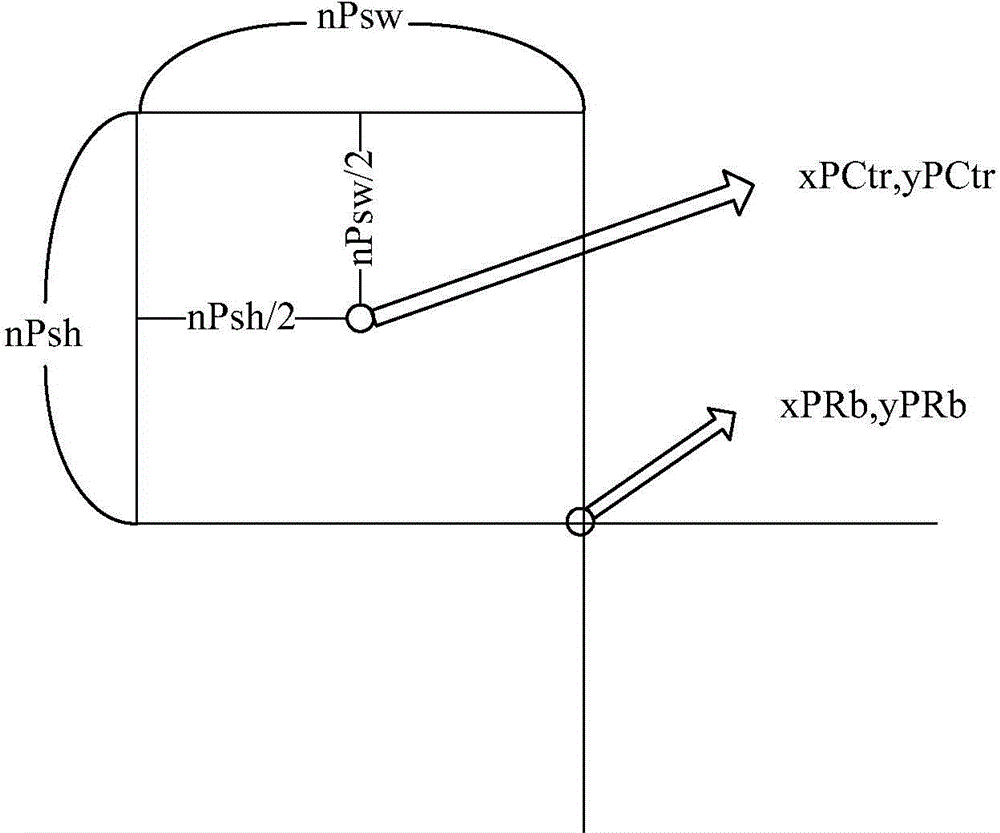

Method and terminal for playing video on low-end embedded products

Active | CN102595114A | Improve decoding speed; Reduce decoding complexity; Television systems; Selective content distribution; Computer hardware; Digital analog converter

The invention applies to the field of audio and video playback and provides a method and a terminal for playing video on low-end embedded products. The method comprises the following steps: obtaining audio data and a video bitstream, and converting the audio data from a digital to an analog signal with a digital-to-analog converter; determining the audio timestamp of the audio data from the audio parameters of the digital-to-analog converter; determining the video timestamp of the video bitstream from the frame rate of the obtained video bitstream; and decoding and playing the video bitstream according to the audio timestamp and the video timestamp. In the embodiment, the video bitstream, which is encoded in the lower-complexity MJPEG format, is decoded only when the absolute difference between the audio timestamp and the video timestamp is less than a preset time threshold, so that audio and video play synchronously.

Owner: ANYKA (GUANGZHOU) MICROELECTRONICS TECH CO LTD
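
The timestamp comparison can be sketched as follows. Assumptions: the audio clock is derived from the number of samples the DAC has played (`samples_played` is a hypothetical parameter), the 40 ms threshold is illustrative, and the wait/drop policy outside the threshold is an added assumption; the abstract only states that decoding happens inside the threshold.

```python
def audio_timestamp(samples_played, sample_rate):
    """Audio clock in seconds, from how many samples the DAC has consumed."""
    return samples_played / sample_rate


def video_timestamp(frame_index, frame_rate):
    """Presentation time of a frame in seconds, from the stream's frame rate."""
    return frame_index / frame_rate


def sync_action(audio_ts, video_ts, threshold=0.04):
    """Decode only when the clocks agree within `threshold` seconds; otherwise
    wait for audio (video early) or skip the frame (video late)."""
    diff = video_ts - audio_ts
    if abs(diff) < threshold:
        return "decode"
    return "wait" if diff > 0 else "drop"
```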

Video decoding data storage method and calculation method of motion vector data

Active | CN104811721A | Improve decoding efficiency; Reduce data volume; Digital video signal modification; Static random-access memory; Motion vector

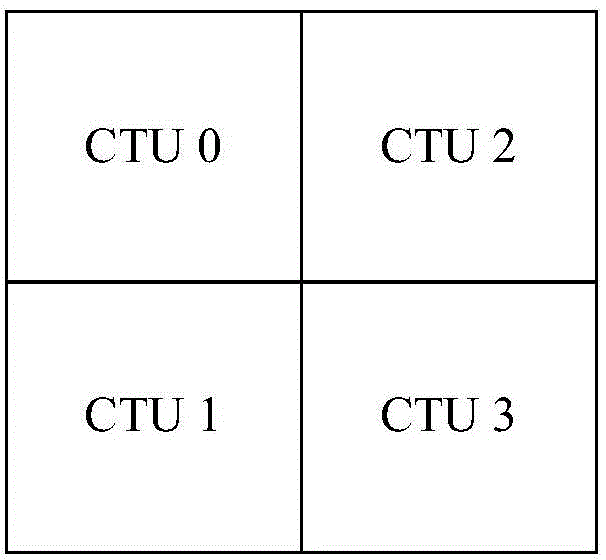

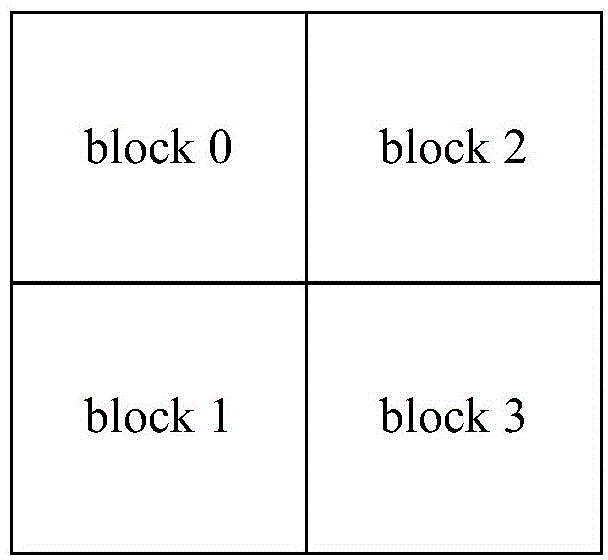

The invention provides a video decoding data storage method and a method for calculating motion vector data. The storage method includes: setting up a reference frame queue table in static random-access memory (SRAM) and storing basic information about multiple reference frames in it, each unit of the table storing the index information of one reference frame in the decoding buffer; storing, in the decoding buffer, multiple groups of frame buffer information corresponding to the index information, each group containing frame display order data and motion vector storage address information; and storing the motion vector data of each reference frame in dynamic random-access memory (DRAM), the motion vector storage address information being the DRAM address of the motion vector data of the coding tree unit of the corresponding block in the reference frame. The methods can improve video decoding efficiency and save the hardware cost and bandwidth consumed during video decoding.

Owner: ALLWINNER TECH CO LTD

JPEG image decoding method and decoder suitable for multi-core embedded platforms

Owner: SAMSUNG ELECTRONICS CHINA R&D CENT +1

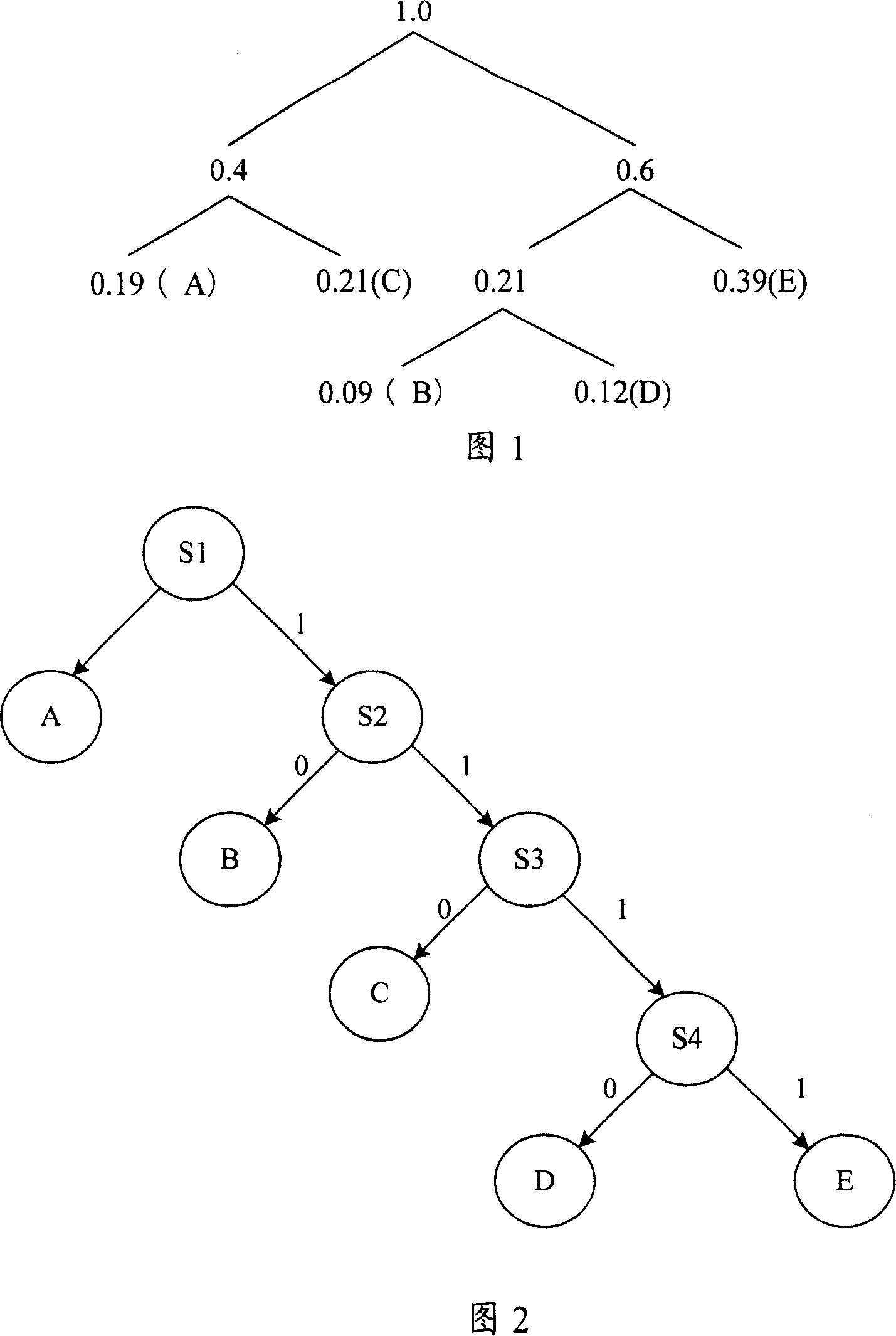

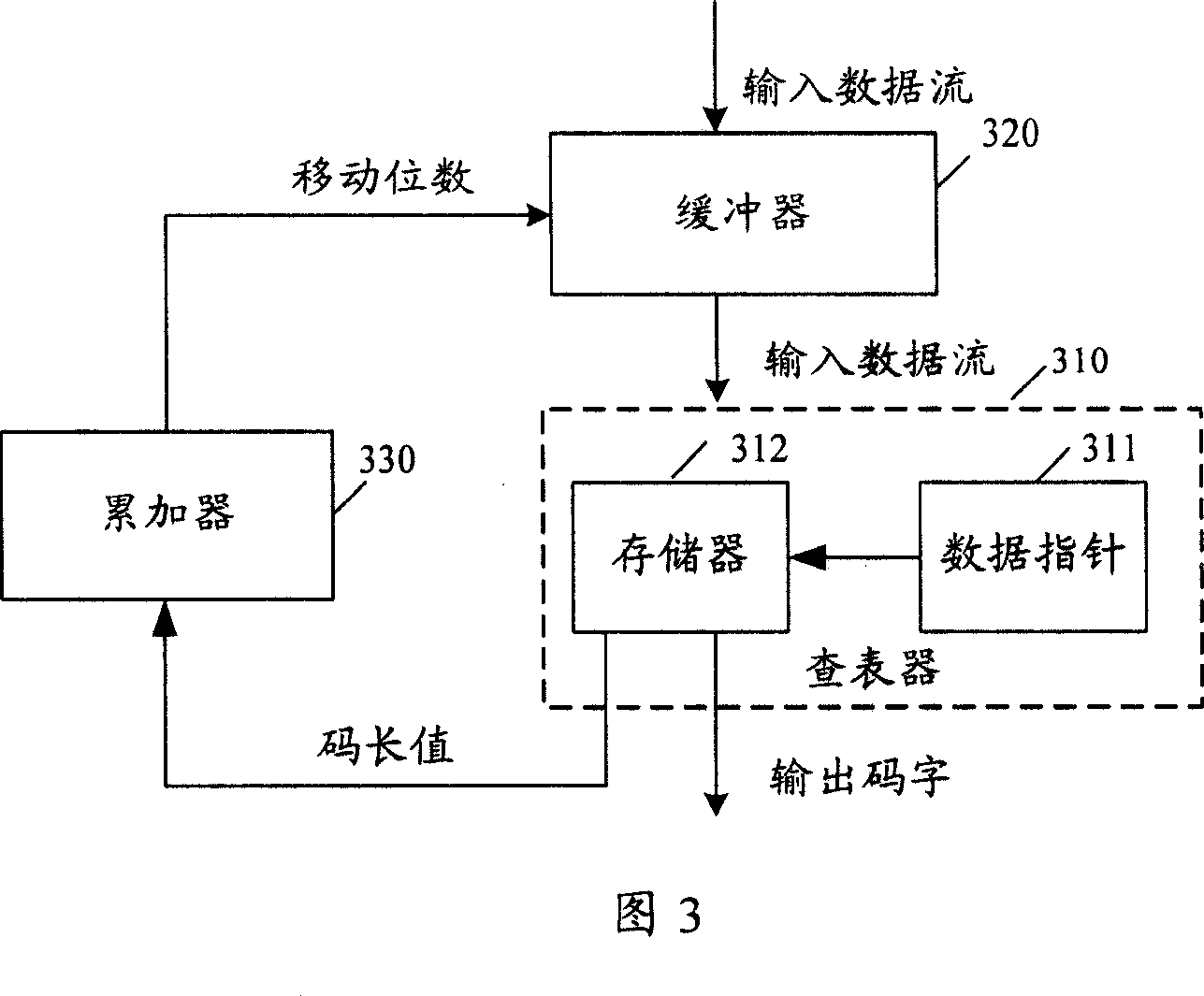

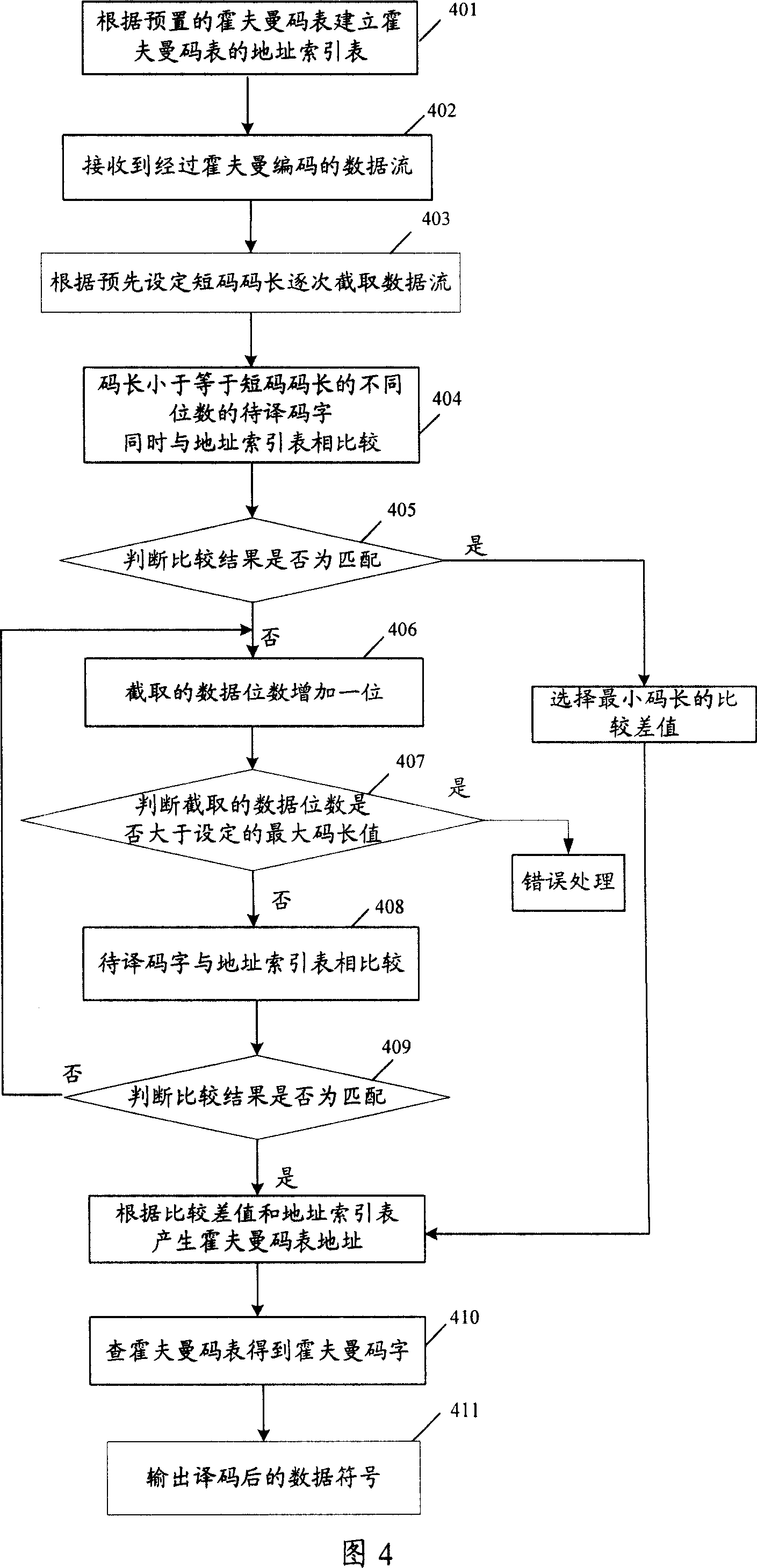

Method and device for realizing Huffman decoding

Inactive | CN101022552A | Encoding implementation; Improve decoding efficiency; Television systems; Digital video signal modification; Programming language; Data stream

A method for realizing Huffman decoding includes: receiving a Huffman-coded data stream; extracting bit strings of different lengths from the data stream as words to be decoded; comparing each word to be decoded with an address index table that indicates the positions of code words in the Huffman code table, to obtain the corresponding Huffman code table address; looking up the Huffman table at that address to obtain the original data symbol (before Huffman coding); and outputting the obtained data symbol. A device for implementing the method is also disclosed.

Owner: VIMICRO CORP

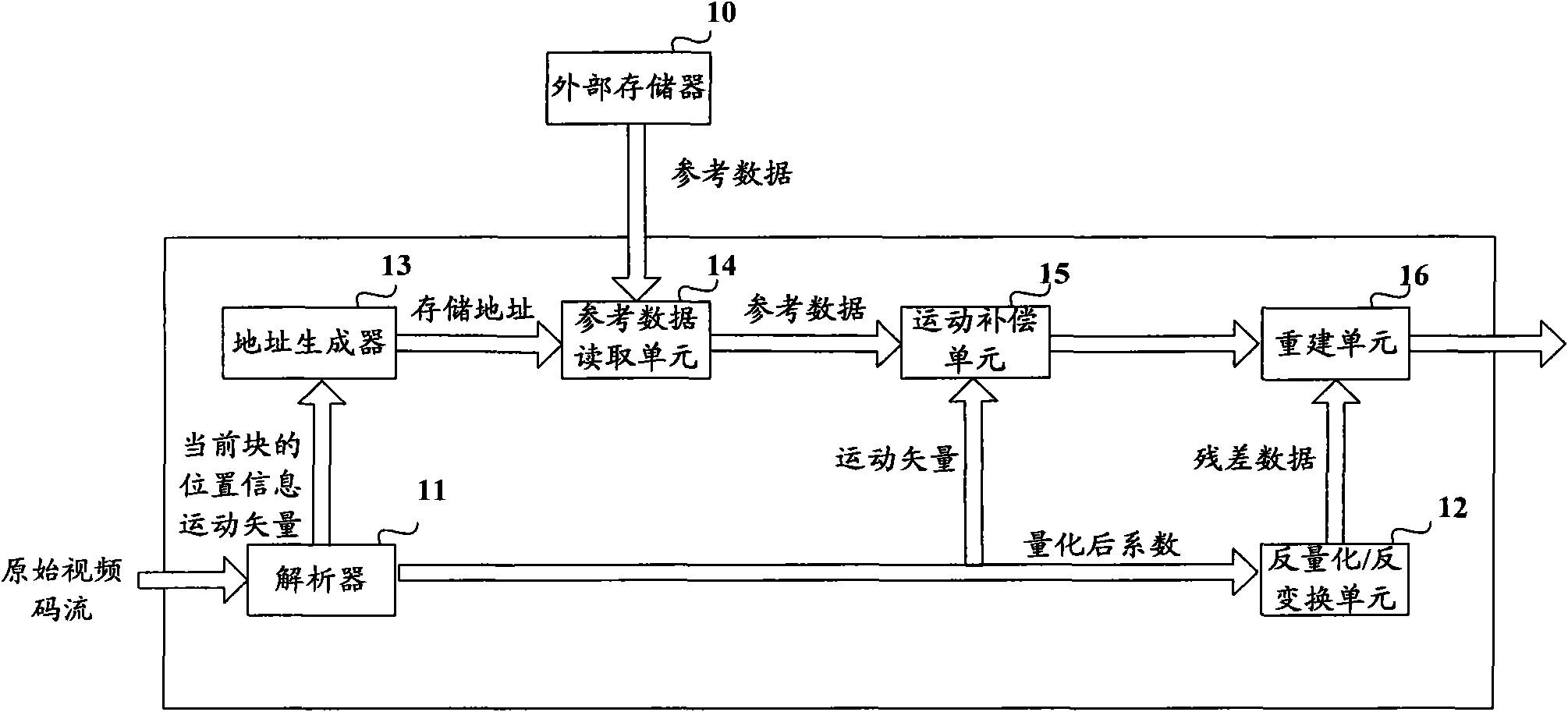

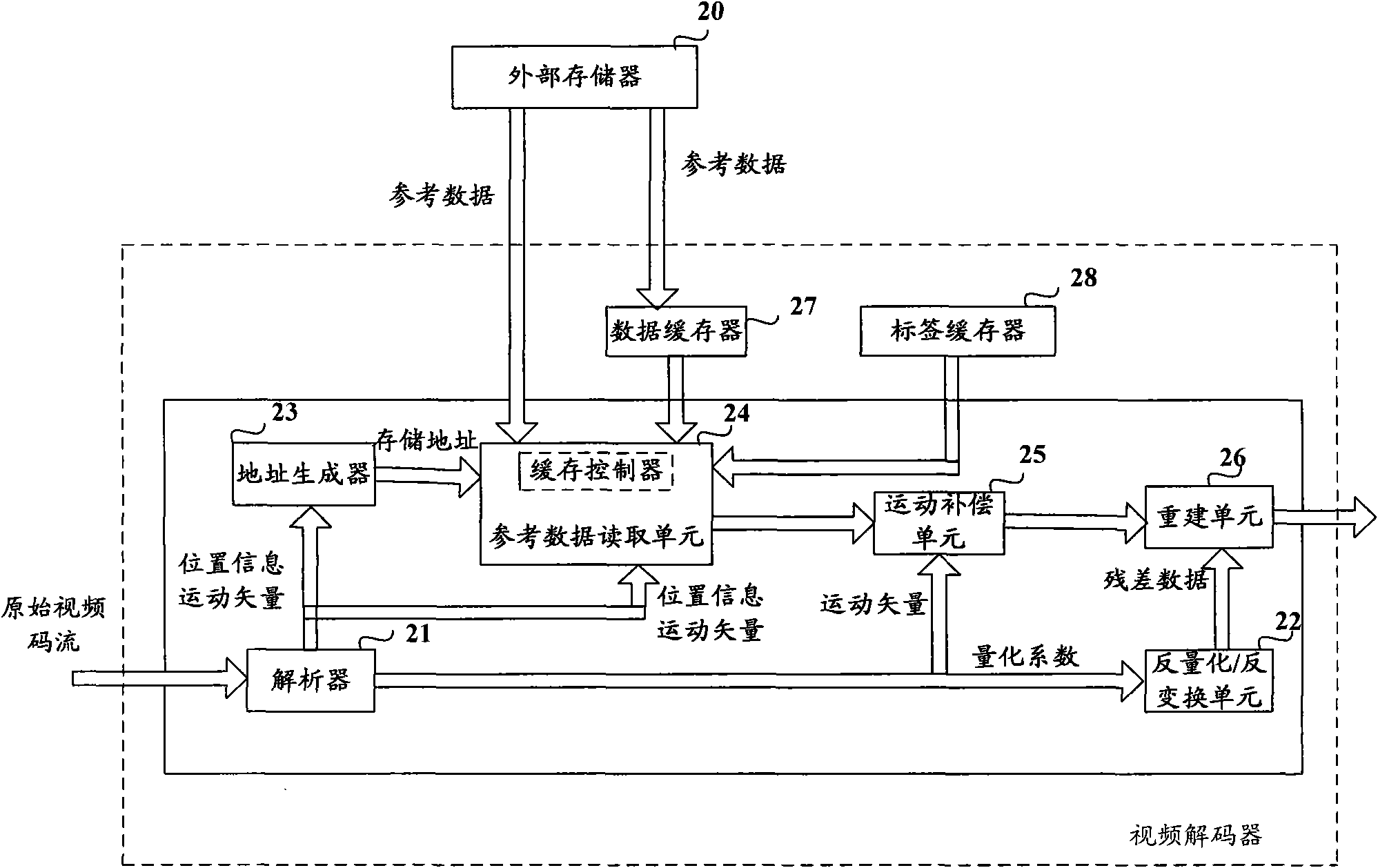

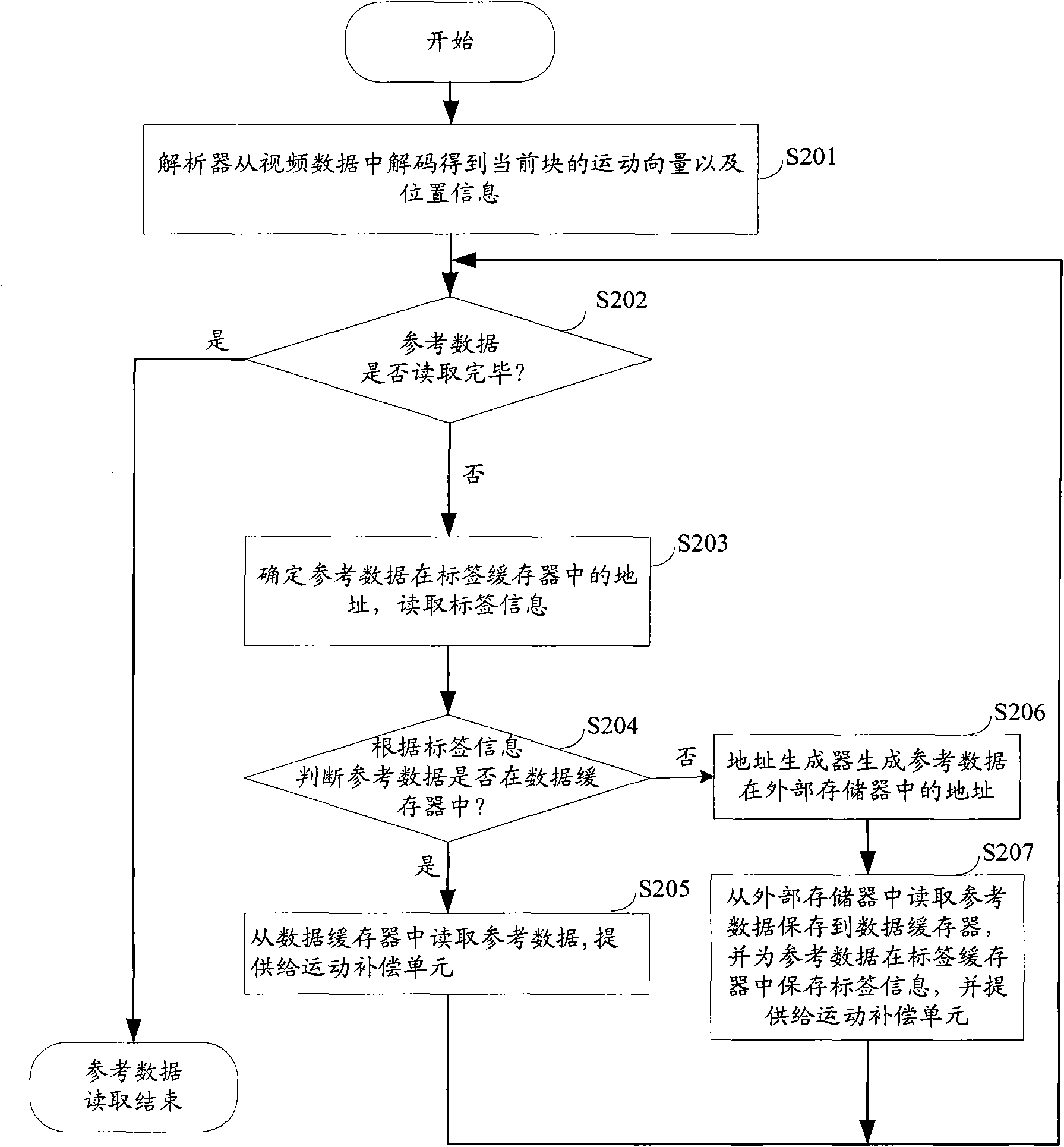

Video processing device and method

Active | CN102340662A | Reduce area; Save bandwidth; Television systems; Digital video signal modification; External storage; Video processing

The invention relates to the field of video processing, in particular to video decoding technology, and provides a video processing device and method for decoding data encoded with block-based predictive coding. The type and storage blocks of the data cache can be set flexibly according to the need to save external-memory bandwidth, which saves external-memory bandwidth and increases decoding speed.

Owner: ARTEK MICROELECTRONICS

© 2025 PatSnap. All rights reserved.