A Kinect-based object support relationship inference method

A technique for inferring support relationships between objects. It is applied in the field of scene analysis and addresses shortcomings of existing methods: they fail to reflect the relationships between objects, their accuracy is low, and their extraction is not comprehensive.

Embodiment

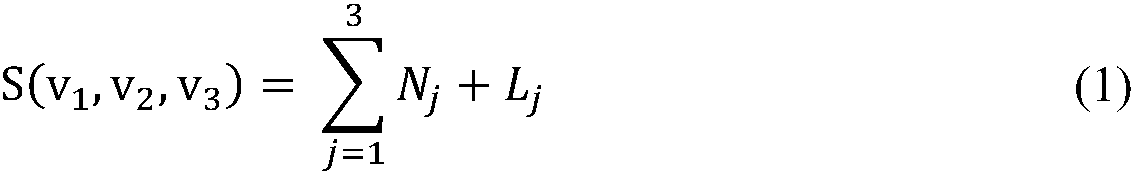

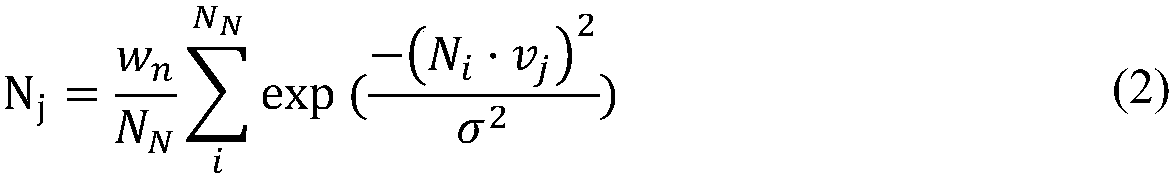

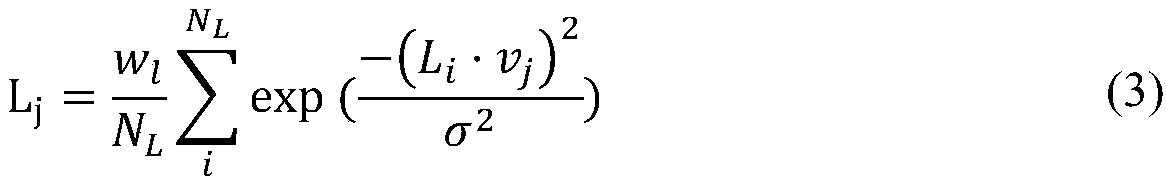

[0122] To verify the effect of the algorithm in the present invention, experiments are conducted on RGB-D images No. 1001-1200 of the NYU-Depth2 database. The depth information and color information of each image are extracted, and the classifier is trained using the existing annotated results. Finally, whether the correct supporting region is found for each region is used as the evaluation criterion, and the results are compared with an existing support relationship inference algorithm.

[0123] When judging whether a support relationship is correct, it is still debated whether the structural-level classification of the supporting region must also be correct. Therefore, accuracy is reported here both with and without the structural level taken into account. The specific results are shown in Tables 1 and 2.
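The two accuracy figures described above can be illustrated with a minimal sketch. It is not the patent's implementation: the function name `support_accuracy`, the dictionary layout (region id mapped to a pair of supporting region id and structural-level label), and the label strings are all assumptions made for illustration.

```python
from typing import Dict, Tuple

# Hypothetical layout: region id -> (id of its supporting region, structural-level label)
SupportMap = Dict[int, Tuple[int, str]]


def support_accuracy(pred: SupportMap, truth: SupportMap, use_level: bool) -> float:
    """Fraction of annotated regions whose supporting region is predicted correctly.

    With use_level=True, the predicted structural-level label must also match.
    Regions missing from `pred` count as wrong.
    """
    if not truth:
        raise ValueError("ground truth must contain at least one region")
    correct = 0
    for region, (true_support, true_level) in truth.items():
        if region not in pred:
            continue
        pred_support, pred_level = pred[region]
        support_ok = pred_support == true_support
        level_ok = (not use_level) or pred_level == true_level
        if support_ok and level_ok:
            correct += 1
    return correct / len(truth)


# Toy data (made up, not from the NYU-Depth2 experiments)
truth = {1: (0, "floor"), 2: (1, "furniture"), 3: (1, "prop"), 4: (2, "prop")}
pred = {1: (0, "floor"), 2: (1, "prop"), 3: (0, "prop"), 4: (2, "prop")}

acc_without_level = support_accuracy(pred, truth, use_level=False)
acc_with_level = support_accuracy(pred, truth, use_level=True)
```

In this toy data, region 2 has the right supporting region but the wrong structural level, so it counts as correct only in the evaluation without structural level. This is why the two figures can differ.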

[0124] It can be seen from the above table that the method proposed by the present invention can more accurately and effectively extract the ...
