Text classification method based on a recurrent convolutional network

A text classification method using a recurrent convolutional network, applied in special data processing applications, instruments, electrical digital data processing, etc. It addresses problems such as noise, sensitivity to window size, and adverse effects on results, with the effect of reducing data sparsity and improving performance.

Embodiment Construction

[0029] In order to make the object, technical solution and advantages of the present invention clearer, the present invention will be described in further detail below in conjunction with specific embodiments and with reference to the accompanying drawings.

[0030] The basic idea of the present invention is to construct a better context representation so that words can be disambiguated, which in turn yields a better text representation for text classification.

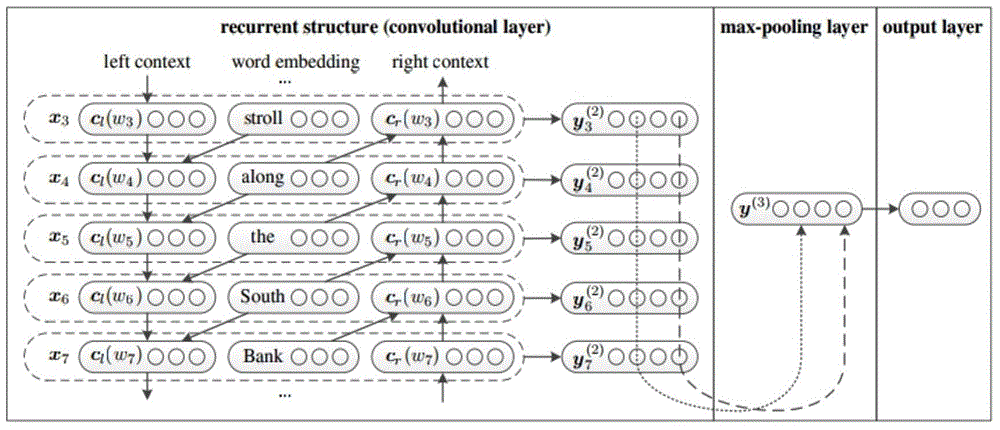

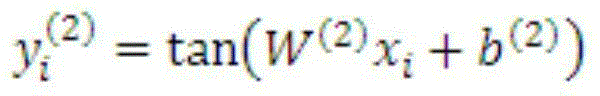

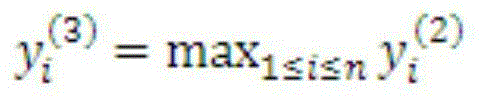

[0031] For text classification, the core problem is text representation. Traditional methods often lose word order information, and improved variants of them still suffer from data sparsity. To address both issues, this method uses a recurrent network to model the context, retaining word order information over as long a span as possible and refining the representation of the current word, and it uses max pooling to extract the words and phrases most useful for text classification.
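The two steps described in paragraph [0031], recurrent context modeling followed by max pooling, can be illustrated with a minimal sketch. Everything specific here is an assumption made for illustration and is not taken from the patent text: the tanh activation, the way left and right contexts are concatenated with the word embedding, the parameter names (`W_l`, `W_sl`, `W_r`, `W_sr`, `W_h`), the random untrained weights, and the helper functions themselves.

```python
import math
import random


def matvec(W, v):
    """Multiply matrix W (a list of rows) by vector v."""
    return [sum(w * x for w, x in zip(row, v)) for row in W]


def rand_matrix(rows, cols, rng):
    """Small random weights; a trained model would learn these instead."""
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]


def init_params(emb_dim, ctx_dim, hid_dim, seed=0):
    """Hypothetical parameter set for the sketch (names are illustrative)."""
    rng = random.Random(seed)
    return {
        "W_l": rand_matrix(ctx_dim, ctx_dim, rng),    # left context -> left context
        "W_sl": rand_matrix(ctx_dim, emb_dim, rng),   # previous word -> left context
        "W_r": rand_matrix(ctx_dim, ctx_dim, rng),    # right context -> right context
        "W_sr": rand_matrix(ctx_dim, emb_dim, rng),   # next word -> right context
        "W_h": rand_matrix(hid_dim, 2 * ctx_dim + emb_dim, rng),
    }


def context_pass(embs, W_ctx, W_emb):
    """Scan the sequence once; ctx[i] summarizes every word before position i."""
    ctx_dim = len(W_ctx)
    ctx = [[0.0] * ctx_dim]  # the first word has no preceding context
    for i in range(1, len(embs)):
        from_ctx = matvec(W_ctx, ctx[i - 1])
        from_word = matvec(W_emb, embs[i - 1])
        ctx.append([math.tanh(a + b) for a, b in zip(from_ctx, from_word)])
    return ctx


def text_representation(embs, params):
    """Return a fixed-length vector for a text of any length."""
    left = context_pass(embs, params["W_l"], params["W_sl"])
    # The right context is a left-to-right scan over the reversed text,
    # flipped back so that right[i] summarizes the words after position i.
    right = context_pass(embs[::-1], params["W_r"], params["W_sr"])[::-1]
    hidden = []
    for l, e, r in zip(left, embs, right):
        x = l + e + r  # list concatenation: [left context; word; right context]
        hidden.append([math.tanh(h) for h in matvec(params["W_h"], x)])
    # Element-wise max pooling over positions keeps the strongest
    # activation of each feature, wherever in the text it occurred.
    return [max(column) for column in zip(*hidden)]


# Toy usage: a 6-word text with random 4-dimensional "embeddings".
rng = random.Random(1)
sentence = [[rng.uniform(-1, 1) for _ in range(4)] for _ in range(6)]
params = init_params(emb_dim=4, ctx_dim=3, hid_dim=5)
vector = text_representation(sentence, params)
print(len(vector))
```

Because the pooling step takes a maximum over positions, the resulting vector has the same length regardless of how many words the text contains, which is what allows it to be passed to a fixed-size classifier layer.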

[0032] Accordi...
