Rapid and accurate human eye positioning method and sight estimation method based on human eye positioning

A human eye positioning method and a line-of-sight estimation method based on human eye positioning. The technology addresses the problem that existing methods rarely achieve real-time performance, and provides fast line-of-sight direction estimation that copes with large-scale head movement.



Embodiment Construction

[0044] In a preferred embodiment of the present invention, eye positioning is performed on the face image 1 shown in Figure 4 (width w, height h) to find the position and radius of the left eye and the right eye in the image.

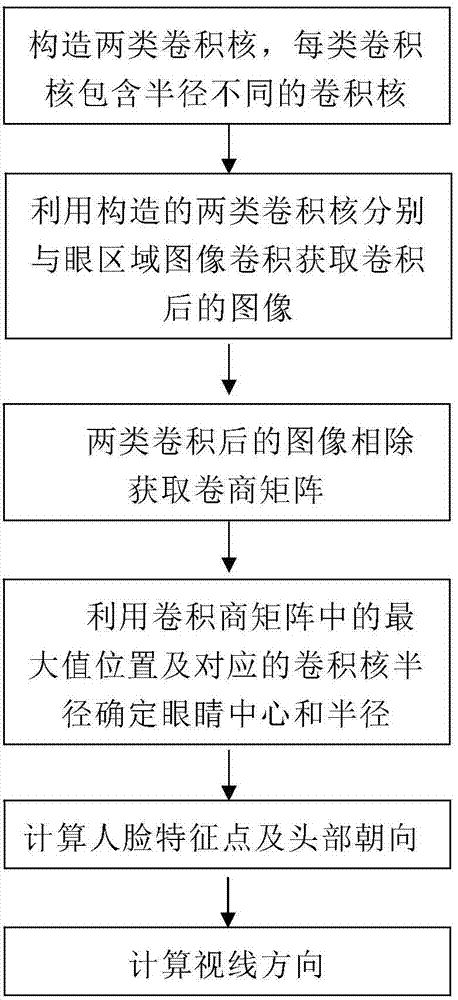

[0045] Referring to Figure 1, the human eye positioning method of the present invention comprises the following steps:

[0046] Step 1: Construct two types of convolution kernels that differ only in the weight at the kernel center: kernel $K_r$ has a nonzero center weight, while the center weight of kernel $K'_r$ is 0. Each type consists of a set of kernels with different radii $r$.

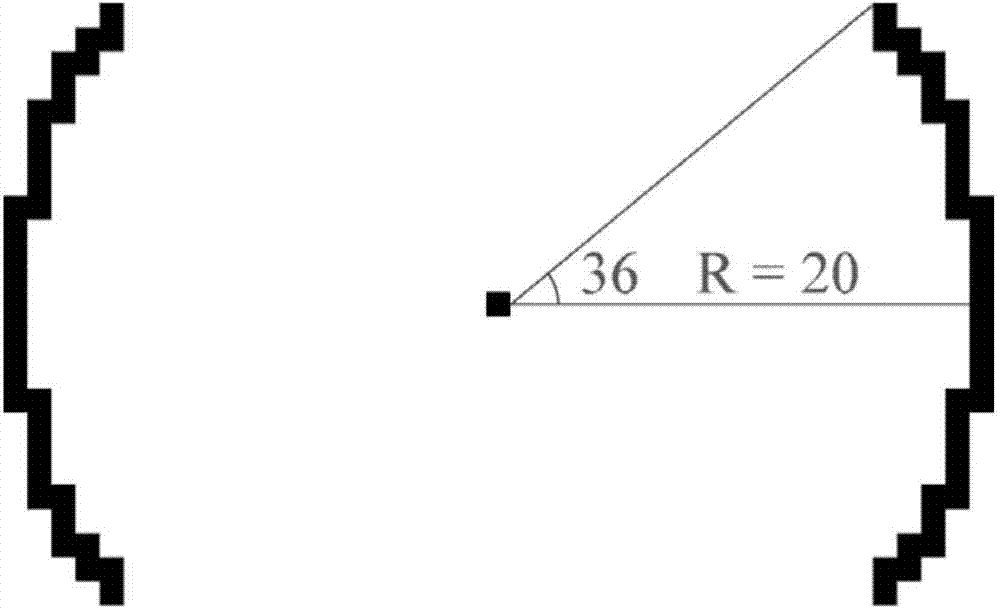

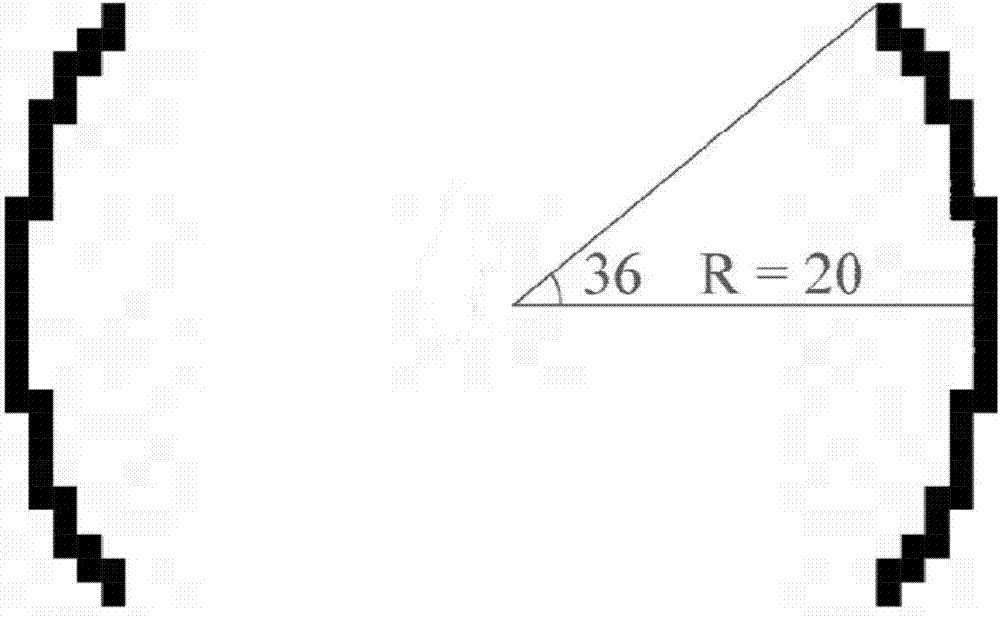
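A minimal sketch of Step 1 for a single radius, assuming a uniform disc-shaped support. The actual kernel weights are given in the patent figures and are not reproduced in this text, so the disc weights (1/n over the non-center pixels inside the circle) and the `center_weight` value are hypothetical. The sketch only shows the stated structure: both kernels share a circular boundary of radius r and differ solely at the center pixel. The function name `make_kernel_pair` is also illustrative.

```python
import numpy as np


def make_kernel_pair(r, center_weight=1.0):
    """Build (K_r, K'_r) for one radius r in pixels.

    Hypothetical weights: every non-center pixel inside the circle of
    radius r gets the same weight 1/n, where n is the number of such
    pixels; pixels outside the circle get 0. The two kernels differ only
    at the center, as described in Step 1.
    """
    y, x = np.mgrid[-r:r + 1, -r:r + 1]      # offsets from the kernel center
    inside = x**2 + y**2 <= r**2              # circular boundary of radius r
    inside[r, r] = False                      # handle the center pixel separately
    k_prime = np.where(inside, 1.0 / inside.sum(), 0.0)
    k = k_prime.copy()
    k[r, r] = center_weight                   # K_r: nonzero center weight (assumed value)
    # K'_r keeps a center weight of 0 (k_prime[r, r] is already 0.0)
    return k, k_prime


K, K_prime = make_kernel_pair(5, center_weight=0.5)
print(K.shape, K[5, 5], K_prime[5, 5])
```

Because the two kernels differ only at the center, the difference between their responses at a given pixel reflects that pixel's intensity weighted by `center_weight`, which is one plausible reason for building them as a pair.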

[0047] In this embodiment, 0.1w convolution kernels $K_r$ are constructed, all with circular boundaries and with different radii $r$; the maximum radius $r_{max}$ and the minimum radius $r_{min}$ are 0.2w and 0.1w, respectively. figu...
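The radius range in [0047] scales with the image width w. A short sketch of how the set of radii could be derived is shown below. The text states only the count (0.1w kernels) and the bounds ($r_{min}$ = 0.1w, $r_{max}$ = 0.2w). The even spacing and the rounding to whole pixels are assumptions, and `kernel_radii` is a hypothetical helper name.

```python
import numpy as np


def kernel_radii(w):
    """Radii of the kernel bank for a face image of width w (pixels).

    From the text: r_min = 0.1w, r_max = 0.2w, and 0.1w kernels are built.
    Assumed: radii are evenly spaced and rounded to whole pixels.
    """
    n = max(1, round(0.1 * w))               # number of kernels (at least one)
    r_min, r_max = 0.1 * w, 0.2 * w
    radii = np.linspace(r_min, r_max, n)     # evenly spaced (assumption)
    return [int(r) for r in np.rint(radii)]  # whole-pixel radii


print(kernel_radii(100))
```

Each radius in the returned list would then parameterize one kernel $K_r$ and its counterpart $K'_r$.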

