Voice interaction method and device

A voice interaction technology based on voice data, applied in fields such as voice analysis, voice recognition, and acquisition/recognition of facial features. It addresses the low flexibility and single response form of existing responses to voice content, improving flexibility, enriching the ways voice input can be responded to, and better meeting user needs.

Examples

Embodiment 1

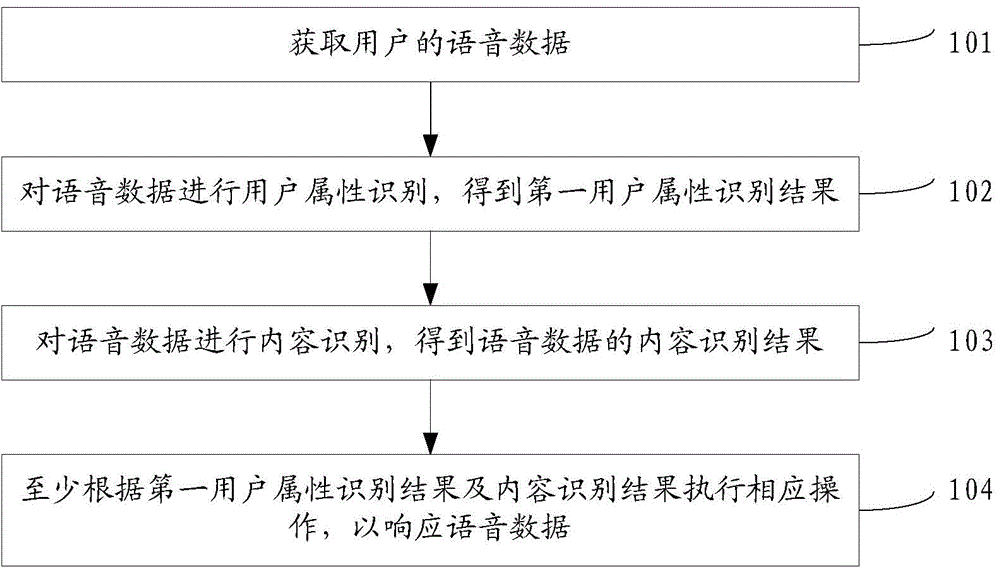

[0069] This embodiment of the present invention provides a voice interaction method. Referring to Figure 1, the method flow provided by this embodiment includes:

[0070] 101. Acquire voice data of the user.

[0071] 102. Perform user attribute recognition on the voice data to obtain a first user attribute recognition result.

[0072] 103. Perform content recognition on the voice data to obtain a content recognition result of the voice data.

[0073] 104. Perform a corresponding operation according to at least the first user attribute recognition result and the content recognition result to respond to the voice data.

[0074] In the method provided in this embodiment, after the voice data of the user is obtained, user attribute recognition and content recognition are each performed on the voice data to obtain the first user attribute recognition result and the content recognition result of the voice data, and, at least according to the first user attribute recognition result and the content recognition result, a corresponding...
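Steps 101 to 104 can be sketched as a small pipeline. This is only an illustrative sketch, not the patent's implementation: the recognizers are passed in as plain functions, and the attribute values (`"adult"`, `"child"`), the content label (`"weather"`), and the response table are all hypothetical placeholders chosen for the example.

```python
from typing import Callable, Dict, Tuple


def respond_to_voice(
    voice_data: bytes,
    recognize_attribute: Callable[[bytes], str],
    recognize_content: Callable[[bytes], str],
    responses: Dict[Tuple[str, str], str],
    default: str = "Sorry, I did not understand.",
) -> str:
    """Respond to voice data using both who is speaking and what was said."""
    # Step 102: user attribute recognition on the voice data.
    attribute = recognize_attribute(voice_data)
    # Step 103: content recognition on the same voice data.
    content = recognize_content(voice_data)
    # Step 104: choose the operation from BOTH results, not content alone.
    return responses.get((attribute, content), default)


# Hypothetical stand-ins for real recognizers (assumptions for this sketch).
def demo_attribute(voice_data: bytes) -> str:
    return "child" if voice_data.startswith(b"child:") else "adult"


def demo_content(voice_data: bytes) -> str:
    return "weather" if b"weather" in voice_data else "unknown"


DEMO_RESPONSES = {
    ("adult", "weather"): "Today: sunny, 22 degrees.",
    ("child", "weather"): "It is sunny today, great for playing outside!",
}

# Step 101 (acquiring the voice data) is simulated with a byte string.
reply = respond_to_voice(b"child: weather", demo_attribute, demo_content, DEMO_RESPONSES)
```

The same content (`"weather"`) yields a different reply depending on the recognized attribute, which is the flexibility the embodiment describes.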

Embodiment 2

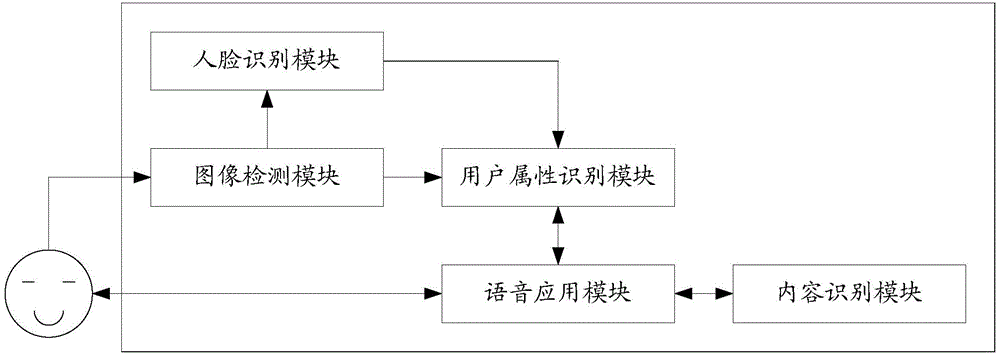

[0099] This embodiment of the present invention provides a voice interaction method, which is explained in detail below in combination with the first embodiment above and the voice interaction system illustrated in Figure 2. In Figure 2, the voice interaction system is divided into five parts: an image detection module, a user attribute recognition module, a face recognition module, a voice content recognition module, and a voice application module. The image detection module is used to detect the number of people in the collected user image; the user attribute recognition module is used to perform user attribute recognition on the user's voice; the face recognition module is used to recognize the face data in the user image when the number of people detected by the image detection module is a preset value; the voice content recognition module is used to carry o...
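The gating between the image detection module and the face recognition module described above can be sketched as follows. This is a minimal sketch under stated assumptions: the preset value of 1, the function names, and the representation of an image as a list of face labels are all hypothetical, since the source does not specify them.

```python
from typing import Callable, List, Optional

# Assumed preset value; the source only says "a preset value".
PRESET_PEOPLE_COUNT = 1


def recognize_face_if_preset(
    user_image: List[str],
    count_people: Callable[[List[str]], int],
    recognize_face: Callable[[List[str]], str],
    preset: int = PRESET_PEOPLE_COUNT,
) -> Optional[str]:
    """Run face recognition only when the detected head count equals the preset."""
    # Image detection module: count the people in the collected image.
    if count_people(user_image) != preset:
        # Face recognition is skipped; the caller must rely on voice alone.
        return None
    # Face recognition module: recognize the face data in the image.
    return recognize_face(user_image)


# Hypothetical stand-ins: an "image" is just a list of face labels here.
def demo_count(image: List[str]) -> int:
    return len(image)


def demo_face(image: List[str]) -> str:
    return image[0]


single = recognize_face_if_preset(["adult"], demo_count, demo_face)
crowd = recognize_face_if_preset(["adult", "child"], demo_count, demo_face)
```

Returning `None` when the count does not match keeps the decision about a fallback in the caller rather than in the face recognition step.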

Embodiment 3

[0136] This embodiment of the present invention provides a voice interaction device, which is used to execute the method shown in the first or second embodiment above. Referring to Figure 5, the device includes: an acquisition module 501, a user attribute recognition module 502, a content recognition module 503, and an execution module 504.

[0137] The acquisition module 501 is used to obtain the user's voice data. The user attribute recognition module 502 is connected to the acquisition module 501 and is used to perform user attribute recognition on the voice data to obtain the first user attribute recognition result. The content recognition module 503 is connected to the user attribute recognition module 502 and is used to perform content recognition on the voice data to obtain the content recognition result of the voice data. The execution module 504 is connected to the content recognition module 503 and is used to perform corresponding operations at least according to the first use...
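The connection order of modules 501 to 504 can be illustrated as a single device object that holds the four modules and passes data between them. The class name, field names, and the lambda stand-ins below are hypothetical; the source describes only the modules and how they are connected.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class VoiceInteractionDevice:
    """Illustrative wiring of the four modules; not the patent's implementation."""

    acquire: Callable[[], bytes]                 # acquisition module 501
    identify_attribute: Callable[[bytes], str]   # user attribute module 502
    identify_content: Callable[[bytes], str]     # content recognition module 503
    execute: Callable[[str, str], str]           # execution module 504

    def run_once(self) -> str:
        voice = self.acquire()                       # 501 obtains the voice data
        attribute = self.identify_attribute(voice)   # 502 consumes 501's output
        content = self.identify_content(voice)       # 503 works on the same data
        return self.execute(attribute, content)      # 504 acts on both results


# Hypothetical stand-in modules for demonstration only.
device = VoiceInteractionDevice(
    acquire=lambda: b"play a song",
    identify_attribute=lambda voice: "adult",
    identify_content=lambda voice: voice.decode(),
    execute=lambda attribute, content: f"[{attribute}] {content}",
)
result = device.run_once()
```

Holding each module as a replaceable callable means a different recognizer can be swapped in without touching the wiring.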
