Method for identifying user action and intelligent mobile terminal

A user action recognition technology, applied in the field of human-computer interaction, that addresses problems such as poor user experience, a limited range of recognizable user actions, and strict requirements on device posture.

Embodiment Construction

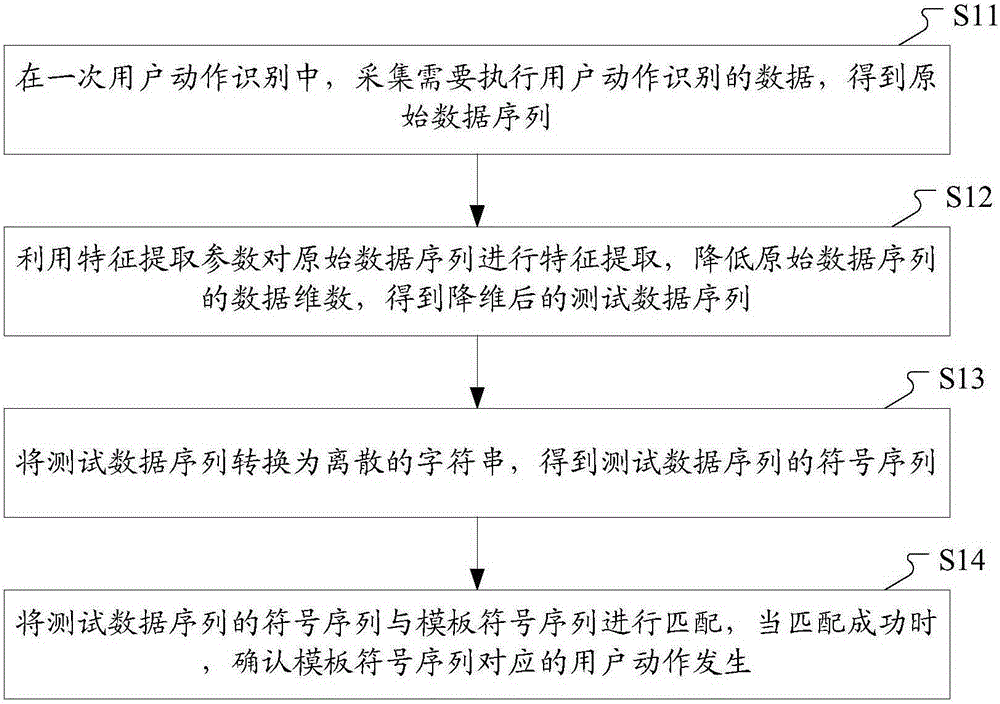

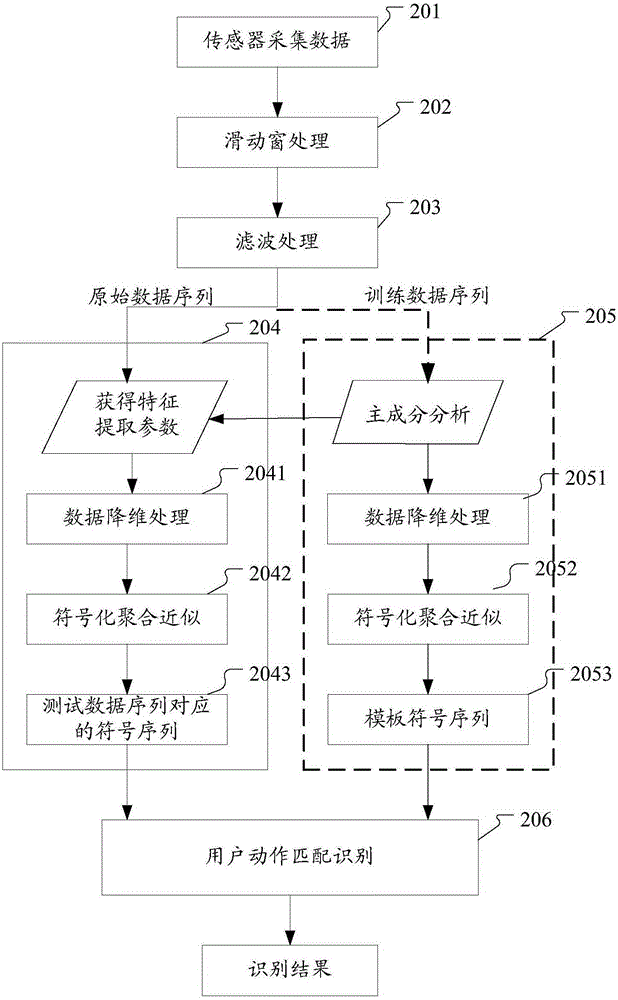

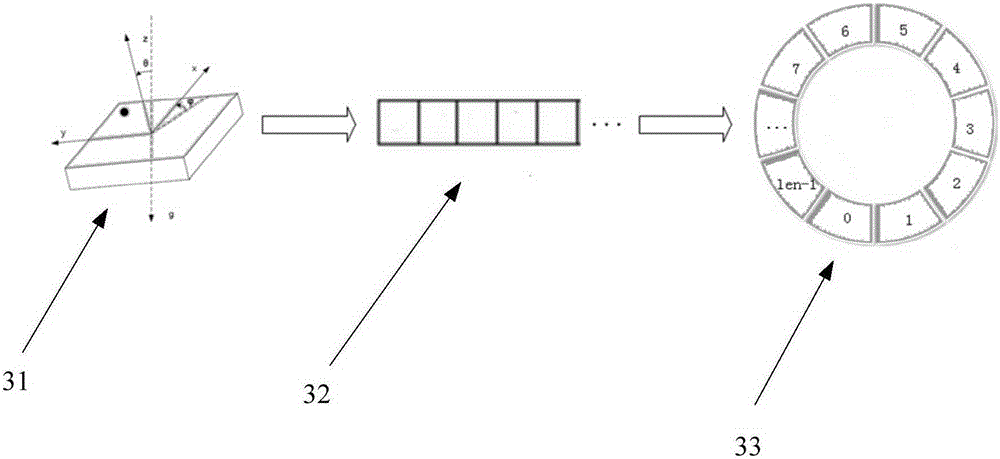

[0087] The main concept of the present invention is as follows. To address the problems of existing sensor-based user action recognition schemes, the embodiment of the present invention collects user action data in advance for training, obtains feature extraction parameters and template symbol sequences, and uses the feature extraction parameters to reduce the data dimension of the test data sequence (for example, reducing three-dimensional acceleration data to one dimension). Compared with existing schemes that perform recognition directly on the collected high-dimensional data, this removes noise, reduces computational complexity, and relaxes the requirements on device posture when the user performs an action. Furthermore, symbolizing the dimension-reduced data sequence into a character string sequence further removes noise from the data sequence, reduces the amount of calculation, and improves recognition accuracy. Finally, the character string se...
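The pipeline described in paragraph [0087] can be illustrated with a minimal sketch. The excerpt does not name the specific techniques, so everything below is an assumption made for illustration: principal component analysis stands in for the "feature extraction parameters", a SAX-style quantization stands in for the symbolization step, and edit distance stands in for the comparison against a template symbol sequence (the excerpt is cut off before it describes that final step). All function names, the segment count, the breakpoints, and the alphabet are hypothetical.

```python
import numpy as np


def fit_projection(train):
    """Learn assumed 'feature extraction parameters' from training data.

    train: array of shape (n_samples, 3) holding 3-axis acceleration.
    Returns the per-axis mean and the first principal axis (PCA via SVD).
    """
    train = np.asarray(train, dtype=float)
    mean = train.mean(axis=0)
    # The first right-singular vector of the centered data is the
    # direction of greatest variance. Its sign is arbitrary, so the same
    # fitted axis must be reused for both templates and test data.
    _, _, vt = np.linalg.svd(train - mean, full_matrices=False)
    return mean, vt[0]


def reduce_to_1d(samples, mean, axis):
    """Project 3-axis samples onto the learned axis (3-D -> 1-D)."""
    return (np.asarray(samples, dtype=float) - mean) @ axis


def symbolize(series, segments=8, breakpoints=(-0.67, 0.0, 0.67),
              alphabet="abcd"):
    """Turn a 1-D series into a short string (SAX-style, assumed).

    Assumes len(series) >= segments and
    len(alphabet) == len(breakpoints) + 1.
    """
    series = np.asarray(series, dtype=float)
    std = series.std()
    z = (series - series.mean()) / std if std > 0 else np.zeros_like(series)
    # Piecewise aggregate approximation: one mean per segment.
    means = [chunk.mean() for chunk in np.array_split(z, segments)]
    # Map each segment mean to the index of the interval it falls in.
    indices = np.searchsorted(breakpoints, means)
    return "".join(alphabet[i] for i in indices)


def edit_distance(a, b):
    """Levenshtein distance between two strings (assumed matching metric)."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, 1):
        cur = [i]
        for j, cb in enumerate(b, 1):
            cur.append(min(prev[j] + 1,                # deletion
                           cur[j - 1] + 1,             # insertion
                           prev[j - 1] + (ca != cb)))  # substitution
        prev = cur
    return prev[-1]
```

In use, `fit_projection` would be run once on the pre-collected training data, each template action would be stored as the string returned by `symbolize(reduce_to_1d(...))`, and a test sequence would be assigned to the template with the smallest `edit_distance`, possibly subject to a rejection threshold. Working on a short string rather than raw three-axis samples is what keeps the comparison cheap in this sketch.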
