Hyperspectral image classification method based on spectral-spatial cooperation of deep convolutional neural network

This invention relates to convolutional neural networks and hyperspectral imaging and is applied in the field of spatial-spectral joint hyperspectral image classification. It addresses three problems: the heavy computation required for dimensionality reduction, the loss of spectral information that this reduction causes, and the resulting drop in accuracy. Its effect is improved classification accuracy, solving the problem of low classification precision.


Embodiment Construction

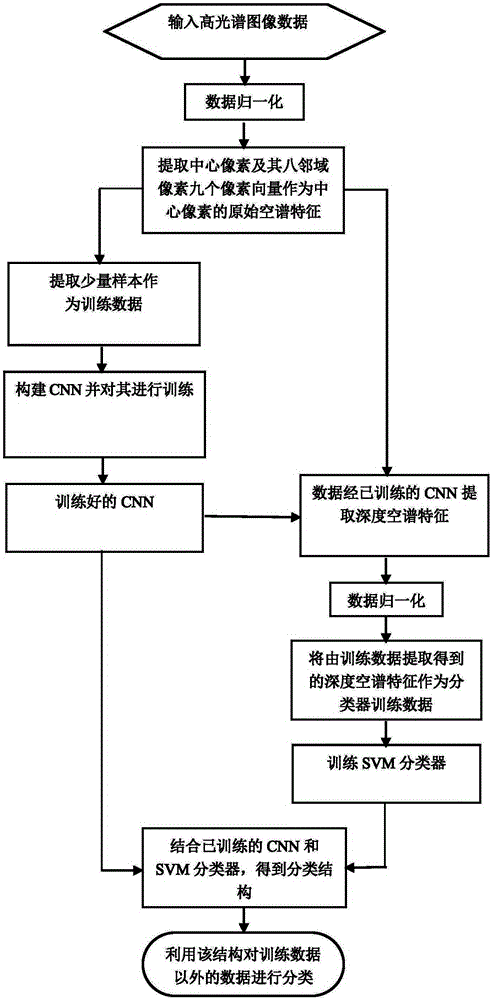

[0021] The present invention is further described below with reference to the embodiments and the accompanying drawings:

[0022] Step 1: Input the hyperspectral image data and normalize it according to

$$\hat{x}_{ijs} = \frac{x_{ijs} - x_{\min}}{x_{\max} - x_{\min}}$$

where $i, j$ denote the spatial coordinates of the pixel, $s$ denotes the spectral band (typically 100 to 240 bands), and $x_{\max}$ and $x_{\min}$ are the maximum and minimum values in the 3D hyperspectral data, respectively.
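A minimal NumPy sketch of the Step 1 normalization, assuming it is the usual global min-max scaling in which $x_{\max}$ and $x_{\min}$ are taken over the entire 3D cube rather than per band. The function name `minmax_normalize` and the (rows, cols, bands) array layout are illustrative assumptions, not part of the patent.

```python
import numpy as np

def minmax_normalize(cube: np.ndarray) -> np.ndarray:
    """Scale a hyperspectral cube of shape (rows, cols, bands) into [0, 1].

    Assumption: x_max and x_min are global over the whole 3D cube,
    not computed separately for each band.
    """
    cube = cube.astype(np.float64)
    x_min = cube.min()
    x_max = cube.max()
    if x_max == x_min:
        # Degenerate constant cube: avoid division by zero.
        return np.zeros_like(cube)
    return (cube - x_min) / (x_max - x_min)

# Usage: a toy 2x2 cube with 3 bands (real data typically has 100-240 bands).
toy = np.arange(12).reshape(2, 2, 3)
norm = minmax_normalize(toy)
print(norm.min(), norm.max())  # 0.0 1.0
```

Using one global range keeps the relative magnitudes between bands intact; per-band scaling would be a different design choice.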

[0023] Step 2: Extract the original spatial-spectral features. In the hyperspectral image, the nine pixel vectors formed by the center pixel at position (i, j) and its eight neighboring pixels (its 3×3 neighborhood) are extracted as the original spatial-spectral feature of that center pixel.
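The neighborhood extraction of Step 2 can be sketched as follows. The patent text does not say how pixels on the image border are handled, so this sketch assumes edge-replication padding; the function name `neighborhood_feature` and the row-major ordering of the nine vectors are likewise hypothetical choices.

```python
import numpy as np

def neighborhood_feature(cube: np.ndarray, i: int, j: int) -> np.ndarray:
    """Return the 9 pixel vectors of the 3x3 window centred at (i, j).

    Output shape is (9, bands): row 4 is the centre pixel and the other
    rows are its eight neighbours in row-major order.
    Assumption: border pixels use edge-replication padding.
    """
    padded = np.pad(cube, ((1, 1), (1, 1), (0, 0)), mode="edge")
    # Pixel (i, j) of the original cube sits at (i + 1, j + 1) in the
    # padded cube, so its 3x3 window starts at padded[i, j].
    window = padded[i:i + 3, j:j + 3, :]
    return window.reshape(9, cube.shape[2])

# Usage: a toy 4x4 cube with 2 bands.
cube = np.arange(4 * 4 * 2).reshape(4, 4, 2)
feat = neighborhood_feature(cube, 1, 1)
print(feat.shape)  # (9, 2)
vector = feat.ravel()  # flattened to one 1D vector of length 9 * bands
```

Flattening to a single vector is only one possible way to feed the feature into a network; whether the patent concatenates the nine vectors this way is not stated in the visible text.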

[0024] Step 3: Randomly select a small amount of labeled data from the data extracted in Step 2 to serve as the training data for the CNN.
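A sketch of the random selection in Step 3, assuming the common hyperspectral convention that label 0 marks unlabeled pixels. The `fraction` and `seed` parameters and their defaults are hypothetical; the patent only says "a small amount".

```python
import numpy as np

def sample_training_set(features, labels, fraction=0.05, seed=0):
    """Randomly pick a small subset of labeled samples for CNN training.

    features: (n_samples, ...) array built from Step 2
    labels:   (n_samples,) int array; 0 is assumed to mean "unlabeled"
    fraction: share of labeled samples to keep (hypothetical default)
    Returns (train_x, train_y, train_idx).
    """
    rng = np.random.default_rng(seed)
    labeled = np.flatnonzero(labels != 0)
    if labeled.size == 0:
        raise ValueError("no labeled samples available")
    n_train = max(1, int(round(fraction * labeled.size)))
    # Sample indices without replacement so no pixel is used twice.
    train_idx = rng.choice(labeled, size=n_train, replace=False)
    return features[train_idx], labels[train_idx], train_idx

# Usage: 10 samples, 7 of them labeled; keep about 30% of the labeled ones.
labels = np.array([0, 1, 2, 0, 1, 2, 1, 0, 2, 1])
features = np.arange(10 * 3).reshape(10, 3)
train_x, train_y, train_idx = sample_training_set(features, labels, fraction=0.3)
```

Plain random sampling is used here; a per-class (stratified) draw would be an alternative when some classes are rare.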

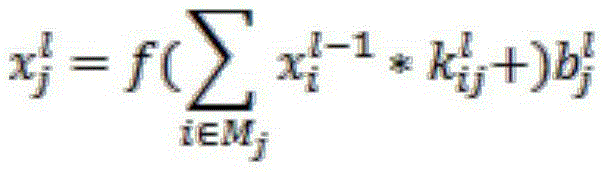

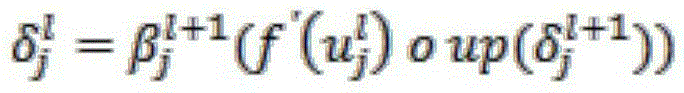

[0025] Step 4: Construct a convolutional neural network that takes the original spatial-spectral feature extracted in Step 2 as input and uses a one-dimensional vector as the ...
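Because the description of Step 4 is cut off, the network architecture is not known. The following is only a generic sketch of one 1D convolution layer with ReLU and max pooling applied to a one-dimensional input vector, such as the flattened Step 2 feature. The filter count, kernel size, pooling size, and all function names are hypothetical, and training is omitted.

```python
import numpy as np

def conv1d_valid(x, kernels, bias):
    """'Valid' 1D cross-correlation of a single-channel input vector.

    x:       (length,) input vector
    kernels: (n_filters, k) filter weights
    bias:    (n_filters,)
    Returns (n_filters, length - k + 1) feature maps.
    """
    _, k = kernels.shape
    out_len = x.shape[0] - k + 1
    # Stack every length-k window of x: shape (out_len, k).
    windows = np.stack([x[t:t + k] for t in range(out_len)])
    return kernels @ windows.T + bias[:, None]

def relu(z):
    return np.maximum(z, 0.0)

def max_pool1d(z, size):
    """Non-overlapping max pooling along the last axis (remainder dropped)."""
    n_filters, length = z.shape
    usable = (length // size) * size
    return z[:, :usable].reshape(n_filters, length // size, size).max(axis=2)

# Usage: an 18-element vector, e.g. a flattened 3x3 neighborhood with 2 bands.
x = np.arange(18, dtype=float)
kernels = np.array([[1.0, 0.0, -1.0],   # difference filter
                    [0.0, 1.0, 0.0]])   # identity-like filter
bias = np.zeros(2)
pooled = max_pool1d(relu(conv1d_valid(x, kernels, bias)), 4)
print(pooled)
```

In practice such layers would be stacked and trained with a deep learning framework; this NumPy version only illustrates the forward computation on a 1D input.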
