Method and device for determining lane lines

A method and device for determining lane lines, applied in the field of image processing, addresses three problems of existing approaches: they have difficulty meeting practical requirements, they do not account for occlusion and shadows, and their lane-positioning accuracy is limited.

Examples

Embodiment 1

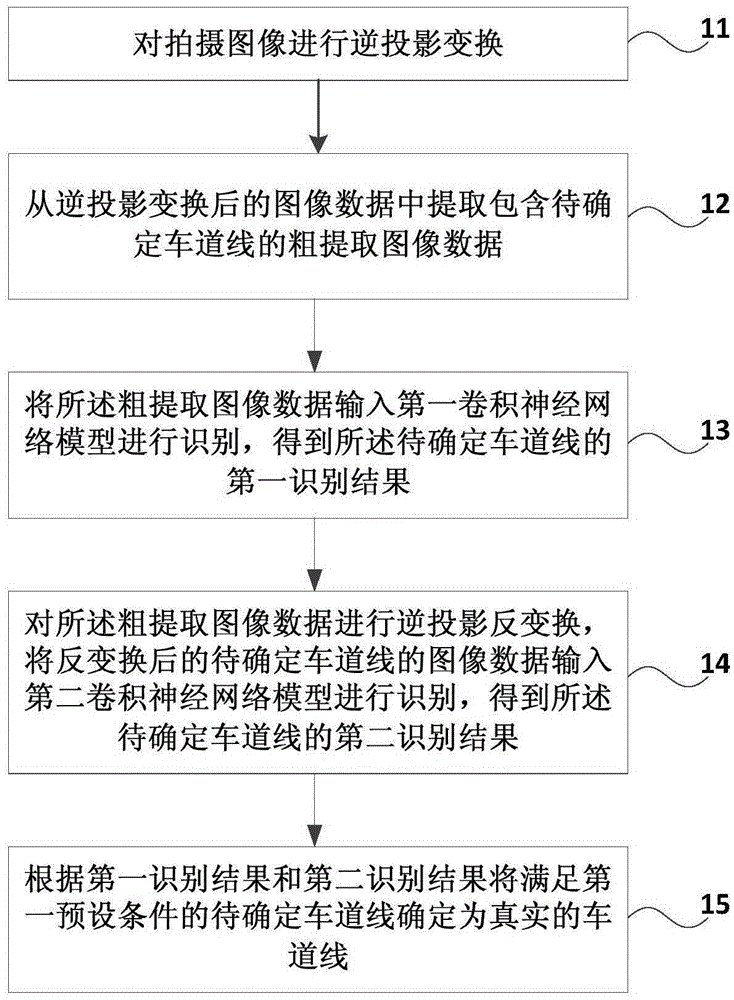

[0030] Figure 1A is a schematic flowchart of the method for determining lane lines provided in Embodiment 1 of the present invention. As shown in Figure 1A, the method includes:

[0031] S11. Perform an inverse projection transformation on the captured image;

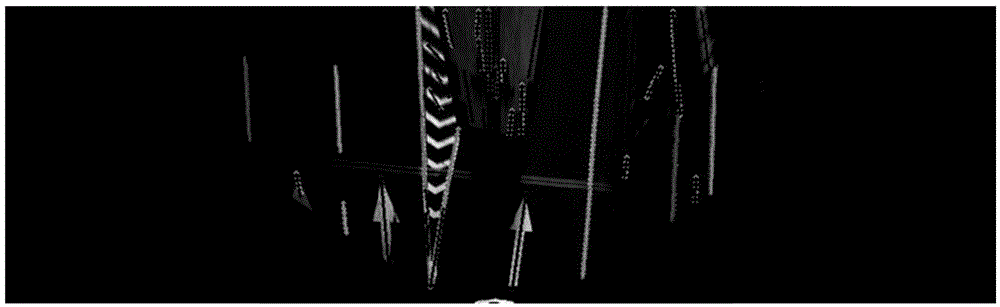

[0032] Specifically, because the vehicle-mounted camera is mounted parallel to the ground, it captures images along the vehicle's direction of travel. From this viewpoint, the lane lines appear to narrow from near to far and are no longer parallel to one another, converging at a vanishing point at infinity. Locating lane lines in this perspective view is difficult, so the image is first back-projected so that the viewing direction becomes perpendicular to the ground (a bird's-eye view).
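Back-projection to a bird's-eye view is commonly implemented as inverse perspective mapping: a 3×3 homography maps four points on the road in the camera image to four points in a top-down view. The sketch below estimates such a homography with the direct linear transform (DLT) and applies it to points. The function names, the point coordinates, and the use of a four-point homography are illustrative assumptions rather than the procedure specified here; paragraph [0033] instead begins from the camera's own parameters.

```python
import numpy as np


def homography_from_points(src, dst):
    """Estimate H (3x3) with dst ~ H @ src from four point pairs via the DLT."""
    rows = []
    for (x, y), (u, v) in zip(src, dst):
        # u = (h1 x + h2 y + h3) / (h7 x + h8 y + h9), linearised; same for v.
        rows.append([-x, -y, -1, 0, 0, 0, u * x, u * y, u])
        rows.append([0, 0, 0, -x, -y, -1, v * x, v * y, v])
    a = np.asarray(rows, dtype=float)
    # h is the right singular vector belonging to the smallest singular value.
    _, _, vt = np.linalg.svd(a)
    h = vt[-1].reshape(3, 3)
    return h / h[2, 2]


def warp_points(h, pts):
    """Apply homography h to an (N, 2) array of pixel coordinates."""
    pts = np.asarray(pts, dtype=float)
    homog = np.hstack([pts, np.ones((len(pts), 1))])
    mapped = homog @ h.T
    return mapped[:, :2] / mapped[:, 2:3]


# Illustrative 640x480 frame: a trapezoid on the road (converging lane edges)
# is mapped to a rectangle in the bird's-eye view.
src = [(280, 300), (360, 300), (100, 480), (540, 480)]
dst = [(100, 0), (540, 0), (100, 480), (540, 480)]
H = homography_from_points(src, dst)

# Midpoints of the left and right lane edges in the camera image; their
# x-coordinates come out as ~100 and ~540 in the bird's-eye view.
mid = warp_points(H, [(190, 390), (450, 390)])
print(mid)
```

Because a homography maps lines to lines, the two converging lane edges become the vertical lines x = 100 and x = 540 in the output, which is the parallel layout the back-projection is meant to produce. For whole images, OpenCV's `cv2.getPerspectiveTransform` and `cv2.warpPerspective` perform the same estimation and resampling.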

[0033] For example, if the three-dimensional space coordinates corresponding to the camera are $(X, Y, Z)$, the camera parameters are: focal lengths $f_x$ and $f_y$, optical center coordinate c ...
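The paragraph above is cut off after the optical-center coordinate, so the following is only a sketch of the standard pinhole model, not the patent's own equations. It assumes optical-center symbols $c_x$, $c_y$, a hypothetical camera height `cam_height`, and a camera frame with X right, Y down, and Z forward. With the optical axis parallel to the ground, every road point satisfies $Y = h$, so $v = f_y h / Z + c_y$ can be solved for the forward distance $Z$, and $u = f_x X / Z + c_x$ then gives the lateral offset $X$.

```python
def pixel_to_ground(u, v, fx, fy, cx, cy, cam_height):
    """Back-project pixel (u, v) onto a flat road.

    Assumed camera frame: X right, Y down, Z forward, optical axis parallel
    to the ground, camera cam_height above the road (road plane is Y = cam_height).
    Returns (X, Z): lateral offset and forward distance, in cam_height's units.
    """
    if v <= cy:
        raise ValueError("pixel lies on or above the horizon and never meets the road")
    z = fy * cam_height / (v - cy)   # from v = fy * Y / Z + cy with Y = cam_height
    x = (u - cx) * z / fx            # from u = fx * X / Z + cx
    return x, z


def ground_to_pixel(x, z, fx, fy, cx, cy, cam_height):
    """Forward pinhole projection of the road point (x, cam_height, z)."""
    return fx * x / z + cx, fy * cam_height / z + cy


# Hypothetical intrinsics for a 640x480 camera mounted 1.5 m above the road.
K = dict(fx=800.0, fy=800.0, cx=320.0, cy=240.0, cam_height=1.5)
print(pixel_to_ground(480, 480, **K))  # (1.0, 5.0)
```

Holding X fixed (for example a lane edge at X = 1.8 m) and letting Z grow, `ground_to_pixel` returns u values that approach $c_x$ and v values that approach $c_y$, which is the vanishing-point behavior described in [0032]; applying `pixel_to_ground` to every road pixel yields the top-down view.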

Embodiment 2

[0085] Figure 2 is a schematic structural diagram of the lane line determination device provided in Embodiment 2 of the present invention. As shown in Figure 2, the device includes an image transformation module 21, a rough extraction module 22, a first recognition module 23, a second recognition module 24, and a lane line determination module 25.
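The five modules are applied in sequence, so the device can be pictured as an ordered pipeline. The sketch below wires placeholder stand-ins for modules 21 to 25 through a shared context, letting each module read any earlier output. The class, the stage names, and the data each stage consumes are hypothetical; in particular, paragraph [0089] is truncated, so the inputs shown for modules 24 and 25 are placeholders chosen only to complete the wiring.

```python
from typing import Any, Callable, Dict, List, Tuple

# A stage reads the shared context and returns the new entries it produces.
Stage = Callable[[Dict[str, Any]], Dict[str, Any]]


class LaneLineDevice:
    """Hypothetical wiring of modules 21-25 as an ordered pipeline."""

    def __init__(self, stages: List[Tuple[str, Stage]]) -> None:
        self.stages = stages

    def run(self, image: Any) -> Tuple[Dict[str, Any], List[str]]:
        ctx: Dict[str, Any] = {"image": image}
        trace: List[str] = []
        for name, stage in self.stages:
            ctx.update(stage(ctx))  # each module sees every earlier output
            trace.append(name)
        return ctx, trace


# Placeholder stages: each only records, as a string, what it would pass on.
device = LaneLineDevice([
    ("image_transformation_21", lambda c: {"bev": f"bev({c['image']})"}),
    ("rough_extraction_22", lambda c: {"rough": f"rough({c['bev']})"}),
    ("first_recognition_23", lambda c: {"result1": f"cnn1({c['rough']})"}),
    ("second_recognition_24", lambda c: {"result2": f"stage24({c['result1']})"}),
    ("lane_line_determination_25",
     lambda c: {"lanes": f"decide({c['result1']}, {c['result2']})"}),
])

ctx, trace = device.run("frame0")
print(trace)
```

Passing a shared context rather than fixed positional arguments keeps the wiring unchanged if a later module needs an earlier intermediate result, such as the back-projected image.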

[0086] The image transformation module 21 is used to perform inverse projection transformation on the captured image;

[0087] The rough extraction module 22 is used to extract, from the back-projected image data, roughly extracted image data containing the lane lines to be determined;
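The text does not say how the rough extraction is performed. One common approach, shown here purely as a hypothetical stand-in, exploits the fact that lane markings run roughly vertically in a bird's-eye view: threshold bright pixels, sum them per column, and crop a strip around each histogram peak. The threshold, strip width, and lane count below are assumed values.

```python
import numpy as np


def rough_extract(bev, intensity_thresh=200, strip_half_width=20, max_lanes=2):
    """Return (col_start, col_end, crop) strips around bright vertical structures."""
    mask = bev >= intensity_thresh
    # In a bird's-eye view lane markings run roughly vertically, so bright
    # pixels pile up in a few columns.
    hist = mask.sum(axis=0).astype(float)
    strips = []
    for _ in range(max_lanes):
        peak = int(np.argmax(hist))
        if hist[peak] == 0:
            break  # no bright pixels left
        lo = max(peak - strip_half_width, 0)
        hi = min(peak + strip_half_width + 1, bev.shape[1])
        strips.append((lo, hi, bev[:, lo:hi]))
        hist[lo:hi] = 0  # suppress this peak before searching for the next
    return sorted(strips, key=lambda s: s[0])


# Synthetic 100x200 bird's-eye image with two 4-pixel-wide vertical markings.
bev = np.zeros((100, 200), dtype=np.uint8)
bev[:, 50:54] = 255
bev[:, 150:154] = 255
strips = rough_extract(bev)
print([(lo, hi) for lo, hi, _ in strips])  # [(30, 71), (130, 171)]
```

Each crop would then serve as the roughly extracted image data passed to the first recognition stage; a fixed intensity threshold is the simplest choice and would struggle with exactly the shadows and occlusion the method aims to handle, which is why a learned recognizer follows.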

[0088] The first recognition module 23 is used to input the roughly extracted image data into a first convolutional neural network model for recognition, obtaining a first recognition result for the lane lines to be determined;

[0089] The second recognition module 24 is used to perform inverse projection and inverse tr...
