Coordination-model-based method for obtaining video abstract

A collaborative-model-based technique for obtaining video abstracts, applicable to special data processing applications, instruments, electrical digital data processing, and related fields. It addresses several problems: adjacent frames are not guaranteed to have similar sparse expression coefficients, which degrades the accuracy of the sparse expression, and the dispersion of video frames is ignored.

Embodiment Construction

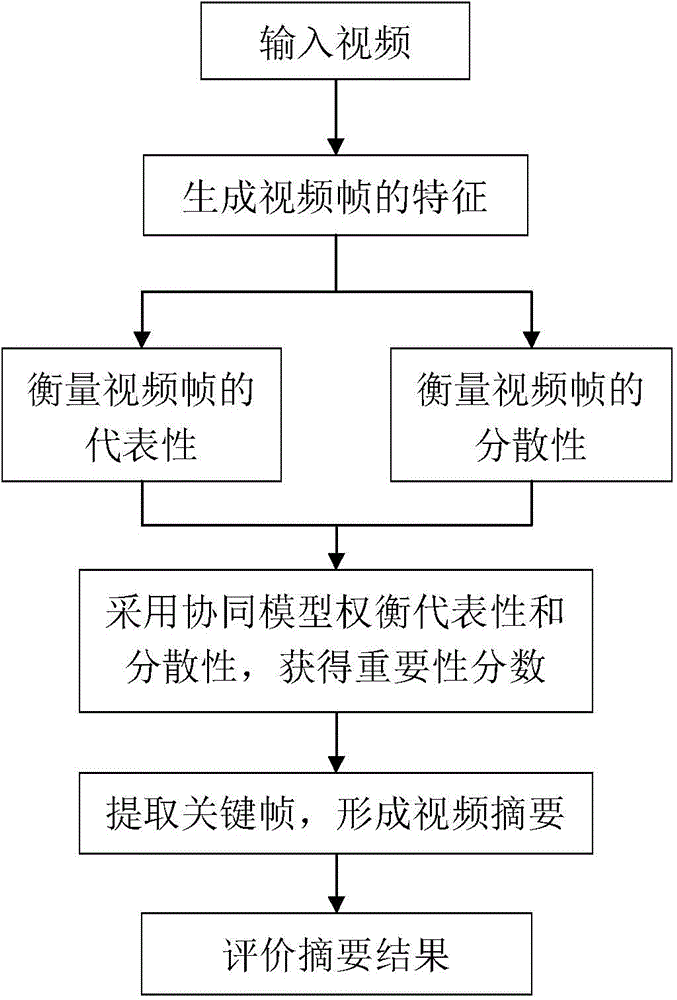

[0043] Referring to Figure 1, the steps of the present invention are as follows:

[0044] Step 1, generate feature representations for video frames.

[0045] (1a) Extract low-level image features frame by frame from the input video containing n frames to obtain the low-level feature set of the input video;

[0046] (1b) Apply the BoW (Bag-of-Words) model to this feature set to obtain the feature description vector x of each frame, thereby obtaining the feature expression matrix of the video, $X = [x_1, x_2, \ldots, x_n]$;
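Steps (1a) and (1b) can be illustrated with a minimal sketch. The patent text does not specify the low-level feature, the vocabulary size, or the clustering method, so everything below is an assumption: random vectors stand in for per-frame local descriptors, a plain k-means builds the visual vocabulary, and the hypothetical helper `bow_matrix` returns one normalised histogram per frame as a column of X.

```python
import numpy as np

def kmeans(points, k, iters=20, seed=0):
    """Plain Lloyd's k-means; returns a (k, d) array of cluster centres."""
    rng = np.random.default_rng(seed)
    centres = points[rng.choice(len(points), size=k, replace=False)].astype(float)
    for _ in range(iters):
        # Squared distance from every point to every centre.
        d2 = ((points[:, None, :] - centres[None, :, :]) ** 2).sum(axis=2)
        labels = d2.argmin(axis=1)
        for j in range(k):
            members = points[labels == j]
            if len(members):  # keep the old centre if a cluster empties
                centres[j] = members.mean(axis=0)
    return centres

def bow_matrix(frame_descriptors, k, seed=0):
    """Build X = [x_1, ..., x_n]: one L1-normalised k-bin histogram per frame."""
    vocab = kmeans(np.vstack(frame_descriptors), k, seed=seed)
    columns = []
    for desc in frame_descriptors:
        d2 = ((desc[:, None, :] - vocab[None, :, :]) ** 2).sum(axis=2)
        hist = np.bincount(d2.argmin(axis=1), minlength=k).astype(float)
        columns.append(hist / hist.sum())
    return np.stack(columns, axis=1)  # shape (k, n)

# Toy input (assumed): 5 "frames", each with 30 random 8-dimensional descriptors.
rng = np.random.default_rng(1)
frames = [rng.normal(size=(30, 8)) for _ in range(5)]
X = bow_matrix(frames, k=4)
```

Each column of `X` plays the role of a frame's feature description vector x; in practice the random descriptors would be replaced by whatever low-level feature step (1a) extracts.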

[0047] Step 2, comprehensively evaluate the importance of video frames through the collaborative model.

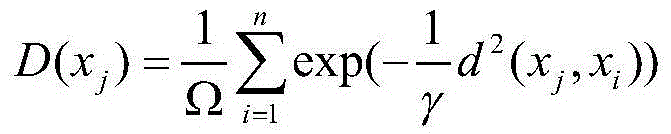

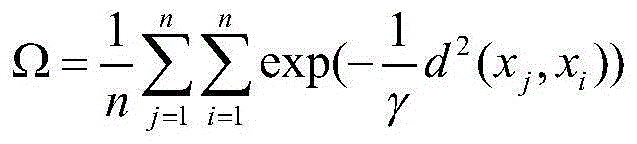

[0048] (2a) Perform dictionary learning on the obtained video feature expression matrix, and measure the expressivity of each video frame by computing its reconstruction error under the sparse expression coefficients. A frame with a smaller reconstruction error has better expressivity. In order to achieve...
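The reconstruction-error idea in (2a) can be sketched as follows. The patent's actual objective is cut off above, so this is not its formulation: the sketch assumes a fixed, already-learned dictionary `D` and a generic L1-regularised least-squares (lasso) coding step solved by iterative soft-thresholding (ISTA). The names `ista_codes`, `reconstruction_errors`, and the parameter `lam` are hypothetical.

```python
import numpy as np

def ista_codes(X, D, lam=0.1, iters=200):
    """Minimise 0.5*||X - D A||_F^2 + lam*||A||_1 over A by ISTA."""
    step = 1.0 / (np.linalg.norm(D, 2) ** 2)  # 1 / Lipschitz constant of the gradient
    A = np.zeros((D.shape[1], X.shape[1]))
    for _ in range(iters):
        Z = A - step * (D.T @ (D @ A - X))                        # gradient step
        A = np.sign(Z) * np.maximum(np.abs(Z) - step * lam, 0.0)  # soft threshold
    return A

def reconstruction_errors(X, D, lam=0.1):
    """Return the per-frame error ||x_i - D a_i||_2 and the sparse codes A."""
    A = ista_codes(X, D, lam)
    return np.linalg.norm(X - D @ A, axis=0), A

# Toy data (assumed): 10 unit-norm atoms in 20 dimensions.
rng = np.random.default_rng(0)
D = rng.normal(size=(20, 10))
D /= np.linalg.norm(D, axis=0)

# Frame 0 is exactly one atom; the last frame is orthogonal to every atom.
outlier = rng.normal(size=20)
outlier -= D @ np.linalg.lstsq(D, outlier, rcond=None)[0]
outlier /= np.linalg.norm(outlier)
X = np.column_stack([D[:, 0], outlier])

errors, A = reconstruction_errors(X, D)
```

In this toy setup the frame that the dictionary can express gets a small error, while the frame orthogonal to all atoms keeps an error close to its full norm, which matches the statement that a smaller reconstruction error indicates better expressivity.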
