A Video Stabilization Method Based on Local and Global Motion Disparity Compensation

A video stabilization technique based on motion disparity compensation, applicable to televisions, color televisions, and color television components, that addresses the problems of a low stabilization rate and high content loss.

Detailed Description of the Embodiment

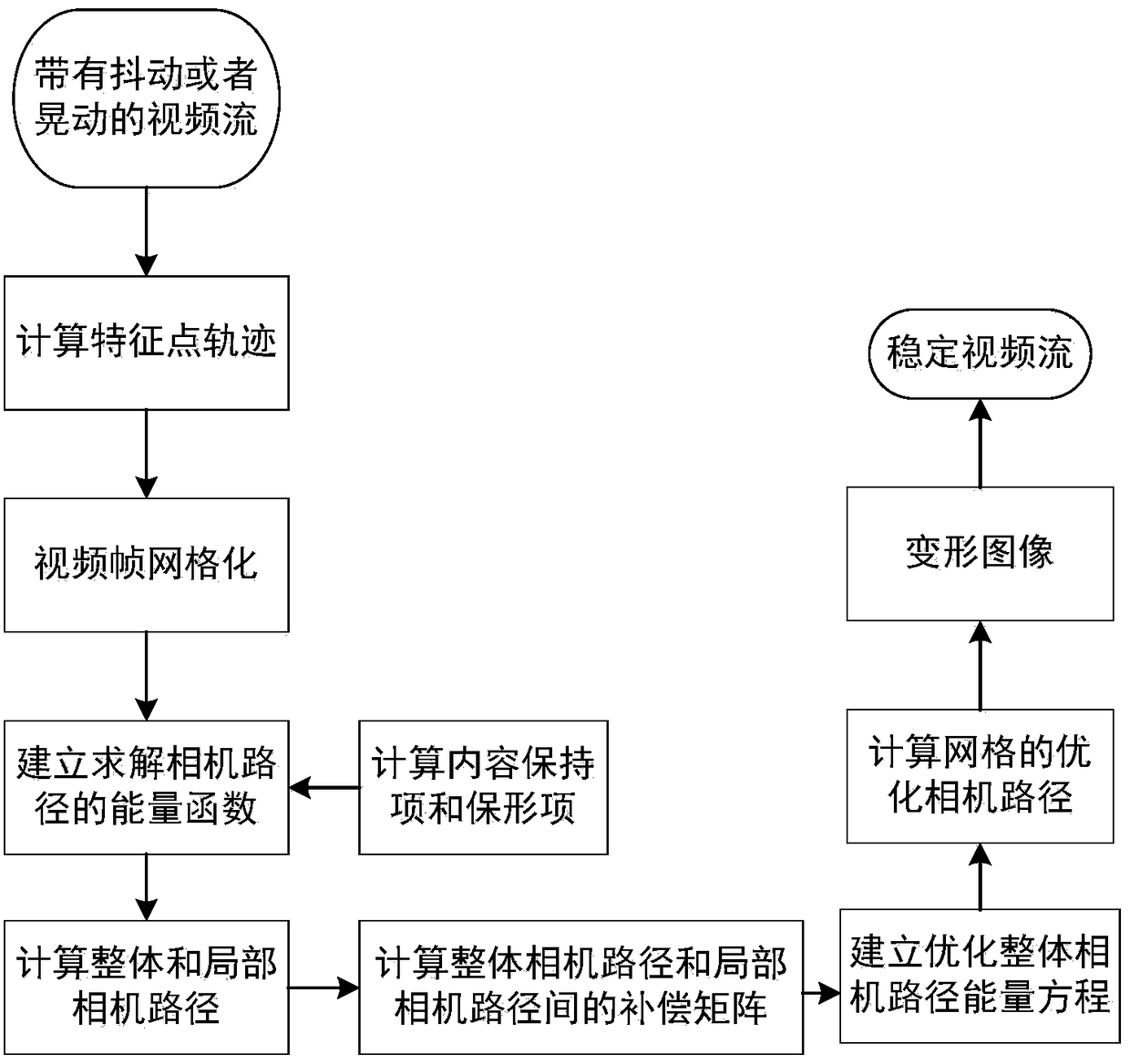

[0095] An embodiment of the method of the present invention is described in detail below with reference to Figure 1.

[0096] In this video stabilization method based on local and global motion disparity compensation, the specific steps are Steps 1 to 11 as described in the main body of the invention.

[0097] In Step 1, the feature points p are detected with the feature point detection function GoodFeaturesToTrack() of the OpenCV computer vision library, and the feature points are then tracked with the OpenCV feature point tracking function calcOpticalFlowPyrLK(). In this embodiment, the number of video frames N is 387.

[0098] In Step 2, this embodiment divides each video frame t (where t ranges from 1 to 387) into an 8 × 8 grid of 64 cells; that is, M = 64 and D = 8. With 8 grid cells in each row, there are 9 grid corners in a ro...
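The grid partition in Step 2 can be illustrated in plain Python. This is a minimal sketch assuming equal-sized cells; the helpers `grid_vertices` and `cell_of` are hypothetical, and mapping a feature point to its cell is an illustrative addition, since the excerpt is truncated before it describes how the grid is used.

```python
def grid_vertices(width, height, d=8):
    """Return the (d + 1) x (d + 1) grid-corner coordinates of a width x height frame.

    Assumes equal-sized cells (an assumption; the excerpt does not say).
    """
    xs = [width * i / d for i in range(d + 1)]
    ys = [height * j / d for j in range(d + 1)]
    return [[(x, y) for x in xs] for y in ys]


def cell_of(point, width, height, d=8):
    """Return the (row, col) index of the grid cell containing point (x, y).

    Hypothetical helper, shown only to illustrate assigning a feature
    point to one of the M = d * d cells.
    """
    x, y = point
    col = min(int(x * d / width), d - 1)   # clamp points on the right edge
    row = min(int(y * d / height), d - 1)  # clamp points on the bottom edge
    return row, col
```

With D = 8, each row of cells has 9 corners, so a frame has 9 × 9 = 81 grid corners bounding the M = 64 cells.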
