Video object segmentation method based on self-paced weak supervised learning

A video object segmentation technology based on weak supervision, applied in image analysis, image data processing, instruments, etc. It addresses problems such as limited system performance, reliance on expert knowledge and personal experience, and uncertain quantity and quality, and achieves high segmentation accuracy and good robustness.

Embodiment Construction

[0035] The present invention is further described below with reference to the accompanying drawings and embodiments; the present invention includes but is not limited to the following embodiments.

[0036] The hardware environment used for implementation is an Intel Xeon E5-2600 v3 @ 2.6 GHz 8-core CPU with 64 GB of memory and a GeForce GTX TITAN X GPU. The software environment is a Linux 14.04 64-bit operating system. The proposed method is implemented in MATLAB R2015a.

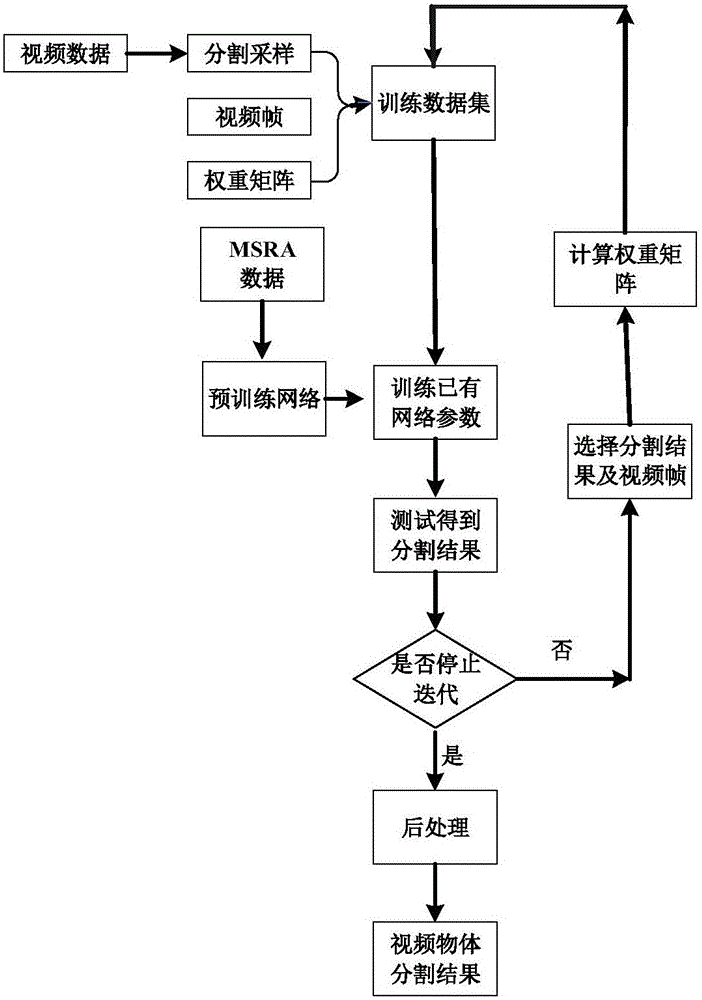

[0037] Referring to the method flowchart in Figure 1, the present invention is implemented as follows:

[0038] 1. Construct a deep neural network and pre-train it. The Loss parameter of the last layer of the deep neural network proposed by Nian Liu et al. in 2015 (Predicting eye fixations using convolutional neural networks [C]. Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2015: 362-370) is set to "HingeLoss"...
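The text above only names the replacement loss ("HingeLoss") without giving its formula. As a rough illustration, the following is a minimal sketch of a one-vs-all multi-class hinge loss in the form documented for Caffe's HingeLoss layer, E = (1/N) Σ_n Σ_k max(0, 1 - δ(l_n = k) t_nk)^p, where δ is +1 for the true class and -1 otherwise. Whether the patent's network uses exactly this form, and the function name `hinge_loss` and its parameters, are assumptions made for illustration.

```python
def hinge_loss(scores, labels, p=1):
    """One-vs-all multi-class hinge loss, averaged over the batch.

    Hypothetical helper (not from the patent).
    scores: list of N rows, each a list of K raw class scores t_nk.
    labels: list of N integer class indices l_n.
    p: 1 for the L1 hinge, 2 for the squared (L2) hinge.
    """
    n = len(scores)
    if n == 0:
        raise ValueError("empty batch")
    total = 0.0
    for row, label in zip(scores, labels):
        for k, t in enumerate(row):
            # delta = +1 for the true class, -1 for every other class
            delta = 1.0 if k == label else -1.0
            # penalize scores that fall inside the unit margin
            total += max(0.0, 1.0 - delta * t) ** p
    return total / n


# Two samples, two classes: sample 0 is confidently correct (zero loss),
# sample 1 scores both classes at 0.5 and is penalized on both.
print(hinge_loss([[2.0, -1.0], [0.5, 0.5]], [0, 1]))       # 1.0
print(hinge_loss([[2.0, -1.0], [0.5, 0.5]], [0, 1], p=2))  # 1.25
```

With p=1 every margin violation contributes linearly; p=2 squares the violations, so large margin errors dominate the loss.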
