Shuffle data caching method based on mapping-reduction calculation model

A shuffle data caching technique for the map-reduce computing model, classified under fault handling not based on redundancy, computing, and electrical digital data processing. It addresses problems such as degraded performance of the computation itself and failure to make full use of hardware features, with the effects of improving robustness and avoiding manually set checkpoints.
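To make the robustness claim concrete: in a default Spark-style shuffle, map output is stored on the worker that produced it, so losing that worker forces the affected map tasks to be recomputed, and long lineages are typically cut by manually calling `RDD.checkpoint()`. The Python model below is an illustrative assumption, not the patented method. It reports which map tasks must be rerun after a worker failure, depending on whether shuffle blocks also live in a separate cache. The function `maps_to_rerun` and the block-ID layout are hypothetical.

```python
from typing import Dict, List, Set, Tuple

# Hypothetical shuffle block identifier: (shuffle_id, map_id, reduce_id).
BlockId = Tuple[int, int, int]


def maps_to_rerun(block_location: Dict[BlockId, str],
                  failed_worker: str,
                  cached_blocks: Set[BlockId]) -> List[int]:
    """Return the map task IDs that must be recomputed after a worker fails.

    A block is lost only if it was stored on the failed worker and no copy
    exists in the separate shuffle cache.
    """
    lost_maps = {
        block_id[1]  # the map_id component
        for block_id, worker in block_location.items()
        if worker == failed_worker and block_id not in cached_blocks
    }
    return sorted(lost_maps)


# Map task 0 ran on worker "A" and map task 1 on worker "B";
# each wrote one block for each of two reducers.
location: Dict[BlockId, str] = {
    (0, 0, 0): "A", (0, 0, 1): "A",
    (0, 1, 0): "B", (0, 1, 1): "B",
}

print(maps_to_rerun(location, "B", cached_blocks=set()))          # [1]
print(maps_to_rerun(location, "B", cached_blocks=set(location)))  # []
```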

Detailed Description of the Embodiments

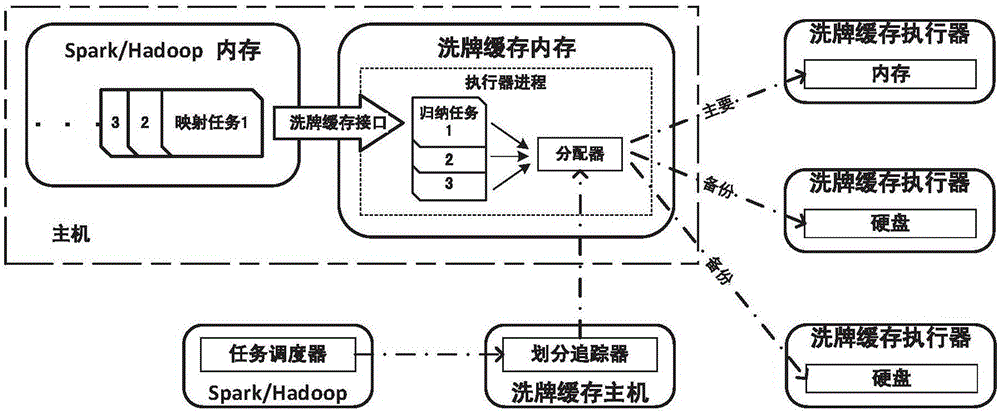

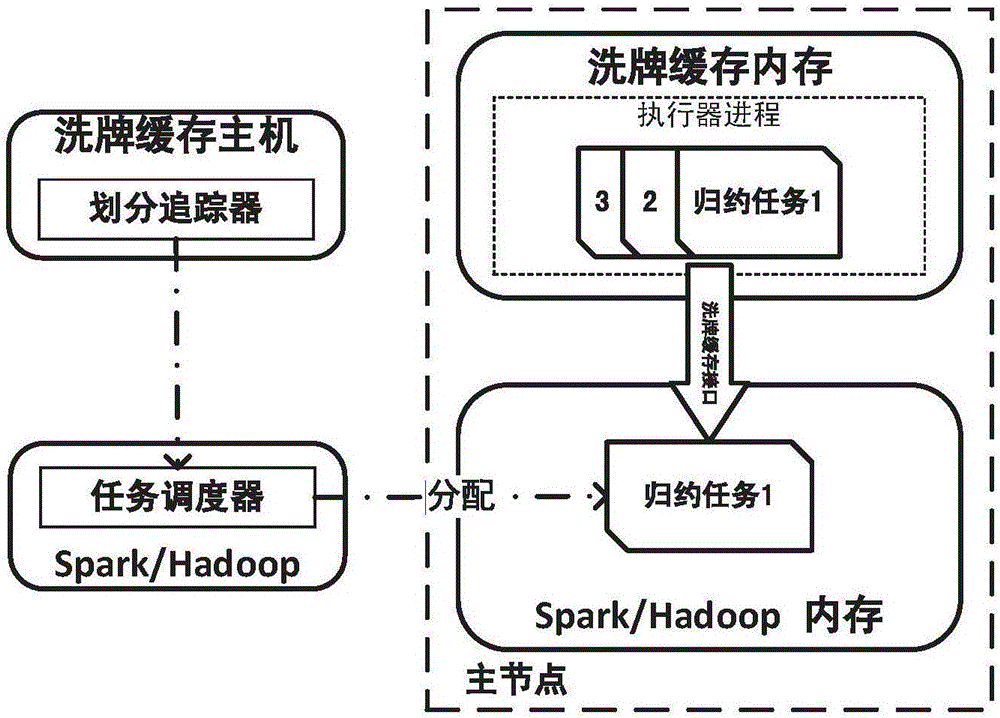

[0043] Embodiments of the present invention are described in detail below with reference to the accompanying drawings. This embodiment is implemented on the basis of the technical solution and algorithm of the present invention and gives a detailed implementation and specific operating procedure, but the applicable platforms are not limited to the following embodiment. The operating platform of this example is a small cluster of two ordinary servers, each running Ubuntu Server 14.04.1 LTS (64-bit) with 8 GB of memory. The implementation is illustrated using the source code of Apache Spark 1.6; other map-reduce distributed computing frameworks, such as Hadoop, are also applicable. First, the Spark source code must be modified so that shuffle data is transmitted through the interface provided by this method.
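The paragraph does not specify what that interface looks like. As a rough illustration only, the sketch below assumes a simple block store keyed by `(shuffle_id, map_id, reduce_id)`, similar to how Spark names shuffle blocks: a map task hash-partitions its output and pushes one block per reducer into the cache, and a reduce task pulls its block from every map task. The names `ShuffleCache`, `write_map_output`, and `read_reduce_input` are hypothetical, and the in-memory dictionary merely stands in for the distributed cache system; this is not the patent's or Spark's actual API.

```python
from collections import defaultdict
from typing import Any, Dict, Hashable, List, Tuple

BlockId = Tuple[int, int, int]        # hypothetical: (shuffle_id, map_id, reduce_id)
Record = Tuple[Hashable, Any]         # a (key, value) pair


class ShuffleCache:
    """Hypothetical in-memory stand-in for the distributed cache system."""

    def __init__(self) -> None:
        self._blocks: Dict[BlockId, List[Record]] = {}

    def put(self, block_id: BlockId, records: List[Record]) -> None:
        self._blocks[block_id] = list(records)

    def get(self, block_id: BlockId) -> List[Record]:
        # A missing block is treated as empty rather than as an error.
        return self._blocks.get(block_id, [])


def write_map_output(cache: ShuffleCache, shuffle_id: int, map_id: int,
                     records: List[Record], num_reducers: int) -> None:
    """Map side: hash-partition records and push one block per reducer."""
    buckets: Dict[int, List[Record]] = defaultdict(list)
    for key, value in records:
        buckets[hash(key) % num_reducers].append((key, value))
    for reduce_id in range(num_reducers):
        cache.put((shuffle_id, map_id, reduce_id), buckets[reduce_id])


def read_reduce_input(cache: ShuffleCache, shuffle_id: int, reduce_id: int,
                      num_maps: int) -> List[Record]:
    """Reduce side: fetch this reducer's block from every map task, in map order."""
    out: List[Record] = []
    for map_id in range(num_maps):
        out.extend(cache.get((shuffle_id, map_id, reduce_id)))
    return out


if __name__ == "__main__":
    cache = ShuffleCache()
    write_map_output(cache, 0, 0, [(1, "a"), (2, "b")], num_reducers=2)
    write_map_output(cache, 0, 1, [(3, "c"), (4, "d")], num_reducers=2)
    print(read_reduce_input(cache, 0, 0, num_maps=2))  # [(2, 'b'), (4, 'd')]
```

Integer keys are used because CPython's `hash` of a small int is the int itself, which keeps the partitioning deterministic; string hashing is randomized per process, so a real implementation would need a stable hash function.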

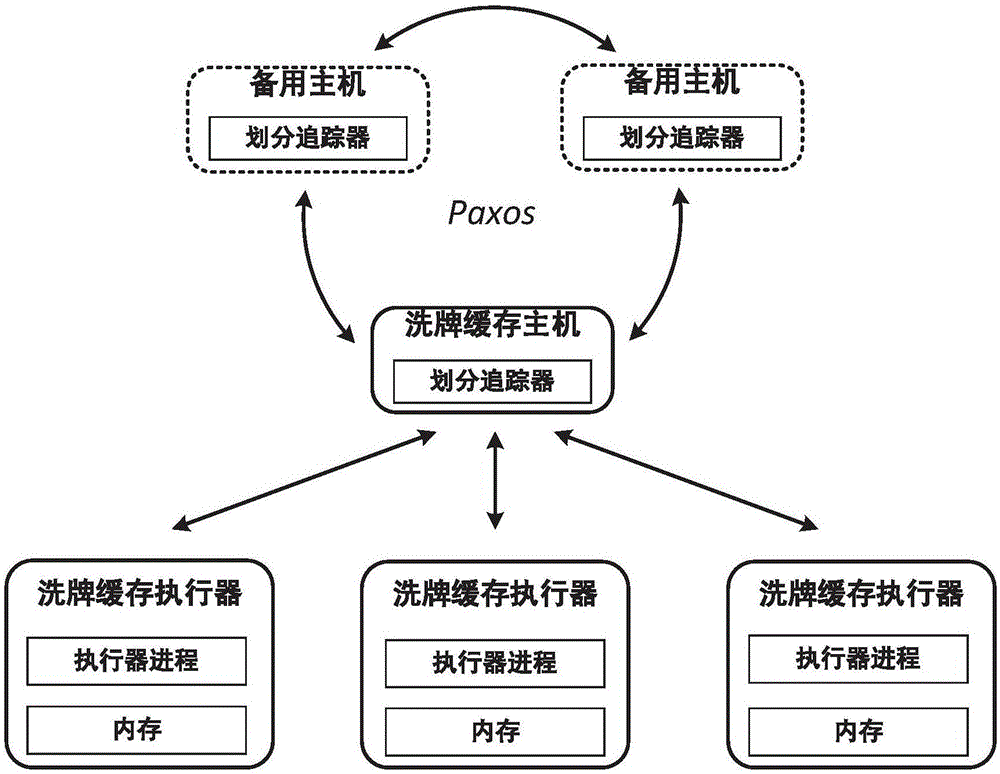

[0044] The present invention deploys a cache system in a distributed co...