Perspective navigation map system, generation method and positioning method

A navigation map and map library technology, applied to perspective navigation map systems, to the generation of perspective navigation maps, and to positioning with such maps. It addresses the problems of excessive radiation exposure, time-consuming procedures, and wasted medical resources, with the effects of reducing the radiation exposure dose, improving operating efficiency, and shortening operation time.

Examples

Embodiment 1

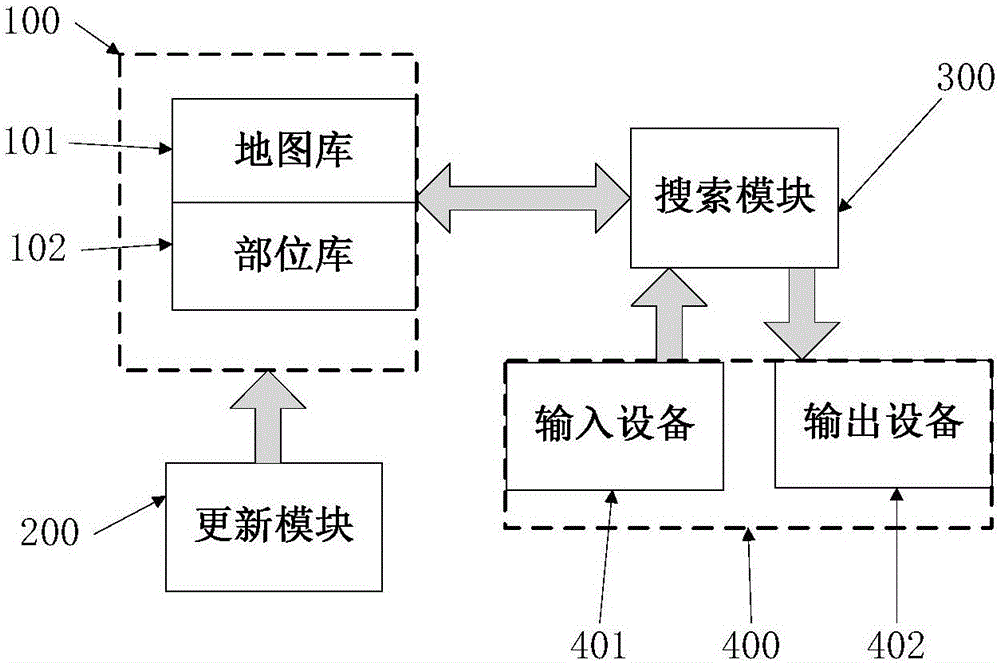

[0042] As shown in Figure 1, the embodiment of the present invention provides a perspective navigation map system, which includes a storage module 100, an update module 200, a search module 300 and a human-computer interaction module 400.

[0043] The storage module 100 is used for storing the information of the patient's fluoroscopic images to form a fluoroscopic navigation map. It includes a map library 101 and a part library 102 .

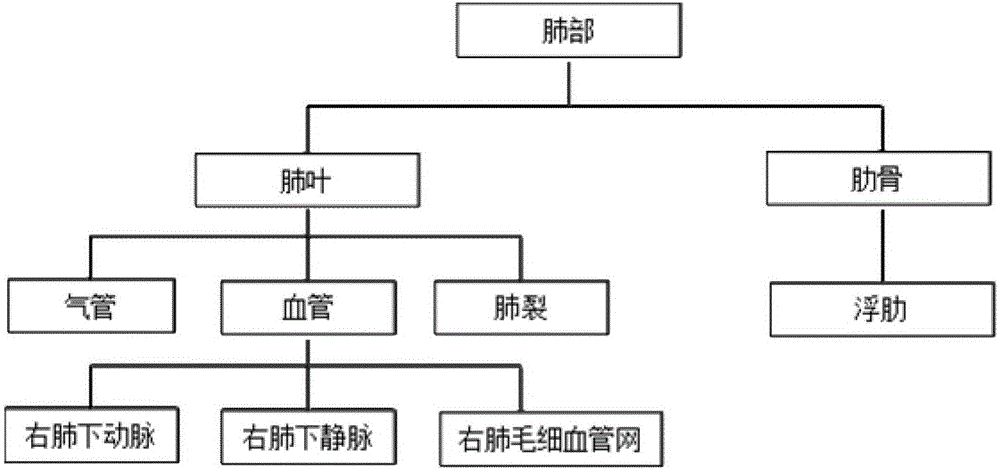

[0044] During clinical interventional operations, each anatomical structure of the human body corresponds to a different position of the fluoroscopy platform. The map library 101 is divided into multiple regions according to the fluoroscopy platform, and the anatomical objects in each region are determined by the human anatomical structures that correspond to that region. Each anatomical object is a tree structure organized according to human cognition and has its own root unit. Each root unit can contain multiple levels of subunits. T...
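The region, anatomical object, root unit and subunit hierarchy described above can be pictured with a small tree. The following is only a minimal sketch under stated assumptions: the class names `Unit` and `AnatomicalObject`, the `map_library` dictionary, the region key `"chest"` and the anatomical names are all hypothetical illustrations, not taken from the patent.

```python
from dataclasses import dataclass, field
from typing import Dict, Iterator, List, Tuple


@dataclass
class Unit:
    """One node of an anatomical object's tree (a root unit or a subunit)."""
    name: str
    children: List["Unit"] = field(default_factory=list)

    def add(self, child: "Unit") -> "Unit":
        """Attach a subunit and return it so deeper levels can be chained."""
        self.children.append(child)
        return child

    def walk(self, depth: int = 0) -> Iterator[Tuple[int, "Unit"]]:
        """Yield (depth, unit) pairs in pre-order."""
        yield depth, self
        for child in self.children:
            yield from child.walk(depth + 1)


@dataclass
class AnatomicalObject:
    """An anatomical object, represented by its root unit."""
    name: str
    root: Unit


# Hypothetical map library: platform region -> anatomical objects in that region.
map_library: Dict[str, List[AnatomicalObject]] = {}

# Illustrative content only; the names are assumptions, not from the source.
heart_root = Unit("heart")
coronary = heart_root.add(Unit("coronary arteries"))
coronary.add(Unit("left coronary artery"))
coronary.add(Unit("right coronary artery"))
map_library["chest"] = [AnatomicalObject("heart", heart_root)]

for depth, unit in heart_root.walk():
    print("  " * depth + unit.name)
```

Keeping each anatomical object as its own tree means a region can be browsed from the root unit down, level by level, which matches the "multiple levels of subunits" wording.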

Embodiment 2

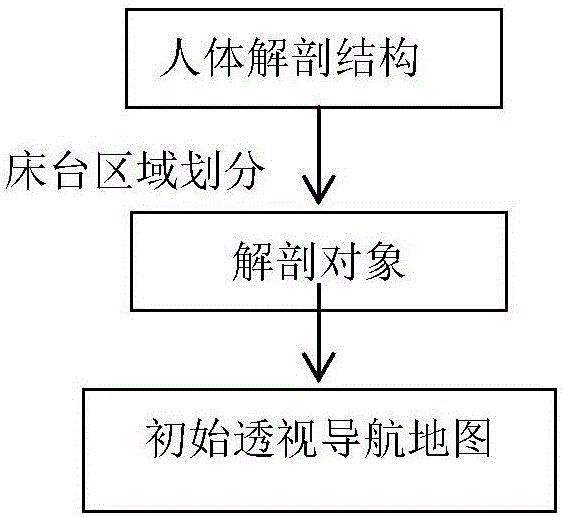

[0053] An embodiment of the present invention provides a method for generating a perspective navigation map, which includes: generation of an initial perspective navigation map and instantiation of anatomical objects.

[0054] As shown in Figure 3, the anatomical structure of the human body corresponds to different positions of the fluoroscopy platform. The storage module is divided into multiple regions according to the fluoroscopy platform. Each region includes anatomical objects determined according to the anatomical structure, and each anatomical object includes a root unit. The root unit contains subunits, forming the initial perspective navigation map.

[0055] The initial perspective navigation map consists of a number of initial anatomical objects. The various anatomical structures inside the initial anatomical object are abstracted when the initial perspective navigation map is formed, and they have no definite position coordinates, but only the topologi...
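One way to read the distinction between an initial anatomical object and an instantiated one is that the initial tree stores only parent/child relations, while instantiation attaches coordinates to its units. The sketch below assumes exactly that; the source text is truncated here, so the `position` field, the `(x, y)` tuple format, the `instantiate` helper and the unit names are hypothetical and do not describe the patent's actual procedure.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple


@dataclass
class Unit:
    """Tree node; position stays None until the unit is instantiated."""
    name: str
    children: List["Unit"] = field(default_factory=list)
    # Assumption: a platform position is an (x, y) pair. None means the
    # unit is still abstract and only its place in the topology is known.
    position: Optional[Tuple[float, float]] = None

    def find(self, name: str) -> Optional["Unit"]:
        """Depth-first search for a unit by name."""
        if self.name == name:
            return self
        for child in self.children:
            hit = child.find(name)
            if hit is not None:
                return hit
        return None


def build_initial_object() -> Unit:
    """Initial anatomical object: topology only, no coordinates."""
    return Unit("root unit", [
        Unit("subunit A", [Unit("subunit A1")]),
        Unit("subunit B"),
    ])


def instantiate(root: Unit, name: str, position: Tuple[float, float]) -> bool:
    """Attach a concrete position to one unit; return False if not found."""
    unit = root.find(name)
    if unit is None:
        return False
    unit.position = position
    return True


initial = build_initial_object()
instantiate(initial, "subunit A1", (120.0, 45.5))
print(initial.find("subunit A1").position)
```

Units that have not been instantiated keep `position = None`, so the same tree can hold abstract and patient-specific entries side by side.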

Embodiment 3

[0066] As shown in Figure 6, in actual use, positioning with the perspective navigation map includes searching for information on anatomical objects. The steps are as follows:

[0067] A. The information of the object to be located is input through the human-computer interaction module, which passes it to the search module;

[0068] B. The search module searches the storage module according to the information of the object to be located and obtains the position information of that object;

[0069] C. The search module transmits the position information of the object to the human-computer interaction module;

[0070] D. The human-computer interaction module outputs the position information of the object.
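Steps A to D amount to a request passing from the human-computer interaction module through the search module to the storage module and back. The sketch below illustrates that flow only. The class and method names, the name-based lookup, the `(x, y)` position format and the sample entry are assumptions made for illustration and are not specified in the source.

```python
from typing import Dict, Optional, Tuple

Position = Tuple[float, float]  # assumed format of a platform position


class StorageModule:
    """Holds stored positions keyed by normalized object name."""

    def __init__(self) -> None:
        self._positions: Dict[str, Position] = {}

    def store(self, name: str, position: Position) -> None:
        self._positions[name.strip().lower()] = position

    def lookup(self, name: str) -> Optional[Position]:
        return self._positions.get(name.strip().lower())


class SearchModule:
    """Step B: searches the storage module for the requested object."""

    def __init__(self, storage: StorageModule) -> None:
        self._storage = storage

    def search(self, query: str) -> Optional[Position]:
        return self._storage.lookup(query)


class HumanComputerInteractionModule:
    """Steps A and D: accepts the query and reports the result."""

    def __init__(self, search: SearchModule) -> None:
        self._search = search

    def locate(self, query: str) -> str:
        # Step A: pass the query on; steps B and C happen inside search().
        position = self._search.search(query)
        # Step D: output the position information (or its absence).
        if position is None:
            return f"{query}: no stored position"
        return f"{query}: platform position {position}"


storage = StorageModule()
storage.store("left coronary artery", (10.0, 20.0))  # illustrative entry
hci = HumanComputerInteractionModule(SearchModule(storage))
print(hci.locate("Left Coronary Artery"))
```

Keeping the search module between the interface and the storage module mirrors the module split in the text, so the lookup strategy could change without touching the input or output side.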

[0071] In step A of this embodiment, the information of the object to be located may be input thr...
