Virtual assembling method and device

A virtual assembly and assembly operation technology applied in the field of computer vision. It addresses the limited assembly effect, hazardous operation, and difficulty of wide application found in existing approaches, and aims to improve assembly efficiency and assembly effect, enhance the sense of interaction, and be widely applicable.

Examples

Embodiment 1

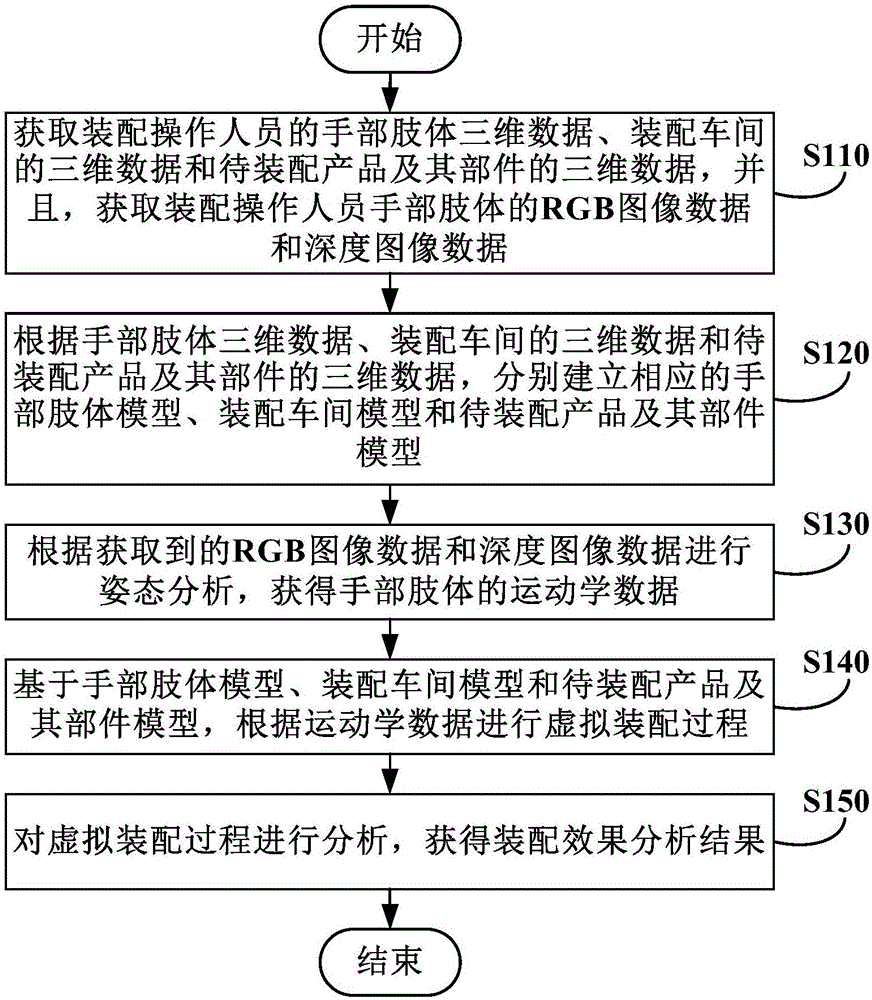

[0031] Figure 1 is a flow chart showing the virtual assembly method according to Embodiment 1 of the present invention. The method can be performed by the apparatus shown in Figure 3.

[0032] Referring to Figure 1, in step S110, the 3D data of the hands and limbs of the assembly operator, the 3D data of the assembly workshop, and the 3D data of the product to be assembled and its parts are acquired, together with the RGB image data and depth image data of the hands and limbs of the assembly operator.

[0033] Specifically, a somatosensory interaction device such as Kinect can be used to collect information on the arm of the assembly operator, the assembly workshop, and the product to be assembled and its components, and to complete the input of 3D data, providing a data source for subsequent modeling and for the production of teaching materials. At the same time, the Kinect somatosensory device is used to obtain the RGB image and depth image data of the assembly operator, which provides a da...
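As an illustration of how depth image data like that captured in step S110 can yield 3D data, the following minimal sketch back-projects a depth image into 3D points using the standard pinhole camera model. The source does not state how the conversion is done, so this is an assumption rather than the patented method; the function name `depth_to_points`, the millimetre depth unit, and the intrinsic parameters `fx`, `fy`, `cx`, `cy` are hypothetical and would come from the actual sensor calibration.

```python
from typing import List, Sequence, Tuple

Point3D = Tuple[float, float, float]


def depth_to_points(
    depth_mm: Sequence[Sequence[float]],
    fx: float,
    fy: float,
    cx: float,
    cy: float,
) -> List[Point3D]:
    """Back-project a depth image into camera-space 3D points (metres).

    Assumptions (not from the source): depth is given in millimetres,
    a value <= 0 marks an invalid pixel, and (fx, fy, cx, cy) are the
    pinhole intrinsics of the depth camera in pixel units.
    """
    points: List[Point3D] = []
    for v, row in enumerate(depth_mm):        # v: pixel row
        for u, d in enumerate(row):           # u: pixel column
            if d <= 0:
                continue                      # skip pixels with no depth reading
            z = d / 1000.0                    # millimetres -> metres
            x = (u - cx) * z / fx             # pinhole model: X = (u - cx) * Z / fx
            y = (v - cy) * z / fy             # pinhole model: Y = (v - cy) * Z / fy
            points.append((x, y, z))
    return points
```

Each valid depth pixel becomes one 3D point; a point set of this kind is one plausible input for building the hand, limb, workshop, and part models in later steps.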

Embodiment 2

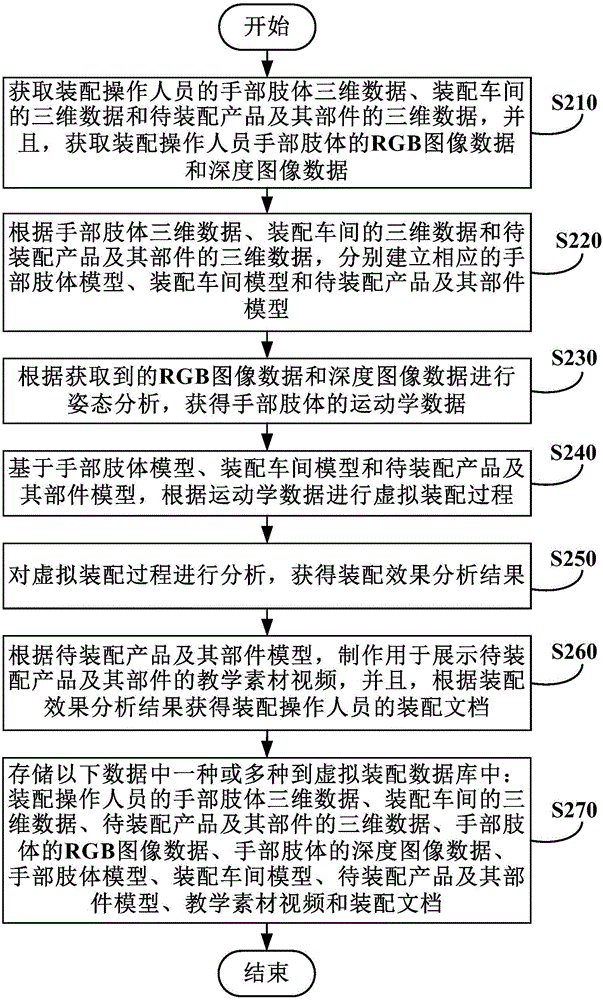

[0050] Figure 2 is a flow chart illustrating a virtual assembly method according to Embodiment 2 of the present invention, which can be regarded as a specific implementation of Figure 1. The method can be performed by the apparatus shown in Figure 4.

[0051] Referring to Figure 2, in step S210, the 3D data of the hands and limbs of the assembly operator, the 3D data of the assembly workshop, and the 3D data of the product to be assembled and its parts are acquired, together with the RGB image data and depth image data of the hands and limbs of the assembly operator.

[0052] According to an exemplary embodiment of the present invention, acquiring the 3D data of the hands and limbs of the assembly operator, the 3D data of the assembly workshop, and the 3D data of the product to be assembled and its parts in step S210 may include: acquiring the three-dimensional data of the assembly operator through the Kinect somatosens...

Embodiment 3

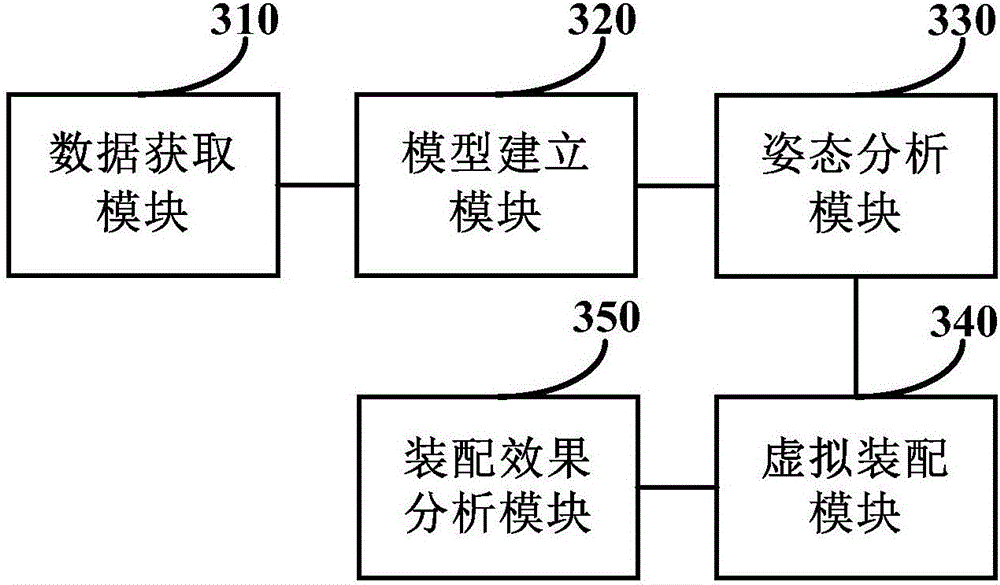

[0072] Based on the same technical idea, Figure 3 is a logic block diagram showing the virtual assembly device according to Embodiment 3 of the present invention. The device can be used to execute the virtual assembly method described in Embodiment 1.

[0073] Referring to Figure 3, the virtual assembly device includes a data acquisition module 310, a model building module 320, a posture analysis module 330, a virtual assembly module 340, and an assembly effect analysis module 350.

[0074] The data acquisition module 310 is used to acquire the 3D data of the hands and limbs of the assembly operator, the 3D data of the assembly workshop, and the 3D data of the product to be assembled and its parts, and to acquire the RGB image data and depth image data of the hands and limbs of the assembly operator.

[0075] The model building module 320 is used to respectively establish corresponding hand and limb models, asse...
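The module structure of the device can be sketched as a simple ordered pipeline. The sketch below only mirrors the five module names and reference numerals listed in paragraph [0073]; the class `VirtualAssemblyDevice`, the shared-dictionary interface, the strictly sequential data flow, and all placeholder outputs are assumptions for illustration, since the source text available here does not specify the interfaces between modules.

```python
from typing import Any, Callable, Dict, List, Optional, Tuple

Context = Dict[str, Any]
Module = Callable[[Context], Context]


class VirtualAssemblyDevice:
    """Runs registered modules in order, merging each output into a shared context."""

    def __init__(self) -> None:
        self._modules: List[Tuple[int, str, Module]] = []

    def register(self, ref: int, name: str, module: Module) -> None:
        # ref is the reference numeral used in the description (e.g. 310)
        self._modules.append((ref, name, module))

    def run(self, context: Optional[Context] = None) -> Context:
        ctx: Context = dict(context or {})
        trace: List[int] = []
        for ref, _name, module in self._modules:
            ctx.update(module(ctx))   # each module reads earlier results, adds its own
            trace.append(ref)
        ctx["trace"] = trace          # record execution order for inspection
        return ctx


def build_placeholder_device() -> VirtualAssemblyDevice:
    """Wire up the five named modules with hypothetical placeholder behaviour."""
    device = VirtualAssemblyDevice()
    device.register(310, "data_acquisition",
                    lambda ctx: {"raw_data": "3D + RGB + depth"})
    device.register(320, "model_building",
                    lambda ctx: {"models": f"models from {ctx['raw_data']}"})
    device.register(330, "posture_analysis", lambda ctx: {"posture": "placeholder"})
    device.register(340, "virtual_assembly", lambda ctx: {"assembly": "placeholder"})
    device.register(350, "assembly_effect_analysis", lambda ctx: {"effect": "placeholder"})
    return device
```

Calling `build_placeholder_device().run()` returns the merged context, including a `trace` list of reference numerals in the order the modules ran. Keeping each module behind a plain callable makes it straightforward to swap a placeholder for a real implementation without touching the others.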
