Hand gesture identification method based on Leap Motion and Kinect

A gesture recognition technology, applied in the field of human-computer interaction, that addresses insufficient gesture recognition accuracy and achieves low cost, improved accuracy, and high precision.

Embodiment

[0045] For convenience of description, the relevant technical terms used in this specific embodiment are first explained:

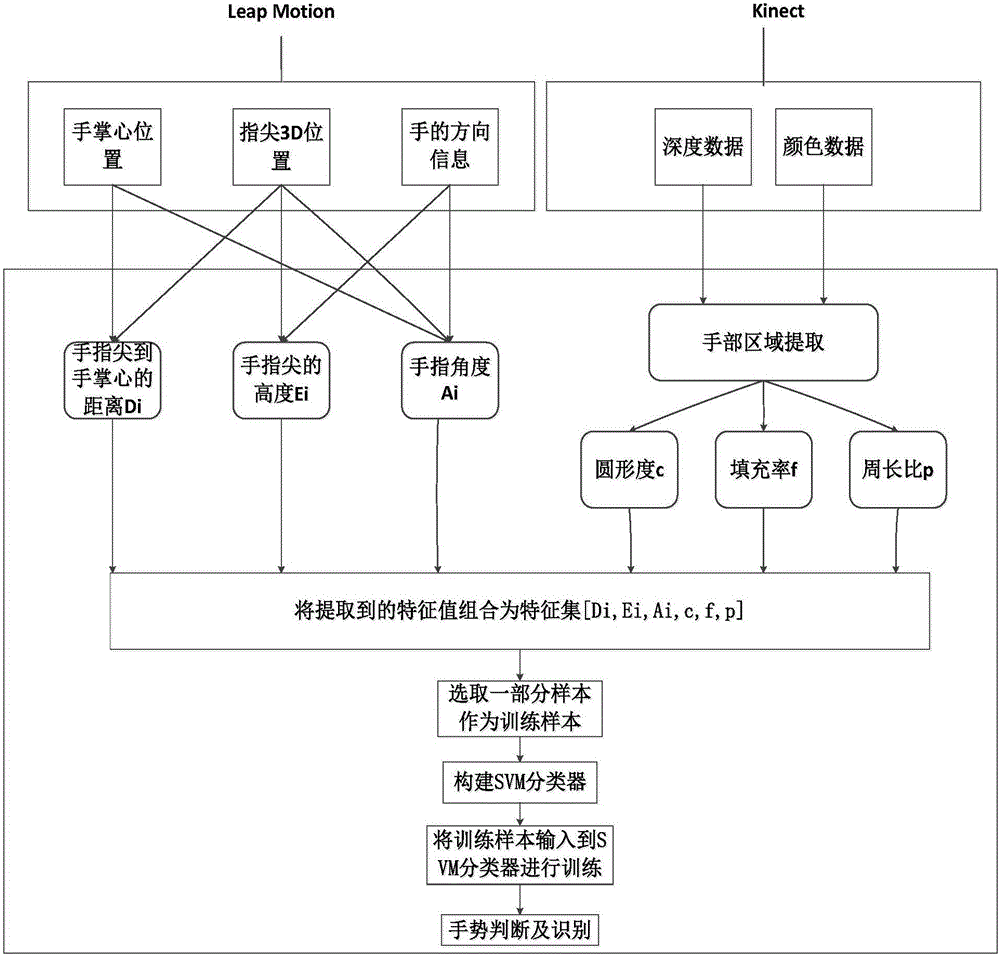

[0046] Figure 1 is a flow chart of the gesture recognition method based on Leap Motion and Kinect according to the present invention.

[0047] In this example, the hardware is first connected as shown in Figure 3: the two front-end devices, the Leap Motion and the Kinect, are connected directly to the PC with data cables.

[0048] After the hardware connection is complete, the gesture recognition method based on Leap Motion and Kinect of the present invention is described in detail with reference to Figure 1. It specifically comprises the following steps:

[0049] (1) Use the Leap Motion sensor to obtain the coordinates of the relevant points of the hand and the gesture feature information of the hand;
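Step (1) does not say, in this excerpt, which gesture features are computed from the hand's point coordinates. As a hedged illustration only, the Python sketch below assumes the sensor yields a palm center and five fingertip positions per frame (the `HandFrame` record and `gesture_features` function are hypothetical, not Leap Motion SDK types) and derives two commonly used kinds of features: fingertip-to-palm distances and angles between adjacent palm-to-fingertip vectors. The feature set actually used by the invention may differ.

```python
import math
from dataclasses import dataclass
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class HandFrame:
    """Hypothetical per-frame hand record, coordinates in the sensor's frame."""
    palm_center: Vec3
    fingertips: List[Vec3]  # assumed order: thumb, index, middle, ring, pinky


def _sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _norm(v: Vec3) -> float:
    return math.sqrt(v[0] ** 2 + v[1] ** 2 + v[2] ** 2)


def _angle(u: Vec3, v: Vec3) -> float:
    """Angle between two vectors in radians."""
    denom = _norm(u) * _norm(v)
    if denom == 0.0:
        return 0.0  # degenerate case: a fingertip coincides with the palm center
    cos = (u[0] * v[0] + u[1] * v[1] + u[2] * v[2]) / denom
    # Clamp to [-1, 1] so rounding error cannot push acos out of its domain.
    return math.acos(max(-1.0, min(1.0, cos)))


def gesture_features(frame: HandFrame) -> List[float]:
    """Illustrative feature vector: one fingertip-to-palm distance per finger,
    followed by the angles between adjacent palm-to-fingertip vectors."""
    vecs = [_sub(tip, frame.palm_center) for tip in frame.fingertips]
    distances = [_norm(v) for v in vecs]
    angles = [_angle(vecs[i], vecs[i + 1]) for i in range(len(vecs) - 1)]
    return distances + angles
```

Both feature types are computed from palm-relative vectors, so they stay the same when the whole hand is translated above the sensor; for five fingertips the vector has nine entries.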

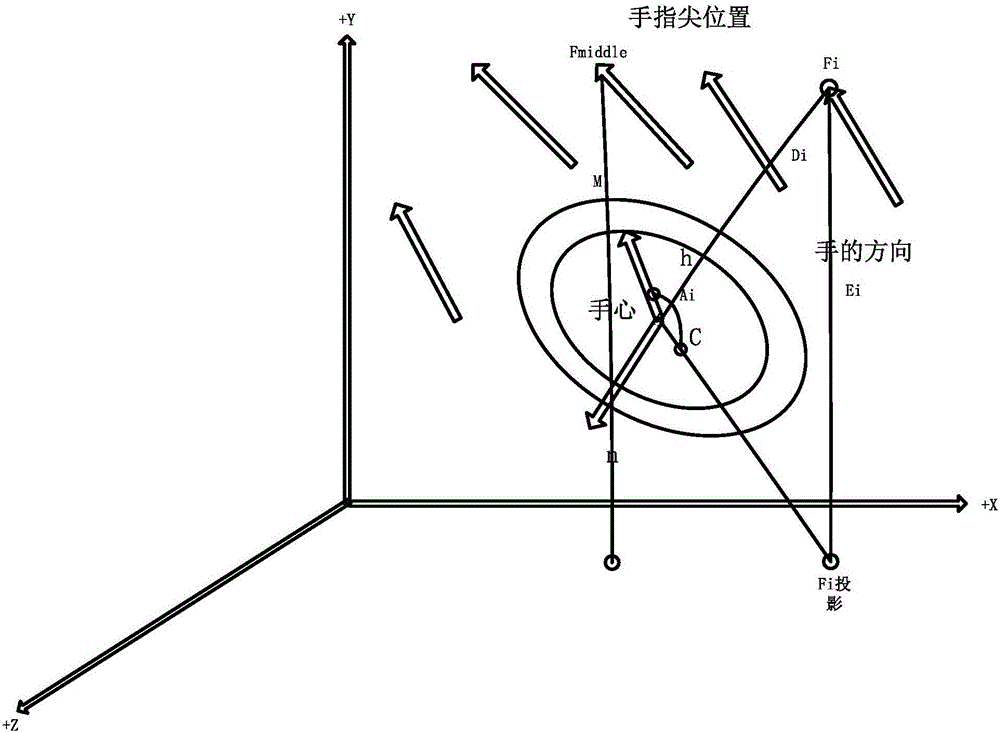

[0050] (1.1) The Leap Motion sensor establishes a spatial coordinate system whose origin is the center of the sensor, ...
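Because the origin of this coordinate system is the center of the sensor, raw coordinates describe where a point lies relative to the device rather than the shape of the hand. A minimal sketch, assuming points arrive as (x, y, z) tuples in millimeters (the units and axis directions are not stated in this excerpt), shows two direct consequences: a point's distance from the sensor is simply its Euclidean norm, and subtracting the palm center re-expresses points in a hand-relative frame. The function names `distance_from_sensor` and `to_palm_frame` are hypothetical.

```python
import math
from typing import List, Tuple

Vec3 = Tuple[float, float, float]  # (x, y, z) in the sensor frame; millimeters assumed


def distance_from_sensor(point: Vec3) -> float:
    """Distance from the sensor center, i.e. from the coordinate origin."""
    return math.dist((0.0, 0.0, 0.0), point)


def to_palm_frame(points: List[Vec3], palm_center: Vec3) -> List[Vec3]:
    """Translate sensor-frame points so that the palm center becomes the origin.

    This is a pure translation (axes unchanged), so the result no longer
    depends on where the hand is held above the sensor.
    """
    px, py, pz = palm_center
    return [(x - px, y - py, z - pz) for (x, y, z) in points]


if __name__ == "__main__":
    print(distance_from_sensor((3.0, 4.0, 0.0)))  # 5.0
    print(to_palm_frame([(10.0, 250.0, -5.0)], (0.0, 200.0, 0.0)))  # [(10.0, 50.0, -5.0)]
```

Only a translation is applied here; any rotation or scale normalization the method may perform afterwards is not described in this excerpt.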
