62 results about "Leap motion" patented technology

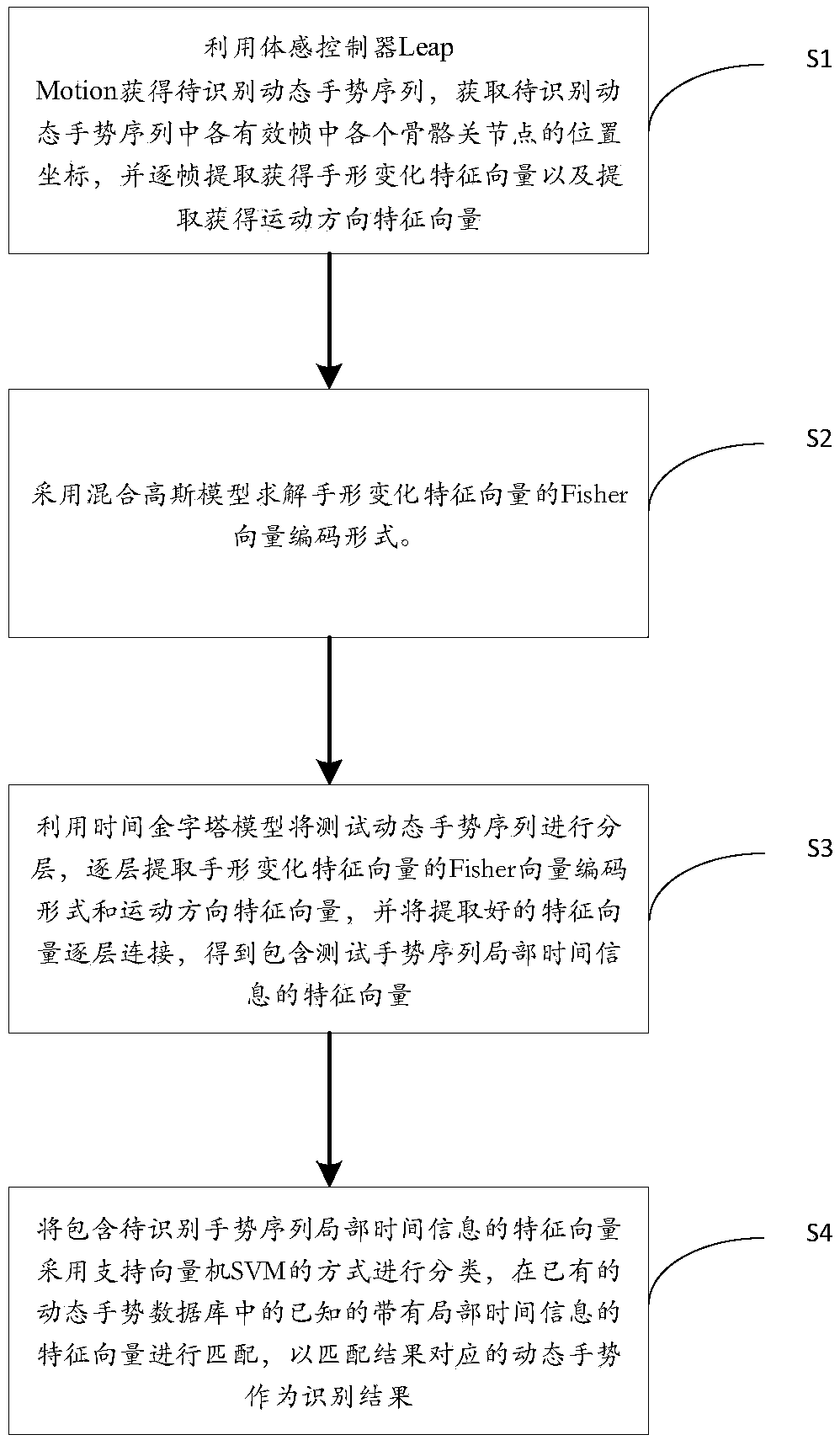

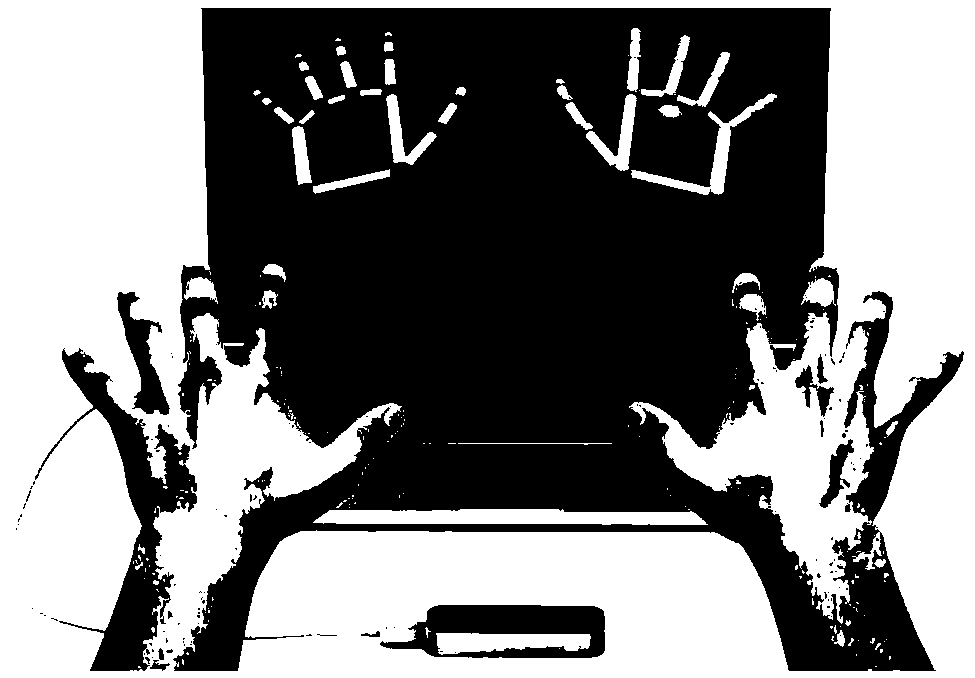

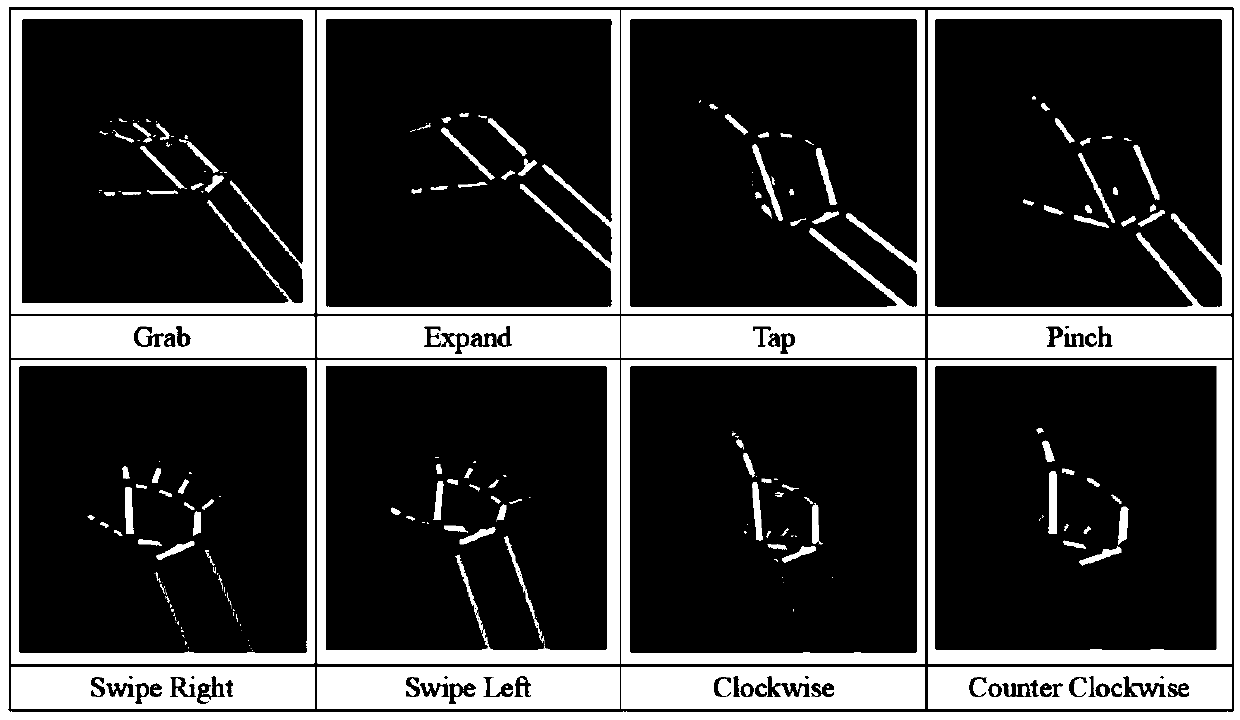

Three-dimensional depth data based dynamic gesture recognition method

Inactive · CN108664877A · Improve accuracy · Input/output for user-computer interaction · Character and pattern recognition · Feature vector · Shape change

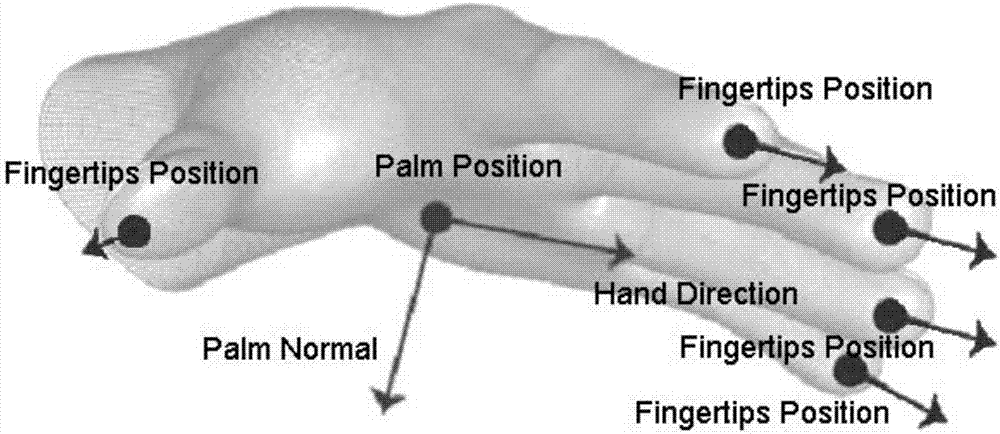

The invention discloses a dynamic gesture recognition method based on three-dimensional depth data, which can improve the performance of dynamic gesture recognition. The method includes the following steps: obtaining a dynamic gesture sequence to be recognized with a Leap Motion somatosensory controller; obtaining the position coordinates of each skeletal joint in each effective frame of the sequence, and extracting a hand shape change feature vector and a motion direction feature vector frame by frame; computing the Fisher vector encoding of the hand shape change feature vector with a Gaussian mixture model; extracting feature vectors that contain local temporal information of the gesture sequence with a time pyramid model; and classifying these feature vectors with a support vector machine (SVM), matching them against known feature vectors with local temporal information in an existing dynamic gesture database, and taking the dynamic gesture corresponding to the matching result as the recognition result.

Owner:BEIJING INSTITUTE OF TECHNOLOGY
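The time pyramid step in the abstract above can be illustrated with a minimal sketch. It assumes per-frame feature vectors are already available and uses simple mean pooling per segment; the abstract does not specify the pooling, and the Fisher vector encoding and SVM stages are not reproduced here. The function names `mean_vector` and `temporal_pyramid` are hypothetical.

```python
from typing import List, Sequence


def mean_vector(frames: Sequence[Sequence[float]]) -> List[float]:
    """Average a non-empty list of equal-length feature vectors."""
    dim = len(frames[0])
    return [sum(f[i] for f in frames) / len(frames) for i in range(dim)]


def temporal_pyramid(frames: Sequence[Sequence[float]], levels: int = 3) -> List[float]:
    """Concatenate per-segment mean features over 1, 2, 4, ... equal segments.

    Assumption: mean pooling stands in for whatever encoding the patent uses.
    """
    if not frames:
        raise ValueError("need at least one frame")
    n = len(frames)
    descriptor: List[float] = []
    for level in range(levels):
        segments = 2 ** level
        for s in range(segments):
            start = s * n // segments
            end = (s + 1) * n // segments
            # Very short sequences can yield an empty segment; reuse the nearest frame.
            chunk = frames[start:end] or [frames[min(start, n - 1)]]
            descriptor.extend(mean_vector(chunk))
    return descriptor


if __name__ == "__main__":
    # Four frames with a one-dimensional feature, two pyramid levels.
    print(temporal_pyramid([[0.0], [2.0], [4.0], [6.0]], levels=2))
```

For a feature dimension d and L levels, the descriptor has d × (2^L − 1) entries, so coarse levels summarize the whole gesture while finer levels keep the order of its parts.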

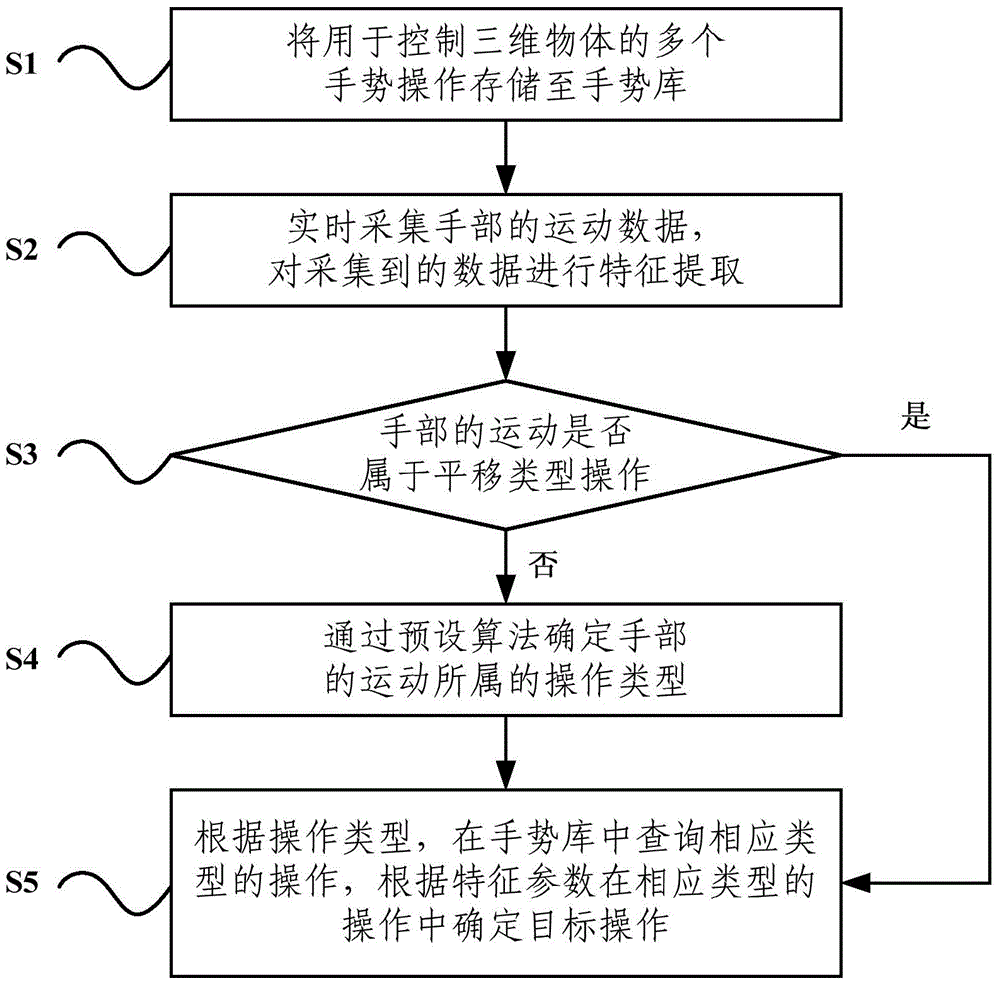

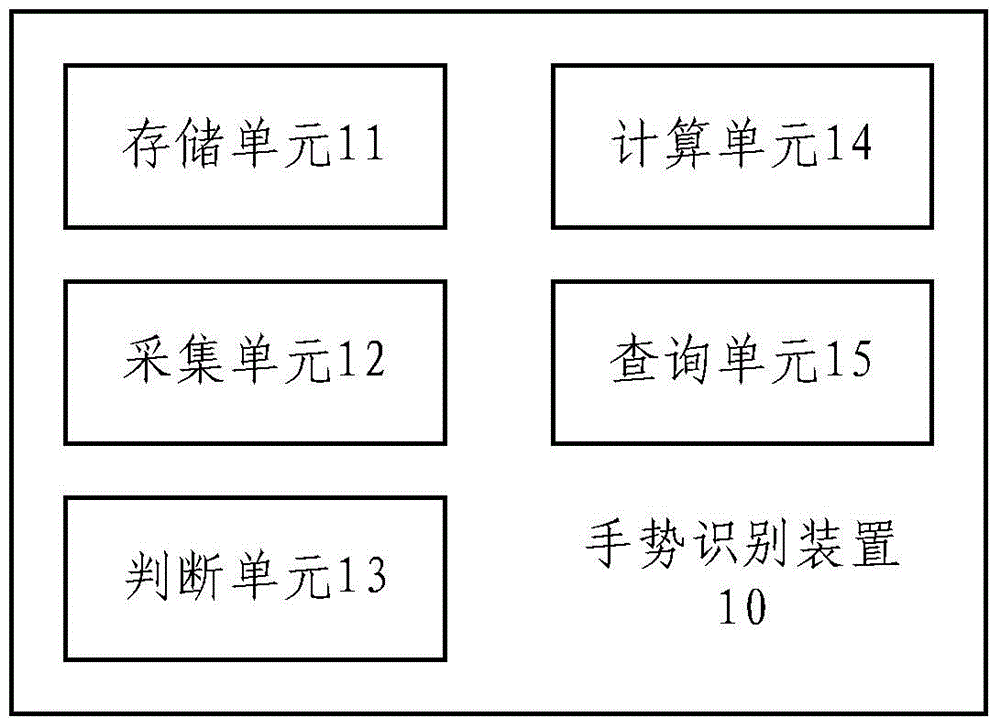

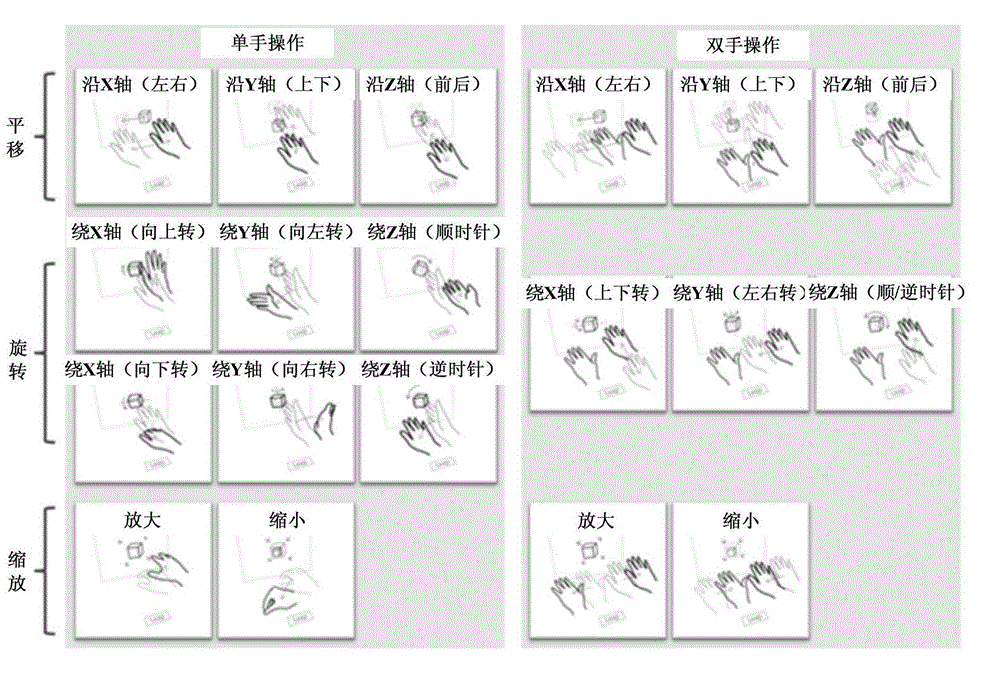

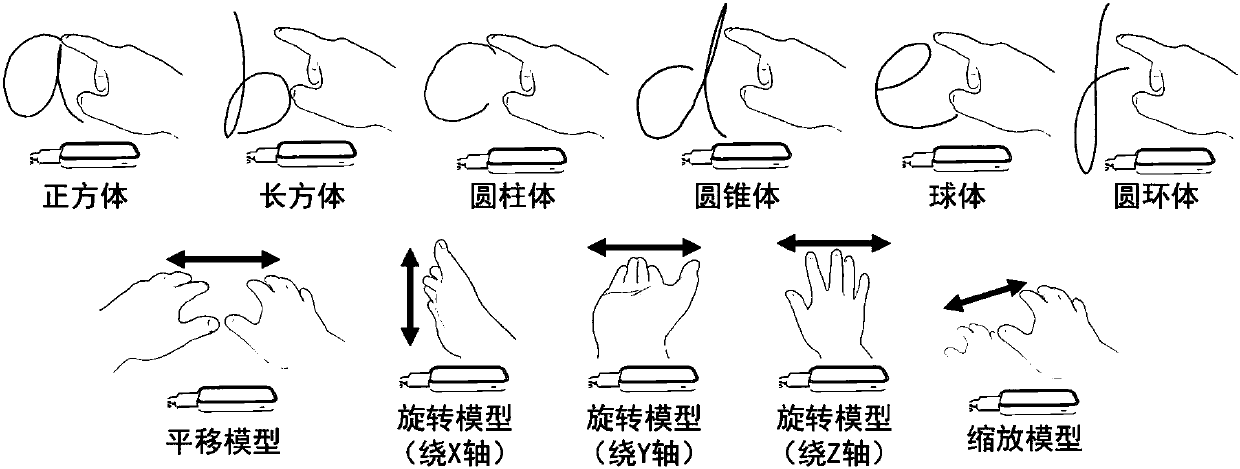

Gesture recognition method and device and Leap Motion system

Active · CN104007819A · Guaranteed smoothness · Guaranteed stability · Input/output for user-computer interaction · Graph reading · Feature extraction · Control system

The invention relates to a gesture recognition method and device and a Leap Motion system. The gesture recognition method comprises the following steps: S1, multiple gesture operations used for controlling a three-dimensional body are stored in a gesture library; S2, motion data of the hand are collected in real time, and feature extraction is carried out on the collected data; S3, whether the motion of the hand is a translation-type operation is judged from the extracted feature parameters, proceeding to step S5 if it is and to step S4 if it is not; S4, the operation type to which the motion of the hand belongs is determined by a preset algorithm; S5, operations of the corresponding type are looked up in the gesture library according to the operation type, and the target operation is selected among them according to the feature parameters. With this technical scheme, a three-dimensional model operation gesture library suitable for Leap Motion and a corresponding gesture recognition method can be constructed, the accuracy of gesture recognition is improved, and the consistency and stability of model transformations in three-dimensional operation are guaranteed.

Owner:TSINGHUA UNIV
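The branching in steps S3 to S5 of the abstract above can be sketched as follows. This is only an illustration of the control flow: the feature fields, thresholds, library contents and the rule used for the "preset algorithm" in S4 are all hypothetical, since the abstract does not specify them.

```python
from dataclasses import dataclass
from typing import Dict, Tuple


@dataclass
class Features:
    dx: float        # hypothetical: signed palm displacement along one axis
    rotation: float  # hypothetical: signed palm rotation
    spread: float    # hypothetical: signed change in finger spread


# S1: gesture library keyed by operation type (illustrative entries only).
# Each entry holds the operation for a negative and a positive feature value.
GESTURE_LIBRARY: Dict[str, Tuple[str, str]] = {
    "translation": ("move_left", "move_right"),
    "rotation": ("rotate_counterclockwise", "rotate_clockwise"),
    "scaling": ("zoom_out", "zoom_in"),
}


def is_translation(f: Features) -> bool:
    """S3: assumed rule, large displacement with little rotation or spread change."""
    return abs(f.dx) > 0.1 and abs(f.rotation) < 0.2 and abs(f.spread) < 0.2


def classify_other(f: Features) -> str:
    """S4: stand-in for the patent's unspecified preset algorithm."""
    return "rotation" if abs(f.rotation) >= abs(f.spread) else "scaling"


def select_target(op_type: str, f: Features) -> str:
    """S5: pick the target operation within the type from the feature sign."""
    value = {"translation": f.dx, "rotation": f.rotation, "scaling": f.spread}[op_type]
    negative, positive = GESTURE_LIBRARY[op_type]
    return positive if value >= 0 else negative


def recognize(f: Features) -> str:
    op_type = "translation" if is_translation(f) else classify_other(f)
    return select_target(op_type, f)


if __name__ == "__main__":
    print(recognize(Features(dx=0.5, rotation=0.0, spread=0.0)))
    print(recognize(Features(dx=0.0, rotation=-0.8, spread=0.1)))
```

Checking translation first, as the abstract describes, lets the most common operation skip the more expensive type classification.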

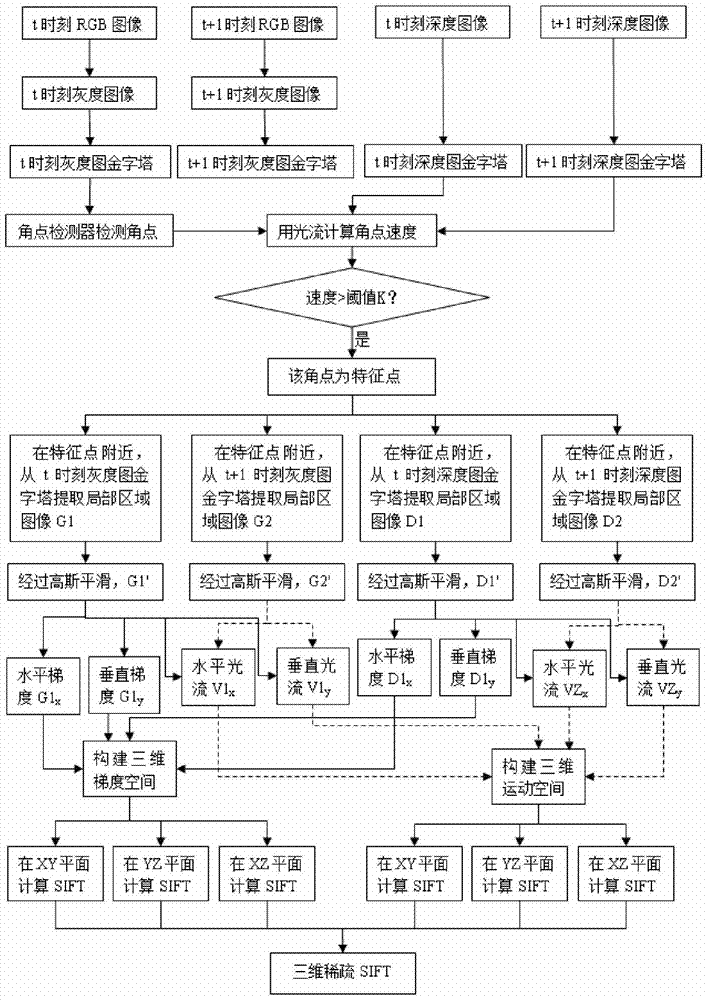

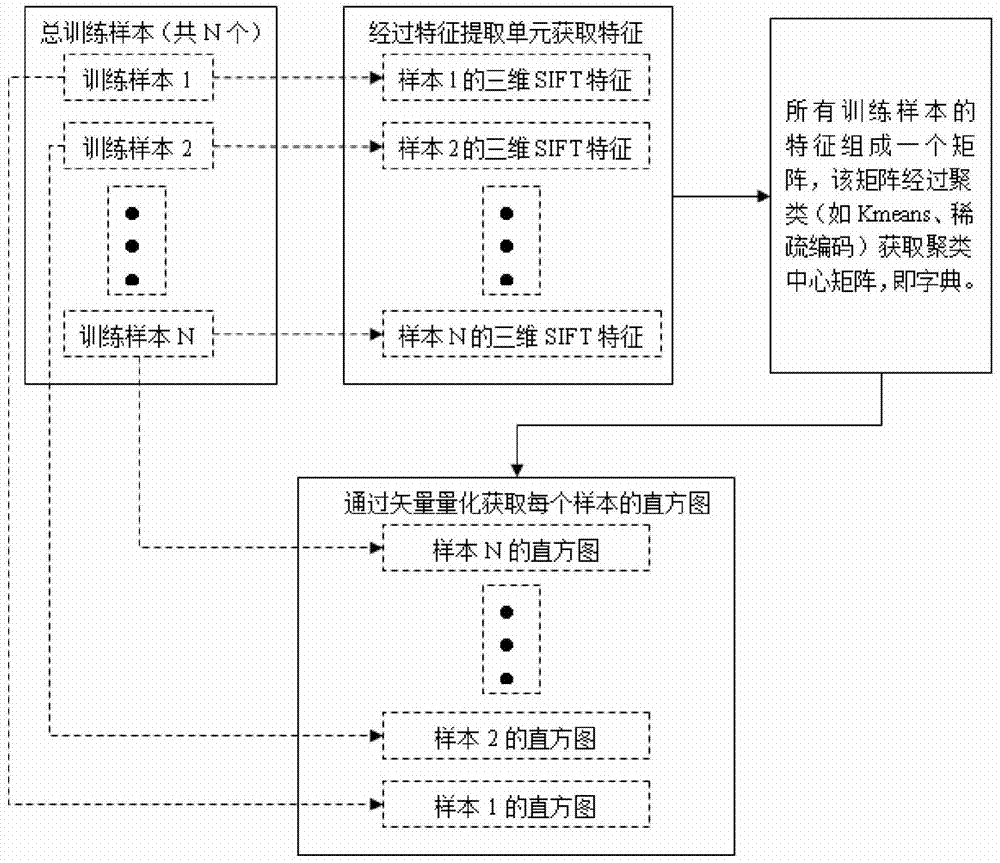

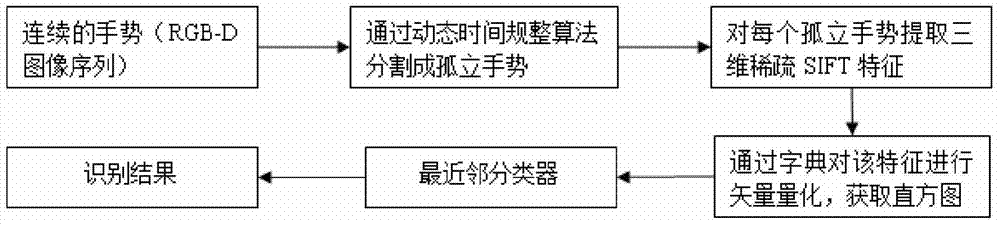

Gesture recognition method of small quantity of training samples based on RGB-D (red, green, blue and depth) data structure

Inactive · CN103530619A · Improve robustness · Easy to identify · Character and pattern recognition · Feature extraction · Feature transform

The invention discloses a gesture recognition method that works with a small number of training samples and is based on an RGB-D data structure. The method is implemented by a feature extraction unit, a training unit and a recognition unit: the feature extraction unit extracts three-dimensional sparse SIFT (scale-invariant feature transform) features from aligned RGB-D image sequences obtained by an RGB-D camera; the training unit learns models from a small number of gesture training samples; and the recognition unit recognizes input continuous gestures. The method can be applied to any camera or device that provides RGB-D data, such as Kinect of Microsoft, Xtion PRO of ASUS or Leap Motion of the Leap company, and it can run at real-time recognition speed, so it can be used for man-machine interaction, sign language interpretation, smart home, game development and virtual reality.

Owner:BEIJING JIAOTONG UNIV

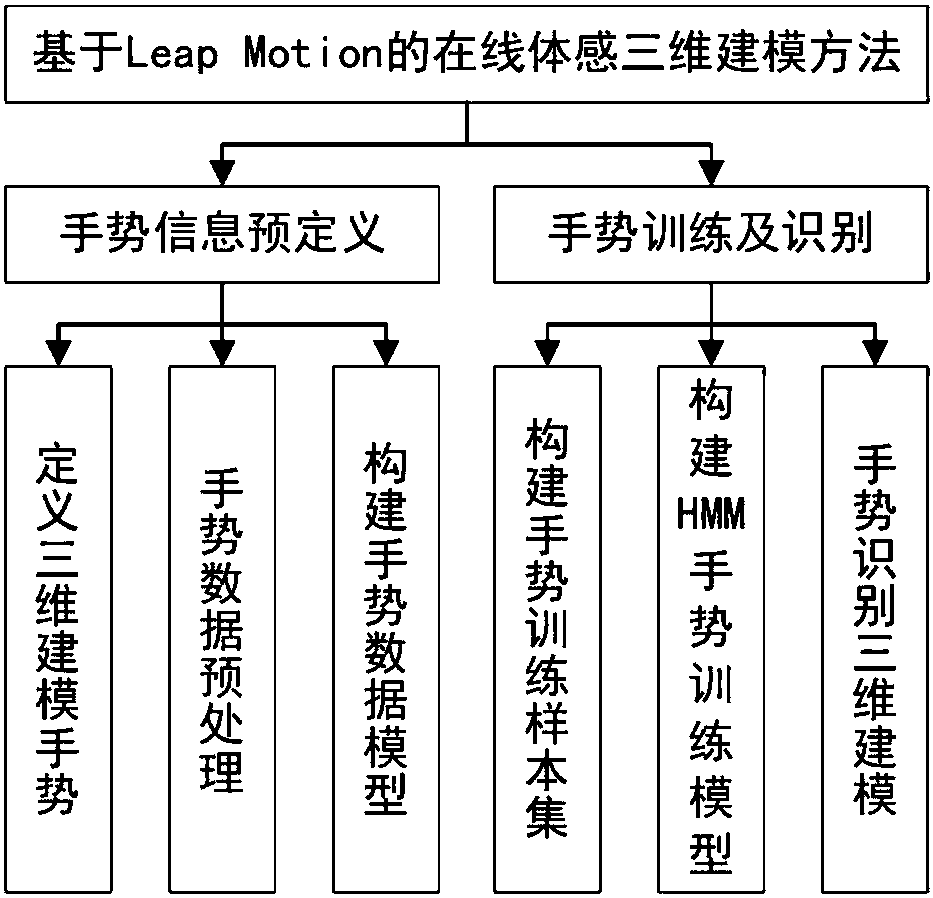

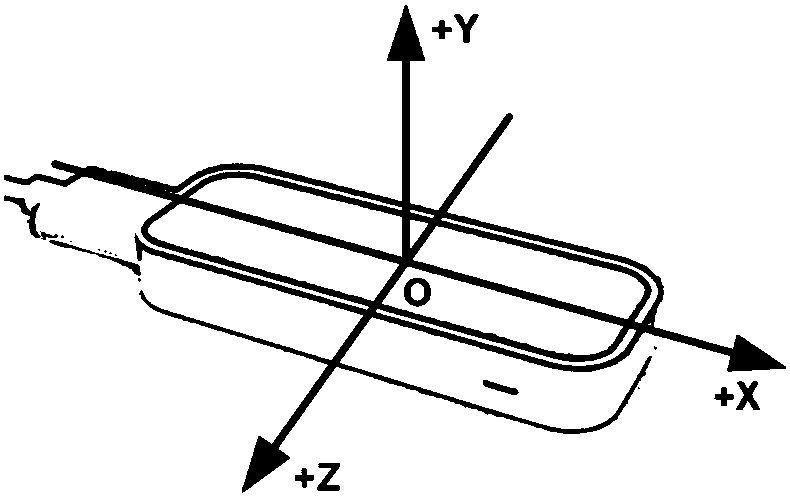

Online somatosensory three-dimensional modeling method and system based on Leap Motion

Inactive · CN108182728A · Improve human-computer interaction · Lower learning threshold · Input/output for user-computer interaction · Graph reading · Somatosensory system · Computer graphics

The invention discloses an online somatosensory three-dimensional modeling method and system based on Leap Motion. The method comprises: carrying out gesture information pre-definition; and carrying out gesture training and recognition. The step of gesture information pre-definition includes: defining a three-dimensional modeling gesture; carrying out gesture data preprocessing; and constructing a gesture data model. The step of gesture training and recognition includes: constructing a gesture training sample set; constructing an HMM gesture training model; and carrying out gesture recognition for three-dimensional modeling. In addition, the system is composed of a software interaction unit, an acquisition unit, a real-time communication unit, a data processing unit, a storage unit, a calculation unit and an execution unit. According to the invention, the user is assisted in carrying out online three-dimensional modeling in a browser by means of somatosensory interaction, based on the technical principles of virtual reality, human-computer interaction and computer graphics.

Owner:WUHAN UNIV OF TECH

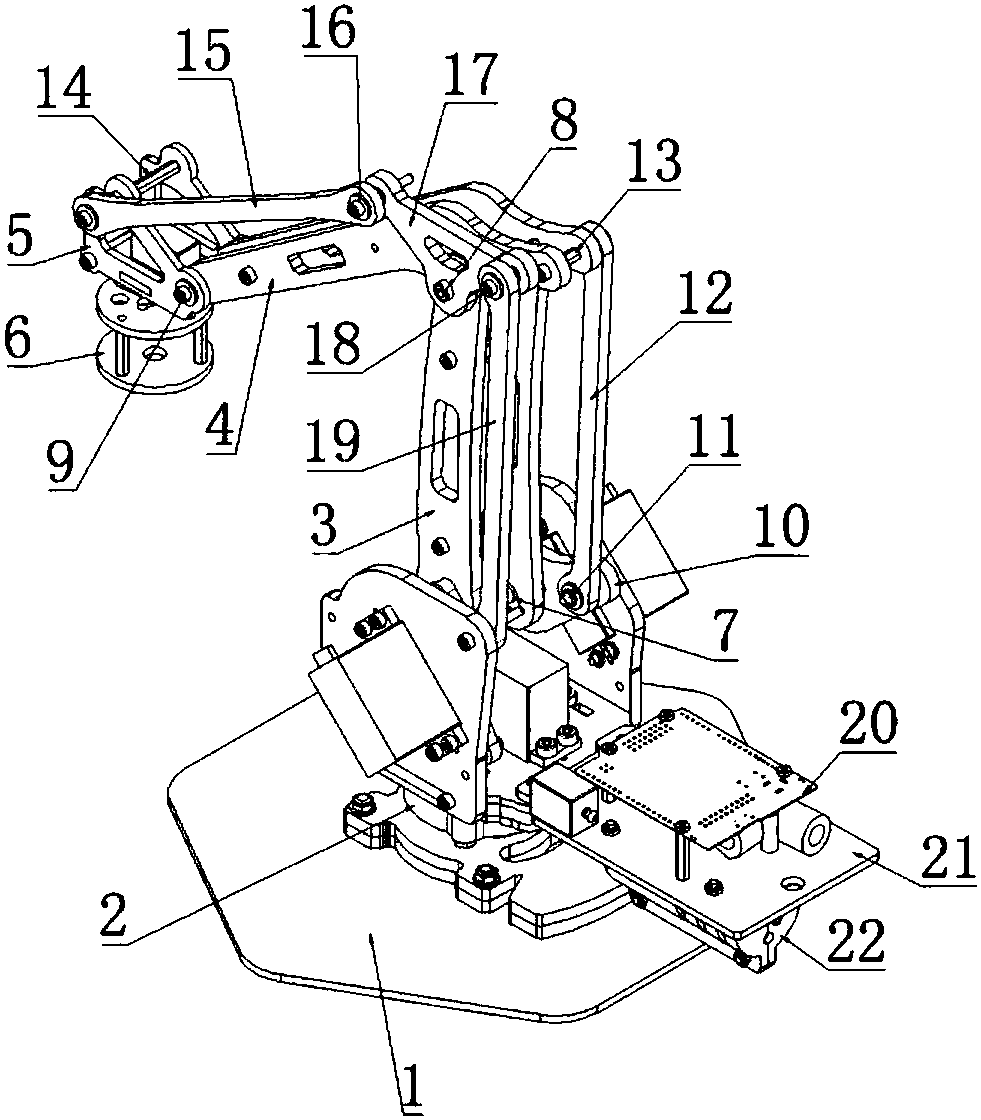

Mechanical arm and mechanical arm control method based on Leap Motion

Inactive · CN107738255A · Easy to implement · Easy to operate · Programme-controlled manipulator · Joints · Control theory · Leap motion

Owner:SHANDONG INST OF BUSINESS & TECH

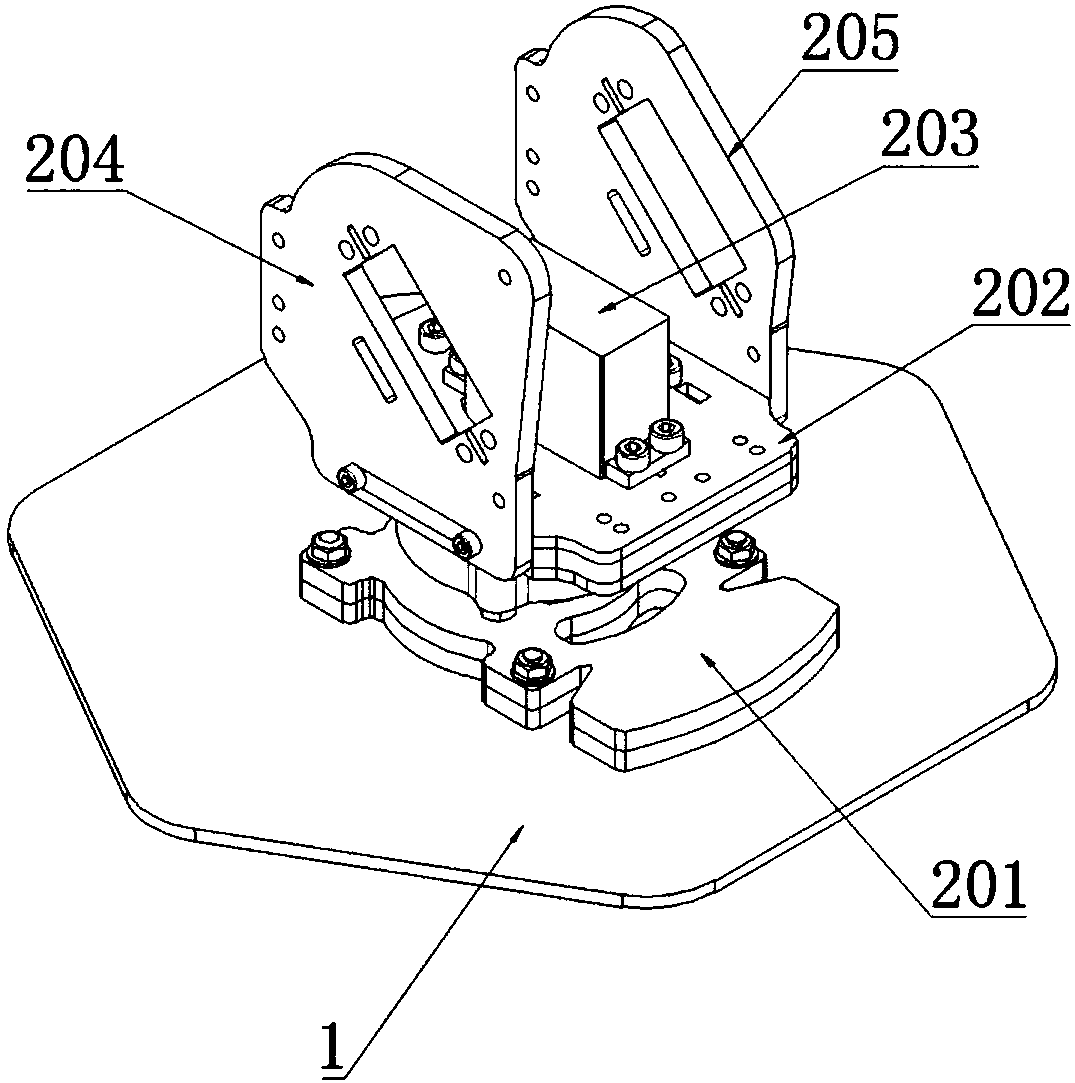

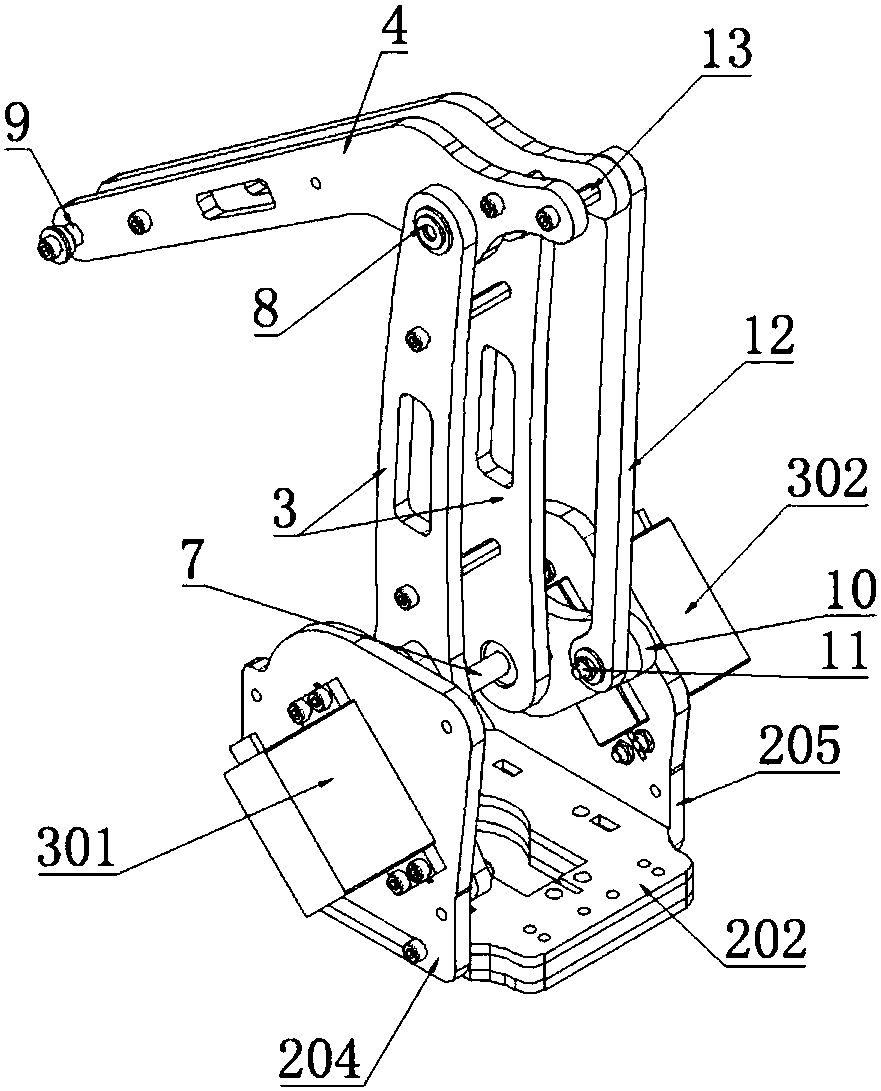

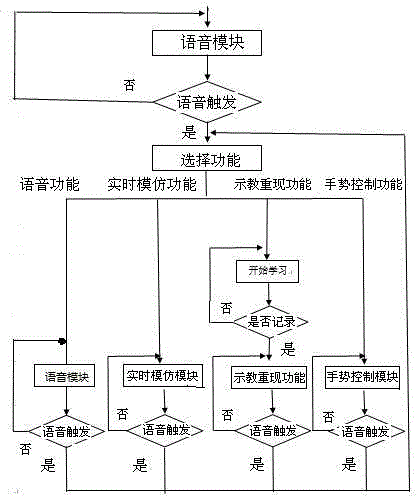

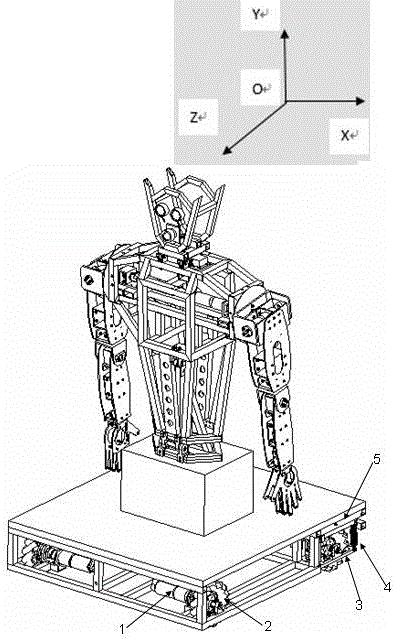

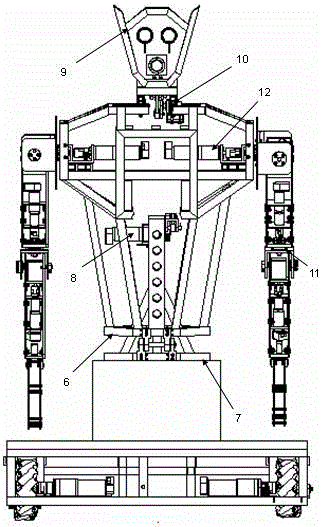

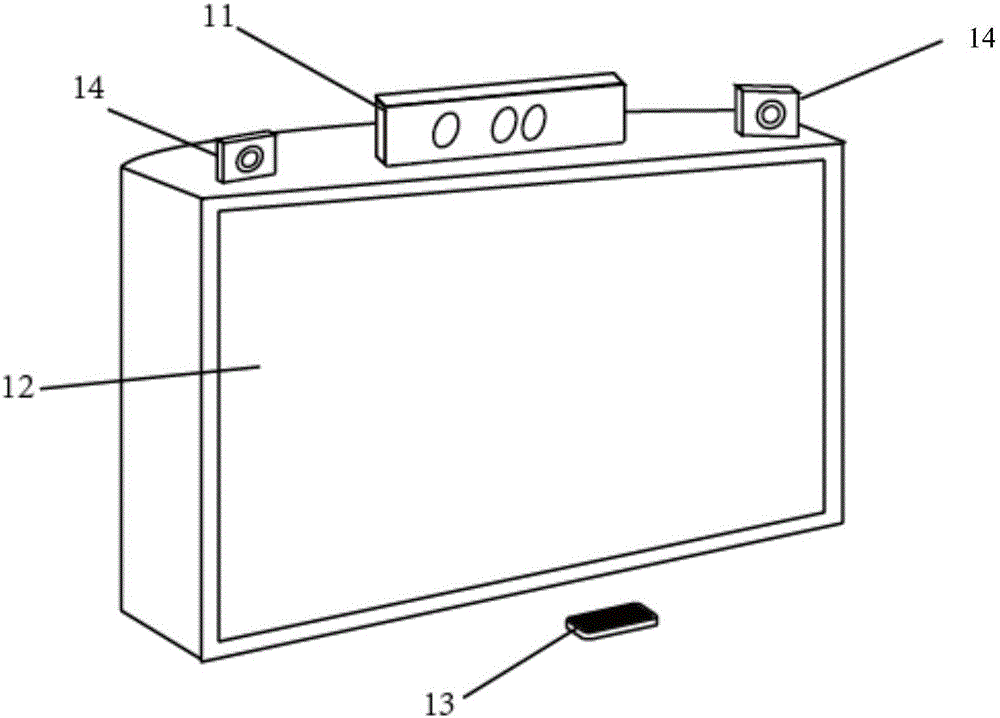

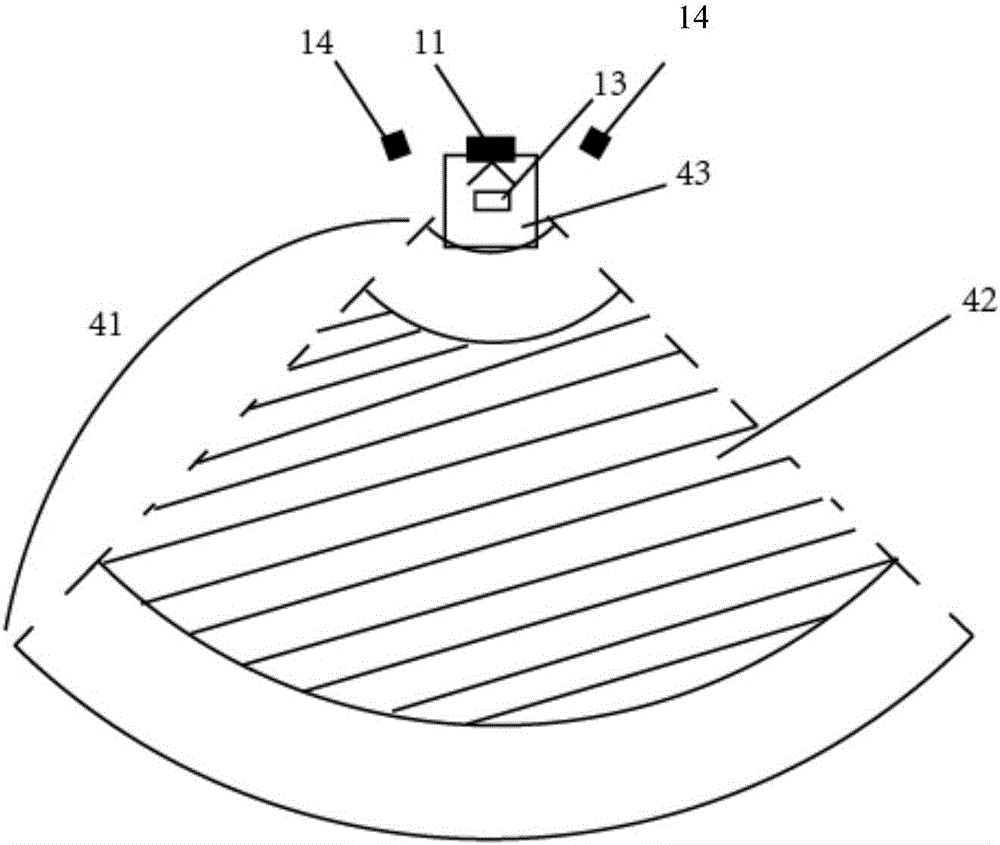

Humanoid robot based on leap motion of Kinect

Inactive · CN106313072A · Move quickly · Smooth motion · Programme-controlled manipulator · Angular velocity · Simulation

A humanoid robot based on leap motion of Kinect is composed of a Kinect sensing and upper computer processing part, an STM32 lower computer part and a humanoid mechanical skeleton part. More natural and flexible control is achieved by means of the Kinect, and man-machine interaction is friendly; and the robot has the real-time remote imitation control function, the teaching replay function and the gesture control function, and the functions are rich. By means of local area network control, wide-range remote control can be achieved, and movement is flexible and stable; mechanical arms are controlled through RE-series servo motors of the MAXCON company, large torque output can be provided through planetary reducers on the motors to stably drive the large and heavy dual mechanical arms, angular velocity information obtained through encoders at the tails of the motors is used for closed-loop control of the mechanical arms, the mechanical arms can obtain the precise and stable state by combining the PID algorithm, and made actions are more similar to the actions of an operator; and higher entertainment performance is achieved.

Owner:NANCHANG UNIV
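The closed-loop control mentioned in the abstract above (encoder angular velocity fed back through a PID algorithm) can be illustrated with a generic discrete PID loop. This is a minimal sketch, not the patent's controller: the gains, the time step and the first-order motor model in `simulate` are all assumptions chosen only so the example runs.

```python
from typing import Optional


class PID:
    """Textbook discrete PID controller (gains here are illustrative)."""

    def __init__(self, kp: float, ki: float, kd: float) -> None:
        self.kp, self.ki, self.kd = kp, ki, kd
        self.integral = 0.0
        self.prev_error: Optional[float] = None

    def update(self, setpoint: float, measurement: float, dt: float) -> float:
        error = setpoint - measurement
        self.integral += error * dt
        # No derivative action on the very first sample.
        derivative = 0.0 if self.prev_error is None else (error - self.prev_error) / dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


def simulate(setpoint: float = 1.0, steps: int = 3000, dt: float = 0.01) -> float:
    """Drive a toy first-order motor model, d(omega)/dt = command - omega."""
    pid = PID(kp=2.0, ki=1.0, kd=0.05)
    omega = 0.0  # stands in for the encoder's angular velocity reading
    for _ in range(steps):
        command = pid.update(setpoint, omega, dt)
        omega += (command - omega) * dt  # explicit Euler step of the assumed plant
    return omega


if __name__ == "__main__":
    print(round(simulate(), 3))
```

The integral term is what removes the steady-state error in this toy model; a real arm would need gains tuned against its own motor and load dynamics.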

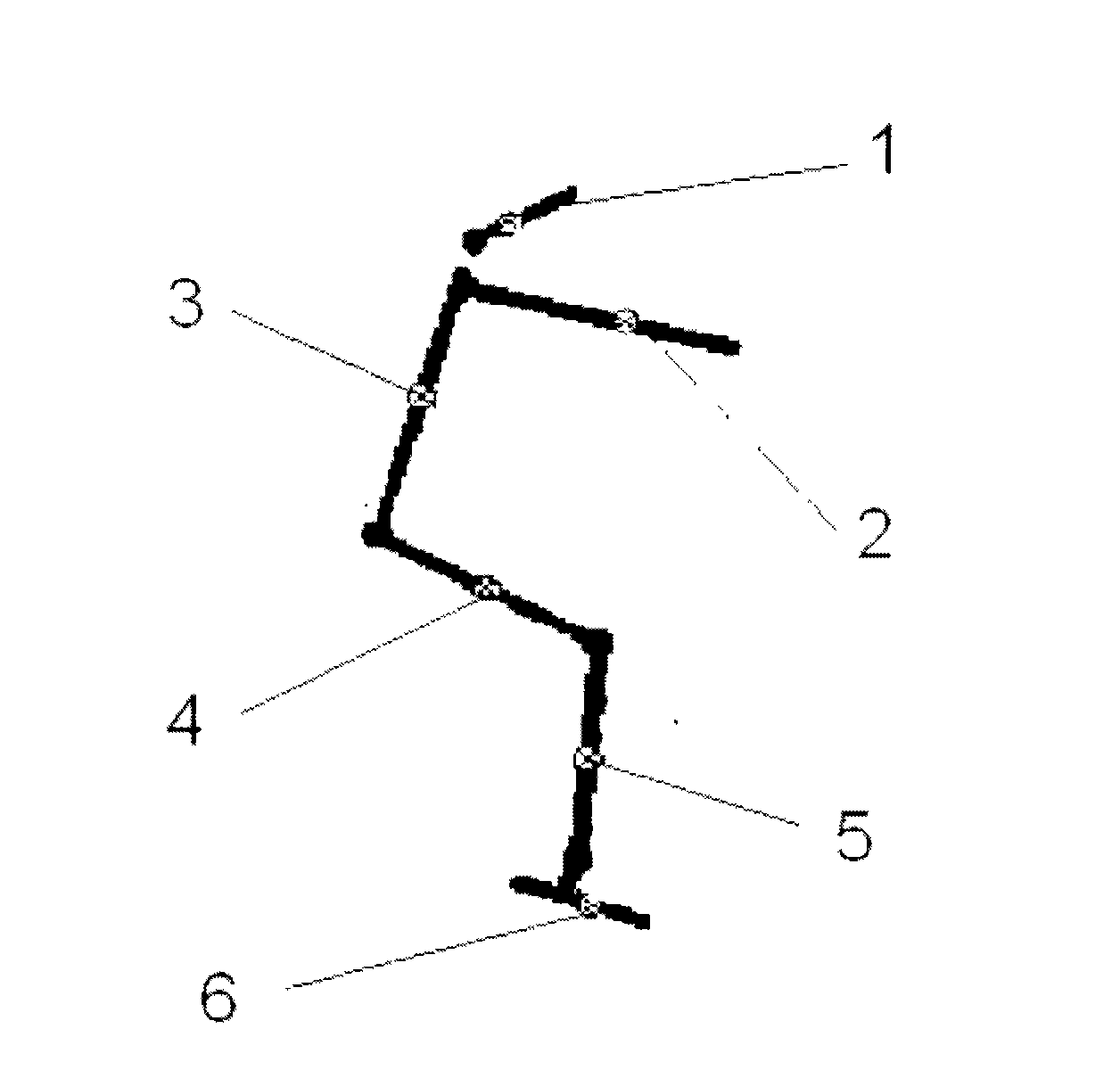

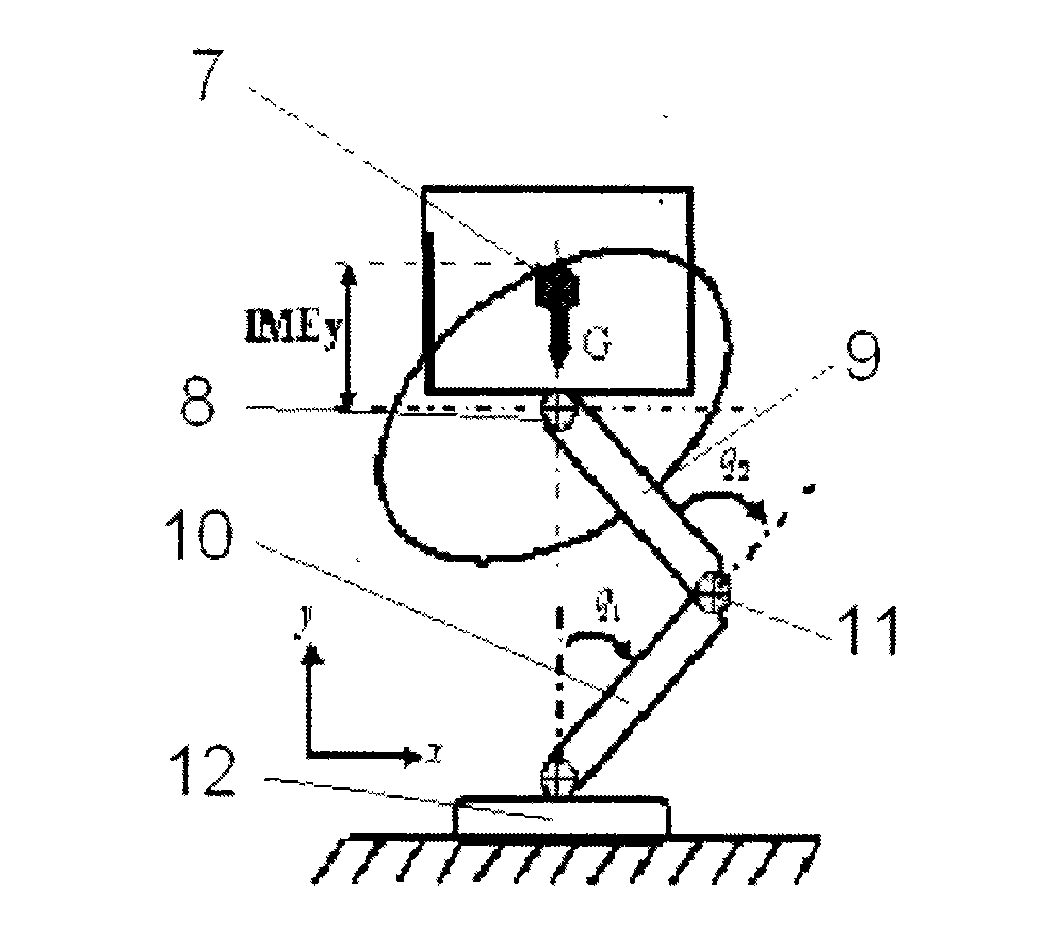

Jumping robot and motion optimization method adopting inertia matching

Inactive · CN101525011A · Large reaction impulse · Excellent jumping performance · Self-moving toy figures · Vehicles · Research Object · Engineering

The invention discloses a jumping robot and a motion optimization method adopting inertia matching. The jumping robot consists of a matrix operation controller, an aluminum plate body, a joint drive motor and a motion control algorithm with inertia matching. The system takes the jumping robot as the research object; the jumping motion is divided into three phases: a standing phase, a soaring phase and a falling collision phase. On the basis of variable constraint dynamics, the system establishes a standing phase dynamics equation using a space floating base, performs optimization research on jumping gesture and load matching using inertia matching and direction manipulability, and uses a fifth-degree polynomial to plan the jumping motions. Simulation and experiment show that when the inertia matching of the jumping robot is maximal, the reaction impulse of the ground is maximal and the jumping performance is optimal; the jumping height is directly proportional to the inertia matching; and when the inertia matching is maximal, the jumping gesture of the robot and the load matching are optimal. Inertia matching is an effective jumping motion optimization method.

Owner:王慧娟
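The abstract above plans the jumping motion with a polynomial, which is read here as a fifth-degree (quintic) polynomial. The following is a minimal sketch of a quintic joint trajectory under assumed boundary conditions (zero velocity and acceleration at both ends); the patent does not state its boundary conditions, and the function name `quintic_profile` is hypothetical.

```python
def quintic_profile(q0: float, q1: float, duration: float, t: float) -> float:
    """Joint position at time t on a rest-to-rest quintic from q0 to q1.

    Assumed boundary conditions: zero velocity and zero acceleration at
    t = 0 and t = duration, which gives s(tau) = 10 tau^3 - 15 tau^4 + 6 tau^5.
    """
    if duration <= 0:
        raise ValueError("duration must be positive")
    tau = min(max(t / duration, 0.0), 1.0)  # clamp to the planned interval
    s = 10 * tau**3 - 15 * tau**4 + 6 * tau**5
    return q0 + (q1 - q0) * s


if __name__ == "__main__":
    # Sample a 0.4 s motion from 0.0 rad to 1.2 rad at five instants.
    for k in range(5):
        t = 0.1 * k
        print(f"t={t:.1f}s  q={quintic_profile(0.0, 1.2, 0.4, t):.4f} rad")
```

A quintic is the lowest-degree polynomial that can match position, velocity and acceleration at both ends, which is why it is a common choice for smooth joint trajectories.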

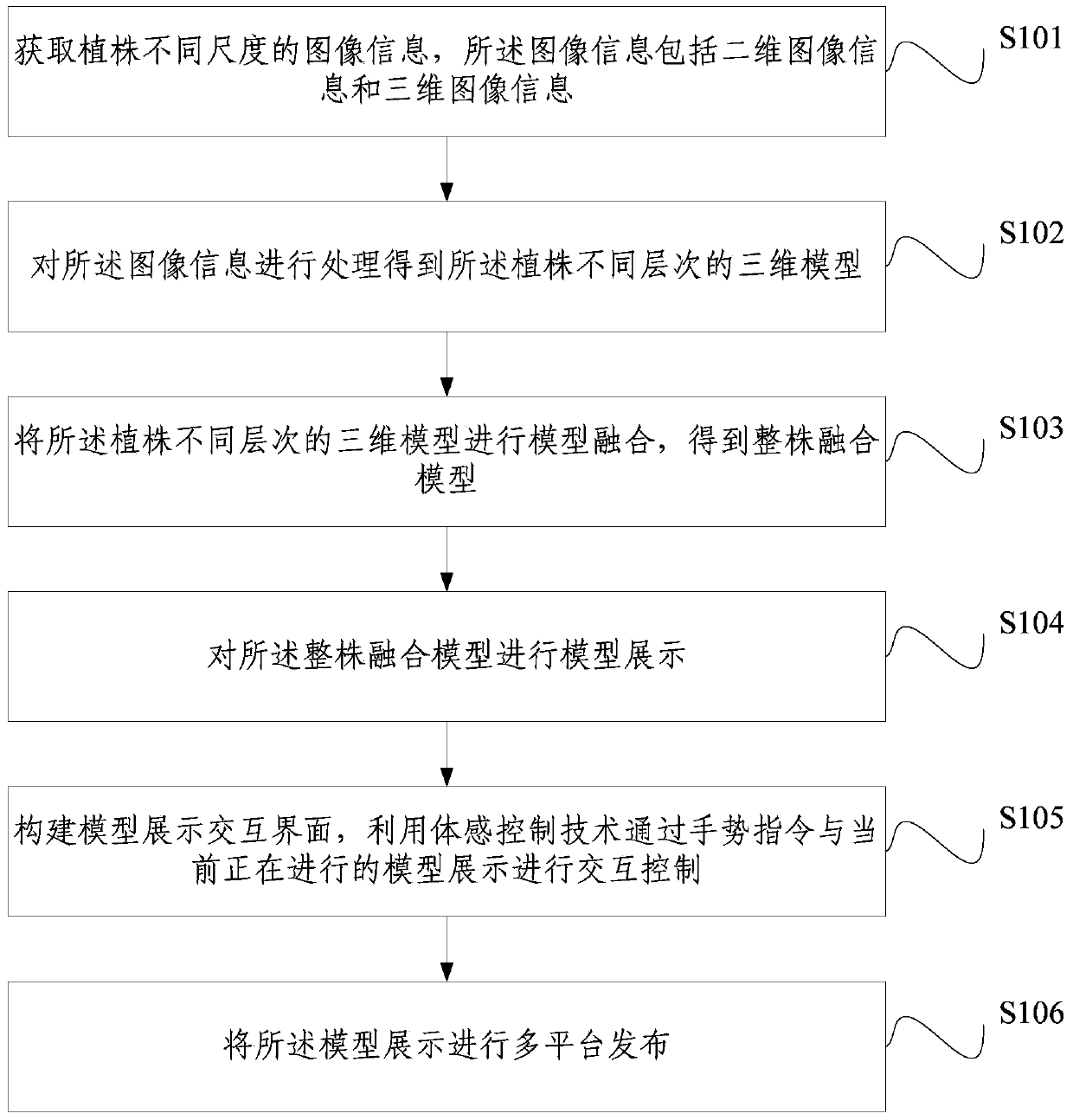

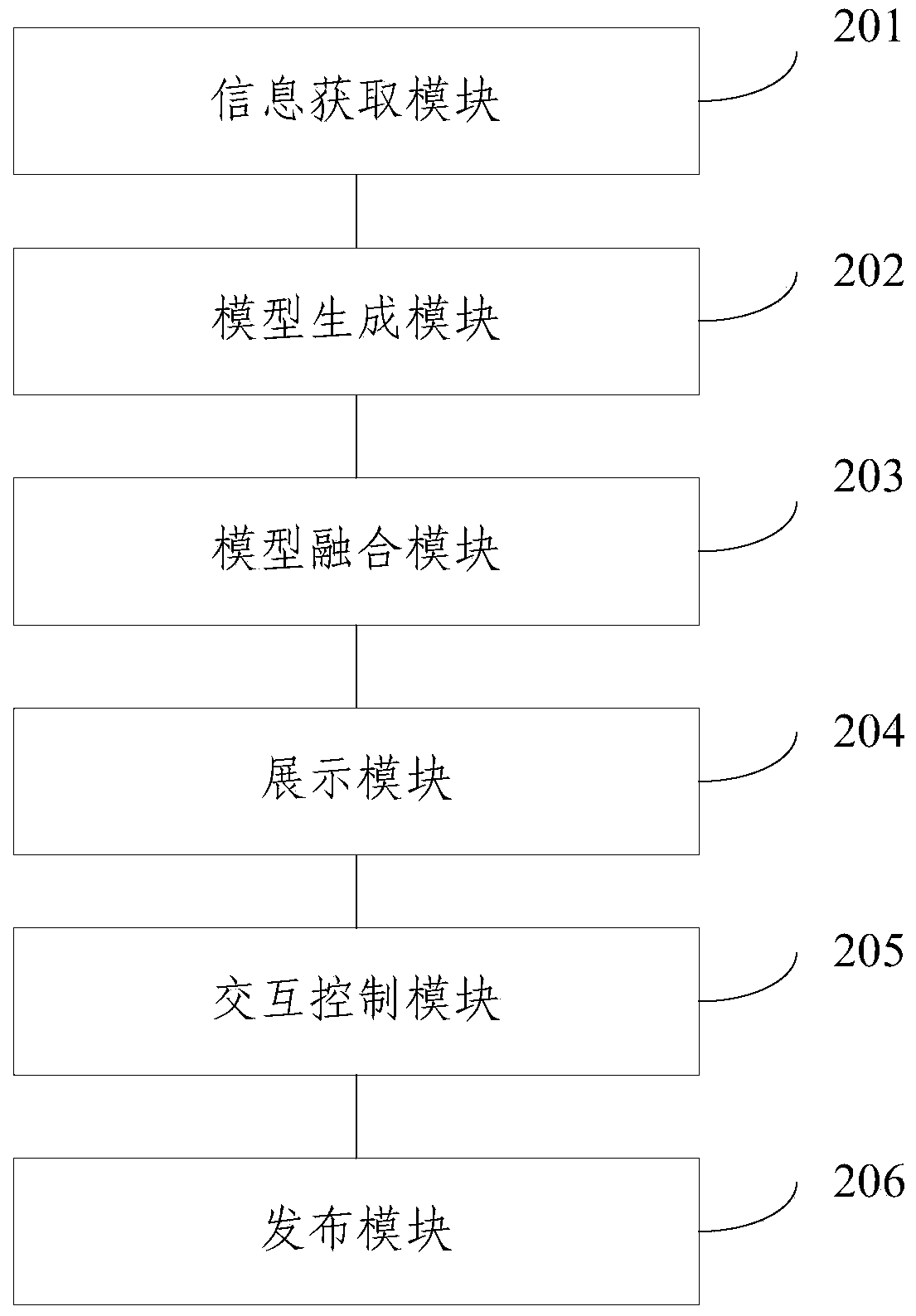

Method and system for showing three dimension model of plant

Active · CN104200516A · Improve accuracy · Improve system performance · Input/output for user-computer interaction · Graph reading · Interaction control · Somatosensory system

The invention relates to the technical field of agricultural information, and discloses a method and a system for showing a three dimension model of a plant. The method for showing the three dimension model of the plant includes: obtaining image information of different scales of a plant, which includes two dimension image information and three dimension image information; processing the image information so as to obtain different levels of three dimension models of the plant; performing model fusion on the different levels of the three dimension models of the plant so as to obtain a whole fusion model; performing a model show for the whole fusion model; building a model show interaction interface; using a somatic sense control technology to perform interaction control through gesture commands and the model show currently performed, and releasing the model show on multiple platforms. By using the method and the device for showing the three dimension model of the plant, which are based on a leap motion somatic sense control technology, accuracy, systematization and interestingness of the three dimension model show of the plant are effectively improved.

Owner:BEIJING RES CENT FOR INFORMATION TECH & AGRI

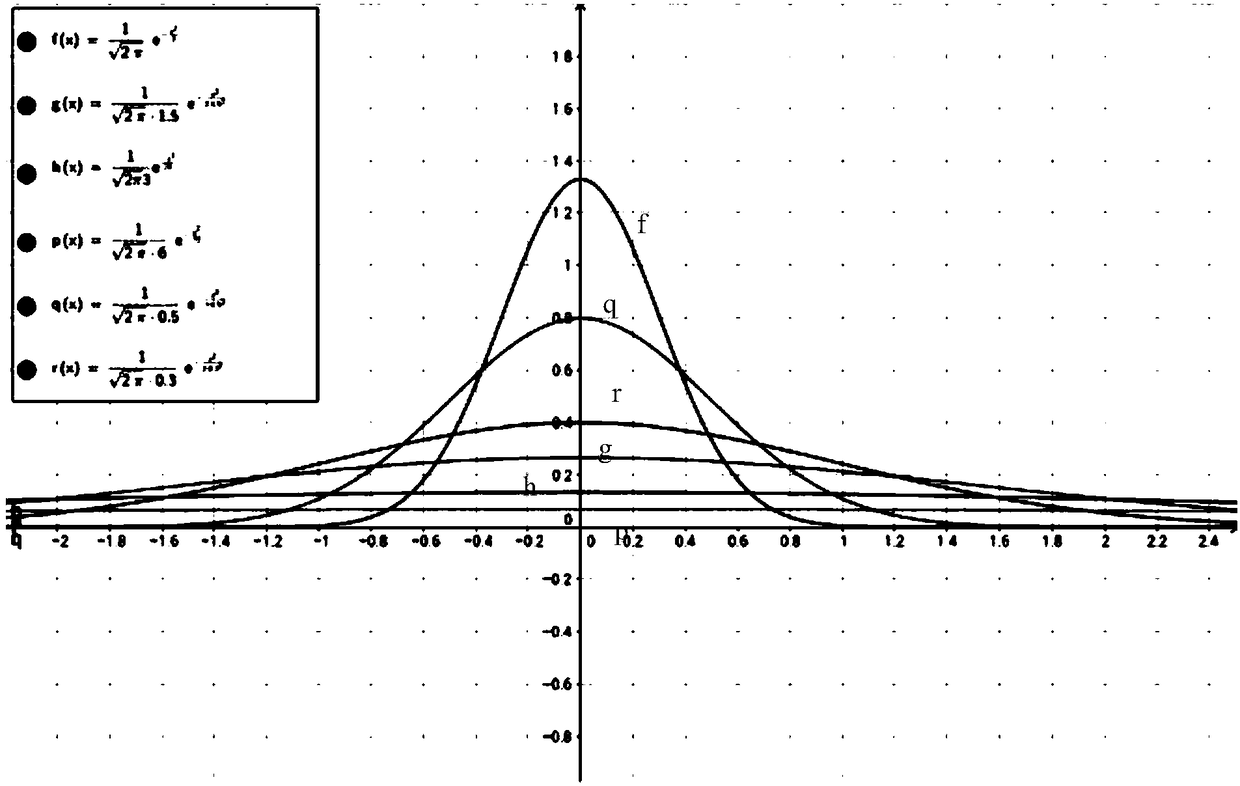

Sand painting drawing method based on Leap Motion gesture recognition

Inactive · CN107024989A · Good cross-platform · Improve capture accuracy · Input/output for user-computer interaction · Graph reading · Vision based · Feature extraction algorithm

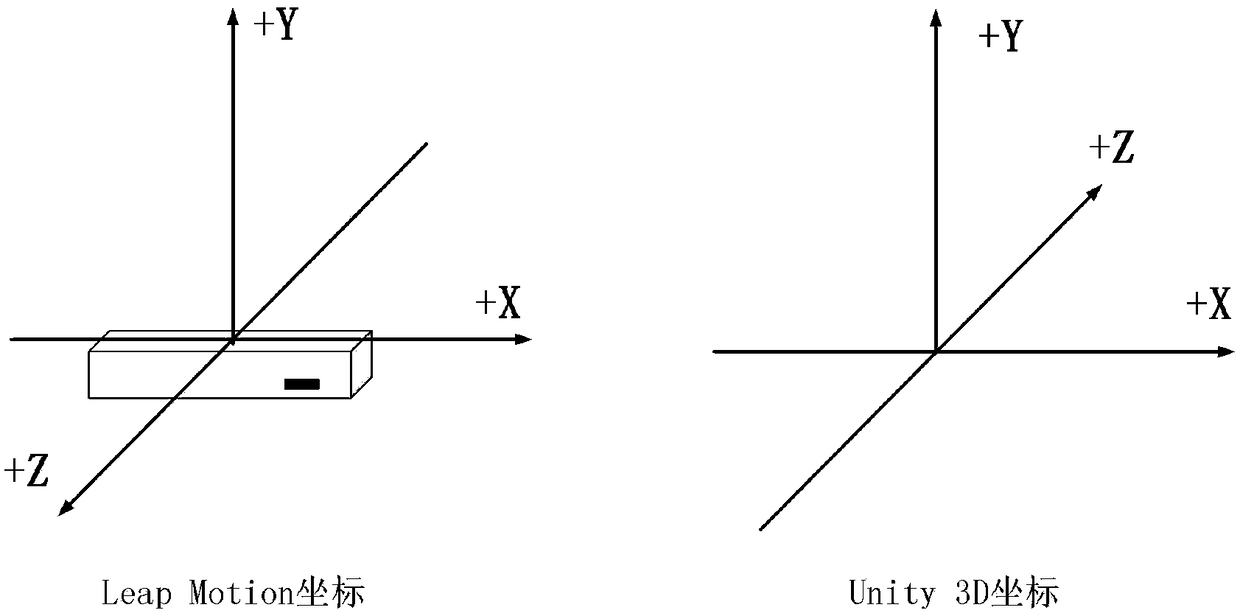

The invention belongs to the technical field of sand painting drawing, particularly relates to a sand painting drawing method based on Leap Motion gesture recognition, mainly solves the technical problems that inaccuracy and poorer timeliness are caused by the fact that the overall implementation process of vision-based gesture recognition is tedious and is susceptible to light and complicated environment and overcomes defects that sand painting performance at the present stage does not have propagation and storage functions. The virtual sand painting drawing process is completed by use of the characteristic of high Leap Motion capture gesture accuracy in combination with powerful integration and built-in functions of Unity 3D; by improving a feature extraction algorithm and optimizing the tracking process, the gesture recognition accuracy is higher, and the tracking effect is more stable; particles are added to simulate the falling effect, and finally, the vivid sand painting drawing effect is realized.

Owner:ZHONGBEI UNIV

Hand rehabilitation training method based on Leap Motion controller

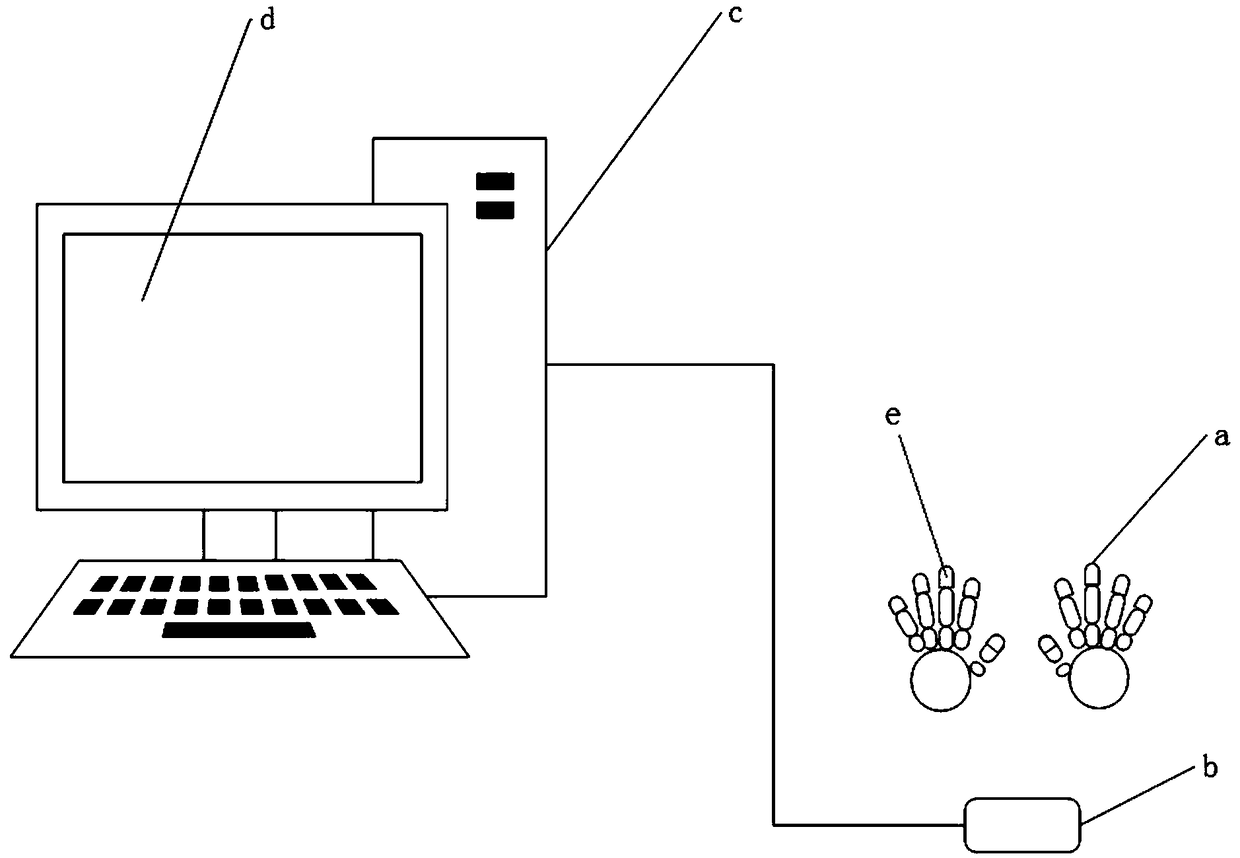

Inactive · CN107491648A · Improve training motivation · Reduce rehabilitation costs · Input/output for user-computer interaction · Gymnastic exercising · Somatosensory system · Display device

The invention discloses a hand rehabilitation training method based on a Leap Motion controller. The method comprises the following steps: A, standard hand motion data is entered into a computer host; B, a patient completes rehabilitation training motions by following the standard hand motions played on a display, and data of the patient's hand motions is obtained in real time and transmitted to the computer host while the patient performs the motions; C, the acquired hand motion data is processed, the effective data is extracted from it, and training characteristic data is then obtained through feature extraction; D, the motions completed by the patient are evaluated. By combining virtual reality technology with leap motion interaction technology, the hand motions of the patient are displayed in real time and the patient can see his or her own training process in a virtual reality environment. This improves the patient's training enthusiasm, turns traditional passive training into active training, improves the recovery training effect, and lowers the patient's rehabilitation cost.

Owner:TSINGHUA UNIV

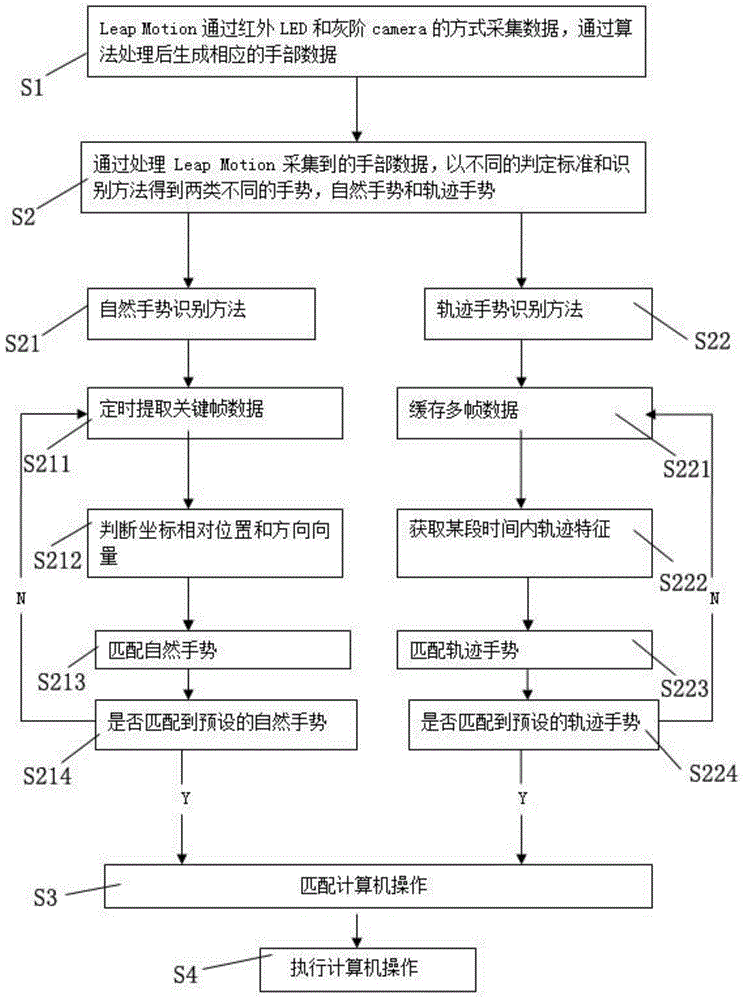

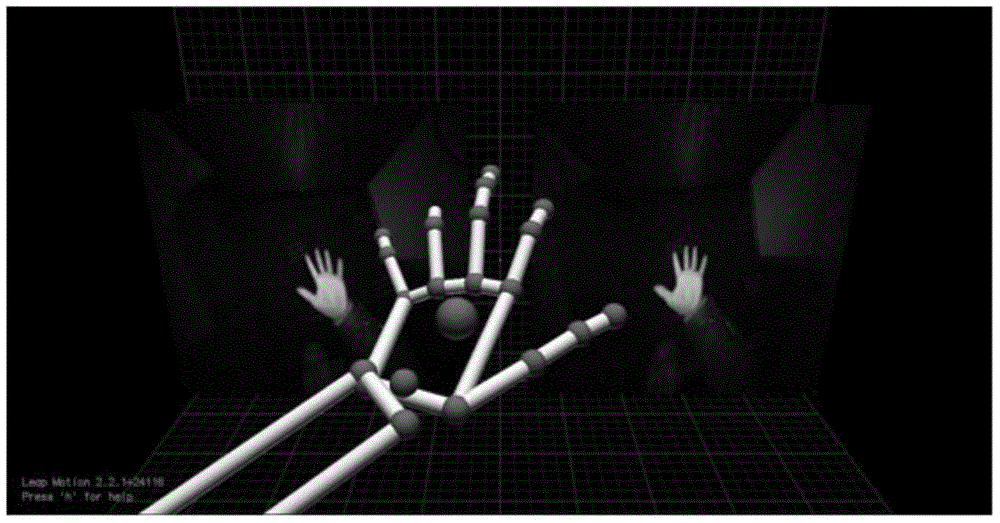

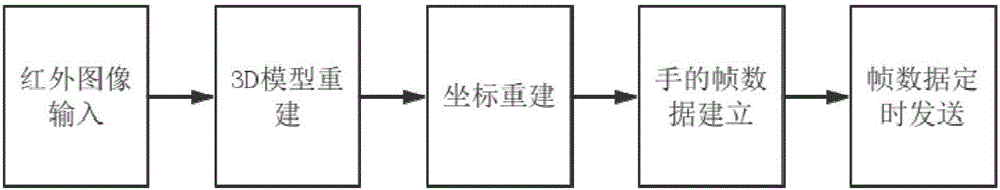

Non-contact type computer operating method based on Leap Motion

Inactive · CN104793738A · Freedom of manipulation · High precision · Input/output for user-computer interaction · Graph reading · Human body · Hand parts

The invention relates to a non-contact computer operating method based on Leap Motion. The method comprises the following steps: 1, data are collected by the Leap Motion using infrared LEDs and grayscale cameras, and corresponding hand data are generated after algorithmic processing; 2, the hand data collected by the Leap Motion are processed, and two kinds of gestures, natural gestures and track gestures, are obtained using different judgment standards and identification methods; 3, the gesture is matched to a computer operation; 4, the computer operation is executed. The advantages of the method are as follows: information is input by detecting motions of the human body, so the whole operating and controlling process is unconstrained; the user can use the method freely in many environments and can operate and control a computer with only a simple gesture; somatosensory technology is applied to system-level operation of computer equipment for the first time; and the shortcomings of the Kinect, namely high latency and poor close-range precise recognition, are compensated for.

Owner:SHANGHAI OCEAN UNIV

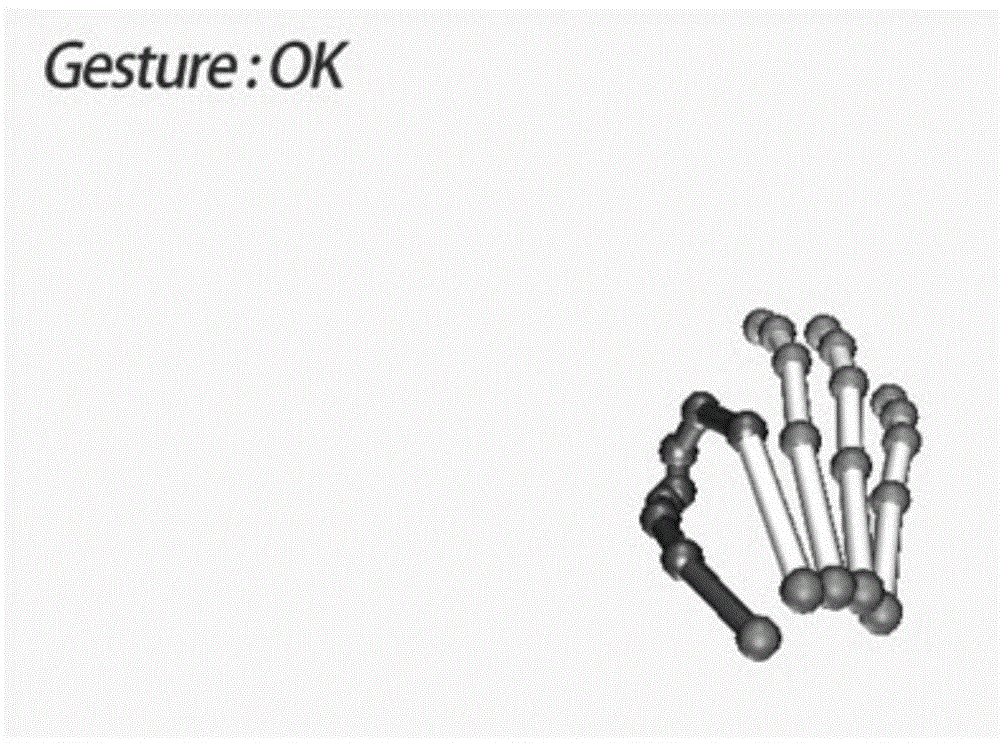

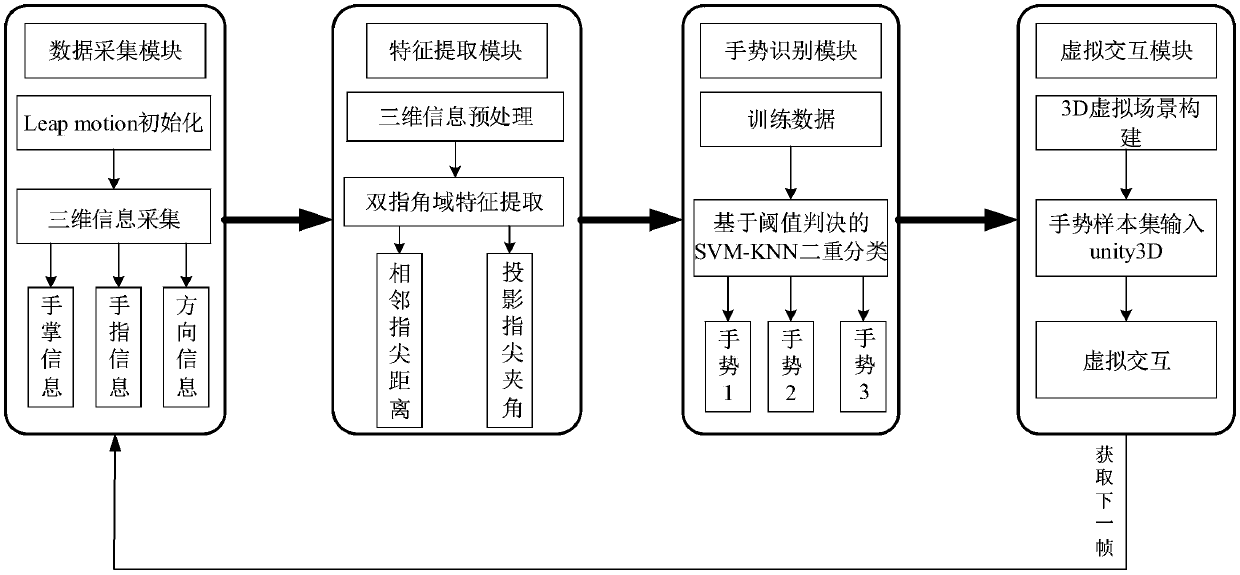

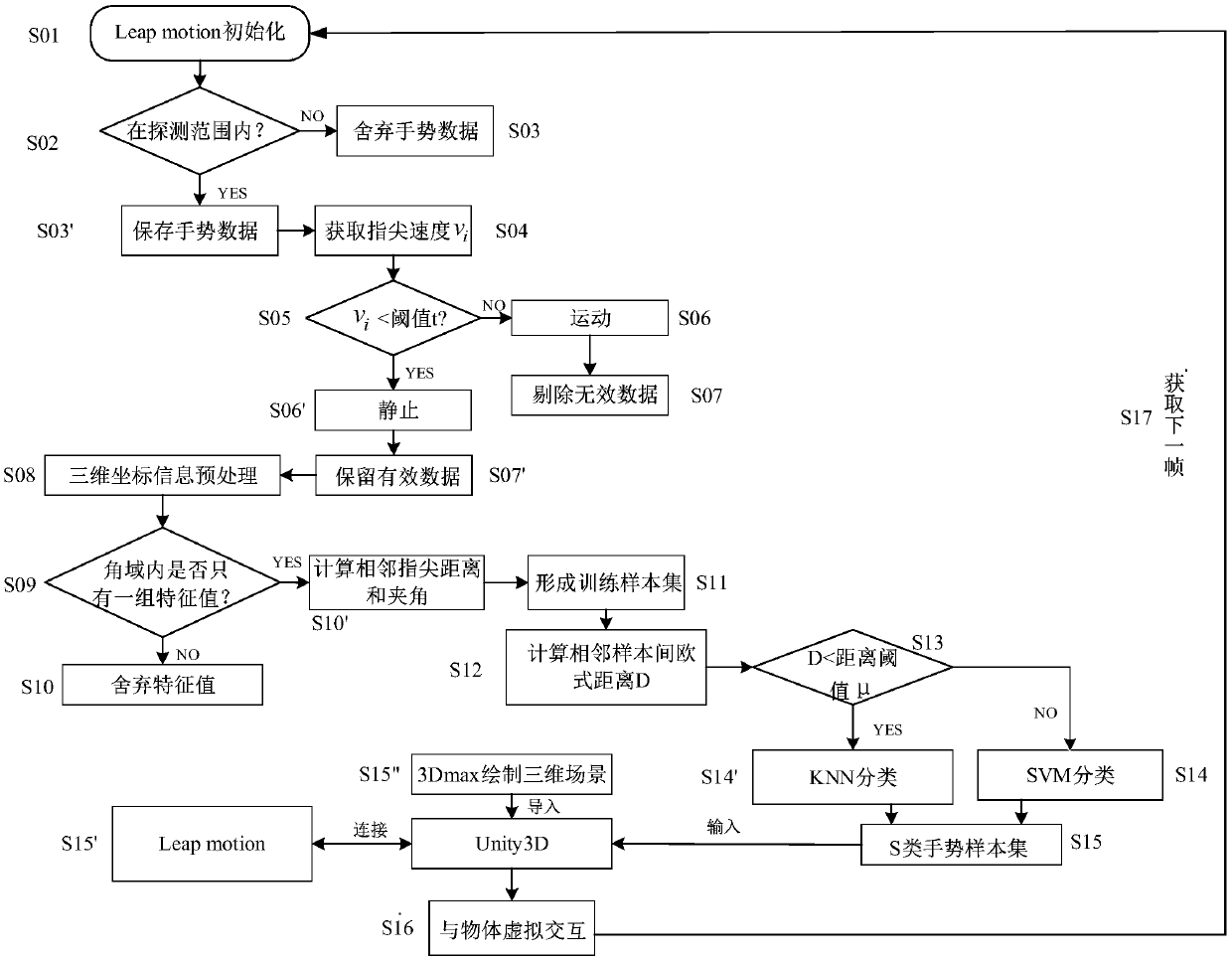

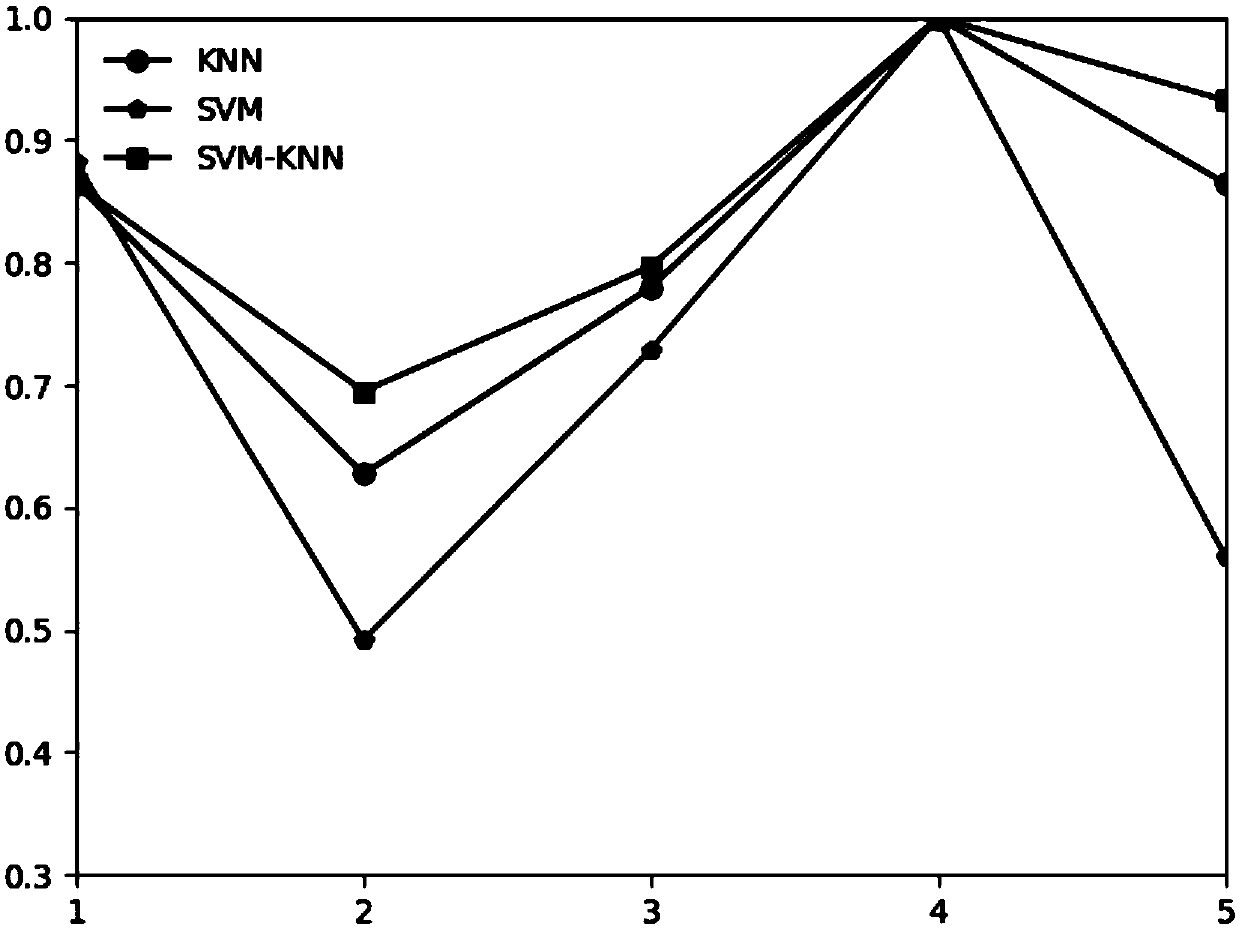

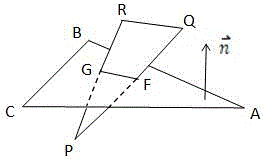

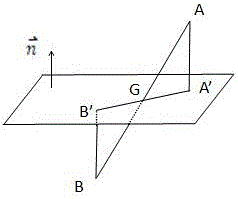

A gesture interaction system based on double finger angle domain characteristics and a working method thereof

Active · CN109597485A · Easy to separate and extract · Easy to identify · Input/output for user-computer interaction · Character and pattern recognition · Interaction control · Interaction systems

The invention relates to a gesture interaction system based on double finger angle domain characteristics and a working method thereof. The gesture interaction system comprises a data acquisition module, a feature extraction module, a gesture recognition module and a virtual interaction module. The data acquisition module initializes the Leap Motion and acquires gesture information with it; the feature extraction module performs three-dimensional coordinate preprocessing and gesture feature extraction; the gesture recognition module feeds the extracted double-finger angle domain features into an SVM-KNN classification algorithm to classify gestures; and the virtual interaction module connects the Leap Motion with Unity 3D so that differently classified gestures exert different interactive control over instruments in a gymnasium scene. According to the invention, the user experience is improved and user immersion is enhanced.

Owner:SHANDONG UNIV

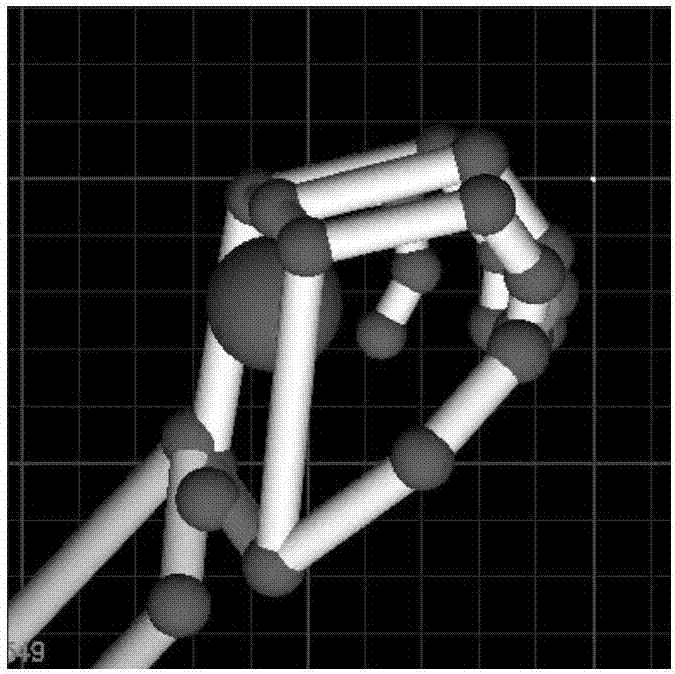

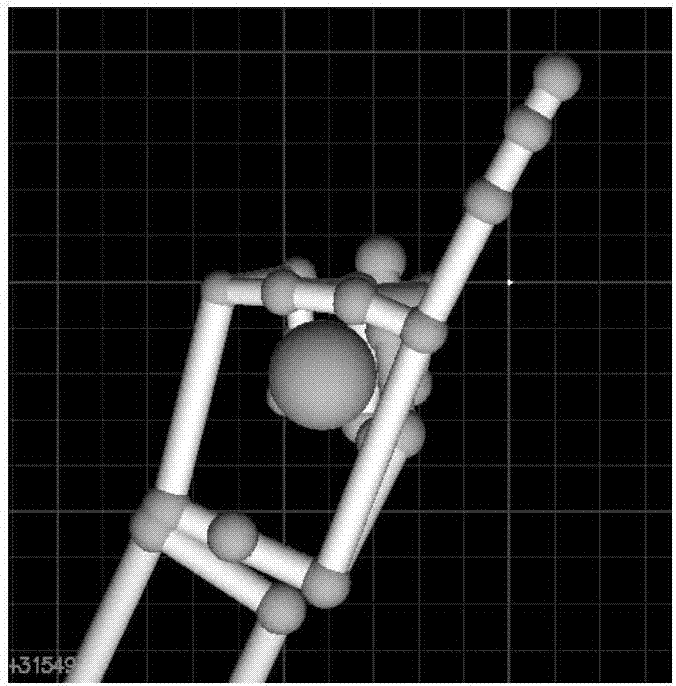

Virtual digital sculpture method based on natural gesture

Active · CN106406875A · Exact joint coordinates · Human-computer interaction is natural · Input/output for user-computer interaction · Software design · Deformation effect · Joint coordinates

The invention discloses a virtual digital sculpture method based on a natural gesture. The virtual digital sculpture method comprises the following steps of obtaining a gesture position through Leap Motion; and performing virtual sculpture modeling. According to the digital sculpture method, two major models, including an established to-be-sculptured model and a virtual hand model which is established based on a joint coordinate obtained from Leap Motion, are adopted in a task of the virtual sculpture; when a human hand moves under Leap Motion to further control a virtual hand and a fixed to-be-sculptured model to be subjected to slight collision, whether a crossed point exists or not in the collision position is detected through a triangle-triangle crossing algorithm in a three-dimensional space; and then real-time correction on a crossed point coordinate is performed through a virtual sculpture deformation algorithm in an intersecting line position of the crossed point according to calculation of the collision depth, so as to achieve a sculpture deformation effect.

Owner:SOUTH CHINA UNIV OF TECH

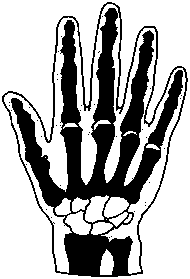

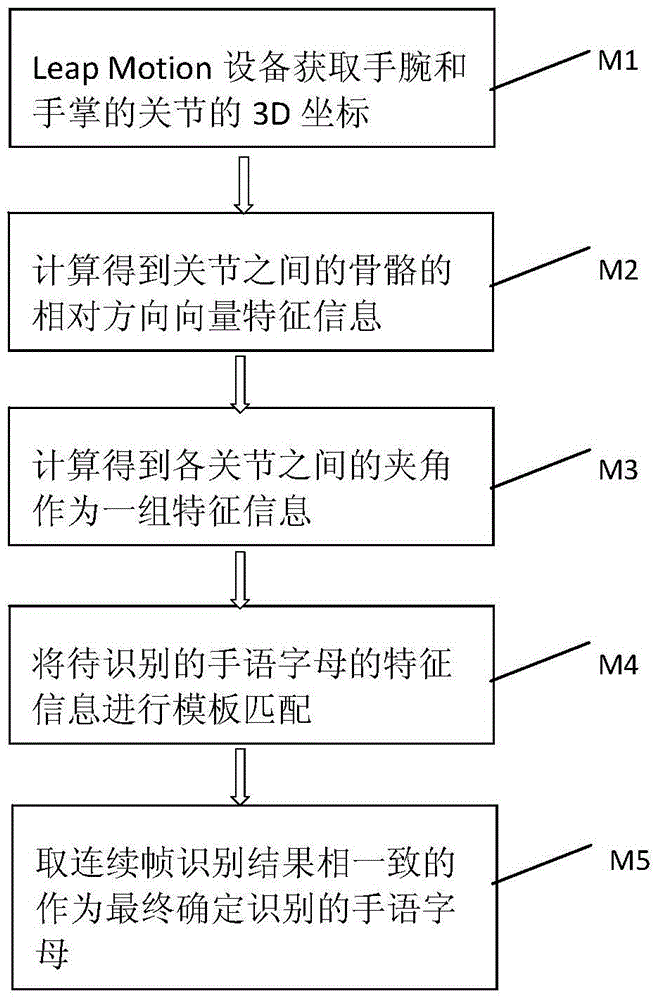

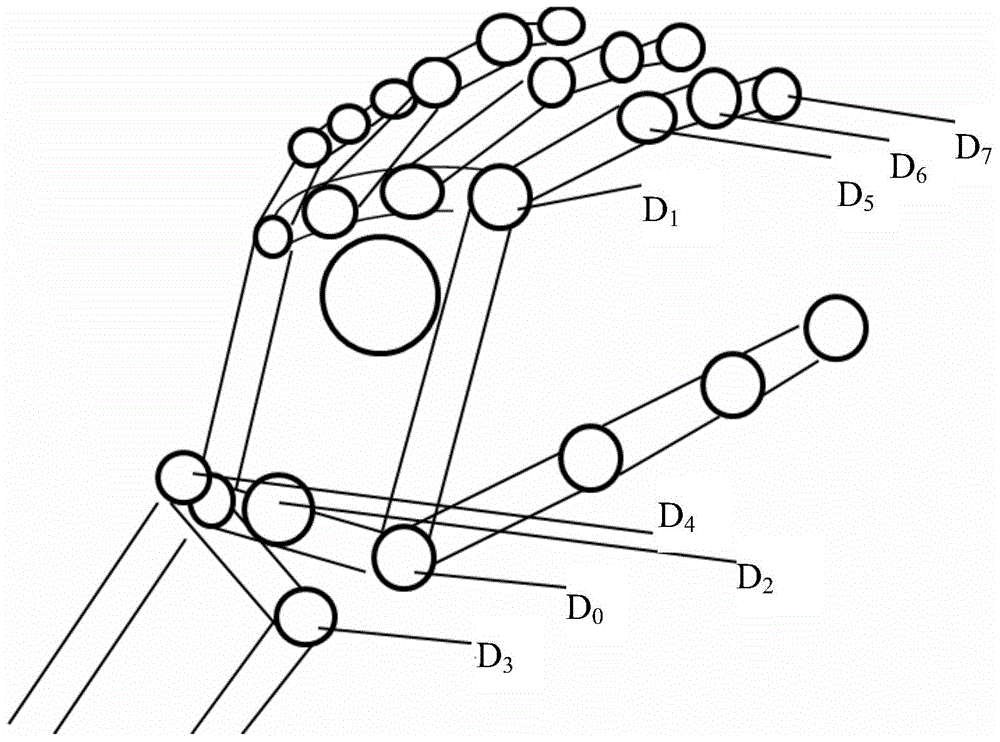

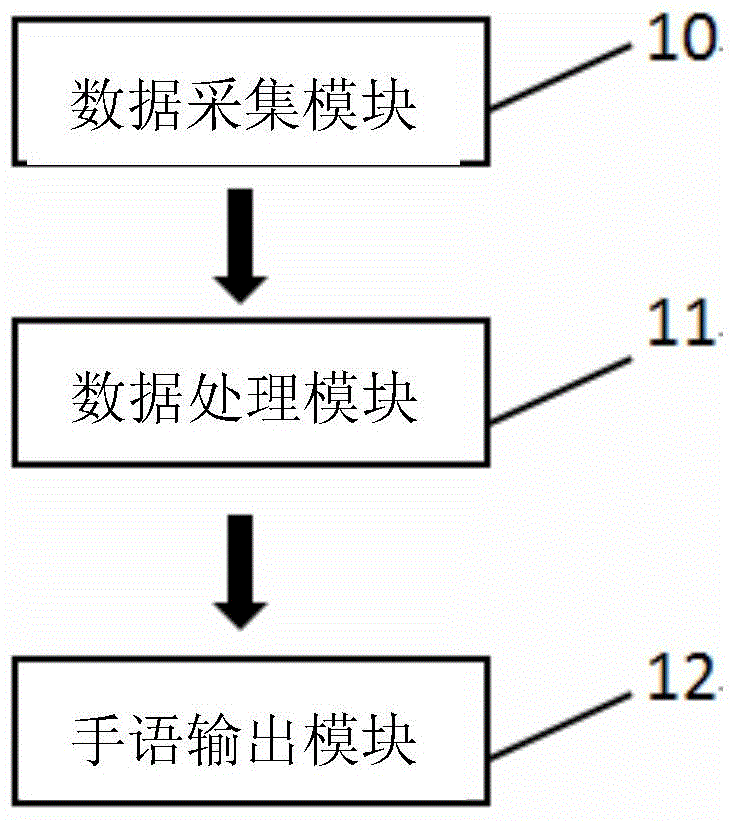

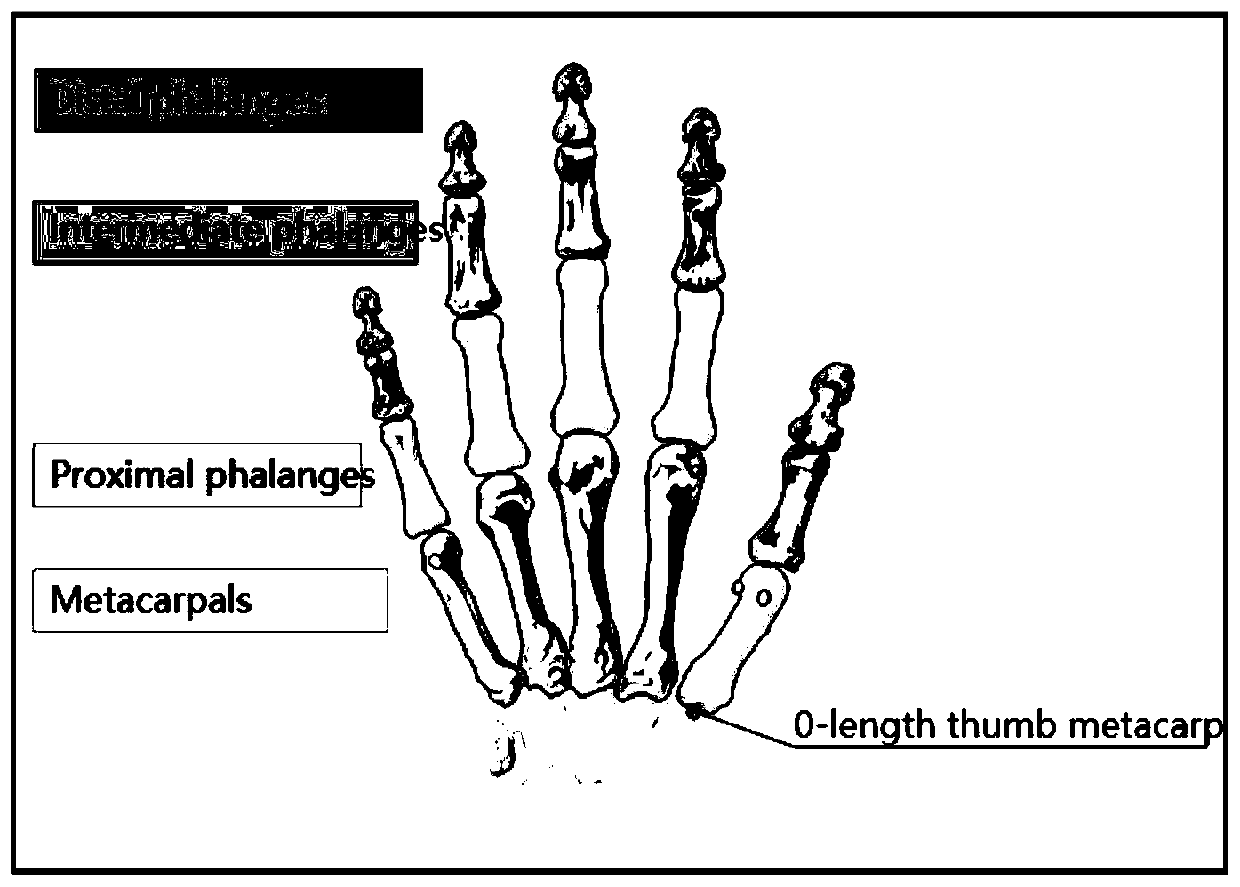

Manual alphabet identification method based on Leap Motion

Inactive · CN104866824A · Improve naturalness · Reduce the impact · Character and pattern recognition · Acquired characteristic · Near neighbor

The invention discloses a manual alphabet identification method based on Leap Motion. The method comprises steps of acquiring palm and wrist bone articulation point 3D coordinate information through a depth camera Leap Motion device; performing correlative calculation on the 3D coordinate information so as to obtain bending angle information of hand joints; acquiring hand type feature information and wrist bending degree feature information through feature processing on the angle information; calculating the Euclidean distance between the acquired feature information and templates; and identifying manual alphabets according to a maximum probability criterion, a nearest neighbor criterion and a successive frame flow result consistent principle. By employing the method, the Chinese manual alphabets can be effectively and rapidly identified, manual alphabet elements are relatively independent, and sign language can be identified in a real time manner through the identification of manual alphabet continuous sequences. By employing the method, the sign language based on the manual alphabets can be identified in a real time manner, so that the deaf can effectively communicate with others by the use of a wearable device.

Owner:SOUTH CHINA UNIV OF TECH
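Two steps from the manual alphabet abstract above, computing a joint bending angle from 3D coordinates and matching a feature vector to templates by Euclidean distance, can be sketched as follows. This is a minimal illustration under assumptions: the template values and letter labels are invented, and the maximum probability criterion and frame-consistency check described in the abstract are not reproduced.

```python
import math
from typing import Dict, Sequence


def bend_angle(a: Sequence[float], b: Sequence[float], c: Sequence[float]) -> float:
    """Angle in degrees at joint b formed by the 3D points a, b and c."""
    u = [a[i] - b[i] for i in range(3)]
    v = [c[i] - b[i] for i in range(3)]
    dot = sum(x * y for x, y in zip(u, v))
    norm = math.sqrt(sum(x * x for x in u)) * math.sqrt(sum(x * x for x in v))
    if norm == 0.0:
        raise ValueError("joint points must be distinct")
    # Clamp to guard against rounding slightly outside [-1, 1].
    cosine = max(-1.0, min(1.0, dot / norm))
    return math.degrees(math.acos(cosine))


def nearest_template(features: Sequence[float],
                     templates: Dict[str, Sequence[float]]) -> str:
    """Return the label whose template is closest in Euclidean distance."""
    return min(templates, key=lambda label: math.dist(features, templates[label]))


if __name__ == "__main__":
    # Hypothetical templates: two bending angles per letter, in degrees.
    templates = {"A": [175.0, 90.0], "B": [60.0, 60.0]}
    angle = bend_angle((1.0, 0.0, 0.0), (0.0, 0.0, 0.0), (0.0, 1.0, 0.0))
    print(angle, nearest_template([170.0, angle], templates))
```

Using angles rather than raw coordinates makes the features independent of where the hand sits above the sensor, which fits the abstract's choice of bending angles as the hand-shape description.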

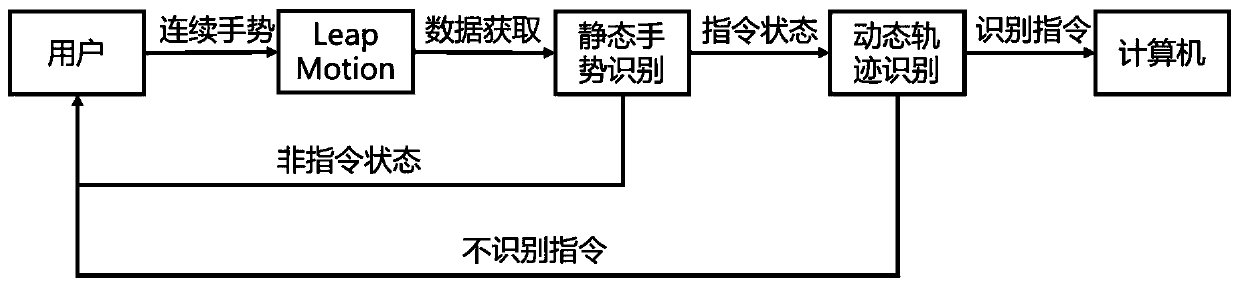

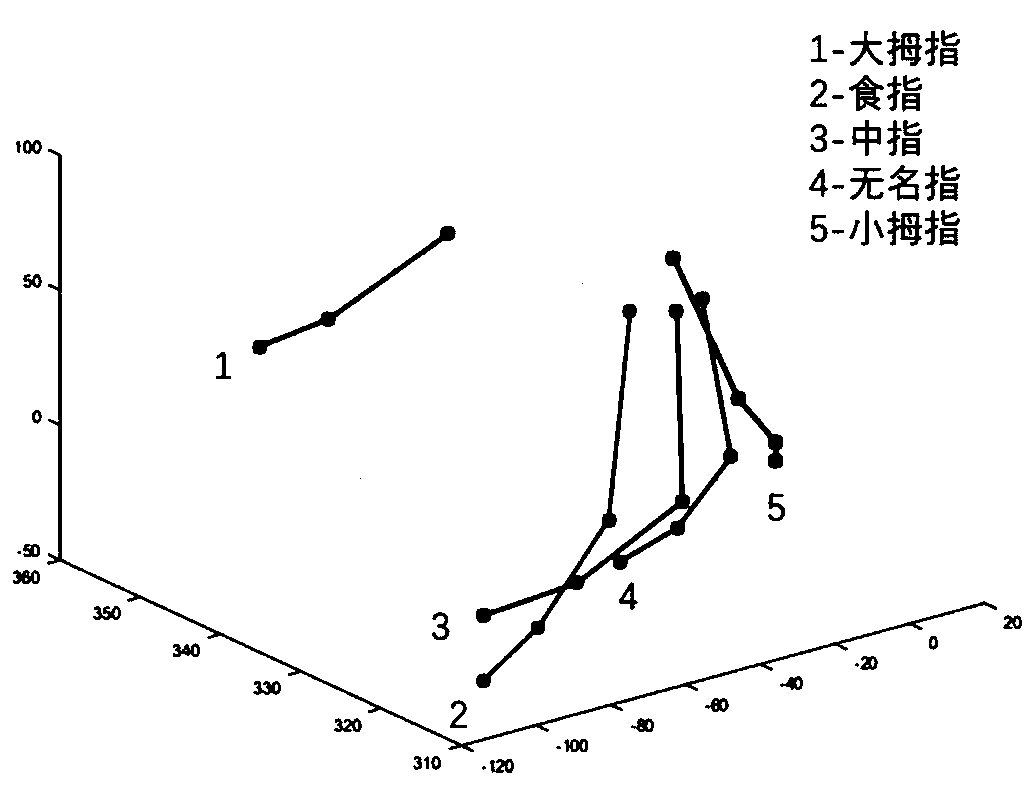

Complex dynamic gesture recognition method based on Leap Motion

Active · CN109993073A · Reduced complexity requirements · Improve accuracy · Character and pattern recognition · Support vector machine · Feature vector

The invention relates to a complex dynamic gesture recognition method based on Leap Motion, and belongs to the field of artificial intelligence and man-machine interaction. The method uses static gesture recognition and continuous track recognition for complex dynamic gesture recognition. Hand information is captured by a somatosensory sensor while the user teaches the gestures, a support vector machine and a learning-based feature vector extraction mode are adopted for static gesture learning, and the static gestures in the teaching process are all marked as instruction states. For a static gesture in the instruction state, information on the vertex of the distal bone of each finger and the central point of the palm is extracted, and continuous dynamic track information is generated for learning. The complex dynamic gesture is decomposed frame by frame, and the instruction is identified after judging whether the complex dynamic gesture is an instruction gesture. According to the method, the accuracy of dynamic gesture recognition is greatly improved, the requirement on the complexity of dynamic gestures is lowered, and the man-machine interaction process based on visual acquisition equipment becomes friendlier and more natural.

Owner:BEIJING UNIV OF TECH

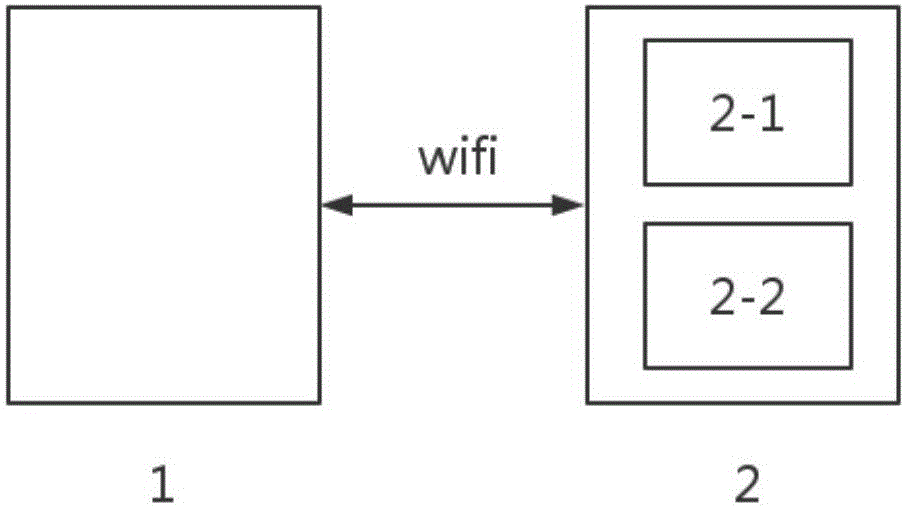

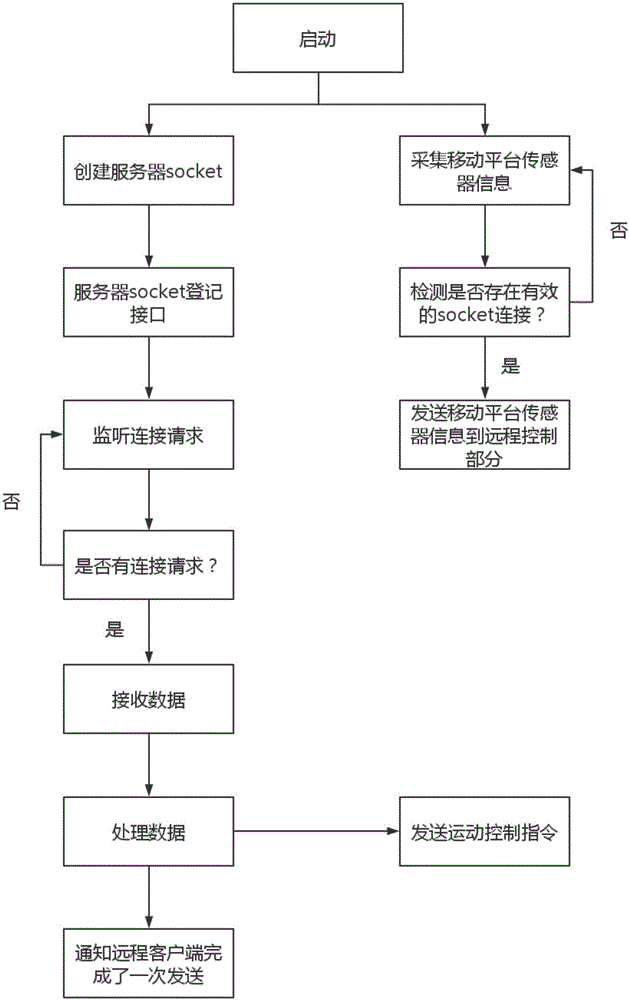

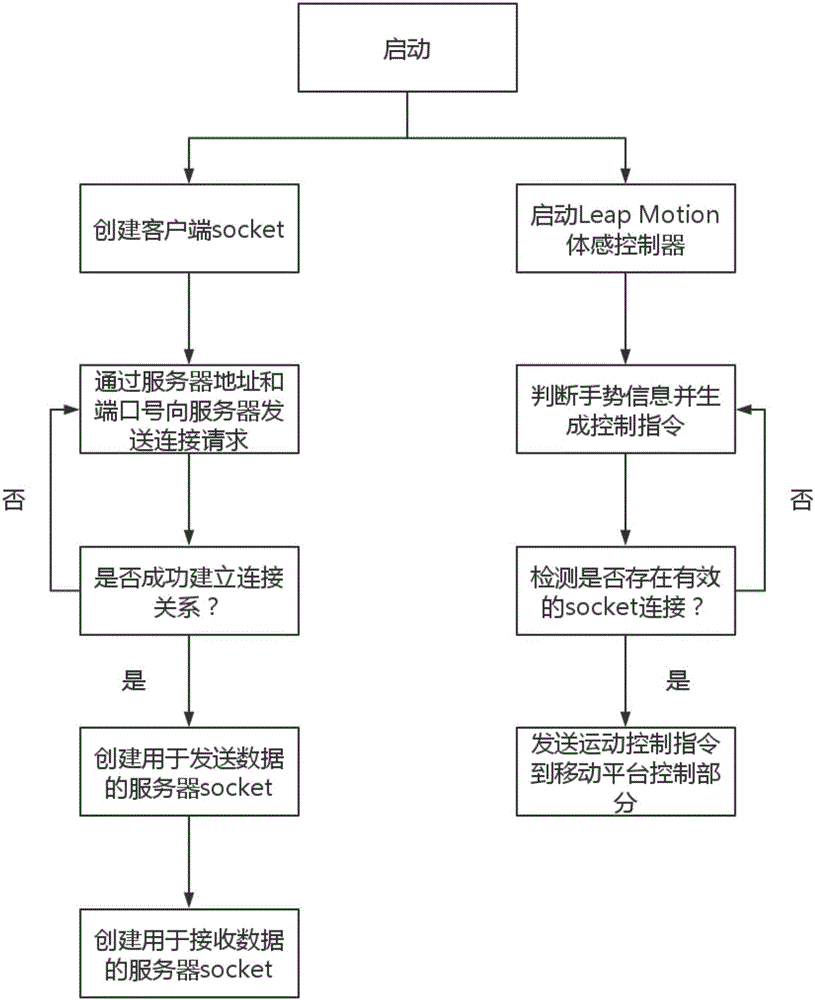

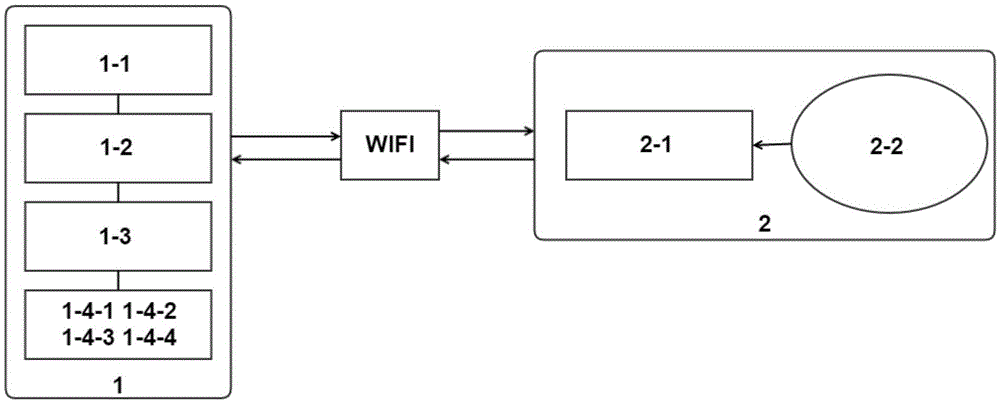

Remote control system of mobile platform based on gestures

Inactive · CN105912105A · Enables touchless gesture control · Improve applicability · Input/output for user-computer interaction · Graph reading · Control system · Remote control

The invention relates to a gesture-based remote control system for a mobile platform, in the field of control systems. The remote control system comprises a mobile platform control part, a remote control part and a gesture control operation scheme. The mobile platform control part comprises a mobile platform and a mobile platform controller, which is an industrial control machine that runs the mobile platform control program. The remote control part comprises a Leap Motion body sensing controller and a notebook computer that runs the remote control program. In the gesture control operation scheme, the remote control part reads the number of hands and the gesture information of the operator's hands detected by the Leap Motion body sensing controller, and a corresponding control instruction is sent to the industrial control machine over WiFi. The system addresses the defects of the prior art that the operator's intent cannot be expressed directly, the man-machine interaction means are not natural, and the system is difficult to operate.

Owner:HEBEI UNIV OF TECH

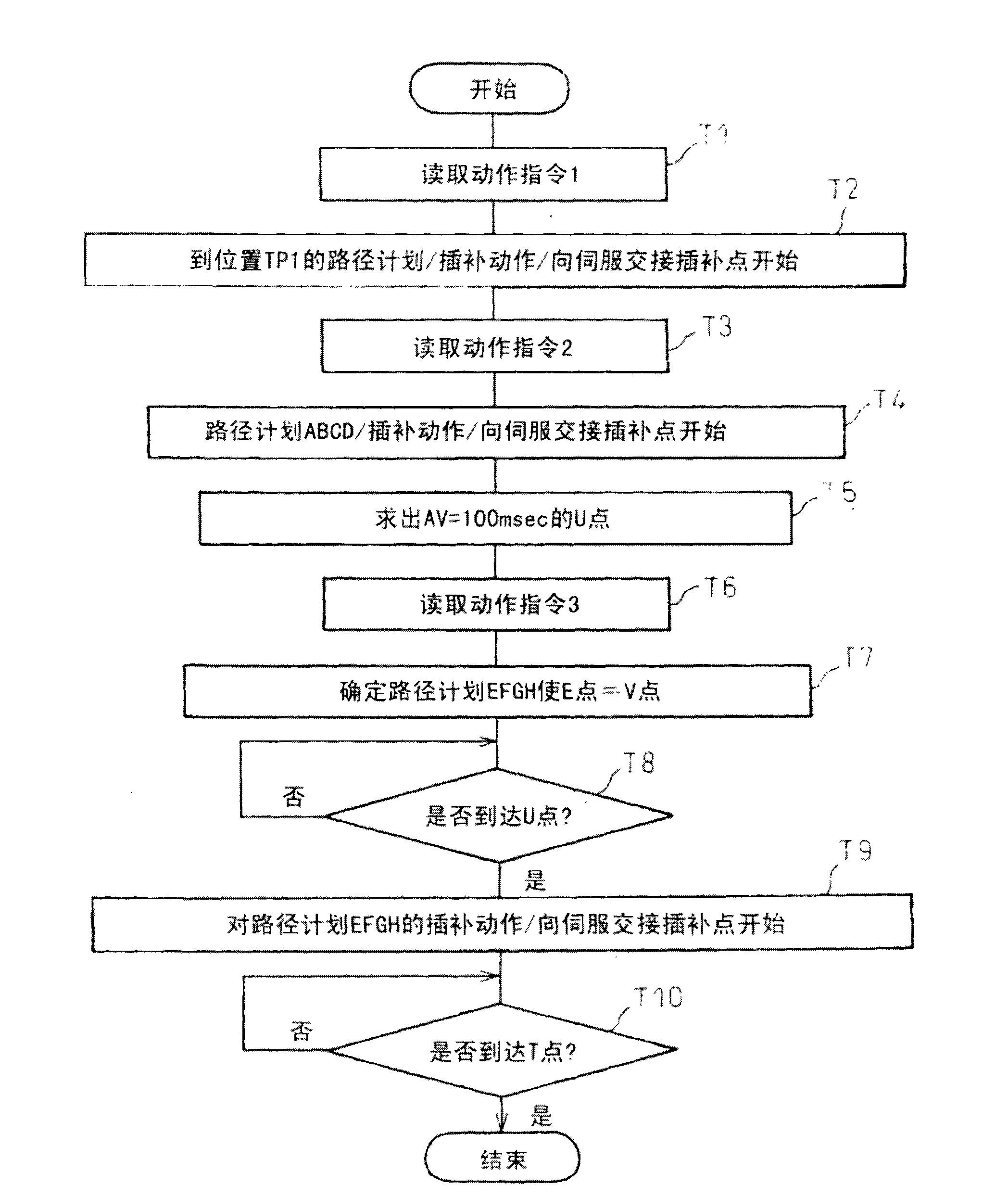

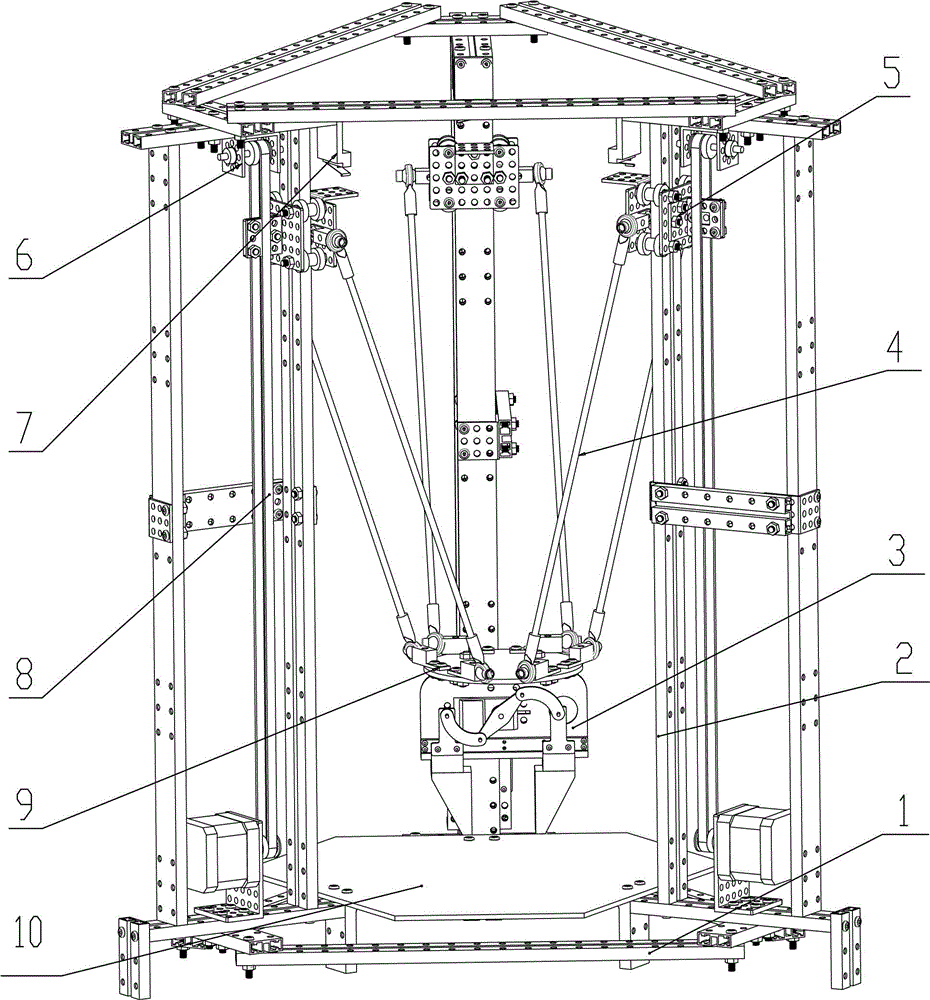

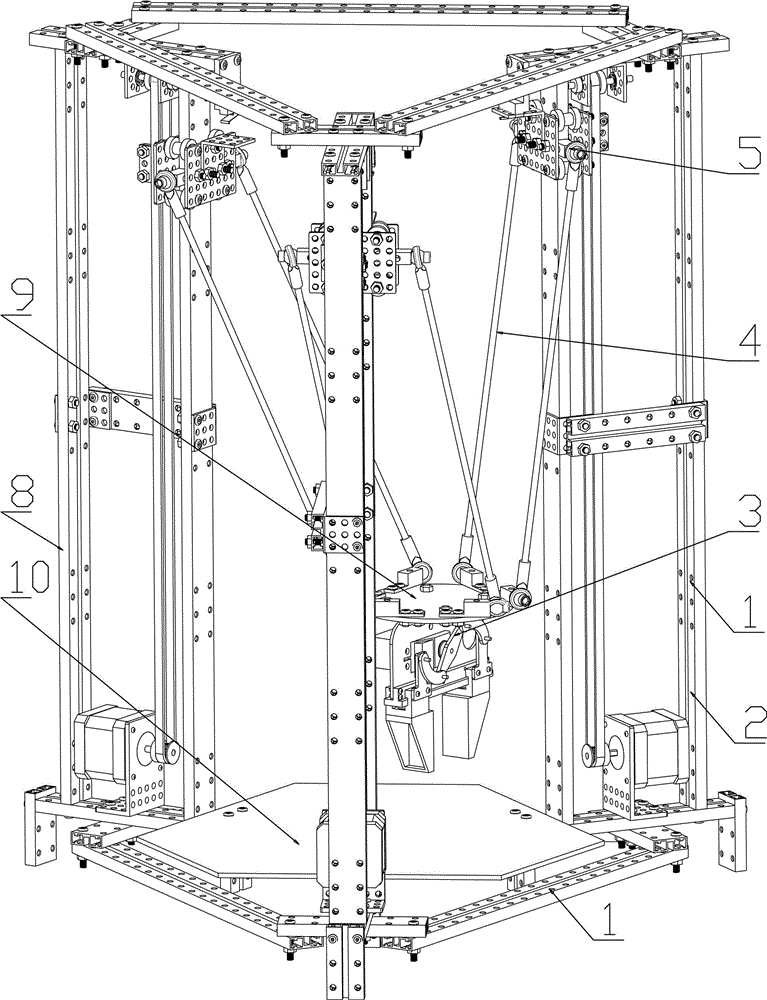

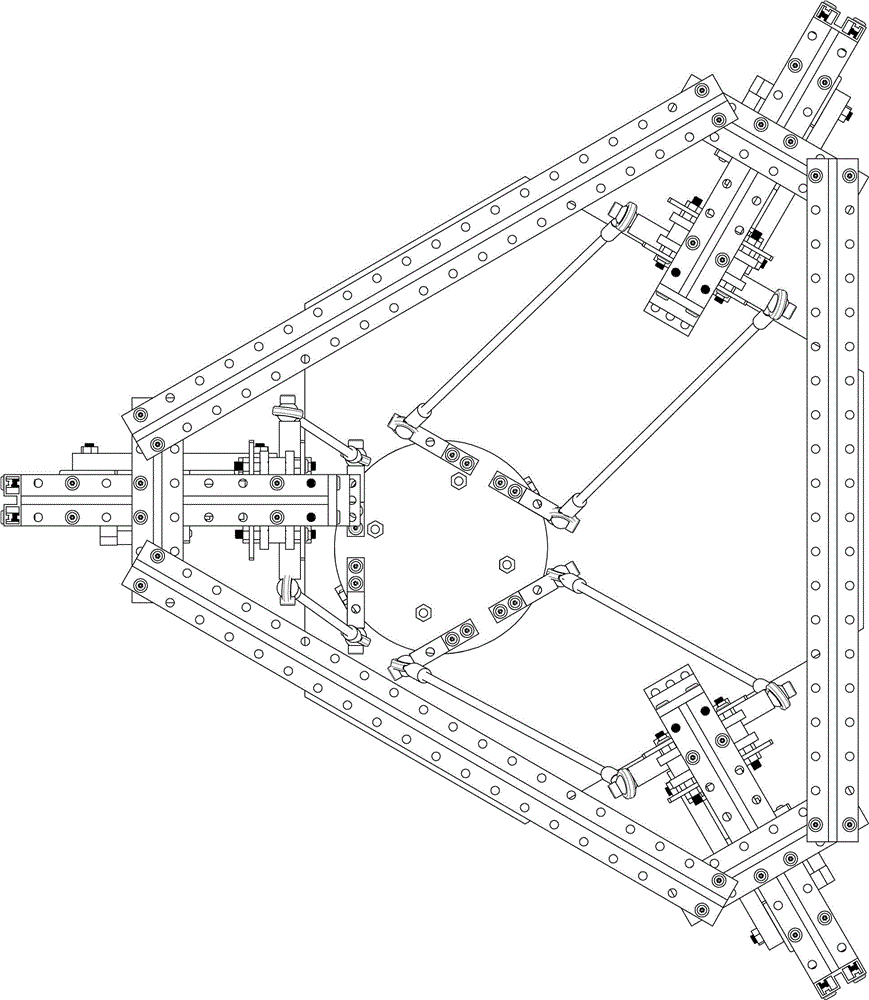

Leap motion parallel mechanical arm based on Leap Motion and operation method and control system of leap motion parallel mechanical arm

The invention relates to a leap motion parallel mechanical arm based on Leap Motion and an operation method and control system of the leap motion parallel mechanical arm. Three connecting supports and three stepping motor supports are alternately connected end to end to form a hexagonal frame base. Minitype touch switches are fixedly mounted on the stepping motor supports. Synchronous belts for driving pulley block supports to slide vertically are arranged on synchronous belt pulley frames. The upper ends of threaded rod assemblies are connected with the pulley block supports, and the lower ends of the threaded rod assemblies are connected with mechanical arm fixing pieces. A mechanical arm body for clamping objects is fixed to the lower ends of the mechanical arm fixing pieces. A bottom tray for storing the objects is arranged under the mechanical arm body. The mechanical arm body is controlled by vertical sliding of the three pulley block supports to take and place the objects stored on the bottom tray. The motion of the mechanical arm is controlled by combining the leap motion technology and the Leap Motion controller, a traditional program control method is replaced, and automatic control can be achieved conveniently.

Owner:佟彧

Wheeled mobile robot controlled by gestures and operation method of wheeled mobile robot

Inactive · CN105643590A · Realize remote control · Control start · Programme-controlled manipulator · Drive wheel · Engineering

The invention provides a wheeled mobile robot controlled by gestures and an operation method of the wheeled mobile robot and relates to the field of robots. The wheeled mobile robot comprises a mobile operation part and a remote control part, wherein the mobile operation part comprises a three-layer aluminum alloy section framework, a Kinect camera, an embedded fanless industrial personal computer, a brushless direct-current motor controller, a lithium battery, two drive wheels and a universal wheel; the remote control part comprises a notebook computer connected with a Leap Motion sensing controller. The operation method is implemented as follows: the Leap Motion sensing controller is used for gesture recognition, and remote control is performed through a specific gesture. The wheeled mobile robot overcomes the defect that a motion instruction cannot be sent to a wheeled mobile robot in the prior art through recognition of the gestures of a controller in a long distance, and the man-machine interaction means between the controller in a long distance and the robot is rigid and unnatural.

Owner:HEBEI UNIV OF TECH

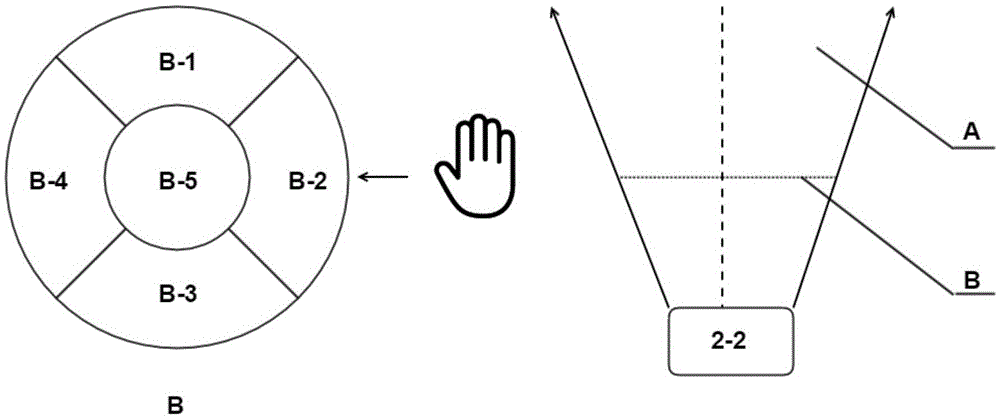

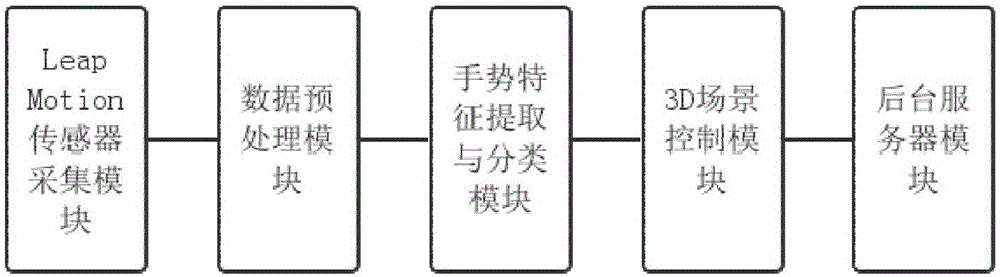

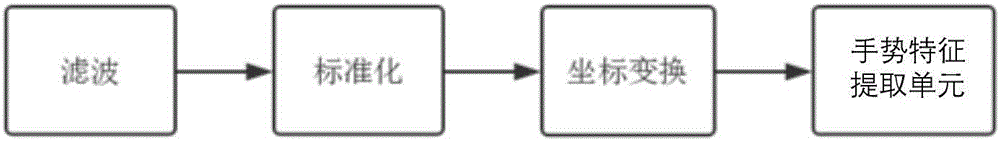

Training system for children suffering from autistic spectrum disorders

Inactive · CN106383586A · Low cost · Rich interactive scenes · Input/output for user-computer interaction · Graph reading · Spatial Orientations · Animation

The invention discloses a training system for children suffering from autistic spectrum disorders. The training system comprises a Leap Motion sensor acquisition module, a data pre-processing module, a gesture characteristic extraction and classification module and a 3D scene control module, wherein the Leap Motion sensor acquisition module acquires original gesture data of a user, and transmits the data to the data pre-processing module; the data pre-processing module pre-processes the original gesture data; the gesture characteristic extraction and classification module extracts gesture characteristics in the gesture data, classifies the gesture characteristics, and sends a classification result to the 3D scene control module; and the 3D scene control module controls switching of a scene and motion of a model according to the gesture classification result. According to the training system disclosed by the invention, a natural gesture interaction manner and a realistic 3D scene animation are adopted; therefore, the system has the characteristics of being rich in interactive scene, convenient to use and the like; furthermore, the system is not limited by a use place of a user and spatial orientation; and training is also relatively reliable.

Owner:SOUTHEAST UNIV

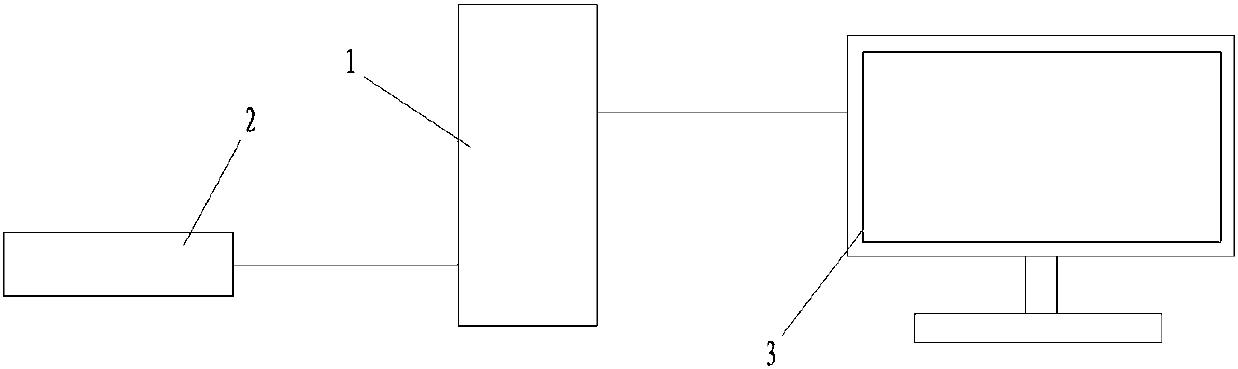

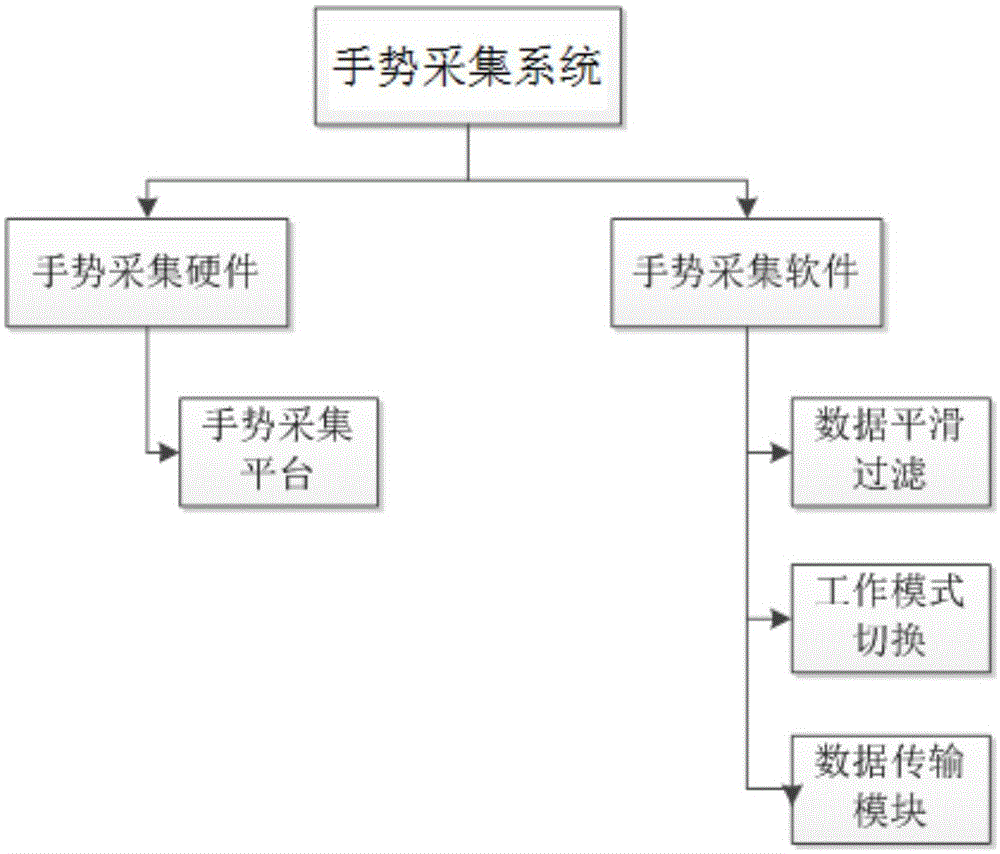

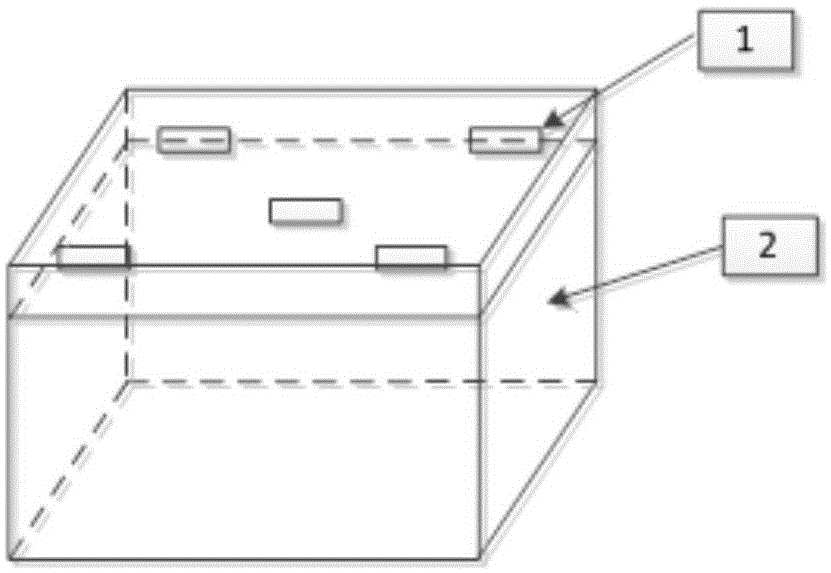

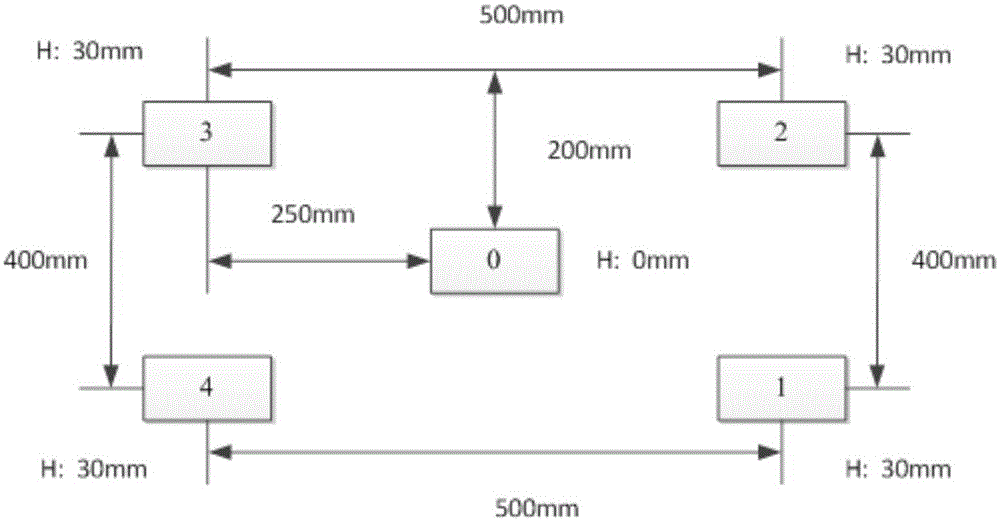

Gesture collection device for manipulating industrial robot and corresponding gesture collection method

Inactive · CN105094373A · Increase workspace · Improve working precision · Input/output for user-computer interaction · Habit · Operational behavior

The invention discloses a gesture collection device for a manipulator industrial robot and a corresponding gesture collection method. The gesture collection device comprises a gesture collection platform. The gesture collection platform comprises a collection device box. The collection device box comprises at least two layers. The first layer is provided with at least one Leap motion sensor with an adjustable angle, the second layer is provided with a computer host, and the Leap motion sensor is in communication with the computer host. According to the gesture collection device for the manipulator industrial robot and the corresponding gesture collection method, the working space of the gesture collection device is enlarged, the precision of the gesture collection device is improved, the operation behavior habit of people is met, and the control demand of the industrial robot is met.

Owner:SHENZHEN HUIDA TECH CO LTD

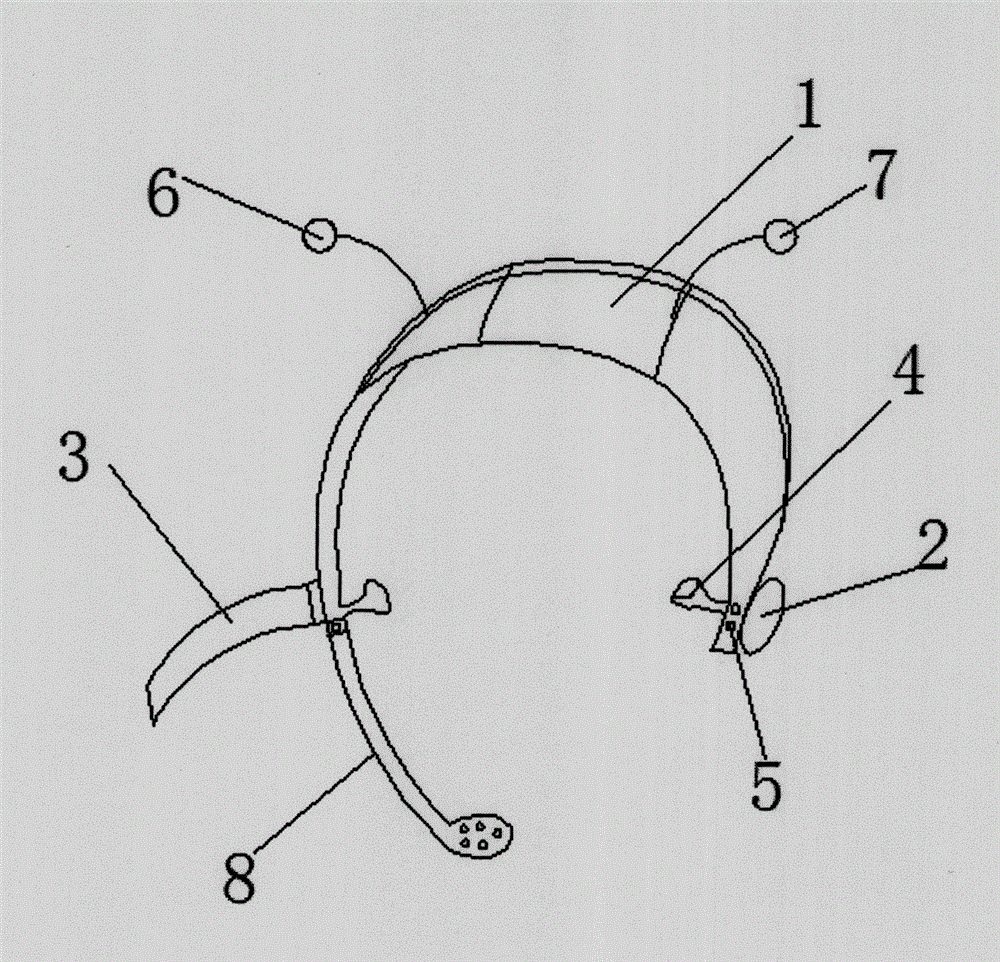

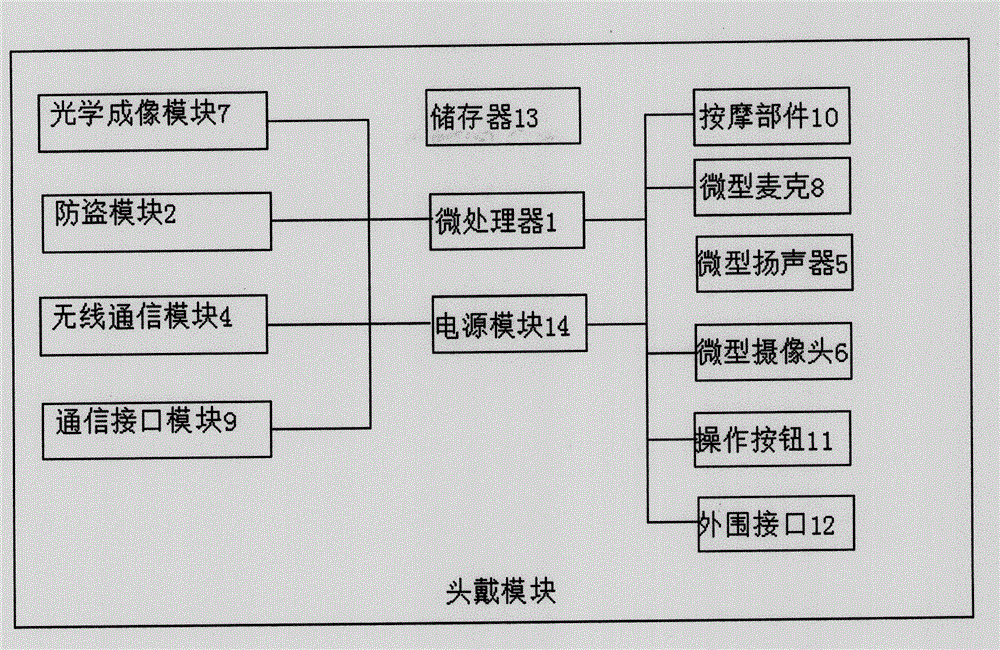

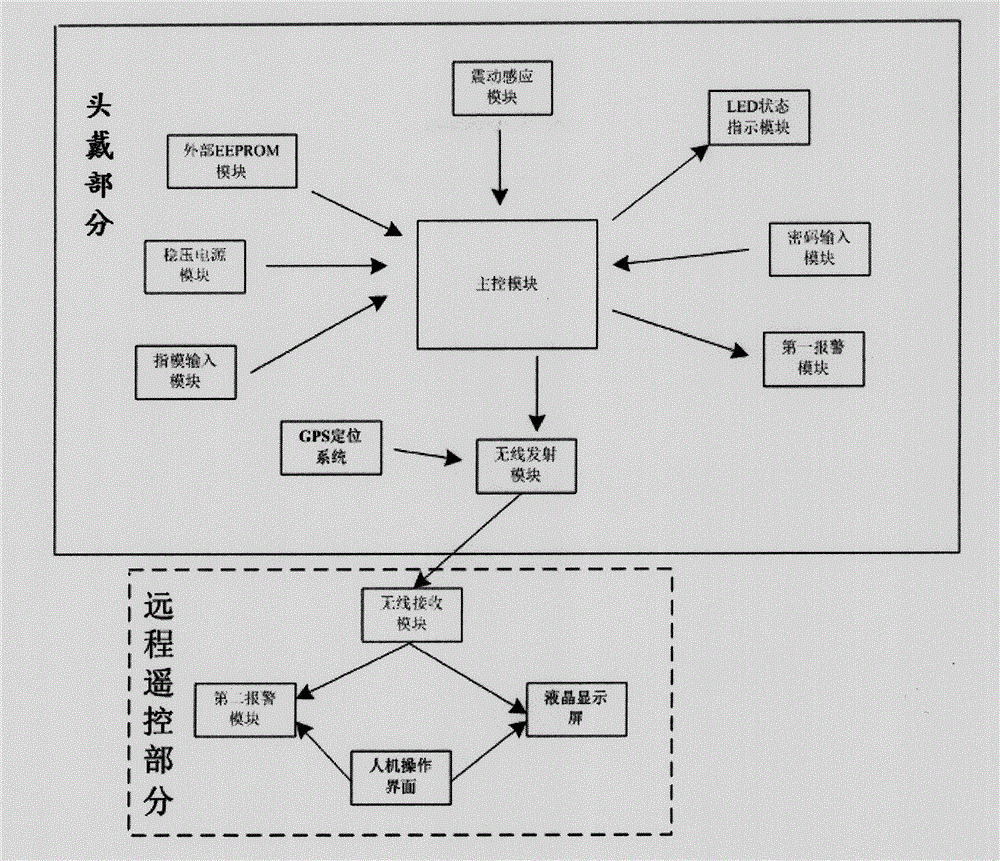

Wearable computer

Inactive · CN104597971A · Easy to carry · Easy retrieval · Input/output for user-computer interaction · Digital data processing details · Optical sensing · Human–computer interaction

Owner:XUCHANG UNIV

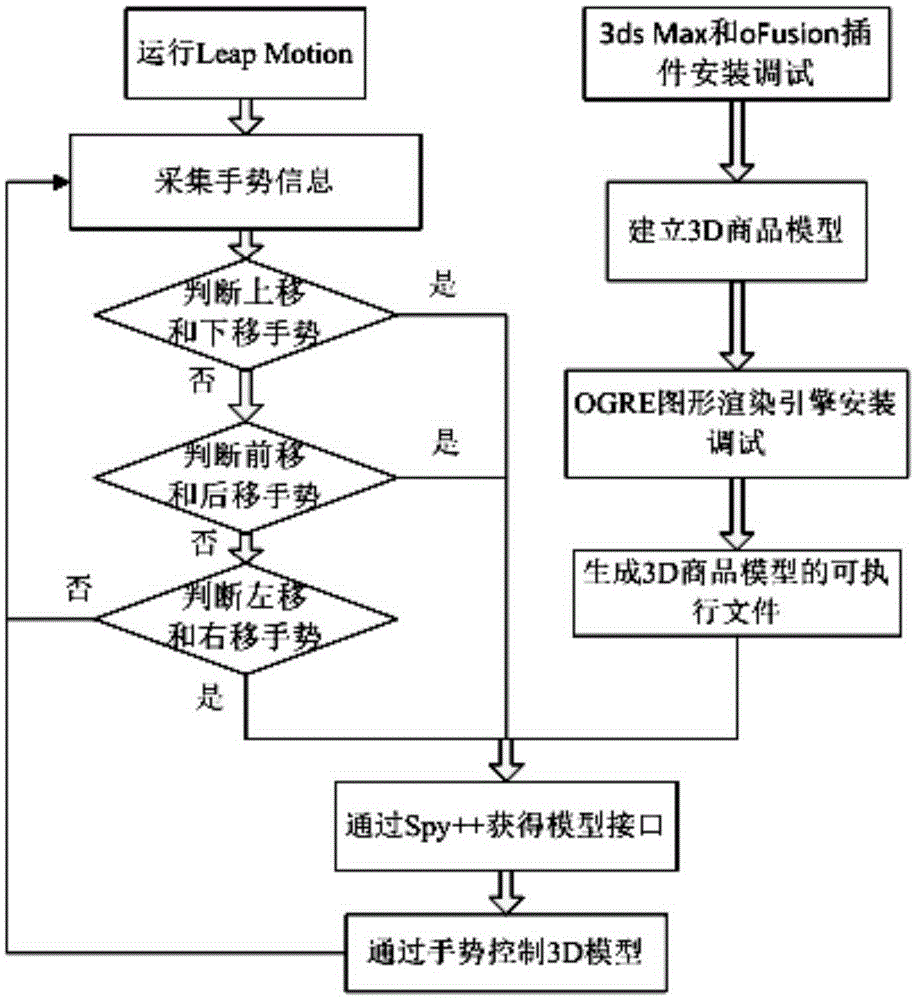

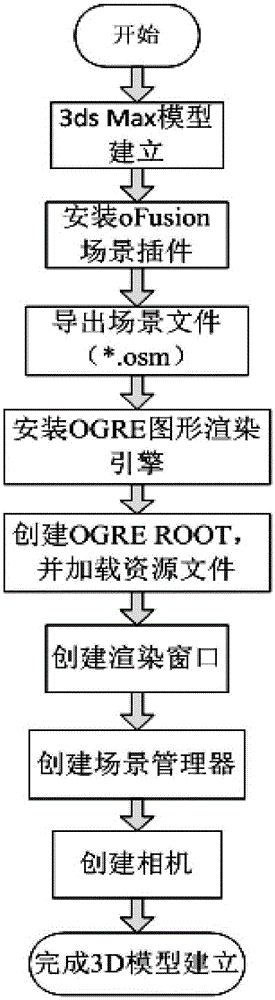

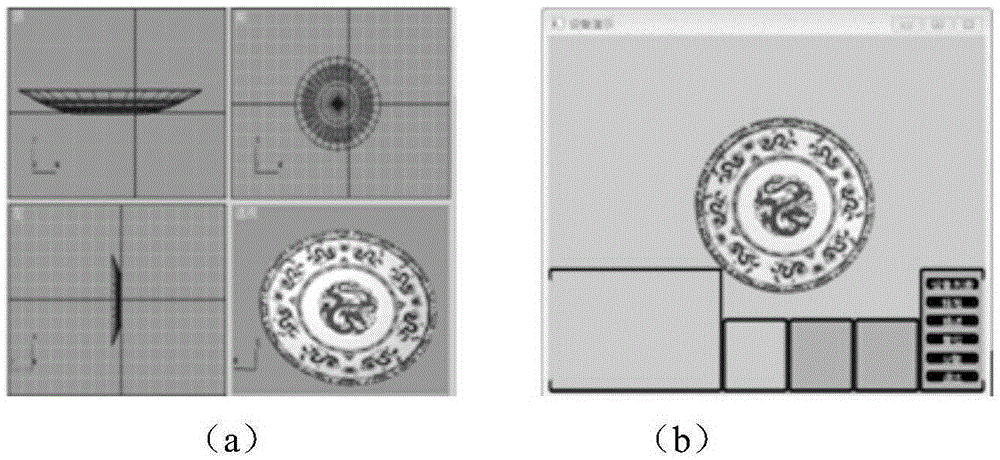

Leap Motion based 3D commodity display method

Inactive · CN105354031A · Full display · Make up for the lack of interaction · Input/output for user-computer interaction · Execution for user interfaces · Human–computer interaction · Leap motion

The invention discloses a Leap Motion based 3D commodity display method, and belongs to the technical field of mode identification. The method comprises the following steps: step 1, acquiring gesture information; step 2, judging an upward-moving gesture and a downward-moving gesture, if the gesture is the upward-moving gesture or the downward-moving gesture, entering the step 6, and if the gesture is neither the upward-moving gesture nor the downward-moving gesture, judging a forward-moving gesture and a backward-moving gesture; if the gesture is the forward-moving gesture or the backward-moving gesture, entering the step 6, if the gesture is neither the forward-moving gesture nor the backward-moving gesture, judging a leftward-moving gesture and a rightward-moving gesture, if the gesture is the leftward-moving gesture or the rightward-moving gesture, entering the step 6, and if the gesture is neither the leftward-moving gesture nor the rightward-moving gesture, entering the step 1; and step 3, establishing a 3D commodity model; and step 4, exporting a model file, namely, a *.osm file, required for scene rendering.

Owner:DALIAN JIAOTONG UNIVERSITY
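The gesture judgment cascade in step 2 of the abstract above (up/down first, then forward/backward, then left/right, otherwise acquire again) can be sketched as a simple threshold test on palm displacement. The axis convention (y up, x to the right, z toward the user), the 30 mm threshold and the function name `classify_move` are assumptions for illustration; the abstract does not state how each direction is detected.

```python
from typing import Optional


def classify_move(dx: float, dy: float, dz: float,
                  threshold: float = 30.0) -> Optional[str]:
    """Classify a palm displacement (assumed millimetres) by the abstract's order.

    Returns None when no direction passes the threshold, which corresponds
    to going back to step 1 and acquiring gesture information again.
    """
    # Vertical movement is tested first.
    if abs(dy) >= threshold:
        return "up" if dy > 0 else "down"
    # Then depth; positive z is assumed to point toward the user.
    if abs(dz) >= threshold:
        return "backward" if dz > 0 else "forward"
    # Finally horizontal movement.
    if abs(dx) >= threshold:
        return "right" if dx > 0 else "left"
    return None


if __name__ == "__main__":
    print(classify_move(0.0, 50.0, 0.0))
    print(classify_move(40.0, 0.0, -60.0))
    print(classify_move(1.0, 2.0, 3.0))
```

Because the checks run in a fixed order, a diagonal motion is resolved by priority rather than by the dominant axis; a dominant-axis rule would be a different design from the one the abstract describes.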

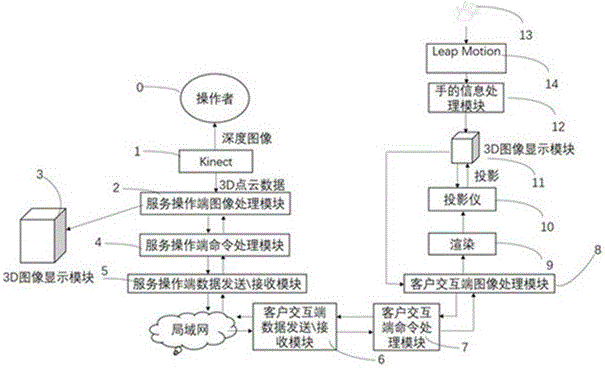

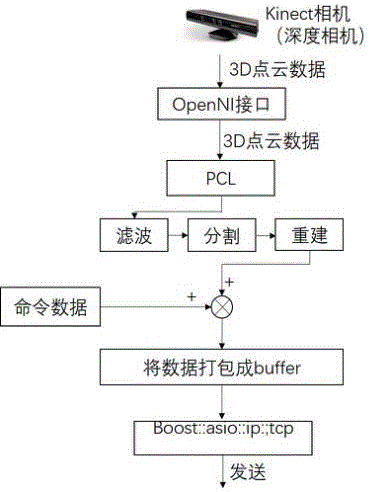

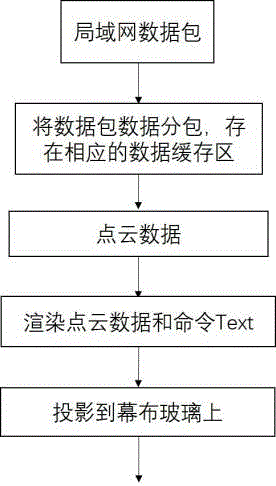

Real-time 3D remote man-machine interaction system

Inactive · CN106774942A · Real-time transmission · Realize the interaction between human and 3D projection · Input/output for user-computer interaction · Graph reading · Information processing · Imaging processing

The invention discloses a real-time 3D remote man-machine interaction system. The system at least comprises a service operation end and a client interaction end, wherein the service operation end at least comprises a computer, a depth camera, an image processing module, a data transmitting / receiving module, a service operation end command processing module and a 3D image displaying module; and the client interaction end at least comprises a computer, a leap motion module, a 3D image displaying module, a command processing module, an image processing module, a data transmitting / receiving module and a hand information processing module. Information of operators is acquired by the low-cost depth camera, and is transmitted to a client side by a local area network, and real-time transmission is realized. In the aspect of interaction, human hand information is acquired by Leap Motion so as to control a simulated mouse, and human and 3D projection interaction is realized. The real-time 3D remote man-machine interaction system is convenient and simple to operate.

Owner:SOUTH CHINA UNIV OF TECH +1

Man-machine interaction integrated device based on Leap Motion equipment

Inactive · CN106774938A · Make up the distance · Compensation accuracy · Input/output for user-computer interaction · Graph reading · Shortest distance · Computer terminal

The invention discloses a man-machine interaction integrated device based on Leap Motion equipment. The device comprises a Kinect camera, the Leap Motion equipment, a camera, a processing terminal and a display terminal. The Kinect camera acquires skeleton image information and depth image information of a user. The Leap Motion equipment acquires hand or tool image information of the user. The camera is positioned on one side of the Kinect camera and acquires image information in the visual blind area of the Kinect camera. The processing terminal receives and processes the skeleton image information, the depth image information, the hand or tool image information and the image information in the visual blind area of the Kinect camera. The display terminal displays an initial image and dynamically displays the image information processed by the processing terminal to realize man-machine interaction. With the device, long-distance and short-distance gestures, as well as various gestures or tools, can be recognized, and the enjoyment of man-machine interaction in 3D (three-dimensional) virtual reality is greatly improved.

Owner:GUANGZHOU MIDSTERO TECH CO LTD

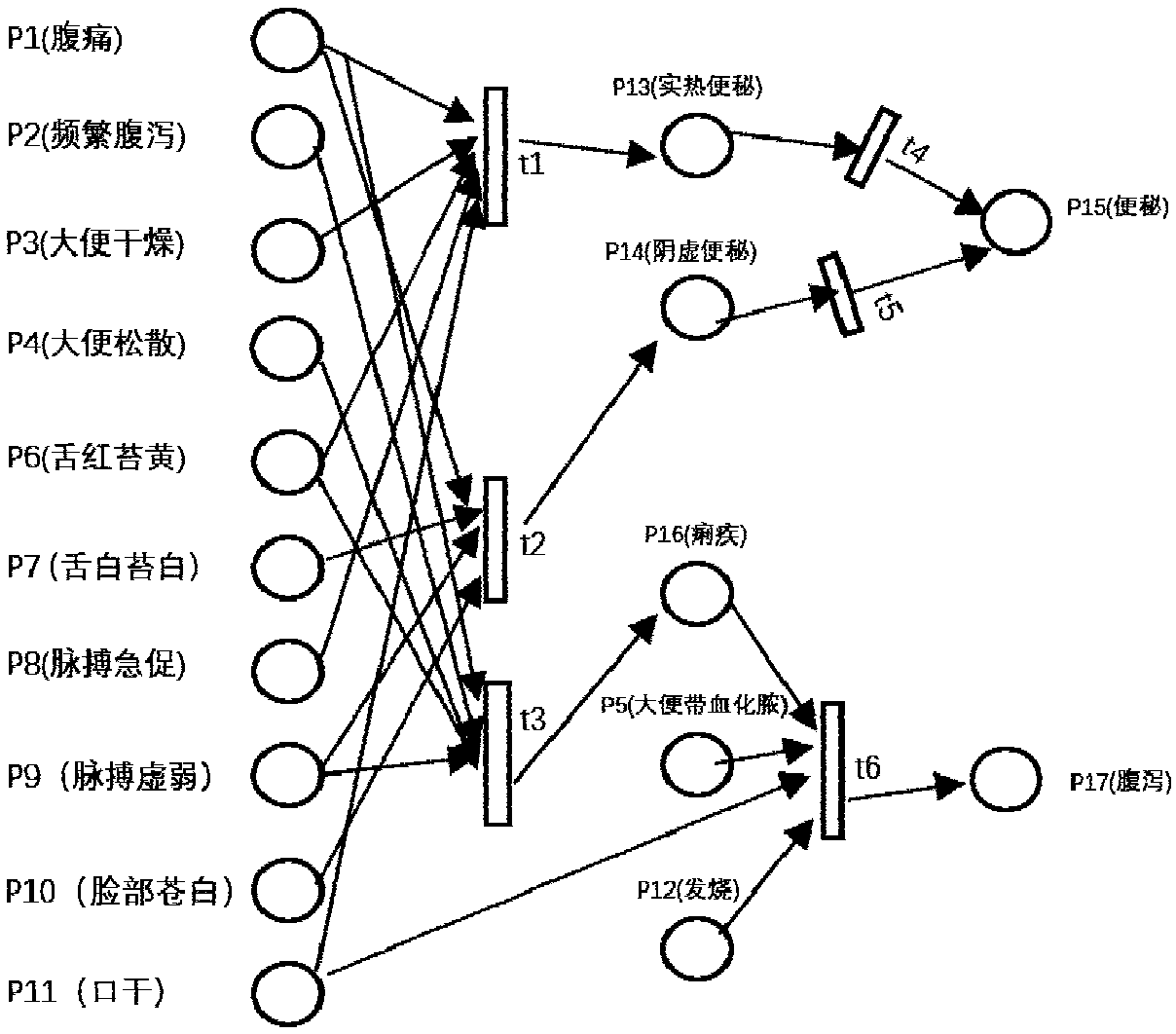

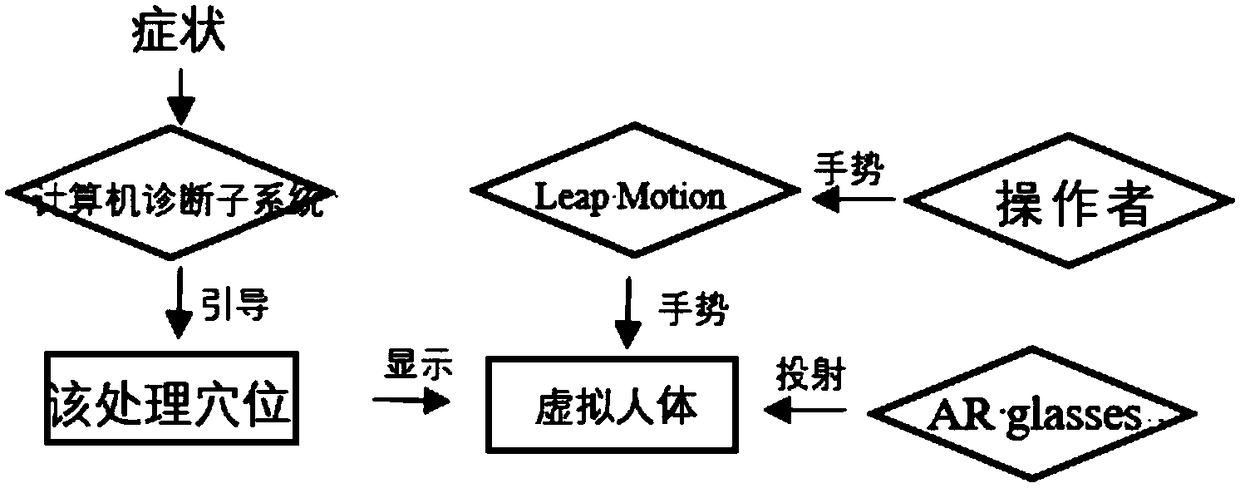

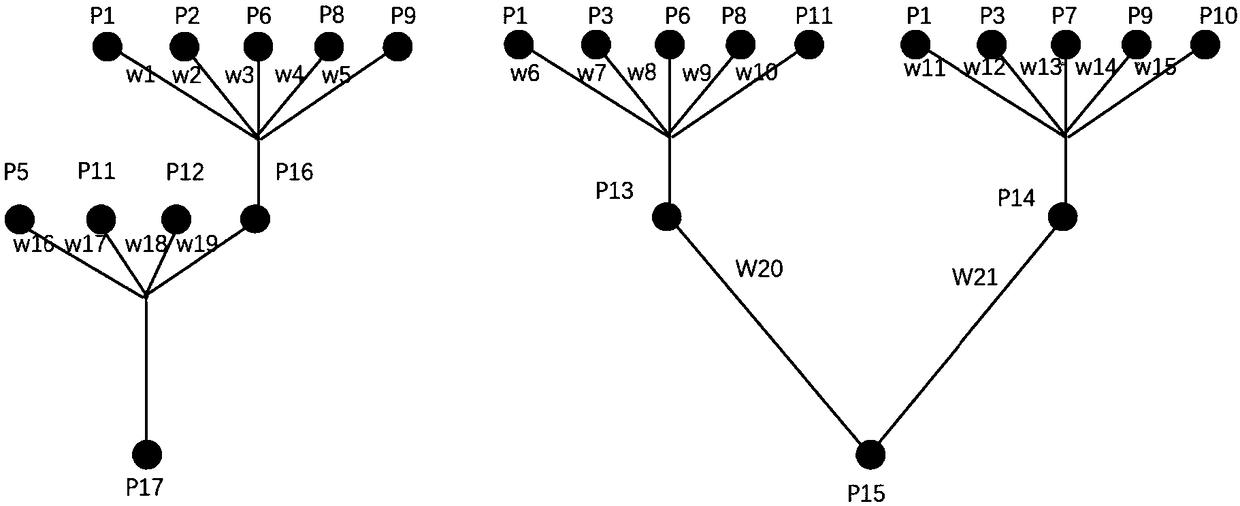

Virtual acupuncture method and system based on mobile interaction and augmented reality

Active | CN109243575A | Realize the combination | Achieve connection | Input/output for user-computer interaction | Mechanical/radiation/invasive therapies | Disease | Human body

The invention provides a virtual acupuncture method and system based on mobile interaction and augmented reality. The method is divided into five modules: augmented reality, diagnostic subsystem, coordinate system and its transformation, gesture position and direction capture, and collision detection and visual feedback. In the augmented reality module, the experimental human body is projected into the real world by wearing AR equipment. To transmit operations in the virtual environment to the real environment, a coordinate transformation is performed between the coordinate systems. The diagnostic subsystem constructs an IFPN model by the back propagation algorithm to learn the correspondence between different diseases and treatment acupoints. Gesture capture is realized by the Leap Motion somatosensory controller fixed on the AR glasses. The motion and the position of the hand can be calculated from the captured data. The contact points of acupuncture needles can be obtained through collision detection, and guidance can then be given by visual feedback so that the participant can understand the accuracy of the action.
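The coordinate transformation and collision detection modules could be illustrated with a small sketch. It assumes a 4x4 homogeneous transform from the sensor frame to the AR frame and models each acupoint as a sphere; the names `transform_point` and `needle_hits_acupoint` and the spherical model are hypothetical and are not described in the abstract.

```python
import math


def transform_point(matrix, point):
    """Apply a 4x4 homogeneous transform (row-major nested lists) to a 3D point."""
    x, y, z = point
    vec = (x, y, z, 1.0)
    # Only the first three rows are needed for an affine transform.
    return tuple(sum(matrix[r][c] * vec[c] for c in range(4)) for r in range(3))


def needle_hits_acupoint(tip, acupoint, radius):
    """Treat the acupoint as a sphere and test whether the needle tip is inside it."""
    return math.dist(tip, acupoint) <= radius


# Hypothetical sensor-to-AR transform: a pure translation by (10, 0, -5).
sensor_to_ar = [
    [1, 0, 0, 10],
    [0, 1, 0, 0],
    [0, 0, 1, -5],
    [0, 0, 0, 1],
]
tip_in_ar = transform_point(sensor_to_ar, (1, 2, 3))
hit = needle_hits_acupoint(tip_in_ar, (11, 2, 0), radius=2.5)
```

The result of the collision test is what a visual feedback step would use to tell the participant whether the needle reached the target point.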

Owner:SOUTH CHINA UNIV OF TECH

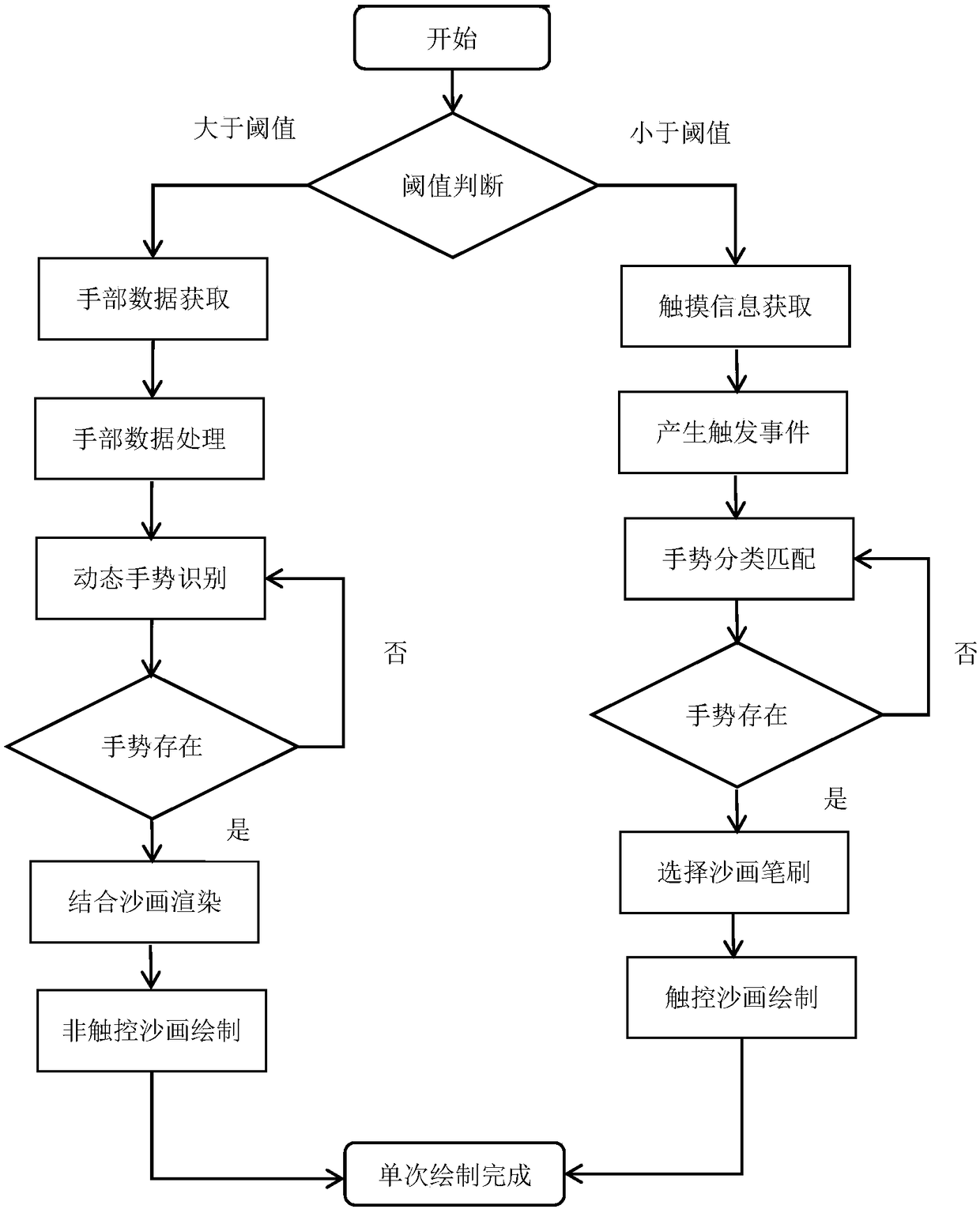

Virtual sand art interactive combination method based on multiple somatosensory devices

Active | CN108919955A | Input/output for user-computer interaction | Graph reading | Feature vector | Template matching

The invention belongs to the technical field of virtual sand painting drawing methods, and in particular relates to a virtual sand painting interactive combination method based on multiple motion sensing devices. According to the method, the data capture function of Leap Motion is first applied to obtain original hand data; an existing feature extraction method is then adopted to preprocess the original data, new hand feature data is introduced to complete dynamic gesture tracking, gesture recognition is performed through template matching, and non-contact virtual sand painting drawing is completed in combination with a rendering effect. A PQLabs G4S touch screen is utilized to acquire touch information and produce a touch event, a graph embedding method is adopted to convert the touch event into a feature vector for gesture recognition, and contact virtual sand painting drawing is completed in combination with a sand grain rendering effect. Virtual sand painting drawing is thus performed with the assistance of both Leap Motion and a touch screen. Compared with real sand painting, both three-dimensional drawing in the air and contact two-dimensional drawing are achieved, and the method is well suited to virtual sand painting drawing.
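Gesture recognition through template matching could be sketched as a nearest-template search over feature vectors. The abstract does not specify the features or the distance measure, so the Euclidean distance, the `match_template` name, the example templates and the rejection threshold are all assumptions.

```python
import math


def match_template(feature, templates, max_distance=None):
    """Return (label, distance) of the closest template.

    templates maps a gesture label to a reference feature vector.
    If max_distance is given and the best match is farther away,
    the label is None (the gesture is rejected as unknown).
    """
    best_label, best_dist = None, math.inf
    for label, template in templates.items():
        d = math.dist(feature, template)
        if d < best_dist:
            best_label, best_dist = label, d
    if max_distance is not None and best_dist > max_distance:
        return None, best_dist
    return best_label, best_dist


# Hypothetical two-dimensional hand features for two gestures.
templates = {"pinch": (0.1, 0.9), "spread": (0.9, 0.1)}
label, distance = match_template((0.2, 0.8), templates)
```

A rejection threshold is one simple way to avoid drawing sand strokes for hand poses that match no template well.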

Owner:ZHONGBEI UNIV

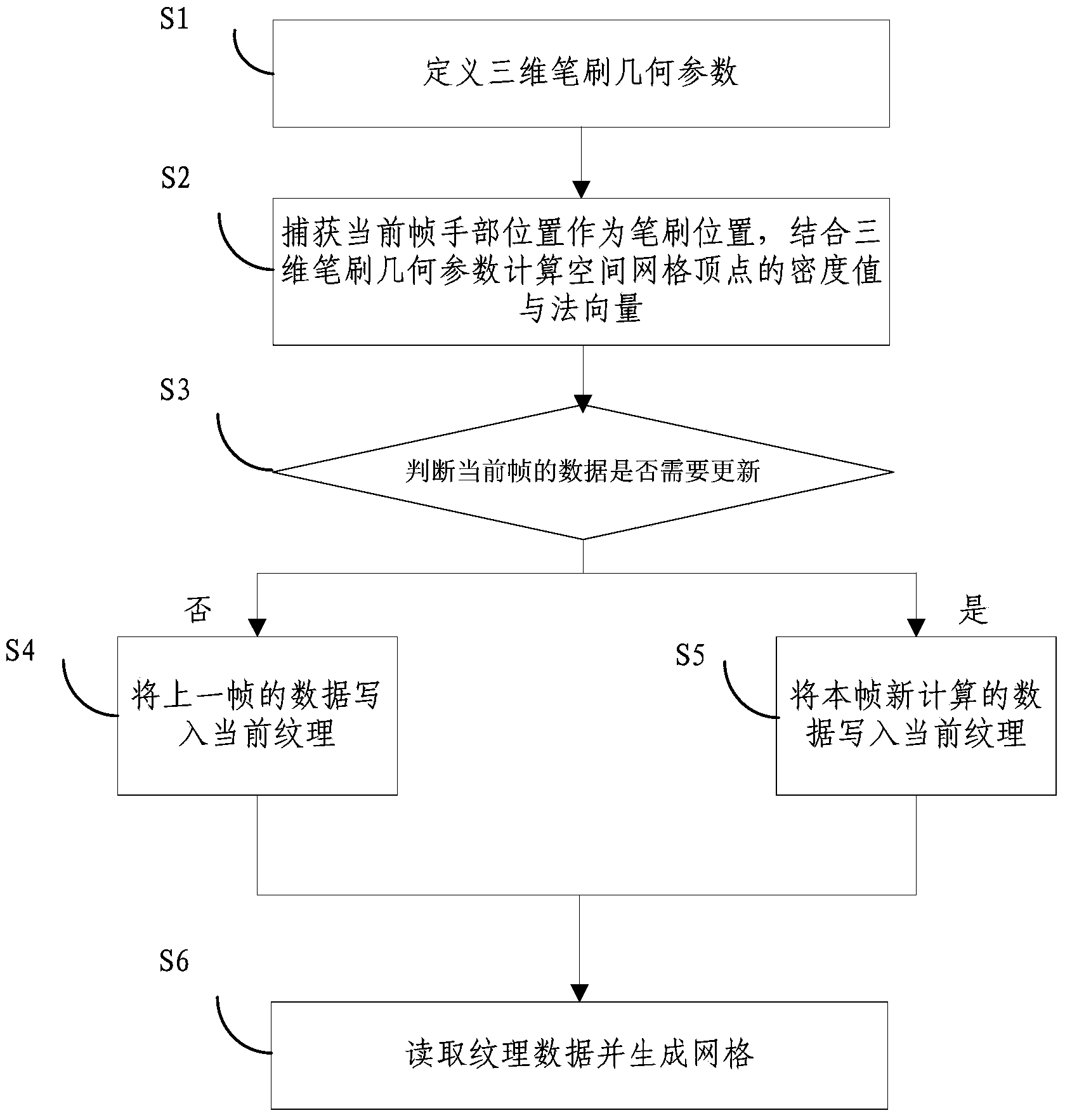

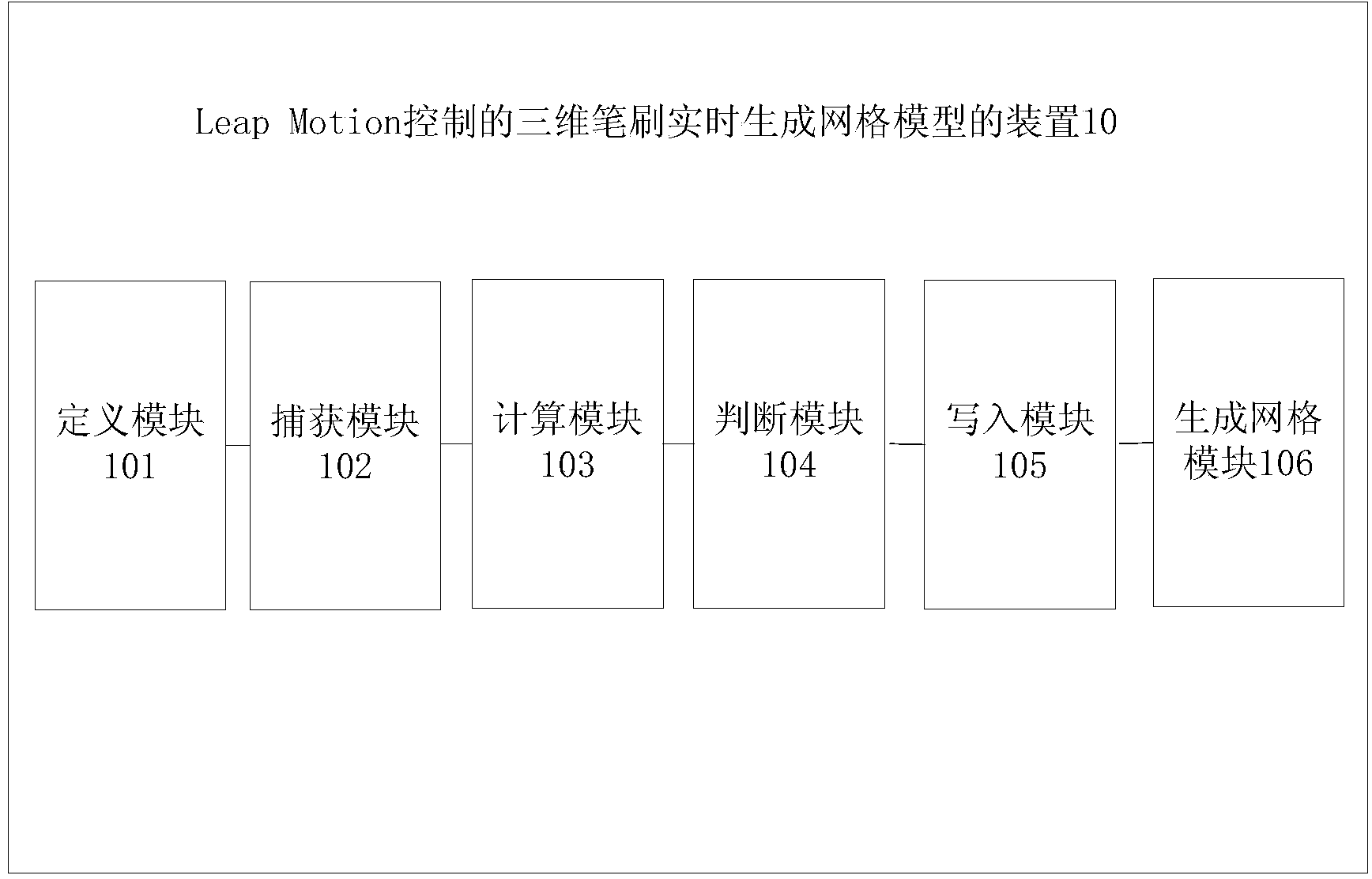

Method and device for three-dimensional brush to generate network module in real time based on Leap Motion control

The invention relates to a method and device for a three-dimensional brush to generate a network module in real time based on Leap Motion control. The method comprises the following steps: the geometric parameter of the three-dimensional brush is defined; the hand position in the current frame is captured as the position of the brush, and the density value and the normal vector of each vertex of a space lattice are calculated in combination with the geometric parameter of the three-dimensional brush; if updating is needed, the data newly calculated for the frame are written into the current texture; and the texture data are read and the lattice is generated. According to the method, volume data in the moving process of the brush are captured through the hand position captured by Leap Motion and the geometric parameter of the three-dimensional brush, the dynamic volume data are subjected to network module visualization, a real-time interaction effect is achieved through GPU acceleration methods such as rendering the volume data to the texture, and the advantages of being visible and efficient are achieved. The invention further discloses a device for the three-dimensional brush to generate the network module in real time based on Leap Motion control.
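The per-frame update of lattice densities and normals could look roughly like the CPU sketch below. It assumes a spherical brush whose only geometric parameter is a radius, a linear density falloff, and a dictionary standing in for the GPU texture; none of these choices, nor the names `brush_density`, `brush_normal` and `stamp`, come from the patent.

```python
import math


def brush_density(vertex, brush_pos, radius):
    """Density falls linearly from 1 at the brush centre to 0 at the radius."""
    return max(0.0, 1.0 - math.dist(vertex, brush_pos) / radius)


def brush_normal(vertex, brush_pos):
    """Unit vector from the brush centre to the vertex (zero vector at the centre)."""
    diff = [v - b for v, b in zip(vertex, brush_pos)]
    length = math.sqrt(sum(c * c for c in diff))
    if length == 0.0:
        return (0.0, 0.0, 0.0)
    return tuple(c / length for c in diff)


def stamp(grid, brush_pos, radius):
    """Merge the brush into the stored densities, keeping the larger value.

    grid maps a lattice vertex to its density and stands in for the texture.
    Returns True only if some value changed, i.e. an update is needed.
    """
    changed = False
    for vertex, old in grid.items():
        new = max(old, brush_density(vertex, brush_pos, radius))
        if new != old:
            grid[vertex] = new
            changed = True
    return changed


grid = {(x, 0, 0): 0.0 for x in range(5)}
first_update = stamp(grid, (2, 0, 0), 2.0)
second_update = stamp(grid, (2, 0, 0), 2.0)
```

Returning a change flag lets the caller skip the write when the brush has not moved, which corresponds to the "if updating is needed" condition in the abstract.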

Owner:TSINGHUA UNIV

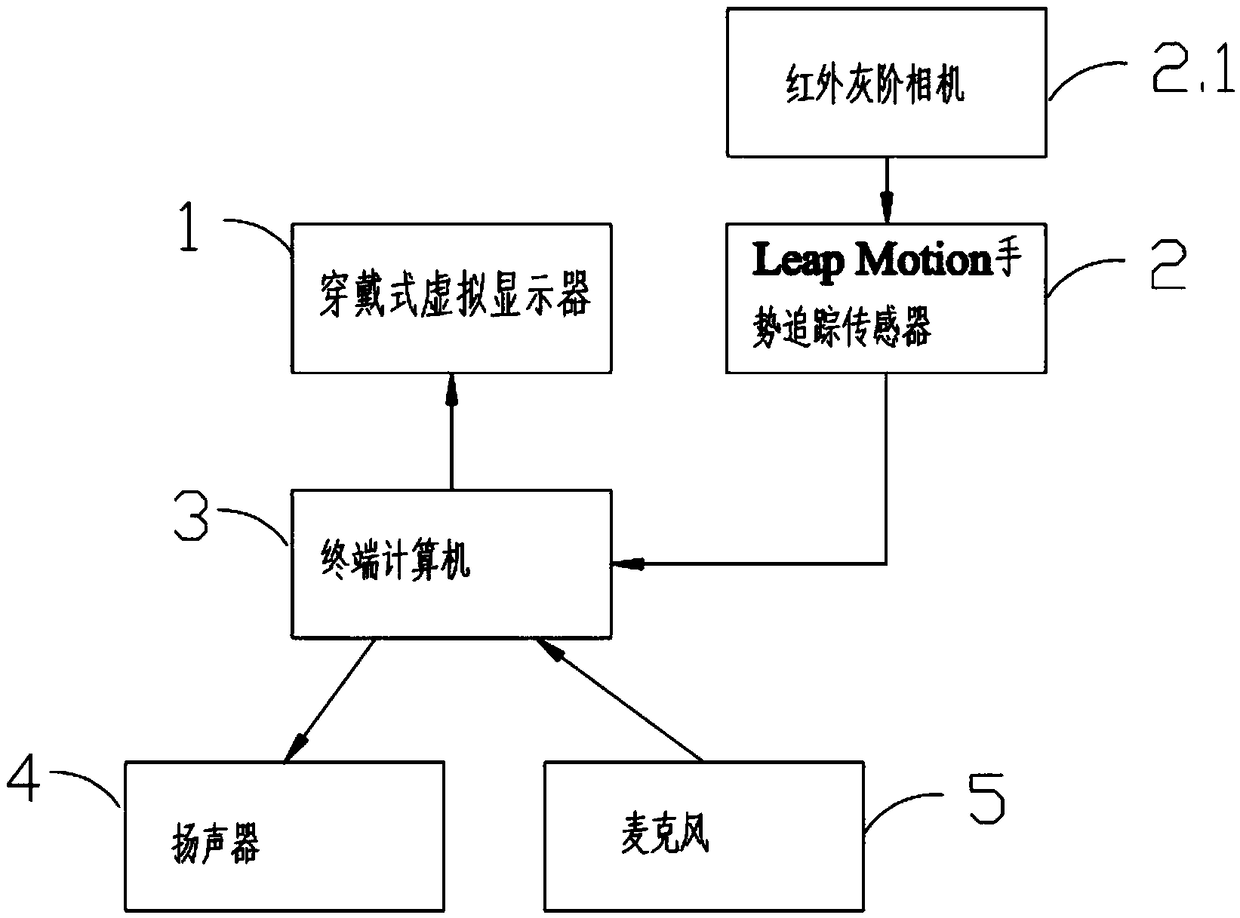

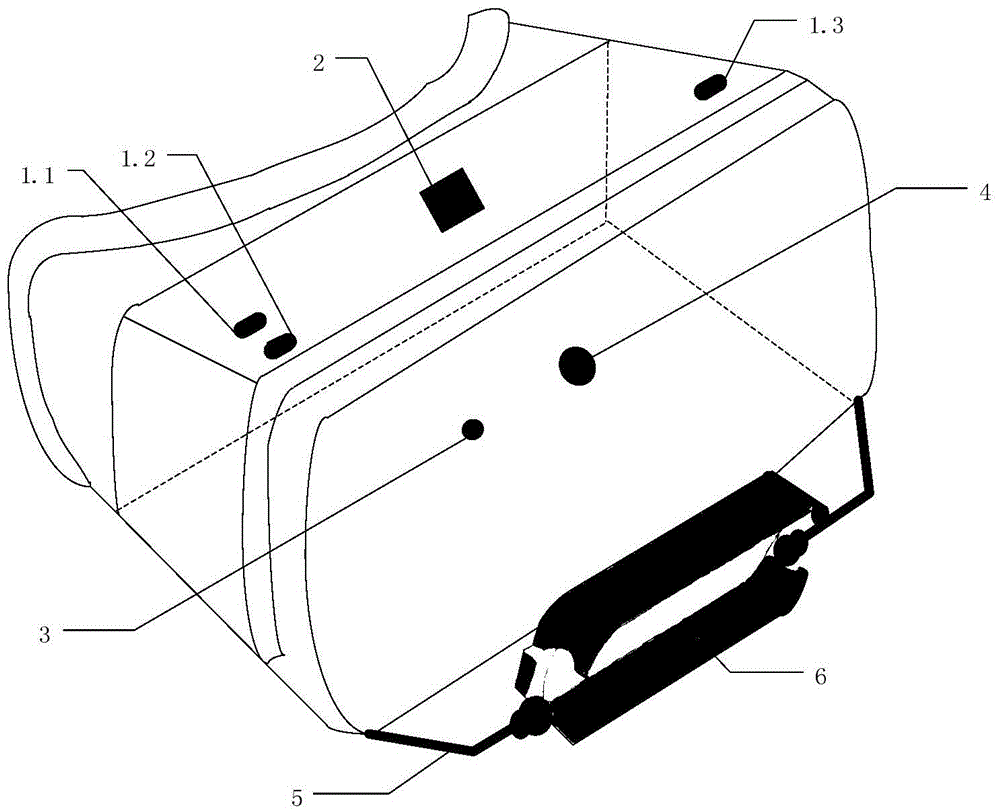

Auxiliary tool for deaf-mute based on gesture recognition and VR display, and implementation method for auxiliary tool

Pending | CN108877409A | Solve hearing problems | Address language barriers | Teaching apparatus | Computer terminal | Data transmission

The invention discloses an auxiliary tool for the deaf-mute based on gesture recognition and VR display, and an implementation method for the auxiliary tool. The auxiliary tool comprises a wearable virtual display, a Leap Motion gesture tracking sensor and a terminal computer. The wearable virtual display is connected with the terminal computer through a USB port and an HDMI port to achieve data transmission and data analysis. The Leap Motion gesture tracking sensor is laterally fixed at the front part of the wearable virtual display, so that the infrared grayscale camera built into the sensor faces forwards. The Leap Motion gesture tracking sensor is connected with the terminal computer, so that a gesture action collected by the infrared grayscale camera in the sensor can be displayed on the wearable virtual display. Through the combination of the Leap Motion gesture tracking sensor and the wearable virtual display, the tool addresses the language expression barrier of the deaf-mute while also addressing the hearing problem of the deaf-mute.

Owner:王钦

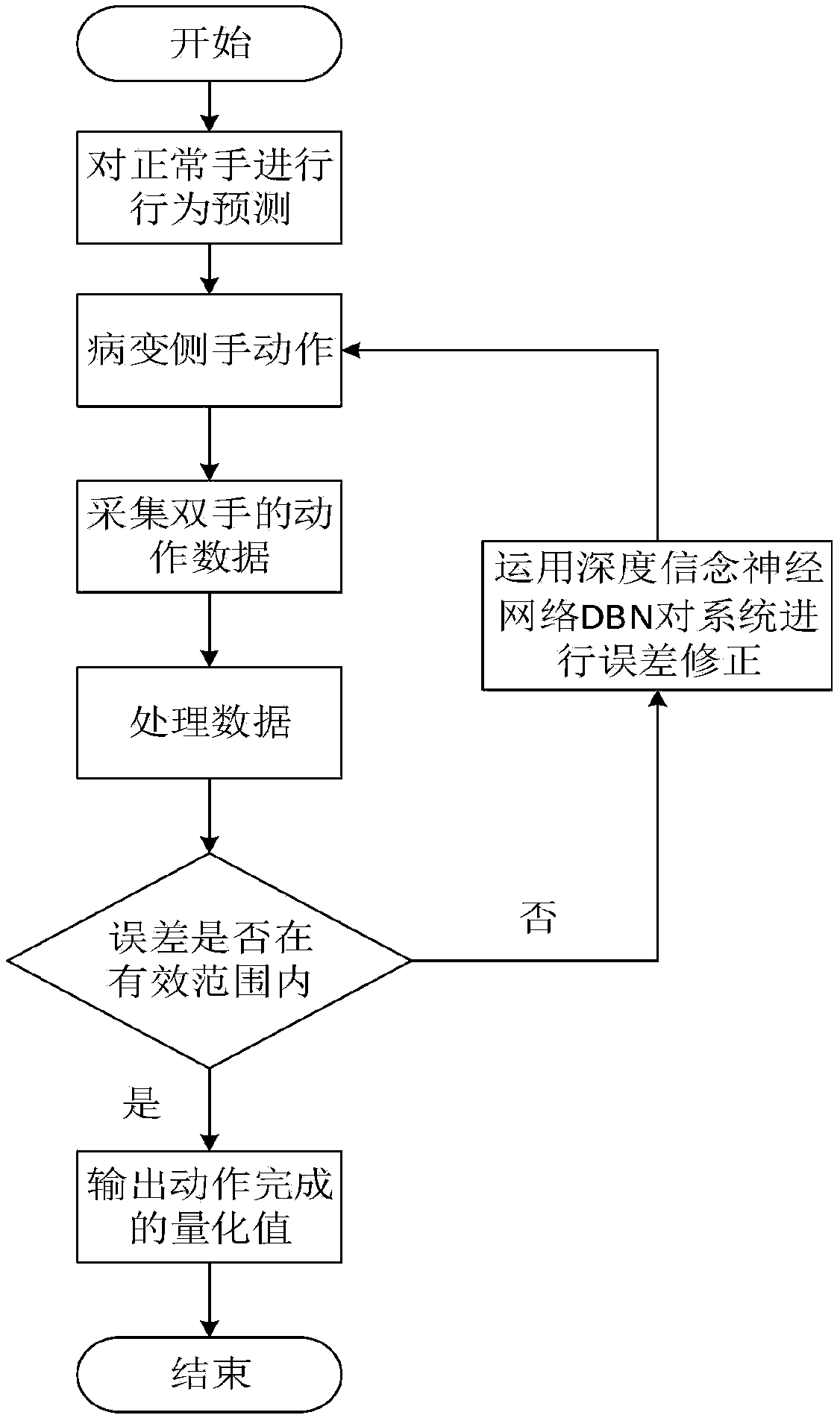

Leap Motion-based finger symmetric rehabilitation error correction method

Active | CN108743222A | Improve training efficiency | Reduce recovery time | Chiropractic devices | Neural architectures | Hand movements | Data acquisition

The invention provides a Leap Motion-based finger rehabilitation error correction method. The method comprises the following steps: 1, the normal hand of a patient is subjected to behavior prediction, Leap Motion collects data on the two-hand movements, and the data is displayed in real time through an upper computer; 2, the computer compares the data of the two-hand movements; 3, if the errors are within an effective range, a quantized value of the complete action of the patient's hands is output; if the errors are not within the effective range, prompt messages are output and the data is corrected through an algorithm; 4, the corrected data is transmitted to the lesion-side fingers, and steps 1 to 3 are repeated until the errors are within the effective range. Accordingly, the patient's rehabilitation training data is displayed in real time, the time delay problem of symmetrical movements of the patient's two hands is effectively solved, the errors of the movements are reduced, the effect of symmetric rehabilitation of the two hands is improved, the time cost for the patient is lowered, and the patient's motivation for treatment is enhanced.
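The comparison in steps 2 and 3 could be sketched as a per-finger error check. The abstract does not say which quantities are compared, so the use of finger flexion angles in degrees, the 10 degree tolerance and the `evaluate` function are assumptions made only for illustration.

```python
def evaluate(healthy, affected, tolerance_deg=10.0):
    """Compare per-finger angles of the two hands.

    healthy and affected map a finger name to a flexion angle in degrees.
    The result reports whether every error is within the tolerance and
    which fingers would need a prompt and a correction.
    """
    errors = {finger: abs(healthy[finger] - affected[finger]) for finger in healthy}
    to_correct = sorted(f for f, e in errors.items() if e > tolerance_deg)
    return {
        "within_range": not to_correct,
        "max_error": max(errors.values()),
        "to_correct": to_correct,
    }


# Hypothetical angles for two fingers of each hand.
healthy = {"index": 60.0, "middle": 55.0}
first_try = evaluate(healthy, {"index": 57.0, "middle": 30.0})
second_try = evaluate(healthy, {"index": 57.0, "middle": 50.0})
```

In a loop, the `to_correct` list would drive the prompt messages, and training would repeat until `within_range` becomes true.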

Owner:NANCHANG UNIV

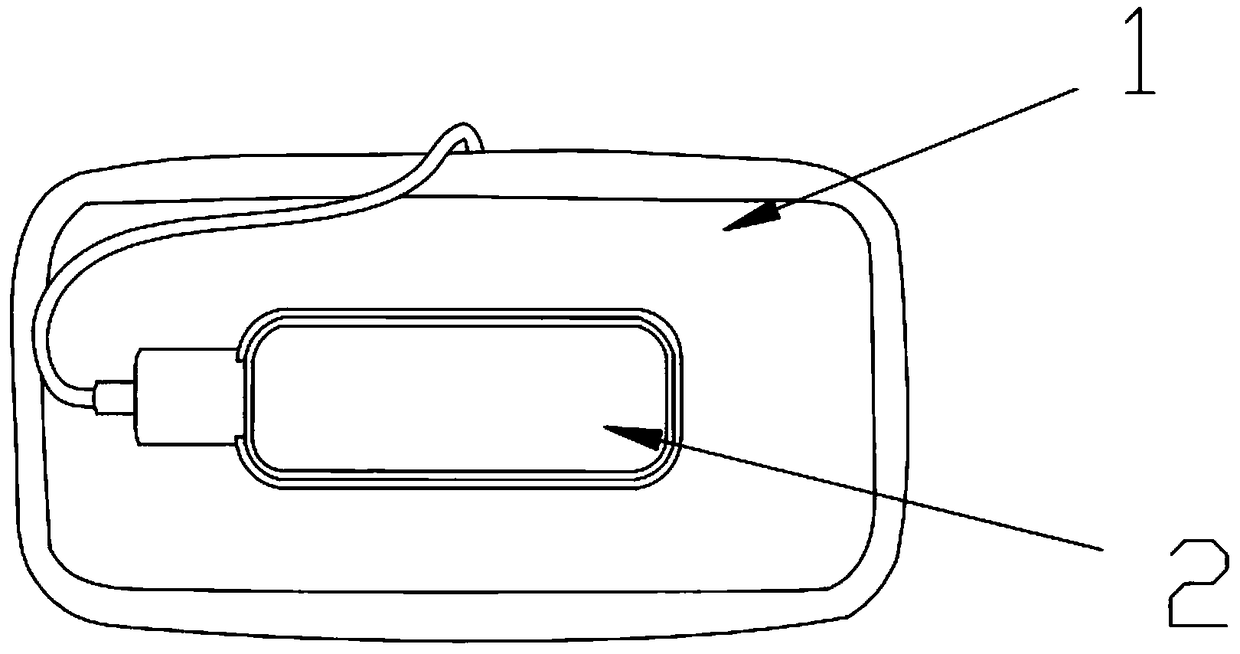

Virtual reality glasses

Active | CN105487230A | Easy to control | Input/output for user-computer interaction | Mouthpiece/microphone attachments | Eyewear | Speech sound

The invention provides virtual reality glasses. The virtual reality glasses comprise a virtual reality glasses body, function buttons, a direction rocking bar, a microphone, a camera, a support provided with a Leap Motion device, and a clamping groove. The virtual reality glasses realize volume control through volume increasing and decreasing buttons, support voice input and voice control through the microphone, allow the real environment in front to be observed through the installed camera, and recognize gestures by capturing the user's gestures through the installed Leap Motion device.

Owner:JINAN ZHONGJING ELECTRONICS TECH
