Gesture recognition method for a small number of training samples based on RGB-D (red, green, blue and depth) data structure

An RGB-D, small-training-sample technique, applied in the field of gesture recognition, that addresses the problem of recognizing gestures from only a small amount of sample data and achieves good robustness and recognition performance.

Embodiment Construction

[0019] The method of the present invention will be further described below in conjunction with the accompanying drawings.

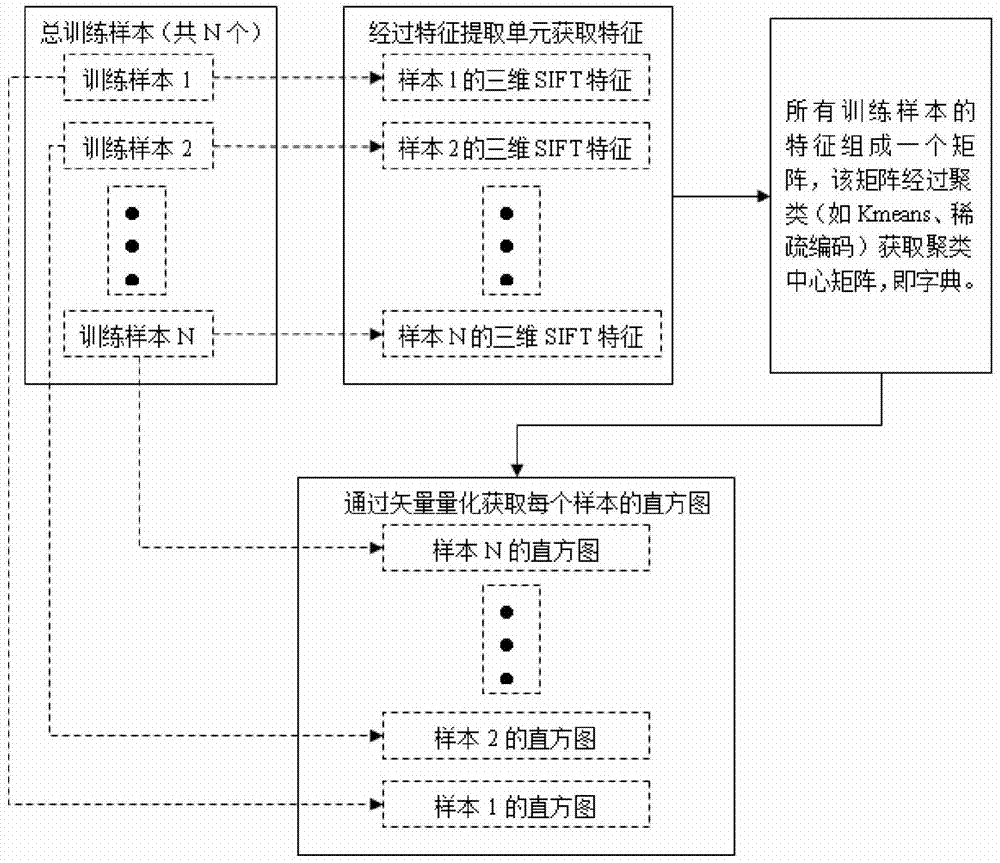

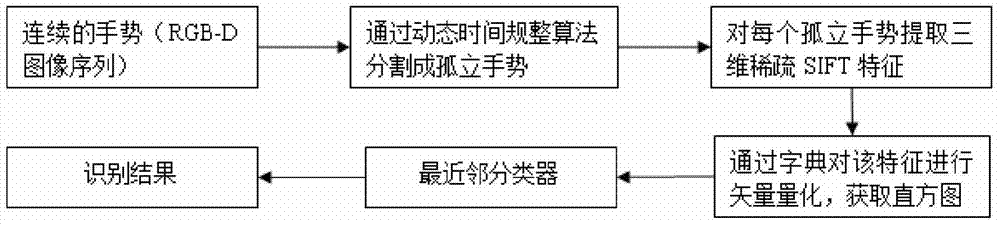

[0020] The gesture recognition method of the present invention is composed of a feature extraction unit, a training unit and a recognition unit.

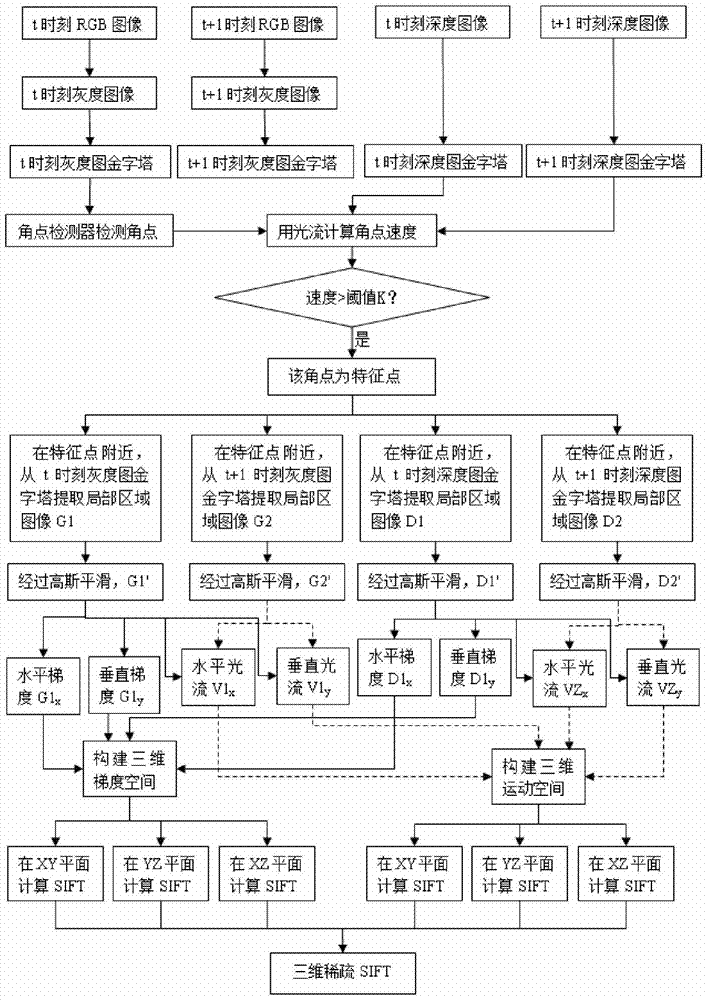

[0021] As shown in Figure 1, the specific steps of the feature extraction unit are as follows:

[0022] Step (1). A pyramid is built for each frame in the input image sequence, comprising a grayscale image pyramid and a depth image pyramid. The grayscale image pyramid is obtained by converting the RGB image to grayscale, and the depth image pyramid is computed from the depth image. The first layer of each pyramid is the original image, and the n-th layer is obtained by downsampling the (n-1)-th layer.
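The pyramid construction in Step (1) can be illustrated with a minimal pure-Python sketch. The text does not specify the grayscale formula, the downsampling factor, or the downsampling filter, so the BT.601 luma weights, the factor of 2, and the 2x2 block averaging below are assumptions, and the names `rgb_to_gray`, `downsample` and `build_pyramid` are hypothetical.

```python
from typing import List, Sequence, Tuple

Image = List[List[float]]  # row-major 2-D image, one float per pixel


def rgb_to_gray(rgb: Sequence[Sequence[Tuple[float, float, float]]]) -> Image:
    """Convert rows of (r, g, b) tuples to grayscale.

    Assumption: ITU-R BT.601 luma weights; the source names no formula.
    """
    return [[0.299 * r + 0.587 * g + 0.114 * b for (r, g, b) in row] for row in rgb]


def downsample(img: Image) -> Image:
    """Halve both dimensions by averaging 2x2 blocks (assumed factor and filter)."""
    h, w = len(img) // 2, len(img[0]) // 2
    return [
        [
            (img[2 * y][2 * x] + img[2 * y][2 * x + 1]
             + img[2 * y + 1][2 * x] + img[2 * y + 1][2 * x + 1]) / 4.0
            for x in range(w)
        ]
        for y in range(h)
    ]


def build_pyramid(img: Image, levels: int) -> List[Image]:
    """Layer 1 is the original image; layer n is the downsampled layer n-1."""
    pyramid = [img]
    for _ in range(levels - 1):
        prev = pyramid[-1]
        if len(prev) < 2 or len(prev[0]) < 2:
            break  # too small to downsample again
        pyramid.append(downsample(prev))
    return pyramid
```

The same `build_pyramid` call would be applied twice per frame: once to the grayscale image produced by `rgb_to_gray`, and once to the depth image.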

[0023] Step (2). For the depth map pyramid at time t, use a corner detector (such as Harris, Shi-Tomasi, etc.) to detect the corner points in each layer of th...
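Per-layer corner detection of the kind named in Step (2) might look like the following sketch. It uses the Shi-Tomasi minimum-eigenvalue response, one of the detectors the step mentions. The central-difference gradients, the 3x3 summation window, the fixed threshold, and the function names `shi_tomasi_response` and `detect_corners` are assumptions made for illustration and are not taken from the patent.

```python
import math
from typing import List, Tuple

Image = List[List[float]]  # row-major 2-D image, one float per pixel


def shi_tomasi_response(img: Image) -> Image:
    """Smaller eigenvalue of the 3x3-window structure tensor at each pixel."""
    h, w = len(img), len(img[0])
    ix = [[0.0] * w for _ in range(h)]
    iy = [[0.0] * w for _ in range(h)]
    # Central-difference gradients; border pixels keep a zero gradient.
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            ix[y][x] = (img[y][x + 1] - img[y][x - 1]) / 2.0
            iy[y][x] = (img[y + 1][x] - img[y - 1][x]) / 2.0
    resp = [[0.0] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            a = b = c = 0.0  # structure tensor entries [[a, b], [b, c]]
            for dy in (-1, 0, 1):
                for dx in (-1, 0, 1):
                    gx, gy = ix[y + dy][x + dx], iy[y + dy][x + dx]
                    a += gx * gx
                    b += gx * gy
                    c += gy * gy
            # Closed-form smaller eigenvalue of a symmetric 2x2 matrix.
            resp[y][x] = (a + c) / 2.0 - math.sqrt(((a - c) / 2.0) ** 2 + b * b)
    return resp


def detect_corners(pyramid: List[Image], threshold: float) -> List[List[Tuple[int, int]]]:
    """Return, for each pyramid layer, the (x, y) pixels whose response exceeds threshold."""
    corners = []
    for layer in pyramid:
        resp = shi_tomasi_response(layer)
        corners.append(
            [(x, y) for y, row in enumerate(resp) for x, v in enumerate(row) if v > threshold]
        )
    return corners
```

On a flat region or along a straight edge the smaller eigenvalue is near zero, so only points where intensity (here, depth) changes in two directions pass the threshold. A production implementation would typically add non-maximum suppression and rely on an image-processing library rather than nested Python loops.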
