Dynamic gesture recognition method based on three-dimensional depth data

A dynamic gesture recognition technology based on depth data, applied in the field of human-computer interaction. It addresses problems such as limited recognition capability and poor dynamic gesture recognition results, and improves recognition accuracy.

Embodiment Construction

[0050] The present invention will be described in detail below with reference to the accompanying drawings and examples.

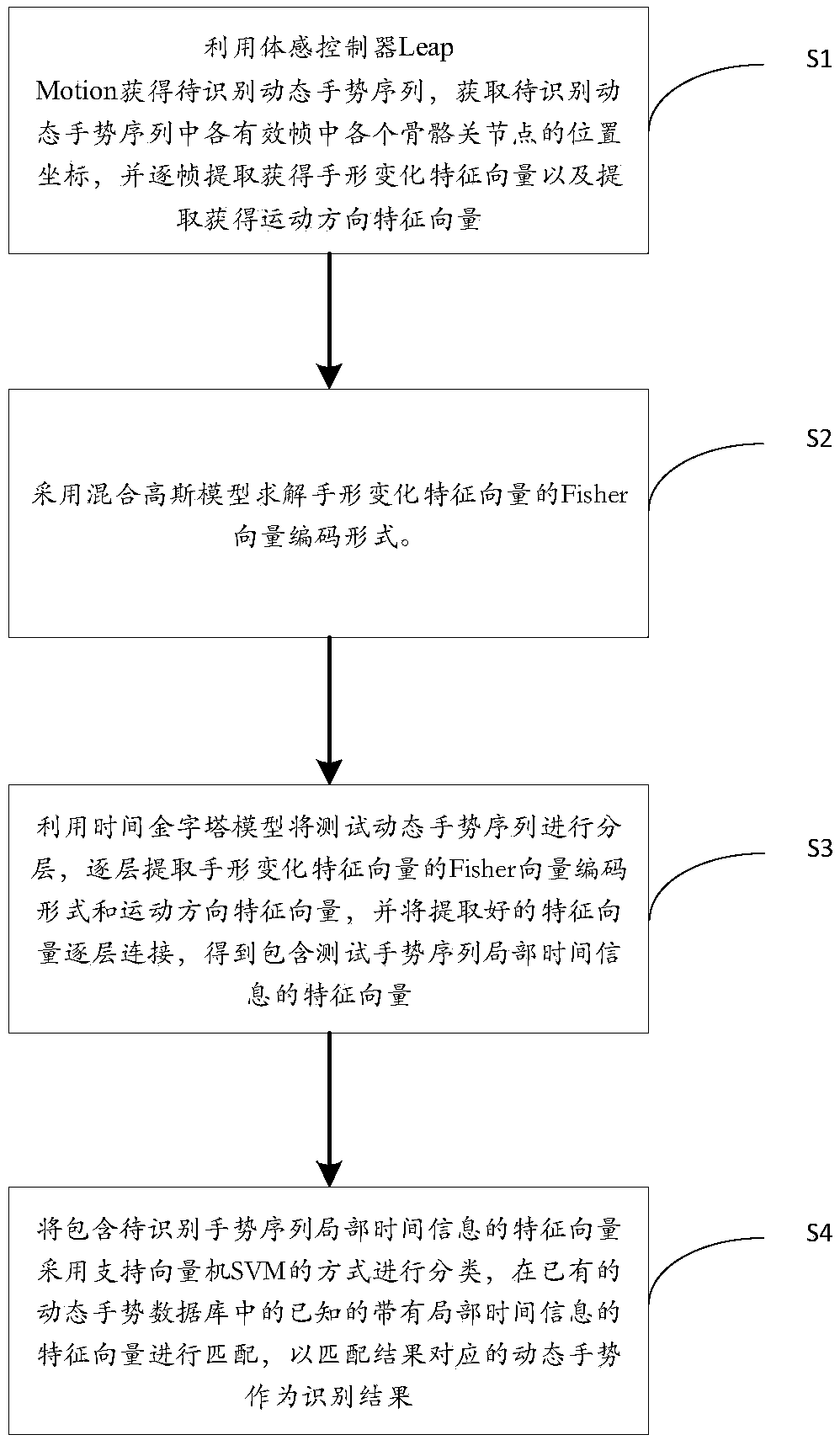

[0051] The present invention provides a dynamic gesture recognition method based on three-dimensional depth data. As shown in Figure 1, the method comprises the following steps:

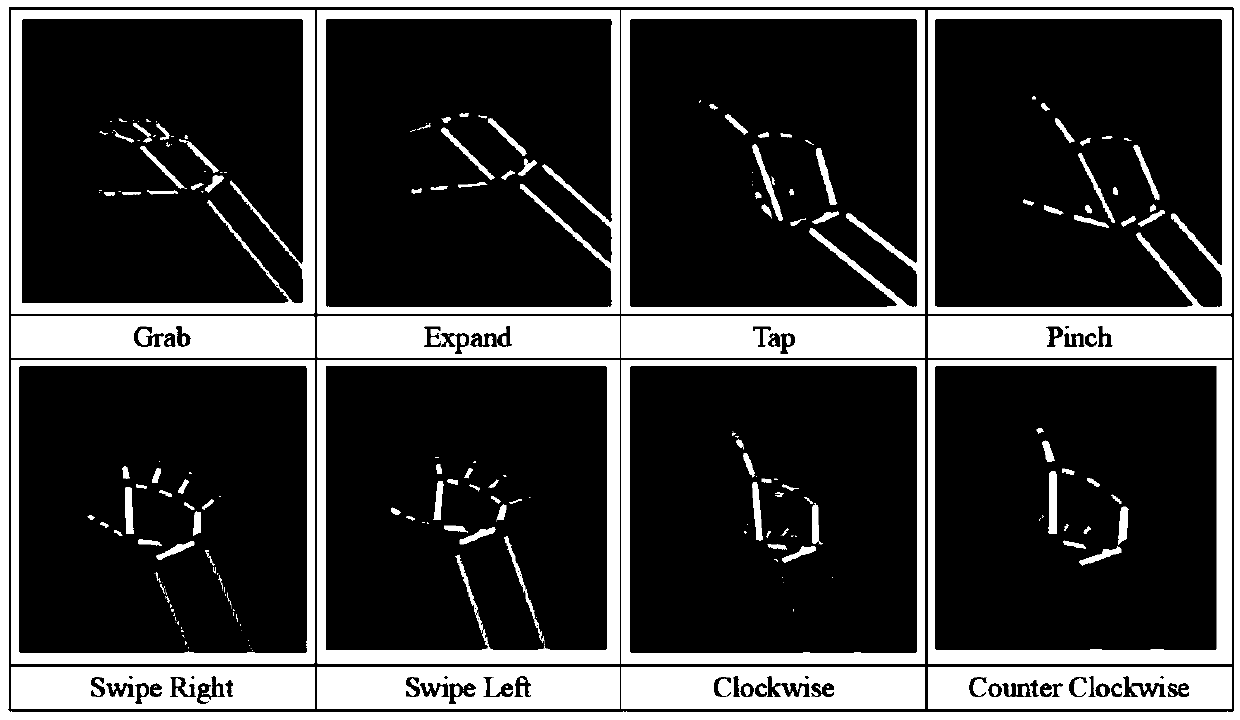

[0052] S1. Use the Leap Motion somatosensory controller to acquire the dynamic gesture sequence to be recognized, obtain the position coordinates of each skeletal joint point in every valid frame of the sequence, and extract, frame by frame, the hand shape change feature vector and the motion direction feature vector.
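Step S1 names two per-frame features, but this excerpt does not say how either is computed. The following is a minimal Python sketch of frame-by-frame extraction under assumptions that are ours, not the patent's: each valid frame is a list of `(x, y, z)` joint coordinates in a fixed order; the hand shape descriptor is the distance from every joint to a reference joint (assumed here to be index 0); the hand shape change feature is the difference between consecutive descriptors; and the motion direction feature is the unit displacement of the joint centroid between consecutive frames. All function names are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]
Frame = Sequence[Point]  # joint coordinates of one valid frame (hypothetical layout)


def _sub(a: Point, b: Point) -> Point:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _norm(v: Point) -> float:
    return math.sqrt(v[0] ** 2 + v[1] ** 2 + v[2] ** 2)


def _centroid(frame: Frame) -> Point:
    n = len(frame)
    return (sum(p[0] for p in frame) / n,
            sum(p[1] for p in frame) / n,
            sum(p[2] for p in frame) / n)


def shape_descriptor(frame: Frame, ref_index: int = 0) -> List[float]:
    """Distance from every joint to a reference joint (assumption: index 0)."""
    ref = frame[ref_index]
    return [_norm(_sub(p, ref)) for i, p in enumerate(frame) if i != ref_index]


def extract_features(frames: List[Frame]) -> Tuple[List[List[float]], List[Point]]:
    """Return (shape-change vectors, motion-direction vectors), one per frame pair."""
    shape_changes: List[List[float]] = []
    directions: List[Point] = []
    for prev, curr in zip(frames, frames[1:]):
        # Hand shape change: element-wise difference of consecutive descriptors.
        d_prev, d_curr = shape_descriptor(prev), shape_descriptor(curr)
        shape_changes.append([c - p for p, c in zip(d_prev, d_curr)])
        # Motion direction: unit vector of the centroid displacement.
        disp = _sub(_centroid(curr), _centroid(prev))
        length = _norm(disp)
        if length == 0:
            directions.append((0.0, 0.0, 0.0))  # stationary hand: no direction
        else:
            directions.append((disp[0] / length, disp[1] / length, disp[2] / length))
    return shape_changes, directions
```

For a sequence of T valid frames this yields T - 1 feature pairs; a stationary hand produces a zero direction vector instead of a division by zero.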

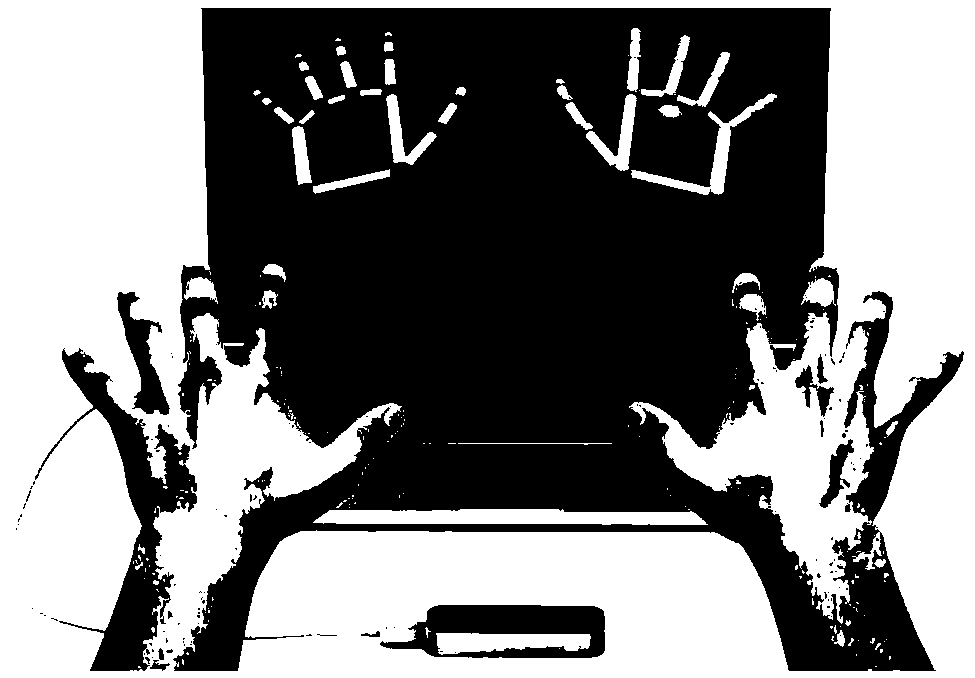

[0053] The hand skeleton structure provided by Leap Motion is shown in Figure 2. Before the experimental task formally begins, the procedure and operation method of the task are explained to the subjects to ensure that the collected dynamic gesture database is valid.
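For reference, the Leap Motion SDK models each hand as five fingers, each with four bones (metacarpal, proximal, intermediate, distal), and gives every bone a previous and a next joint; the thumb is modelled with a zero-length metacarpal so that all fingers share one layout. The sketch below assumes the joints have already been read from the SDK into plain tuples (the `Bone` class, the dictionary input, and the joint names are hypothetical stand-ins, not the SDK's API) and flattens one hand into a fixed order of 25 joint points, which is one way to obtain the per-frame joint coordinates that step S1 relies on.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple

Point = Tuple[float, float, float]

FINGERS = ("thumb", "index", "middle", "ring", "pinky")
# Bone order along each finger, from the palm outward.
BONES = ("metacarpal", "proximal", "intermediate", "distal")


@dataclass
class Bone:
    prev_joint: Point  # joint nearer the wrist
    next_joint: Point  # joint nearer the fingertip


def joint_names() -> List[str]:
    """Fixed ordering of 5 joints per finger (25 in total)."""
    names: List[str] = []
    for finger in FINGERS:
        names.append(f"{finger}_{BONES[0]}_base")            # base of the metacarpal
        names.extend(f"{finger}_{bone}_end" for bone in BONES)  # end of each bone
    return names


def flatten_hand(hand: Dict[str, List[Bone]]) -> List[Point]:
    """Flatten {finger: [4 bones]} into 25 joint positions in joint_names() order."""
    joints: List[Point] = []
    for finger in FINGERS:
        bones = hand[finger]
        if len(bones) != len(BONES):
            raise ValueError(f"{finger}: expected {len(BONES)} bones, got {len(bones)}")
        joints.append(bones[0].prev_joint)
        joints.extend(b.next_joint for b in bones)
    return joints
```

Because consecutive bones share a joint, taking the first bone's previous joint plus every bone's next joint lists each joint exactly once; with a zero-length thumb metacarpal, the first two thumb joints simply coincide.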

[0054] Obtain t...
