Man-machine interaction integrated device based on Leap Motion equipment

The invention combines a somatosensory device with human-computer interaction technology and is applied in the field of human-computer interaction. It addresses the difficulty of recognition and limited recognition accuracy, giving a more capable system that compensates for defects in recognition accuracy.


Examples

Embodiment 1

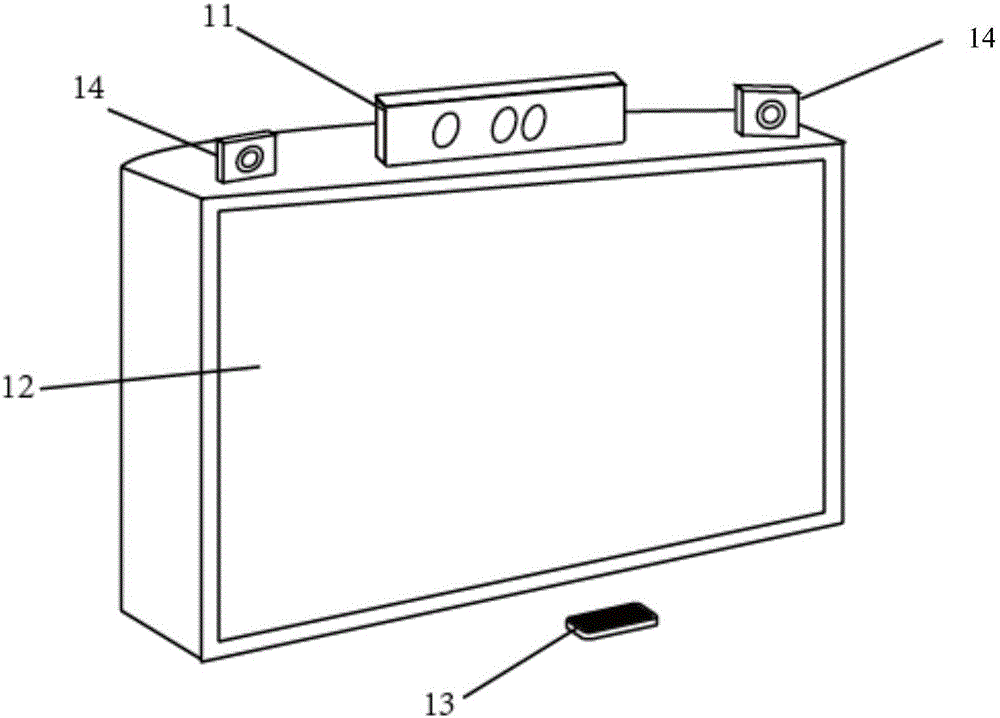

[0066] As shown in Figure 5, the human-computer interaction integration device based on somatosensory equipment described in the present invention is applied to a 3D keyboard, that is, to performing click operations on a 3D virtual image, with the touch recognition area 21 and the touch prediction area 22. The specific implementation method is as follows:

[0067] (1) Start the camera 14 and the Kinect camera 11 to collect information, determine which device's recognition and tracking area the user is in, and perform eye tracking and recognition;

[0068] (2) Switch the display terminal 12 to the corresponding 3D playback mode;

[0069] (3) Start the Leap Motion somatosensory device 13 to collect gesture information. The calculation processing unit 152 performs color space separation, filtering, segmentation, and contour extraction on the collected image, that is, calculates the human body finger according to the relative positional relationship between the ...
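The text available here names a touch recognition area 21 and a touch prediction area 22 but does not define their geometry. As a minimal sketch, assume both areas are depth bands in front of the virtual key plane and that a click is registered when the fingertip enters the touch band. The thresholds `touch_depth_mm` and `predict_depth_mm`, the `Zone` enum, and both function names are hypothetical; none of them comes from the patent or from the Leap Motion SDK.

```python
from enum import Enum
from typing import List, Sequence


class Zone(Enum):
    TOUCH = "touch recognition area 21"
    PREDICT = "touch prediction area 22"
    OUTSIDE = "outside both areas"


def classify_fingertip(distance_mm: float,
                       touch_depth_mm: float = 10.0,
                       predict_depth_mm: float = 40.0) -> Zone:
    """Map the fingertip's distance from the virtual key plane to a zone.

    Both depth thresholds are illustrative assumptions, not patent values.
    """
    if distance_mm <= touch_depth_mm:
        return Zone.TOUCH
    if distance_mm <= predict_depth_mm:
        return Zone.PREDICT
    return Zone.OUTSIDE


def detect_clicks(distances_mm: Sequence[float]) -> List[int]:
    """Return the frame indices at which the fingertip enters the touch zone."""
    clicks = []
    previous = Zone.OUTSIDE
    for index, distance in enumerate(distances_mm):
        zone = classify_fingertip(distance)
        # A click is counted only on the transition into the touch zone,
        # so a finger resting on a key does not fire repeatedly.
        if zone is Zone.TOUCH and previous is not Zone.TOUCH:
            clicks.append(index)
        previous = zone
    return clicks


if __name__ == "__main__":
    frames = [60, 35, 20, 8, 5, 25, 9]  # fingertip distance per frame, in mm
    print([classify_fingertip(d).name for d in frames])
    print(detect_clicks(frames))
```

Under this assumption, the prediction band could be used to highlight the key the finger is approaching before the click is committed; whether the patent uses area 22 this way is not stated in the visible text.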

Embodiment 2

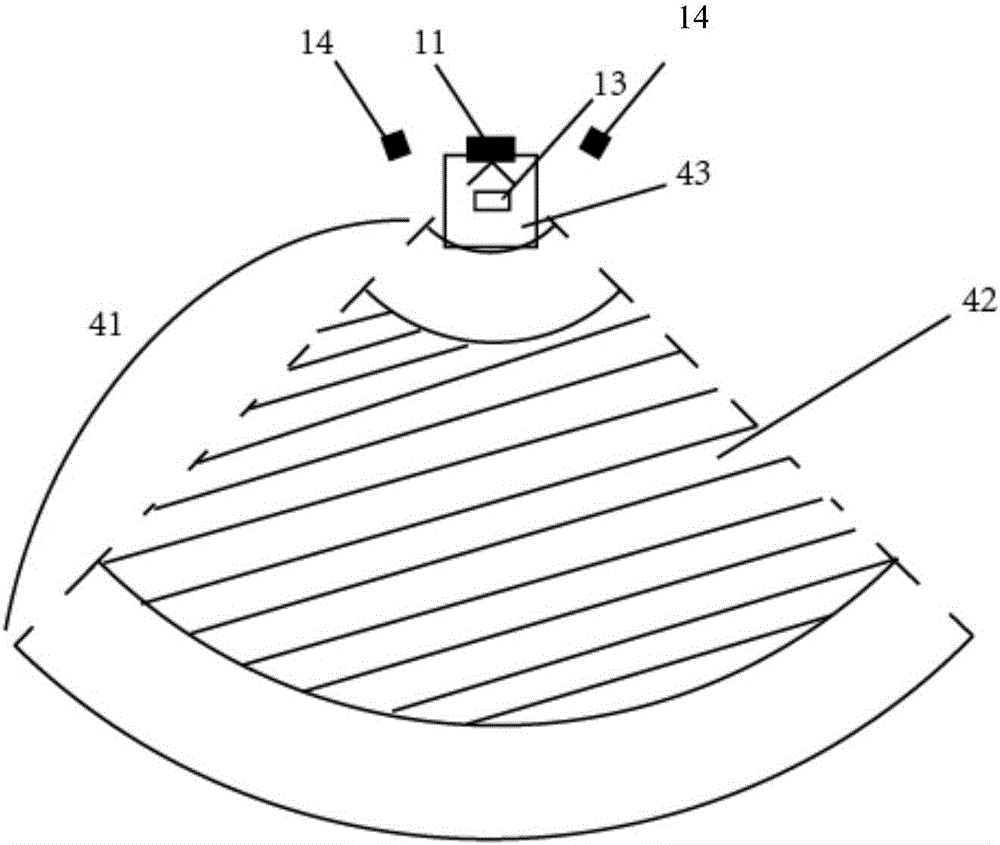

[0071] As shown in Figure 6, the human-computer interaction integration device based on somatosensory equipment described in the present invention is used in a 3D drawing board. The specific implementation method is as follows:

[0072] (1) Start the 2D mode of the display terminal 12;

[0073] (2) Start the Kinect camera 11 and the camera 14 to collect information, determine which device's eye recognition and tracking range the user is in, perform eye tracking, and switch to the corresponding 3D playback mode;

[0074] (3) Start the Leap Motion somatosensory device 13. The calculation processing unit 152 performs color space separation, filtering, segmentation, and contour extraction on the collected image to obtain feature points; that is, according to the relative positional relationship between the human body and the detection device, it calculates the spatial distribution of the feature points of the hand holding the pen, which is combined wit...
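The processing chain named in step (3), color space separation, filtering, segmentation, and contour extraction, can be illustrated with a small dependency-free sketch. It assumes a YCbCr skin-tone threshold for the color separation and a 3x3 majority filter for the filtering step; the patent text shown here does not say which color space, thresholds, or filter the calculation processing unit 152 uses, so every function name and numeric range below is an assumption.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]   # (R, G, B), each 0..255
Mask = List[List[int]]         # 1 = candidate hand pixel, 0 = background


def is_skin(r: int, g: int, b: int) -> bool:
    """Color space separation: RGB -> Cb/Cr (full-range BT.601), then threshold.

    The Cb/Cr ranges are a common skin-tone heuristic, assumed here.
    """
    cb = 128 - 0.168736 * r - 0.331264 * g + 0.5 * b
    cr = 128 + 0.5 * r - 0.418688 * g - 0.081312 * b
    return 77 <= cb <= 127 and 133 <= cr <= 173


def skin_mask(image: List[List[Pixel]]) -> Mask:
    """Segmentation: mark each pixel as hand (1) or background (0)."""
    return [[1 if is_skin(*px) else 0 for px in row] for row in image]


def majority_filter(mask: Mask) -> Mask:
    """Filtering: 3x3 majority vote, with pixels outside the image counted as 0."""
    h, w = len(mask), len(mask[0])
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            votes = sum(
                mask[y + dy][x + dx]
                for dy in (-1, 0, 1)
                for dx in (-1, 0, 1)
                if 0 <= y + dy < h and 0 <= x + dx < w
            )
            out[y][x] = 1 if votes >= 5 else 0
    return out


def contour_points(mask: Mask) -> List[Tuple[int, int]]:
    """Contour extraction: foreground pixels with a background 4-neighbour."""
    h, w = len(mask), len(mask[0])
    points = []
    for y in range(h):
        for x in range(w):
            if not mask[y][x]:
                continue
            for dy, dx in ((-1, 0), (1, 0), (0, -1), (0, 1)):
                yy, xx = y + dy, x + dx
                if not (0 <= yy < h and 0 <= xx < w) or not mask[yy][xx]:
                    points.append((x, y))
                    break
    return points


def hand_contour(image: List[List[Pixel]]) -> List[Tuple[int, int]]:
    """Run the three stages in the order given in step (3)."""
    return contour_points(majority_filter(skin_mask(image)))
```

Feature points such as a pen tip or fingertips would then be picked from the contour; how the patent selects them is not visible in the truncated text, so that stage is left out.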

Embodiment 3

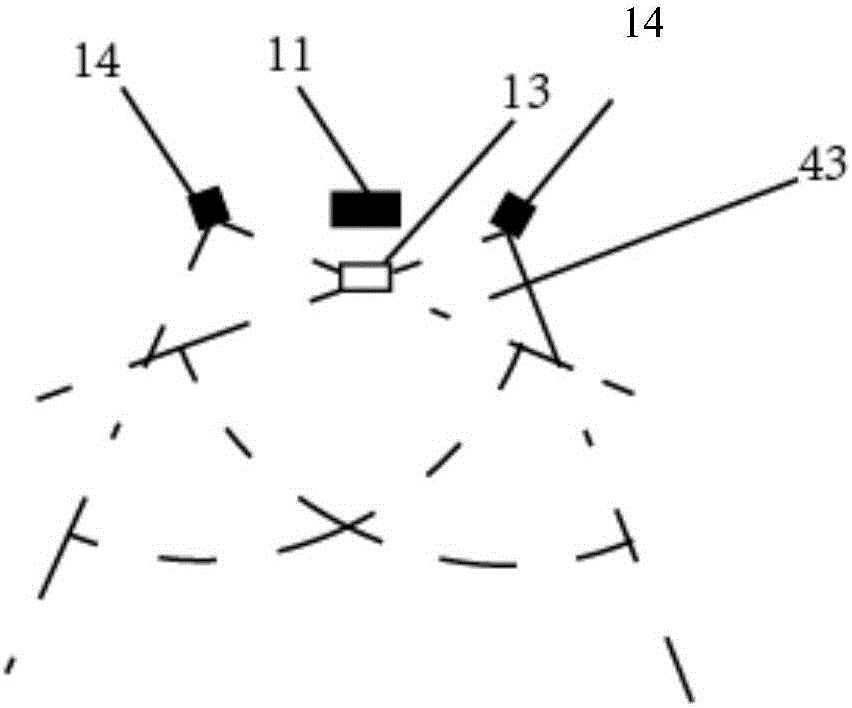

[0078] As shown in Figure 7, the human-computer interaction integration device based on somatosensory equipment described in the present invention is used in 3D model gesture control. The specific implementation method is as follows:

[0079] (1) Start the 2D mode of the display terminal 12;

[0080] (2) Start the Kinect camera 11 and the camera 14 to collect information, determine which device's eye recognition and tracking range the user is in, perform eye tracking, and switch to the corresponding 3D playback mode;

[0081] (3) Start the Leap Motion somatosensory device 13. The calculation processing unit 152 performs color space separation, filtering, segmentation, and contour extraction on the image collected in real time to obtain feature points; that is, according to the relative positional relationship between the human body and the detection device, it calculates the spatial distribution of the feature points of both hands, which is integrated with the spatial d...
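Step (2) in Embodiments 2 and 3, like step (1) in Embodiment 1, decides which device's eye-tracking range the user is in and then switches the display terminal 12 to the matching 3D playback mode. The sketch below shows one way that decision could look, assuming each device covers a fixed distance band. The range values, the mode labels, the `Tracker` type, and the function names are all hypothetical placeholders; the patent text shown here does not give them.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class Tracker:
    name: str
    min_range_m: float    # inclusive lower bound of the tracking range
    max_range_m: float    # exclusive upper bound of the tracking range
    playback_mode: str    # 3D playback mode paired with this device


# Illustrative ranges only; real values depend on the hardware and setup.
TRACKERS = (
    Tracker("camera_14", 0.3, 1.0, "3D-near"),
    Tracker("kinect_camera_11", 1.0, 3.5, "3D-far"),
)


def select_tracker(distance_m: float,
                   trackers: Sequence[Tracker] = TRACKERS) -> Optional[Tracker]:
    """Return the device whose tracking range contains the user, if any."""
    for tracker in trackers:
        if tracker.min_range_m <= distance_m < tracker.max_range_m:
            return tracker
    return None


def playback_mode_for(distance_m: float,
                      trackers: Sequence[Tracker] = TRACKERS,
                      fallback: str = "2D") -> str:
    """Pick the playback mode, staying in 2D when no device sees the user."""
    tracker = select_tracker(distance_m, trackers)
    return tracker.playback_mode if tracker else fallback


if __name__ == "__main__":
    for d in (0.5, 2.0, 5.0):
        print(d, playback_mode_for(d))
```

Falling back to 2D when no device tracks the user mirrors step (1) of Embodiments 2 and 3, where the display terminal 12 starts in 2D mode before any 3D mode is selected.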

