Video playback control method and device

A video playback control method for panoramic video, applied in the multimedia field, which addresses problems such as high consumption and serious video freezing.


Examples

Example 1

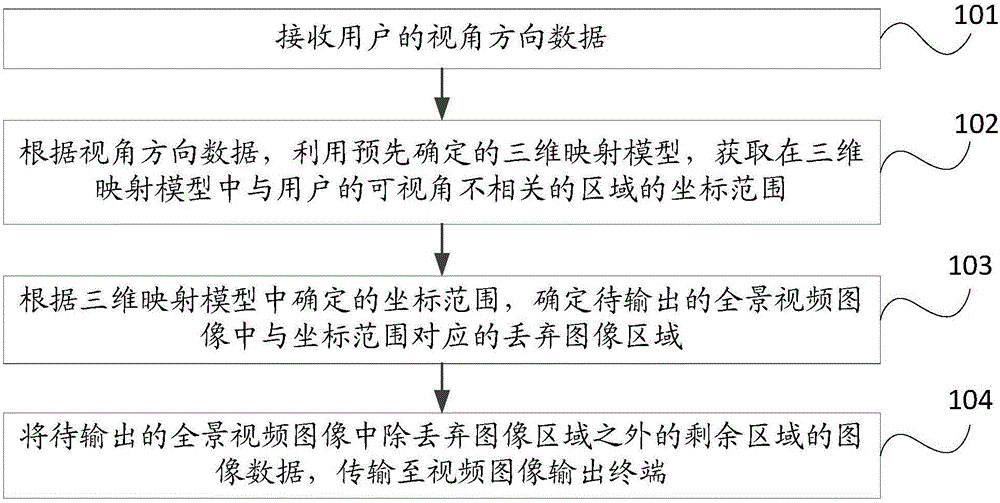

[0240] As shown in Figure 1, which is a flow chart of the steps of the video playback control method in the first embodiment of the present invention, the control method is applied to the server side and includes:

[0241] Step 101: receive viewing direction data of a user.

[0242] In this step, the server side may receive the viewing direction data transmitted in real time by the video image output terminal. Specifically, the viewing direction data may be gyroscope data, which indicates the user's viewing posture, that is, the user's viewing direction. Thus, as the server receives the gyroscope data transmitted in real time by the video image output terminal, it obtains the user's viewing direction data in real time.
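Step 101 leaves the message format open. A minimal server-side sketch, assuming the terminal has already fused its gyroscope readings into yaw and pitch angles in degrees and sends them as a dictionary such as `{"yaw": ..., "pitch": ...}` (a hypothetical format), keeps only the most recent sample per user so that later steps always work from the current viewing direction. `ViewDirection`, `parse_gyro_sample`, and `ViewDirectionStore` are illustrative names, not part of the source.

```python
import time
from dataclasses import dataclass, field
from typing import Dict, Optional


@dataclass
class ViewDirection:
    yaw_deg: float    # rotation about the vertical axis, normalized to [0, 360)
    pitch_deg: float  # elevation, clamped to [-90, 90]; positive means looking up
    received_at: float = field(default_factory=time.monotonic)


def parse_gyro_sample(sample: dict) -> ViewDirection:
    """Convert one message in the assumed format {"yaw": deg, "pitch": deg}."""
    yaw = float(sample["yaw"]) % 360.0
    pitch = max(-90.0, min(90.0, float(sample["pitch"])))
    return ViewDirection(yaw, pitch)


class ViewDirectionStore:
    """Keeps only the most recent viewing direction for each user."""

    def __init__(self) -> None:
        self._latest: Dict[str, ViewDirection] = {}

    def on_sample(self, user_id: str, sample: dict) -> ViewDirection:
        # Called for every real-time sample; newer samples overwrite older ones.
        view = parse_gyro_sample(sample)
        self._latest[user_id] = view
        return view

    def latest(self, user_id: str) -> Optional[ViewDirection]:
        return self._latest.get(user_id)
```

For example, `ViewDirectionStore().on_sample("u1", {"yaw": -30, "pitch": 120})` normalizes the yaw to 330.0 and clamps the pitch to 90.0. Keeping only the latest sample is one design choice for real-time control; a real system might also smooth or predict head motion.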

[0243] Step 102: according to the viewing direction data, use a predetermined 3D mapping model to obtain the coordinate range of the area in the 3D mapping model that is not related to the user's viewing angle. ...
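Step 102 does not spell out the 3D mapping model. As a minimal sketch, assuming a spherical model and considering only the horizontal field of view, the yaw range that is unrelated to the user's viewing angle can be computed as shown. `hidden_yaw_ranges`, `fov_deg`, and `margin_deg` are hypothetical names, and the safety margin is an illustrative assumption rather than something the source specifies.

```python
from typing import List, Tuple


def hidden_yaw_ranges(
    yaw_deg: float, fov_deg: float, margin_deg: float = 0.0
) -> List[Tuple[float, float]]:
    """Return yaw intervals [start, end) in [0, 360) that lie outside the view.

    Assumption: the mapping model is a sphere and only the horizontal field of
    view, widened by an optional margin on each side, counts as related to the
    user's viewing angle.
    """
    half = fov_deg / 2.0 + margin_deg
    if half >= 180.0:
        return []  # the whole panorama is treated as visible
    # The hidden arc starts where the visible arc ends and spans 360 - 2*half degrees.
    start = (yaw_deg + half) % 360.0
    end = start + (360.0 - 2.0 * half)
    if end <= 360.0:
        return [(start, end)]
    # The hidden arc crosses the 0/360 seam, so split it into two intervals.
    return [(start, 360.0), (0.0, end - 360.0)]
```

With a 90° field of view centered on yaw 0, `hidden_yaw_ranges(0, 90)` returns `[(45.0, 315.0)]`; when the hidden arc crosses the 0°/360° seam, as for a view centered on yaw 270°, it is split into two intervals.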

Example 2

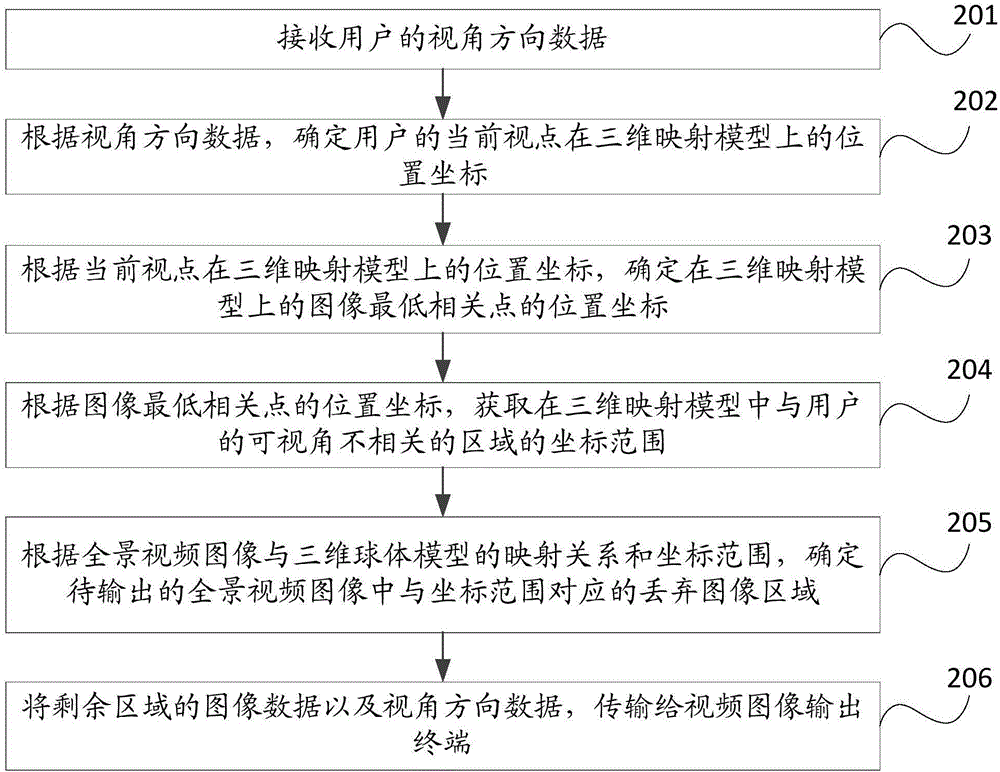

[0251] As shown in Figure 2, which is a flow chart of the steps of the video playback control method in the second embodiment of the present invention, the control method is applied to the server side and includes:

[0252] Step 201: receive viewing direction data of a user.

[0253] In this step, the server side may receive the viewing direction data transmitted in real time by the video image output terminal. Specifically, the viewing direction data may be gyroscope data, which indicates the user's viewing posture, that is, the user's viewing direction. Thus, as the server receives the gyroscope data transmitted in real time by the video image output terminal, it obtains the user's viewing direction data in real time.

[0254] Step 202: Determine the position coordinates of the user's current viewpoint on the three-dimensional mapping model according to the view direction data.
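The source does not give the formula used in Step 202. Assuming a sphere centered on the viewer, yaw and pitch in degrees, and an equirectangular frame in which column 0 corresponds to yaw 0 and row 0 to pitch +90°, the viewpoint's position can be sketched both as a point on the sphere and as a pixel in the frame. The function names and the frame layout are hypothetical.

```python
import math
from typing import Tuple


def viewpoint_on_sphere(
    yaw_deg: float, pitch_deg: float, radius: float = 1.0
) -> Tuple[float, float, float]:
    """Point where the line of sight meets a sphere centered on the viewer.

    Axes (assumed): y points up, z points toward yaw 0, x toward yaw 90.
    """
    yaw = math.radians(yaw_deg)
    pitch = math.radians(pitch_deg)
    x = radius * math.cos(pitch) * math.sin(yaw)
    y = radius * math.sin(pitch)
    z = radius * math.cos(pitch) * math.cos(yaw)
    return (x, y, z)


def viewpoint_on_frame(
    yaw_deg: float, pitch_deg: float, width: int, height: int
) -> Tuple[int, int]:
    """Pixel (column, row) of the viewpoint in an equirectangular frame."""
    u = (yaw_deg % 360.0) / 360.0 * width     # longitude maps linearly to columns
    v = (90.0 - pitch_deg) / 180.0 * height   # latitude maps linearly to rows
    # Clamp to valid pixel indices (pitch -90 would otherwise map to row == height).
    col = min(int(u), width - 1)
    row = max(0, min(int(v), height - 1))
    return (col, row)
```

For a 3840×1920 frame, looking straight ahead at yaw 180° and pitch 0° gives pixel `(1920, 960)`, the center of the frame.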

[0255] In this step, specifically, the user...

Example 3

[0360] As shown in Figure 11, which is a flow chart of the steps of the video playback control method in the third embodiment of the present invention, the control method is applied to the video image output terminal and includes:

[0361] Step 1101: acquire the user's viewing direction data in real time and send it to the server.

[0362] In this step, specifically, the user's viewing direction data may be gyroscope data, which indicates the user's viewing posture, that is, the user's viewing direction. The video image output terminal can therefore obtain the user's viewing direction data through the gyroscope and, having obtained it in real time, send it to the server.
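Step 1101 requires the terminal to send viewing direction data to the server in real time but does not define a transport format. One compact option, shown here only as an assumption, is a fixed 20-byte binary packet built with Python's `struct` module: a sequence number, a millisecond timestamp, and yaw and pitch as 32-bit floats in network byte order. The field layout and the function names are hypothetical.

```python
import struct
import time
from typing import Optional, Tuple

# Hypothetical wire format (network byte order, no padding):
# uint32 sequence number, uint64 timestamp in ms, float32 yaw, float32 pitch.
_VIEW_PACKET = struct.Struct("!IQff")


def encode_view_sample(
    seq: int, yaw_deg: float, pitch_deg: float, timestamp_ms: Optional[int] = None
) -> bytes:
    """Pack one viewing direction sample on the terminal side."""
    if timestamp_ms is None:
        timestamp_ms = int(time.time() * 1000)
    # Wrap the sequence number so it always fits in 32 bits.
    return _VIEW_PACKET.pack(seq & 0xFFFFFFFF, timestamp_ms, yaw_deg, pitch_deg)


def decode_view_sample(data: bytes) -> Tuple[int, int, float, float]:
    """Unpack a sample on the server side: (seq, timestamp_ms, yaw, pitch)."""
    return _VIEW_PACKET.unpack(data)
```

The sequence number would let the server ignore stale or reordered samples if the packets travel over an unreliable transport such as UDP; each packet could then be sent with `socket.sendto`.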

[0363] Step 1102: receive the image data of the remaining area except the discarded im...
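Step 1102 is truncated in the source, so how the terminal handles the discarded area is not stated. As a hypothetical sketch, suppose the server sends the remaining area as vertical column strips of an equirectangular frame, each tagged with its column range; the terminal can then rebuild a full-size frame and fill the discarded columns with a placeholder value. Pixels are plain integers here, and `reassemble_frame` and the strip layout are assumptions.

```python
from typing import List, Sequence, Tuple

Pixel = int  # placeholder pixel type, e.g. a packed RGB value
# (first column, end column exclusive, rows of pixels for those columns)
Strip = Tuple[int, int, List[List[Pixel]]]


def reassemble_frame(
    width: int, height: int, strips: Sequence[Strip], fill: Pixel = 0
) -> List[List[Pixel]]:
    """Rebuild a width x height frame from the received strips.

    Columns not covered by any strip (the discarded area) keep the fill value.
    """
    frame = [[fill] * width for _ in range(height)]
    for col_start, col_end, rows in strips:
        if len(rows) != height:
            raise ValueError("strip height does not match frame height")
        for y, row in enumerate(rows):
            if len(row) != col_end - col_start:
                raise ValueError("strip width does not match its column range")
            # Equal-length slice assignment keeps the row width unchanged.
            frame[y][col_start:col_end] = row
    return frame
```

Filling the discarded area with a constant is acceptable only while that area stays outside the user's field of view; an alternative would be to keep the previous frame's pixels there.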
