Human-machine interactive voice control method and device based on user emotional state, and vehicle

A voice control and human-computer interaction technology, applied in speech analysis, speech recognition, instruments and related fields. It addresses problems that affect driving safety and for which solutions have not yet been widely adopted, with the effect of improving driving safety.

Detailed Description of Embodiments

[0036] The present invention will be described more fully hereinafter with reference to the accompanying drawings, in which exemplary embodiments of the invention are illustrated.

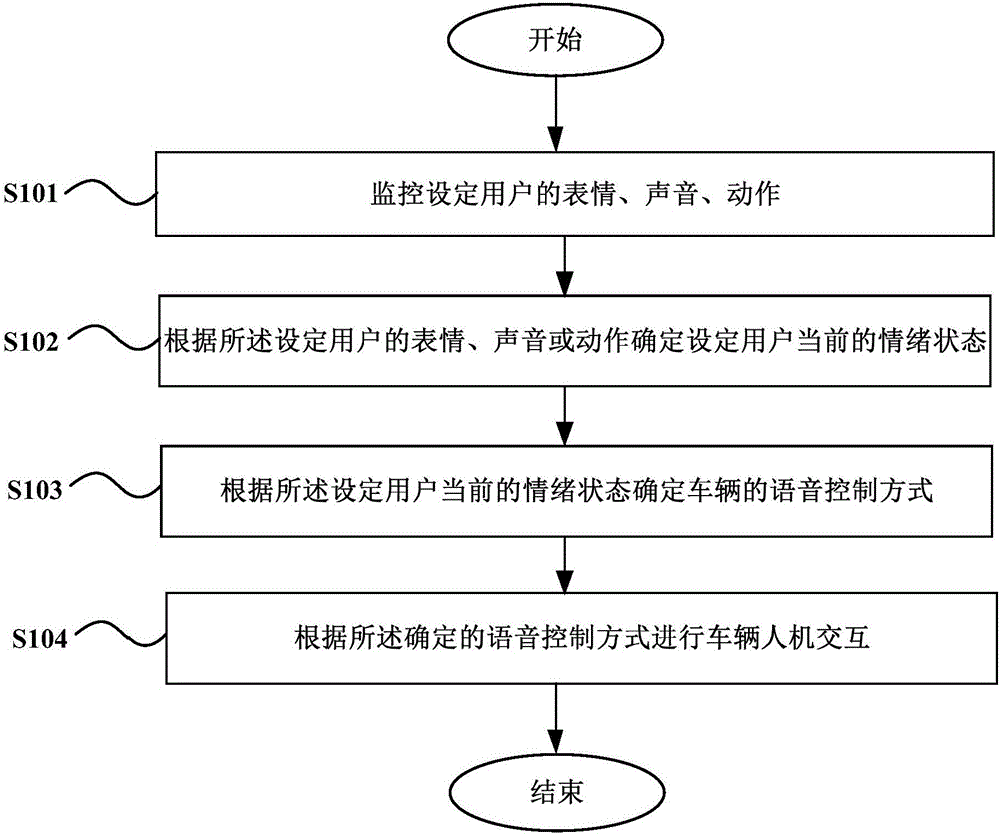

[0037] Figure 1 shows a flowchart of the human-computer interaction voice control method based on the user's emotional state according to an embodiment of the present invention. As shown in Figure 1, the method includes the following steps.

[0038] Step 101: Monitor the expression, voice or action of a designated user.

[0039] In one embodiment, a combination of various sensors can be used to monitor or detect the user's expression, voice or action.

[0040] For example, the vehicle's built-in driver-fatigue monitoring camera can be used to monitor the user's expressions, actions and so on, and the vehicle's built-in microphone can be used to detect the designated user's voice.
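The monitoring step can be pictured as merging timestamped readings from several sensors into one per-user view. The Python sketch below is a minimal illustration under stated assumptions, not the patent's implementation: it assumes each sensor tags its readings with the occupant they belong to, and the `Observation` and `UserSnapshot` records, the sensor names and `collect_snapshot` are all hypothetical. It keeps only the designated user's readings and, for each of the three modalities named in the text, the newest one.

```python
from dataclasses import dataclass, field

# Modalities named in the text; everything else here is a hypothetical sketch.
MODALITIES = ("expression", "voice", "action")


@dataclass
class Observation:
    """One reading from one sensor (hypothetical record format)."""
    user_id: str      # occupant the reading belongs to, e.g. "driver"
    sensor: str       # hypothetical sensor name, e.g. "fatigue_camera"
    modality: str     # one of MODALITIES
    features: dict    # sensor-specific feature values
    timestamp: float  # seconds since an arbitrary epoch


@dataclass
class UserSnapshot:
    """Newest reading per modality for the designated user."""
    user_id: str
    latest: dict = field(default_factory=dict)  # modality -> Observation


def collect_snapshot(observations, designated_user):
    """Drop other occupants' readings and keep the newest reading per modality."""
    snap = UserSnapshot(user_id=designated_user)
    for obs in observations:
        if obs.user_id != designated_user or obs.modality not in MODALITIES:
            continue
        prev = snap.latest.get(obs.modality)
        if prev is None or obs.timestamp > prev.timestamp:
            snap.latest[obs.modality] = obs
    return snap


# Example: the camera reports two expressions, the microphone hears two people.
readings = [
    Observation("driver", "fatigue_camera", "expression", {"smile": 0.1}, 10.0),
    Observation("driver", "fatigue_camera", "expression", {"smile": 0.7}, 12.0),
    Observation("driver", "cabin_microphone", "voice", {"pitch_hz": 210.0}, 11.0),
    Observation("passenger", "cabin_microphone", "voice", {"pitch_hz": 180.0}, 11.5),
]
snap = collect_snapshot(readings, "driver")
```

Here the passenger's voice is discarded and only the later expression reading survives; no action reading is present, so that modality is simply absent from the snapshot.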

[0041] Step 102: Determine the current emotional state of the designated user according to the designated user's expression, voice or action.
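The visible text does not say how the expression, voice and action cues are combined into one emotional state. One common option is weighted late fusion: each modality's own classifier outputs a probability distribution over emotions, and the distributions are averaged with per-modality weights. The Python sketch below assumes that approach; the emotion labels, the weights and `fuse_emotion` are hypothetical and are not taken from the patent.

```python
# Hypothetical emotion labels; the source does not enumerate emotional states.
EMOTIONS = ("calm", "happy", "angry", "anxious", "fatigued")

# Hypothetical per-modality weights; a real system would tune or learn them.
DEFAULT_WEIGHTS = {"expression": 0.4, "voice": 0.4, "action": 0.2}


def fuse_emotion(scores_by_modality, weights=None):
    """Weighted late fusion of per-modality emotion distributions.

    scores_by_modality maps a modality name to {emotion: probability} as
    produced by that modality's own classifier. Weights are renormalised over
    the modalities that actually reported, so a missing sensor is tolerated.
    Returns (most likely emotion, fused distribution).
    """
    weights = DEFAULT_WEIGHTS if weights is None else weights
    total_w = sum(weights.get(m, 0.0) for m in scores_by_modality)
    if total_w <= 0:
        raise ValueError("no modality with a positive weight reported scores")
    fused = {e: 0.0 for e in EMOTIONS}
    for modality, dist in scores_by_modality.items():
        w = weights.get(modality, 0.0) / total_w
        for e in EMOTIONS:
            fused[e] += w * dist.get(e, 0.0)
    best = max(EMOTIONS, key=fused.get)
    return best, fused


# Example: camera and microphone both lean towards "angry"; no action data.
state, dist = fuse_emotion({
    "expression": {"angry": 0.6, "calm": 0.4},
    "voice": {"angry": 0.7, "anxious": 0.3},
})
```

With no action reading, the expression and voice weights are each renormalised to 0.5, giving a fused score of about 0.65 for "angry". Renormalising in this way lets the estimate degrade gracefully when one sensor is unavailable rather than being biased towards "no emotion".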

[0042] In one embod...
