Real-time binocular vision positioning method based on GPU-SIFT

A binocular vision positioning method in the field of video technology, applicable to navigation, instrumentation, and surveying. It addresses the problem that image matching is time-consuming and difficult to achieve in real time by speeding up the SIFT feature matching process. The method offers scalability and practicability, strong environmental applicability, and high positioning accuracy.


Embodiment Construction

[0033] The present invention is described in further detail below with reference to the accompanying drawings and a specific embodiment:

[0034] In this embodiment, the real-time binocular vision positioning method based on GPU-SIFT proposed by the present invention includes the following steps:

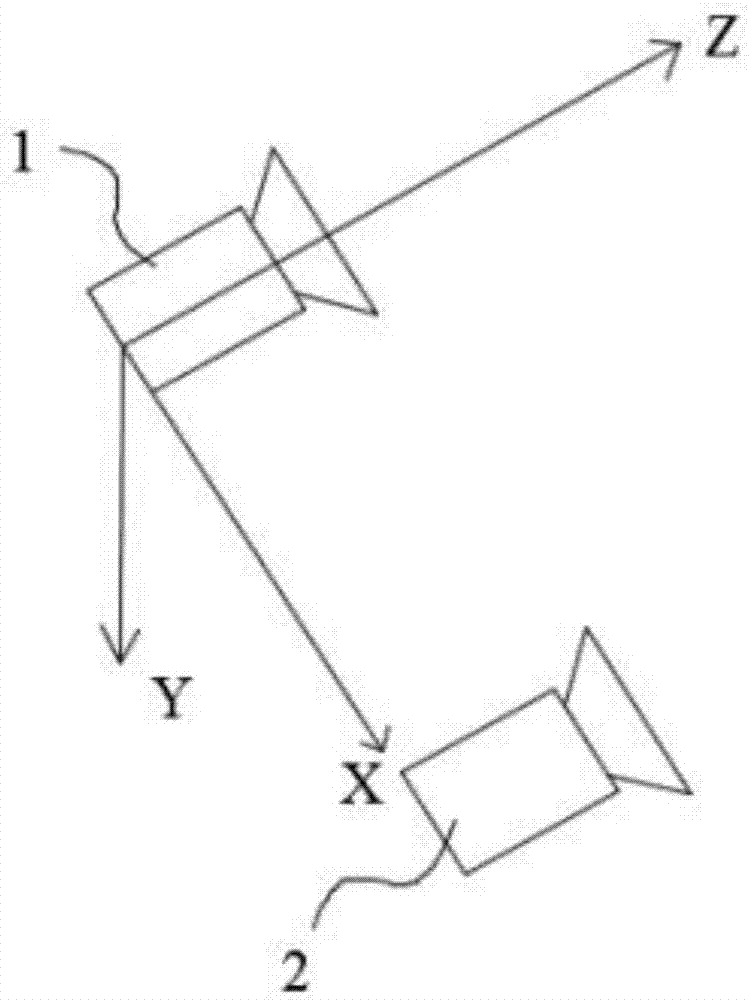

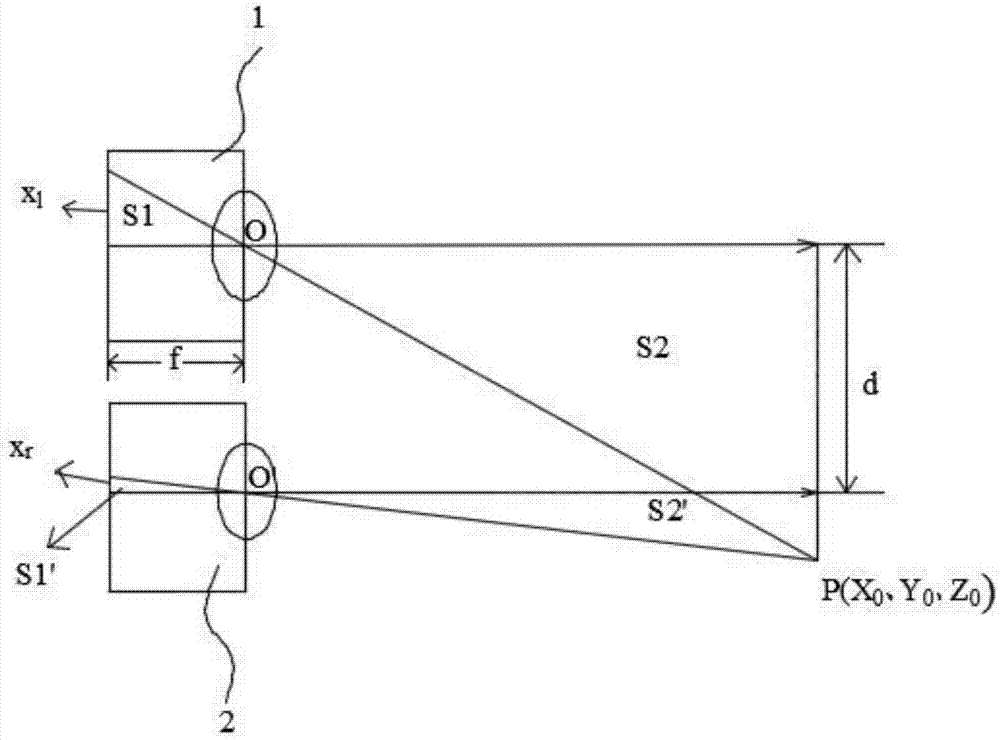

[0035] Step 1: Use a parallel binocular camera to capture stereoscopic video, consisting of left-eye and right-eye images, while the robot or mobile platform moves;

[0036] Step 2: Use the GPU-SIFT feature matching algorithm to obtain the corresponding matching points in two consecutive frames of the video captured during the motion;

[0037] Step 3: Estimate the displacement of the camera by solving the equations of motion, using either the coordinate changes of the matched points in imaging space or their reconstructed three-dimensional coordinates;

[0038] Step 4: After obtaining the position and rotation angle of the camera at each moment of travel, combined with Kalman filtering, th...
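The three-dimensional-coordinate route mentioned in Step 3 can be illustrated with a minimal sketch. It assumes a rectified, parallel stereo pair, for which depth follows the standard relation Z = f·B/d (focal length f in pixels, baseline B, disparity d). The names `StereoRig`, `triangulate`, and `translation_only_displacement`, and all calibration values, are hypothetical and do not come from the patent. The displacement estimate further assumes a static scene and no camera rotation, which is a deliberate simplification and not the patent's motion equation.

```python
from dataclasses import dataclass


@dataclass
class StereoRig:
    """Hypothetical calibration of a rectified, parallel stereo pair."""
    focal_px: float    # focal length, in pixels
    baseline_m: float  # distance between the optical centres, in metres
    cx: float          # principal point x, in pixels
    cy: float          # principal point y, in pixels


def triangulate(rig, u_left, v_left, u_right):
    """Return (X, Y, Z) in the left-camera frame for one left/right match."""
    disparity = u_left - u_right
    if disparity <= 0:
        raise ValueError("disparity must be positive for a point in front of the rig")
    z = rig.focal_px * rig.baseline_m / disparity
    x = (u_left - rig.cx) * z / rig.focal_px
    y = (v_left - rig.cy) * z / rig.focal_px
    return (x, y, z)


def translation_only_displacement(points_prev, points_curr):
    """Estimate camera translation between two frames.

    Assumption (not from the patent): the scene is static and the camera
    does not rotate, so a point that appears to shift by d in the camera
    frame implies the camera itself moved by -d. The shifts of all matched
    points are averaged.
    """
    if not points_prev or len(points_prev) != len(points_curr):
        raise ValueError("need two non-empty point lists of equal length")
    n = len(points_prev)
    return tuple(
        -sum(curr[i] - prev[i] for prev, curr in zip(points_prev, points_curr)) / n
        for i in range(3)
    )
```

In use, each match from Step 2 would be triangulated in the earlier and the later frame, and the two resulting point lists passed to `translation_only_displacement`. Recovering rotation as well would require a full rigid-motion solver, which this sketch does not attempt.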
