Storm task expansion scheduling algorithm based on data stream prediction

A task scheduling algorithm and technology, applied in digital data processing, computing, and program startup/switching. It addresses problems such as time overhead, insufficient consideration of the relevance between tasks, and increased tuple processing delay, with the effects of reducing processing delay and improving throughput.
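The summary says that existing schedulers give insufficient consideration to the relevance between tasks, which increases tuple processing delay. One common way to account for this is to treat relevance as the tuple traffic exchanged between executors and to co-locate heavily communicating executors on the same node. The source does not confirm that this is the patented method. The sketch below is a minimal greedy version under that assumption. The functions `place_executors` and `remote_traffic`, the traffic-dictionary input format, and the uniform per-node slot limit are all hypothetical.

```python
from collections import defaultdict


def place_executors(traffic, executors, nodes, slots_per_node):
    """Greedy traffic-aware placement (illustrative sketch, not the patented method).

    traffic: {(exec_a, exec_b): tuples_per_second}  -- hypothetical input format
    executors: executor ids to place
    nodes: worker node ids
    slots_per_node: assumed uniform cap on executors per node
    Returns {executor: node}.
    """
    if len(executors) > len(nodes) * slots_per_node:
        raise ValueError("not enough slots for all executors")

    # Total traffic each executor participates in; heavy talkers are placed first.
    load = defaultdict(float)
    for (a, b), rate in traffic.items():
        load[a] += rate
        load[b] += rate

    assignment = {}
    used = {n: 0 for n in nodes}
    for ex in sorted(executors, key=lambda e: load[e], reverse=True):
        best_node, best_gain = None, -1.0
        for n in nodes:
            if used[n] >= slots_per_node:
                continue
            # Traffic that would stay node-local if `ex` joins node `n`.
            gain = sum(
                rate
                for (a, b), rate in traffic.items()
                if (a == ex and assignment.get(b) == n)
                or (b == ex and assignment.get(a) == n)
            )
            # Prefer higher local traffic; on ties, prefer the emptier node.
            if gain > best_gain or (gain == best_gain and used[n] < used[best_node]):
                best_node, best_gain = n, gain
        assignment[ex] = best_node
        used[best_node] += 1
    return assignment


def remote_traffic(traffic, assignment):
    """Tuples/s that must cross the network under `assignment`."""
    return sum(rate for (a, b), rate in traffic.items() if assignment[a] != assignment[b])
```

Consider two independent spout → split → count pipelines with hypothetical executor ids such as `spout-1`, `split-1`, `count-1`, placed on two nodes with three slots each. In that case the greedy pass keeps each pipeline on a single node, so `remote_traffic` returns 0. A greedy pass is cheap, which matters when rescheduling runs every cycle. However, it is not guaranteed to find the minimum-traffic placement.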



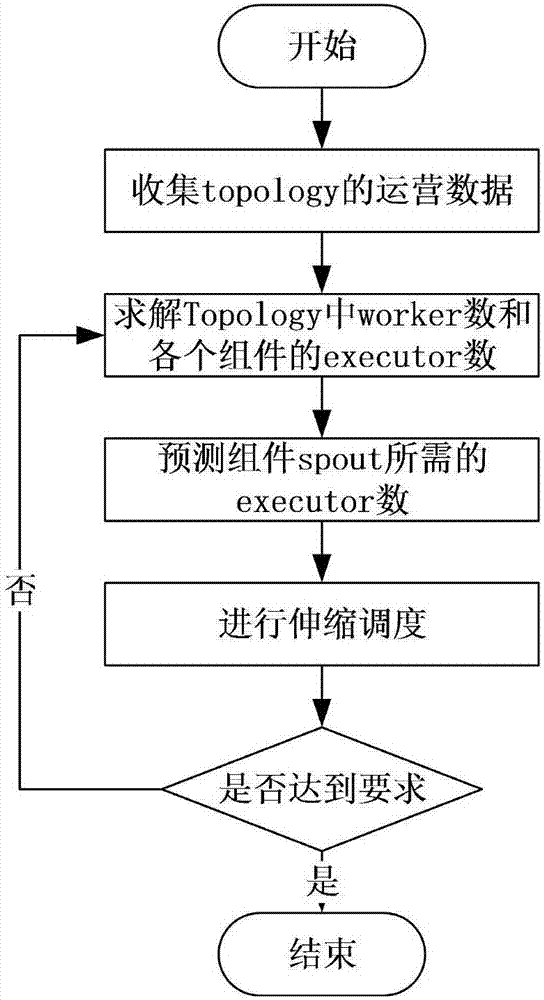

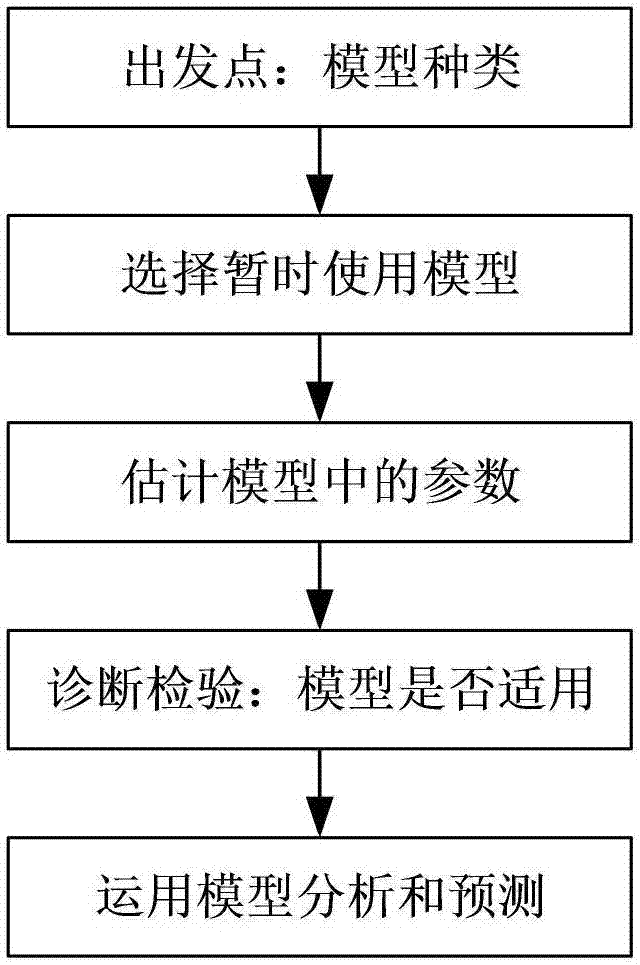


Embodiment Construction

[0030] The preferred embodiments of the present invention will be described in detail below with reference to the accompanying drawings.

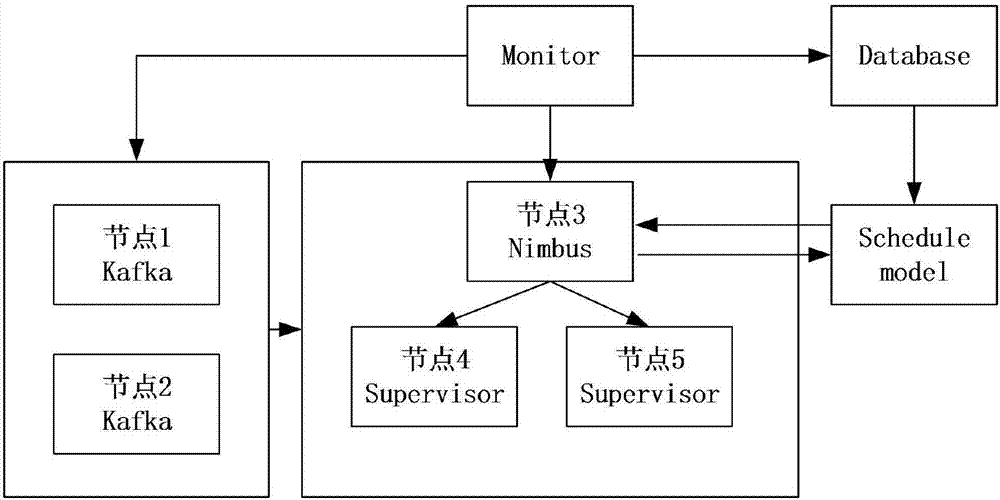

[0031] As shown in Figure 3, the implementation of the present invention comprises three modules: a topology monitoring module, a scaling module, and a scheduling module. The topology monitoring module calls the Thrift interface of NimbusClient to obtain the monitoring data that the Storm UI displays for each topology running in Storm. It also uses the Ganglia cluster monitoring tool to obtain the data and load of each node. These data are then saved in a MySQL database. At the beginning of each cycle, the scaling module reads the operation data of each topology that was saved in MySQL during the previous cycle. It then uses the model described above to solve the scaling plan of each topology for the current cycle, after which scheduling is performed. The present invention is described in detail below, taking word counting over microblog posts as an example:

[0032]...
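Paragraph [0031] describes a per-cycle loop: the monitoring data of the previous cycle are read from MySQL, a model solves the scaling plan, and scheduling follows. The source's prediction model is not included in this excerpt, so the sketch below uses a stand-in. It substitutes Holt's linear (double) exponential smoothing to forecast each component's input rate for the next cycle. It then converts that forecast into an executor count. The following are all assumptions for illustration and do not come from the source:

- the function names `holt_forecast`, `plan_parallelism`, and `scaling_cycle`;
- the smoothing parameters;
- the fixed per-executor capacity;
- the 20% headroom factor;
- the plain dict standing in for the MySQL table.

```python
import math


def holt_forecast(rates, alpha=0.5, beta=0.3):
    """One-step-ahead forecast using Holt's linear (double) exponential smoothing.

    Stand-in predictor: the source's own prediction model is not shown here.
    """
    if not rates:
        raise ValueError("need at least one observation")
    level, trend = float(rates[0]), 0.0
    for x in rates[1:]:
        prev_level = level
        level = alpha * x + (1 - alpha) * (level + trend)
        trend = beta * (level - prev_level) + (1 - beta) * trend
    return level + trend


def plan_parallelism(rates, capacity_per_executor, headroom=1.2, min_par=1, max_par=64):
    """Executors needed next cycle for one component.

    capacity_per_executor (tuples/s one executor sustains), headroom and the
    [min_par, max_par] bounds are assumed tuning parameters.
    """
    predicted = max(0.0, holt_forecast(rates))  # a falling trend must not go negative
    needed = math.ceil(predicted * headroom / capacity_per_executor)
    return max(min_par, min(max_par, needed)), predicted


def scaling_cycle(history, current, capacity):
    """Run once at the start of each cycle.

    history:  {component: [input rate per past cycle]}  -- stand-in for the MySQL table
    current:  {component: executors now}
    capacity: {component: tuples/s per executor}
    Returns {component: new executor count} for components that must change.
    """
    changes = {}
    for comp, rates in history.items():
        target, _ = plan_parallelism(rates, capacity[comp])
        if target != current.get(comp):
            changes[comp] = target
    return changes
```

Take a component whose input rate rose through 100, 200, 300, and 400 tuples/s over the last four cycles, with each executor handling 100 tuples/s. The forecast is about 389 tuples/s and the plan is 5 executors. A steady component whose plan matches its current executor count is left out of the returned changes. In Storm, such a change could be applied with `storm rebalance <topology> -e <component>=<parallelism>`. Rebalancing can only raise a component's executor count up to the number of tasks fixed when the topology was submitted.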
