3D Video Depth Image Prediction Mode Selection Method Based on Viewpoint Correlation

A prediction mode selection method for depth images, in the field of video coding and decoding. It addresses two problems with existing depth-map mode selection algorithms: they do not sufficiently exploit inter-view (viewpoint) correlation, and their complexity remains too high. The method reduces coding complexity.

Embodiment

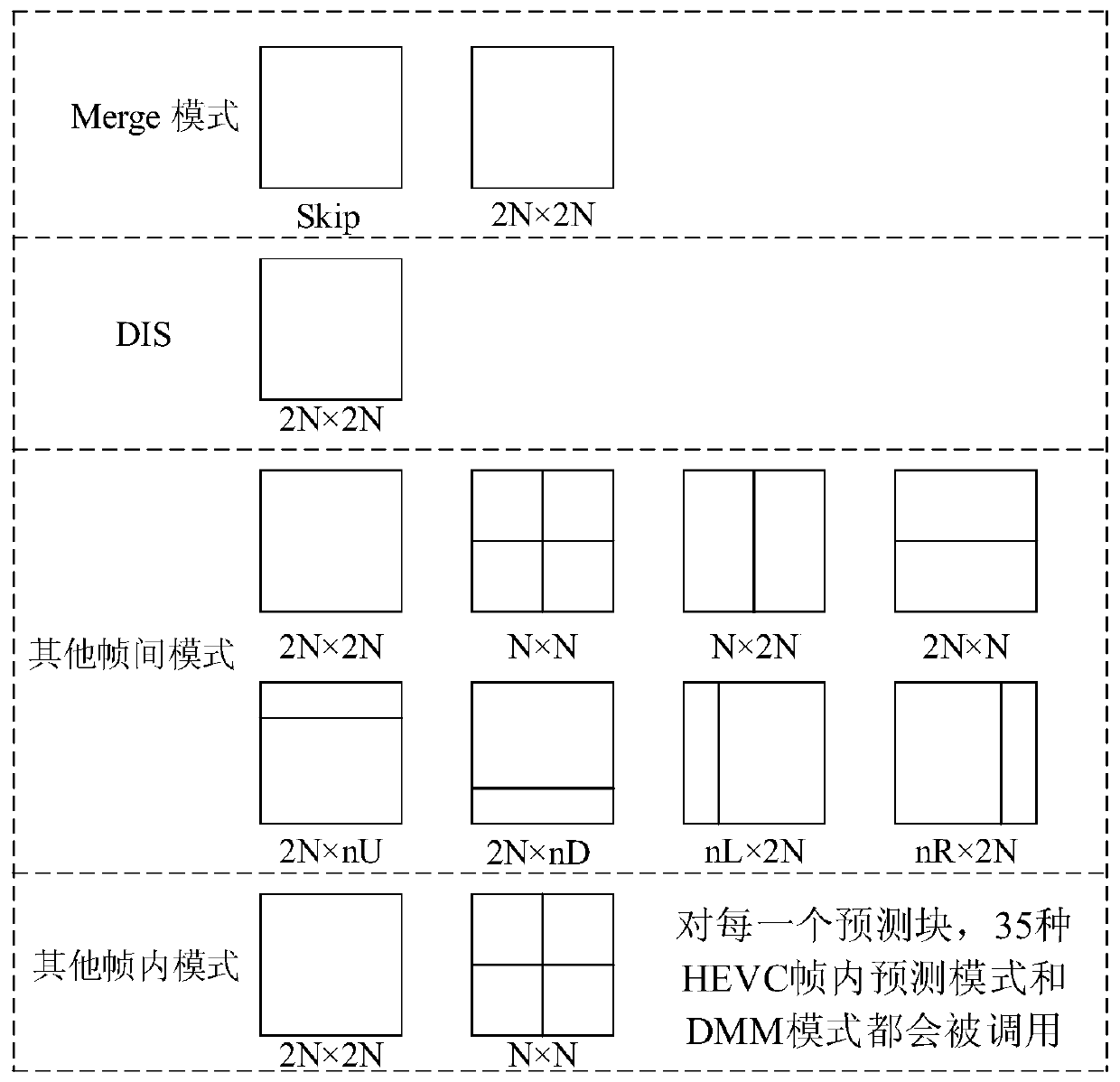

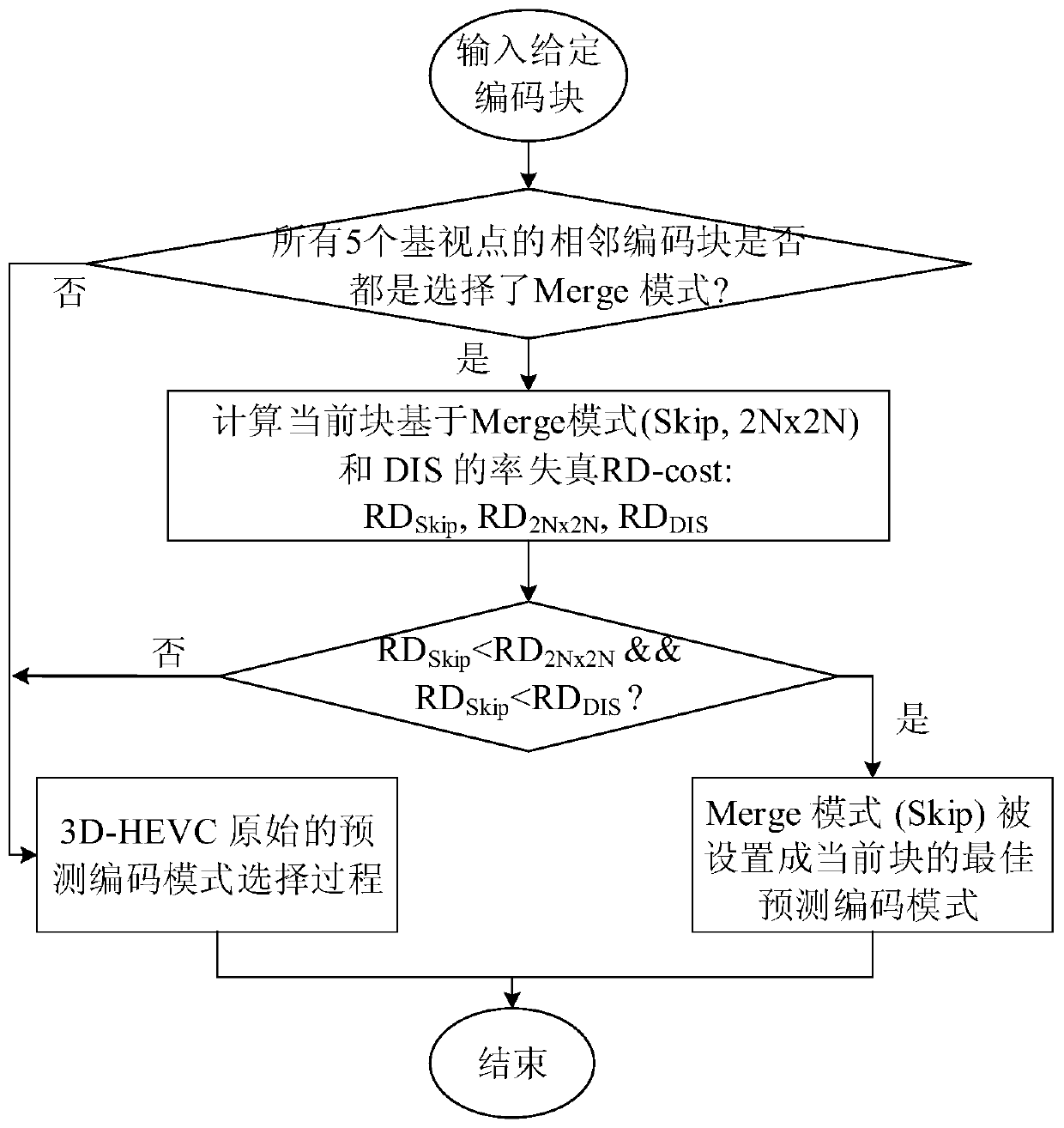

[0041] This embodiment provides a fast prediction mode selection method for 3D video depth images based on inter-view correlation. As shown in Figure 3, the method comprises the following steps:

[0042] Step 1: For an input coding block in a dependent (non-base) view, determine whether the five neighboring reference coding blocks in the base view have selected Merge mode as their prediction mode. If so, go to Step 2; otherwise, go to Step 5.

[0043] Step 2: Calculate the rate-distortion cost (RD-cost) of the current coding block for Skip mode, 2N×2N Merge mode and DIS (depth intra skip) mode, using:

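[0044] $$J(m) = D_{\mathrm{VSO}}(m) + \lambda \cdot B(m), \quad m \in C$$

where $C$ is the candidate mode set {Skip, 2N×2N Merge, DIS}, $D_{\mathrm{VSO}}(m)$ is the distortion of mode $m$ measured with view synthesis optimization (VSO), $B(m)$ is the number of bits required to code the block in mode $m$, and $\lambda$ is the Lagrange multiplier.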

[0045] Step 3: Determine whether the RD-cost of Skip mode is smaller than those of both 2N×2N Merge mode and DIS mode. If so, go to Step 4; otherwise, go to Step 5.

[0046] Step 4: Select Skip mode as the prediction mode of the current coding block, terminate the mode selection process early, and go to Step 6.

[0047] Step 5: ...
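The decision flow of Steps 1 to 4 can be sketched in Python. This is a minimal, non-authoritative illustration built on several assumptions that the excerpt does not state:

- Step 1 is read as requiring all five base-view reference blocks to have chosen Merge.
- The encoder has already measured $D_{\mathrm{VSO}}(m)$ and $B(m)$ for each candidate mode.
- Step 5, which is elided in the source, is replaced by a hypothetical `full_search` callback.

The function names (`rd_cost`, `select_mode`), the `ModeCost` type and the mode label strings are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Sequence

# Candidate set C from Step 2 (labels are illustrative).
CANDIDATES = ("Skip", "Merge2Nx2N", "DIS")


@dataclass
class ModeCost:
    d_vso: float  # D_VSO(m): VSO-based distortion, assumed precomputed by the encoder
    bits: float   # B(m): bits needed to code the block in mode m


def rd_cost(cost: ModeCost, lam: float) -> float:
    """J(m) = D_VSO(m) + lambda * B(m)."""
    return cost.d_vso + lam * cost.bits


def select_mode(
    base_view_neighbor_modes: Sequence[str],
    mode_costs: Dict[str, ModeCost],
    lam: float,
    full_search: Callable[[], str],  # hypothetical stand-in for the elided Step 5
) -> str:
    # Step 1 (assumed reading): all five base-view reference blocks chose Merge.
    if len(base_view_neighbor_modes) == 5 and all(
        m == "Merge" for m in base_view_neighbor_modes
    ):
        # Step 2: RD-cost of every candidate mode in C.
        j = {m: rd_cost(mode_costs[m], lam) for m in CANDIDATES}
        # Step 3: is Skip strictly cheaper than both 2Nx2N Merge and DIS?
        if j["Skip"] < j["Merge2Nx2N"] and j["Skip"] < j["DIS"]:
            # Step 4: choose Skip and terminate early (Step 6 follows in the encoder).
            return "Skip"
    # Step 5: fall back to the normal mode decision (not shown in the excerpt).
    return full_search()


if __name__ == "__main__":
    costs = {
        "Skip": ModeCost(d_vso=100.0, bits=2.0),       # J = 100 + 4*2  = 108
        "Merge2Nx2N": ModeCost(d_vso=90.0, bits=10.0),  # J = 90 + 4*10  = 130
        "DIS": ModeCost(d_vso=95.0, bits=8.0),          # J = 95 + 4*8   = 127
    }
    print(select_mode(["Merge"] * 5, costs, lam=4.0, full_search=lambda: "FULL"))  # Skip
```

With these example costs and λ = 4, Skip has the lowest RD-cost (108 versus 130 and 127), so the search terminates early. With λ = 0.5, Skip costs 101 while 2N×2N Merge costs 95, so the flow falls through to Step 5. This shows how the early exit depends on the relative weight given to the bit cost.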
