Concurrent calculation system based on Spark and GPU (Graphics Processing Unit)

A parallel and distributed computing technology, applied in computing, computers, digital computer components, etc., that solves problems such as the inability to perceive GPU resources, the inability to distinguish between CPU tasks and GPU tasks, and task execution failures.


Embodiment Construction

[0054] The technical scheme of the present invention will be further described below in conjunction with the accompanying drawings.

[0055] Figure 1 is a block diagram of model training and image recognition, which mainly includes:

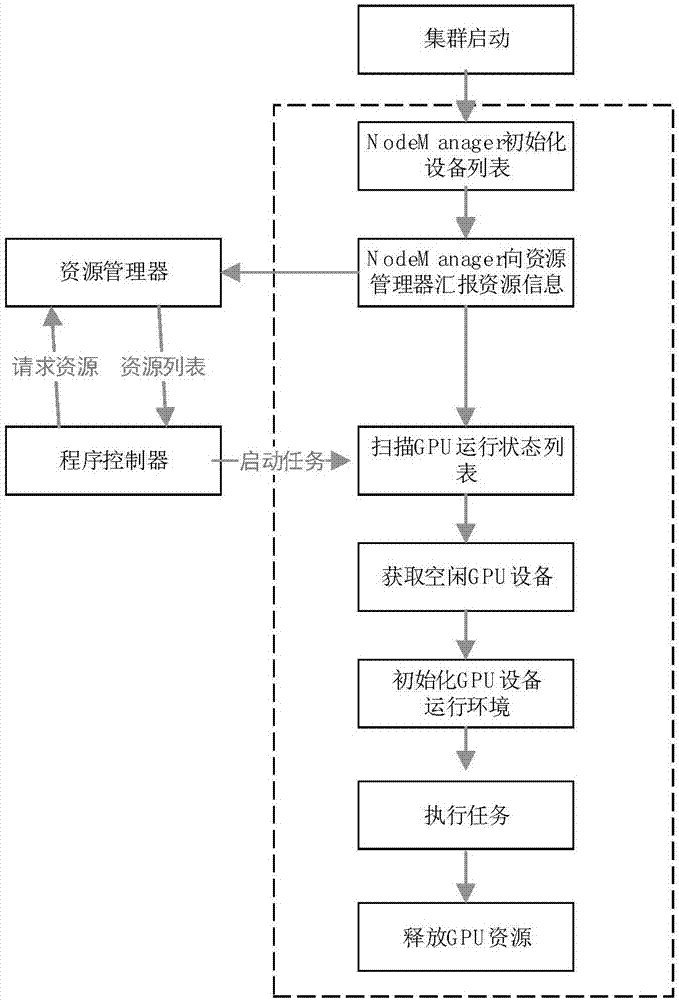

[0056] 1. In terms of resource representation, the number of GPU devices contained in a node can first be customized, and the resource representation protocol is modified to add a representation of GPU resources. When the node starts, the node manager initializes its resource list and reports the node's resource information to the resource manager through the heartbeat mechanism.
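The resource-representation step can be sketched as follows. This is a minimal illustration, not the patented implementation: it assumes a YARN-like split between a central resource manager and per-node node managers, and the class names `NodeResource`, `NodeManager`, and `ResourceManager` and the method `send_heartbeat` are hypothetical. The key idea shown is that the resource record gains a GPU dimension, the node manager fills it in at startup from a configured GPU count, and the report travels to the resource manager on a heartbeat.

```python
from dataclasses import dataclass


@dataclass
class NodeResource:
    # Hypothetical resource record, extended with a GPU dimension.
    memory_mb: int
    vcores: int
    gpus: int = 0  # number of GPU devices configured for this node


class ResourceManager:
    """Hypothetical central manager that tracks each node's reported resources."""

    def __init__(self) -> None:
        self.cluster: dict[str, NodeResource] = {}

    def on_heartbeat(self, node_id: str, resource: NodeResource) -> None:
        # Record (or refresh) the latest resource report from a node.
        self.cluster[node_id] = resource

    def total_gpus(self) -> int:
        # GPU capacity is now visible cluster-wide, alongside CPU and memory.
        return sum(r.gpus for r in self.cluster.values())


class NodeManager:
    """Hypothetical per-node agent."""

    def __init__(self, node_id: str, memory_mb: int, vcores: int, gpus: int) -> None:
        self.node_id = node_id
        # Initialize the resource list at startup, including the
        # administrator-configured number of GPU devices.
        self.resource = NodeResource(memory_mb=memory_mb, vcores=vcores, gpus=gpus)

    def send_heartbeat(self, rm: ResourceManager) -> None:
        # In a real system this runs periodically; here it is a single call.
        rm.on_heartbeat(self.node_id, self.resource)


if __name__ == "__main__":
    rm = ResourceManager()
    NodeManager("node-1", memory_mb=65536, vcores=32, gpus=4).send_heartbeat(rm)
    NodeManager("node-2", memory_mb=32768, vcores=16, gpus=0).send_heartbeat(rm)
    print(rm.total_gpus())  # 4
```

Because the GPU count travels in the same record as memory and CPU cores, the resource manager can perceive GPU capacity without a separate reporting channel.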

[0057] 2. In terms of resource scheduling, the present invention adds GPU, CPU, and memory resources to the hierarchical management queue of the resource management platform.
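A hierarchical queue that accounts for GPU, CPU, and memory together might look like the sketch below. It assumes a capacity-style queue tree in which a request is admitted only if the leaf queue and every ancestor have room in all three dimensions; the names `Resources`, `Queue`, `child`, and `try_allocate` are hypothetical and not taken from the source. Routing GPU tasks to a queue with GPU capacity and CPU tasks to a queue with zero GPU capacity is one illustrative way to keep the two kinds of task apart.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Resources:
    memory_mb: int = 0
    vcores: int = 0
    gpus: int = 0

    def fits_within(self, other: "Resources") -> bool:
        # True if every dimension is within the other's limit.
        return (self.memory_mb <= other.memory_mb
                and self.vcores <= other.vcores
                and self.gpus <= other.gpus)

    def __add__(self, other: "Resources") -> "Resources":
        return Resources(self.memory_mb + other.memory_mb,
                         self.vcores + other.vcores,
                         self.gpus + other.gpus)


@dataclass
class Queue:
    name: str
    capacity: Resources
    parent: Optional["Queue"] = None
    used: Resources = field(default_factory=Resources)

    def child(self, name: str, capacity: Resources) -> "Queue":
        return Queue(name, capacity, parent=self)

    def try_allocate(self, request: Resources) -> bool:
        # Walk from this leaf up to the root; every level must have room
        # in all three dimensions (memory, CPU, GPU).
        chain = []
        q: Optional[Queue] = self
        while q is not None:
            if not (q.used + request).fits_within(q.capacity):
                return False
            chain.append(q)
            q = q.parent
        # Commit only after every level has accepted the request.
        for q in chain:
            q.used = q.used + request
        return True


if __name__ == "__main__":
    root = Queue("root", Resources(131072, 64, 8))
    gpu_q = root.child("gpu", Resources(65536, 16, 8))
    cpu_q = root.child("cpu", Resources(65536, 48, 0))
    print(gpu_q.try_allocate(Resources(8192, 4, 2)))  # True
    print(cpu_q.try_allocate(Resources(4096, 2, 1)))  # False: no GPUs in this queue
```

Checking all levels before committing keeps the accounting consistent: a request rejected at the root leaves the leaf's usage untouched.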

[0058] 3. The resource manager sends the resource list information to be released to the corresponding node manager through the heartbeat mechanism. When the node manager detects th...
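The first part of this step, in which the resource manager delivers a release list to the node manager via the heartbeat, can be sketched as below. This assumes the release list is piggybacked on the heartbeat response; the class name `ResourceManager`, the methods `mark_for_release` and `handle_heartbeat`, and the response dictionary layout are hypothetical. What the node manager does after detecting the list is truncated in the source and is deliberately not modeled here.

```python
from collections import defaultdict


class ResourceManager:
    """Hypothetical resource manager that defers release notices to heartbeats."""

    def __init__(self) -> None:
        # node_id -> ids of resources (e.g. containers) waiting to be released
        self._pending_release: dict[str, list[str]] = defaultdict(list)

    def mark_for_release(self, node_id: str, resource_id: str) -> None:
        # Queue a release notice; it is not sent until the node's next heartbeat.
        self._pending_release[node_id].append(resource_id)

    def handle_heartbeat(self, node_id: str) -> dict:
        # Attach the node's pending release list to the heartbeat response and
        # clear it, so each notice is delivered exactly once.
        to_release = self._pending_release.pop(node_id, [])
        return {"node_id": node_id, "release": to_release}


if __name__ == "__main__":
    rm = ResourceManager()
    rm.mark_for_release("node-1", "container-7")
    print(rm.handle_heartbeat("node-1"))  # {'node_id': 'node-1', 'release': ['container-7']}
```

Piggybacking on the heartbeat avoids a separate push channel from the resource manager to each node, at the cost of a release delay of up to one heartbeat interval.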
