Decision tree incremental learning method oriented to information big data

A technique combining incremental learning and decision trees, applied in machine learning, computing models, and related fields. It addresses problems such as reduced classification accuracy and decision trees that grow unacceptably large.

Embodiment Construction

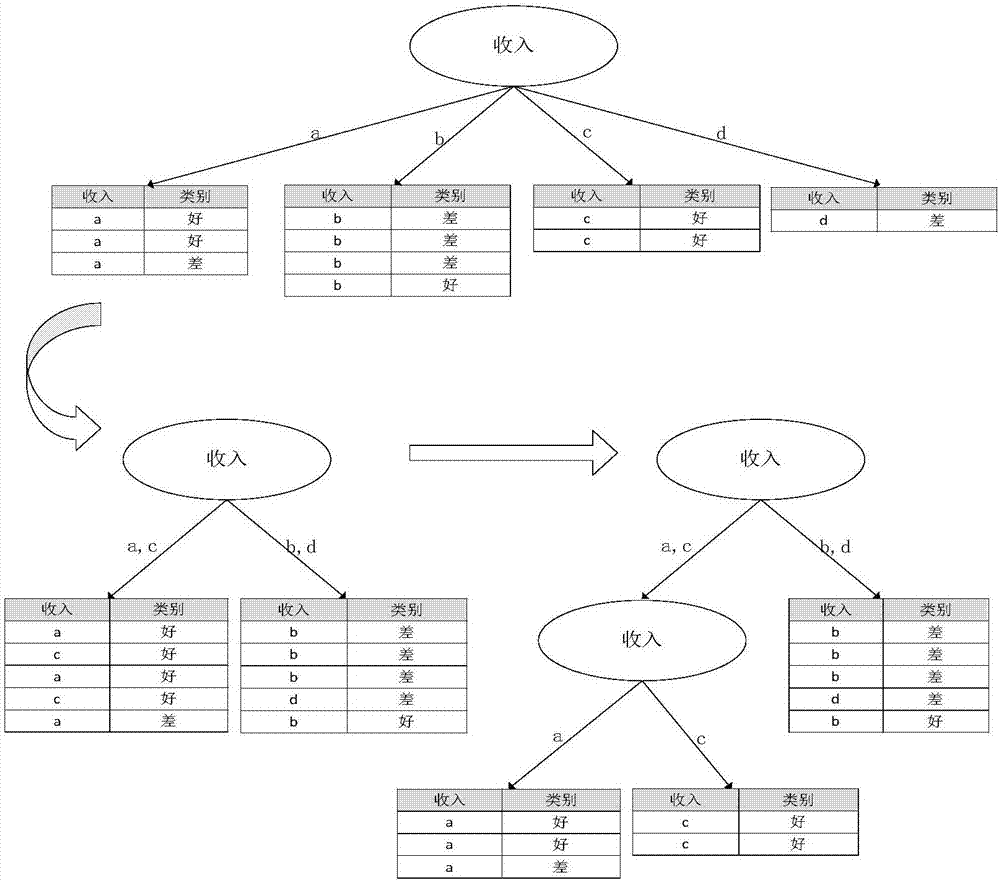

[0024] The present invention is described in more detail below by way of example, with reference to Figure 1.

[0025] Step 1: Take node $n_0$ as the root node of the decision tree $T$. Calculate the node splitting metric $SC(n_0)$ of $n_0$; if $n_0$ is a separable node, put $n_0$ into the set $Q$ of nodes to be split. The node splitting metric $SC(n_i)$ is defined from the number of records that belong to node $n_i$ and from $MG(n_i)$, the maximum information gain obtained when node $n_i$ is split into two branches.
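As an illustration of the quantity $MG(n_i)$ in Step 1, the following minimal sketch computes the maximum information gain over all two-way threshold splits of a node's records. Several things here are assumptions rather than part of the text: records are `(feature_value, label)` pairs with a single numeric feature, entropy is measured in bits, and the function names are hypothetical. In particular, `splitting_metric` combines the record count and the gain as a simple product only as a placeholder, since the text names the two ingredients of $SC(n_i)$ but the exact formula is not given here.

```python
import math
from collections import Counter


def entropy(labels):
    """Shannon entropy (in bits) of a list of class labels."""
    total = len(labels)
    counts = Counter(labels)
    return -sum((c / total) * math.log2(c / total) for c in counts.values())


def max_information_gain(records):
    """Return (best_gain, best_threshold) over all binary splits x <= t.

    `records` is a list of (feature_value, label) pairs with one numeric
    feature (an assumption made for this sketch).
    """
    labels = [y for _, y in records]
    base = entropy(labels)
    best_gain, best_threshold = 0.0, None
    values = sorted({x for x, _ in records})
    # Candidate thresholds are midpoints between consecutive distinct values.
    for lo, hi in zip(values, values[1:]):
        t = (lo + hi) / 2
        left = [y for x, y in records if x <= t]
        right = [y for x, y in records if x > t]
        weighted = (len(left) * entropy(left) + len(right) * entropy(right)) / len(records)
        gain = base - weighted
        if gain > best_gain:
            best_gain, best_threshold = gain, t
    return best_gain, best_threshold


def splitting_metric(records):
    """Placeholder SC: record count times maximum information gain (assumed form)."""
    gain, _ = max_information_gain(records)
    return len(records) * gain
```

Under these assumptions, a node whose records all share one label has a gain of zero and could be treated as not separable.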

[0026] Step 2: While the number of leaf nodes in the decision tree $T$ is less than the maximum allowed number of leaf nodes and the set $Q$ is not empty, repeat the following operations on the nodes in $Q$:

[0027] Step 3: From the set $Q$ of candidate nodes to be split, select the node $n_b$ with the largest splitting metric value, and delete $n_b$ from $Q$.

[0028] Step 4: Split node $n_b$, and compute the node splitting metrics of the two child nodes generated by splitting $n_b$...
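Steps 1 to 4 describe a best-first growth loop, which can be sketched roughly as follows. This is only an illustration under stated assumptions: the function and parameter names are hypothetical, a node counts as separable when its metric is positive, and because Step 4 is cut off in the text, the handling of the two children (adding each separable child back into $Q$) is a guess at the natural continuation. The set $Q$ is held in a heap so that the node with the largest metric is found quickly. The `toy_metric` and `toy_split` helpers are stand-ins for demonstration and are not the metric described in the text.

```python
import heapq
from itertools import count


def grow_best_first(root, metric, split, max_leaves):
    """Grow a tree best-first, always splitting the candidate with the largest metric.

    metric(node) -> number; values <= 0 mean "not separable" (assumption).
    split(node)  -> (left, right) child nodes.
    Returns the list of leaf nodes of the grown tree.
    """
    tie = count()      # tie-breaker so the heap never compares node objects
    queue = []         # the set Q, kept as a max-heap by negating the metric
    leaves = [root]    # Step 1: the root starts as the only leaf

    def enqueue(node):
        score = metric(node)
        if score > 0:
            heapq.heappush(queue, (-score, next(tie), node))

    enqueue(root)
    # Step 2: continue while the leaf budget allows and Q is not empty.
    while len(leaves) < max_leaves and queue:
        # Step 3: take the node with the largest metric out of Q.
        _, _, best = heapq.heappop(queue)
        # Step 4: split it and score the two children.
        left, right = split(best)
        leaves = [n for n in leaves if n is not best]
        leaves.extend([left, right])
        # Assumed continuation: separable children become new candidates.
        enqueue(left)
        enqueue(right)
    return leaves


def toy_metric(node):
    """Stand-in metric for a sorted list of numbers: size times value range."""
    return len(node) * (max(node) - min(node)) if len(node) > 1 else 0


def toy_split(node):
    """Stand-in split: cut a list into two halves."""
    mid = len(node) // 2
    return node[:mid], node[mid:]
```

For example, `grow_best_first(records, toy_metric, toy_split, max_leaves=3)` stops as soon as the tree has three leaves, even if some candidates remain in the queue. The leaf cap is what keeps the tree from growing without bound.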
