Interaction area and interaction time period identification method, storage equipment and mobile terminal

An identification method for interaction areas and interaction time periods, applied in character and pattern recognition, image data processing, instruments, and related fields. It addresses the lack of identification of human-interaction activity areas, the loss of computing efficiency and real-time performance, and the lack of a complete record of activity times within an activity area.


Embodiment approach

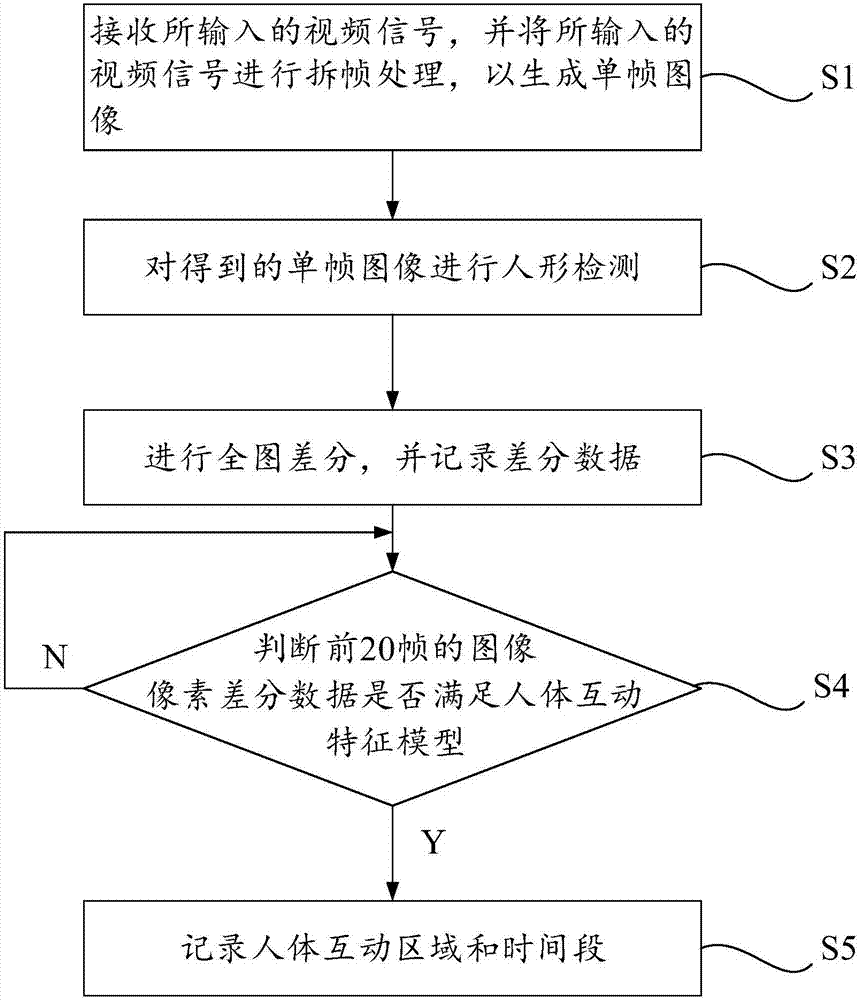

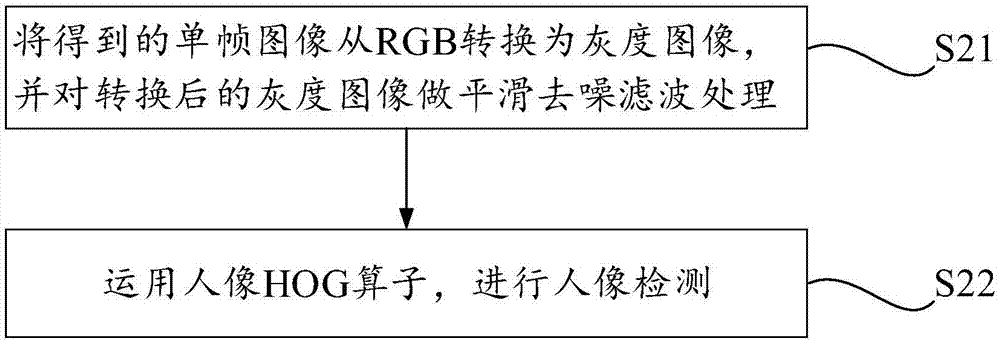

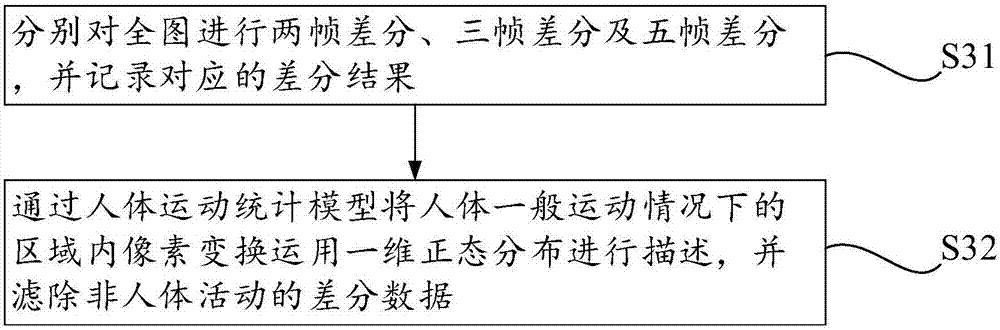

[0057] Figure 1 is a flow chart of a preferred embodiment of the method according to the present invention for identifying an interaction area and an interaction time period using differential pixels. The preferred embodiment of the method includes the following steps:

[0058] Step S1: Receive an input video signal and perform frame splitting on it to generate single-frame images.

[0059] The frame rate is the number of still pictures played per second in a video, so a video signal can be decomposed into a sequence of still pictures; this is frame splitting. Many existing software tools implement frame splitting, so the details are not repeated here.
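The relationship between frame rate and single-frame images in step S1 can be illustrated with a minimal sketch. It is not the patent's implementation: it assumes the input is a raw, uncompressed 8-bit grayscale stream with frames stored back to back, and the names `Frame` and `split_frames` are hypothetical. A real video file would first need to be decoded by an existing tool.

```python
from dataclasses import dataclass
from typing import Iterator


@dataclass
class Frame:
    index: int        # position of the frame in the stream, starting at 0
    timestamp: float  # seconds from the start of the video
    pixels: bytes     # width * height grayscale values, row-major


def split_frames(raw: bytes, width: int, height: int, fps: float) -> Iterator[Frame]:
    """Yield single-frame images from a raw grayscale stream (assumed layout)."""
    if width <= 0 or height <= 0 or fps <= 0:
        raise ValueError("width, height and fps must be positive")
    frame_size = width * height
    # A trailing partial frame, if any, is ignored.
    n_frames = len(raw) // frame_size
    for i in range(n_frames):
        start = i * frame_size
        # At `fps` frames per second, frame i is shown at i / fps seconds.
        yield Frame(index=i, timestamp=i / fps,
                    pixels=raw[start:start + frame_size])


# Toy input: three 2x2 frames at 2 frames per second.
video = bytes(range(12))
frames = list(split_frames(video, width=2, height=2, fps=2.0))
```

Keeping a timestamp on each frame is a design choice made here so that later steps could map a frame back to a point in time, which is what identifying an interaction time period would require.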
