Color video matting method based on depth foreground tracking

A color video matting technique based on depth foreground tracking. It addresses three problems of existing methods: processing times that cannot meet real-time requirements, a heavy manual interaction workload, and local edge artifacts. The method ensures spatial and temporal continuity, keeps computational cost low, and simplifies operation.

Embodiment Construction

[0018] The present invention will be described in further detail below in conjunction with the accompanying drawings and embodiments.

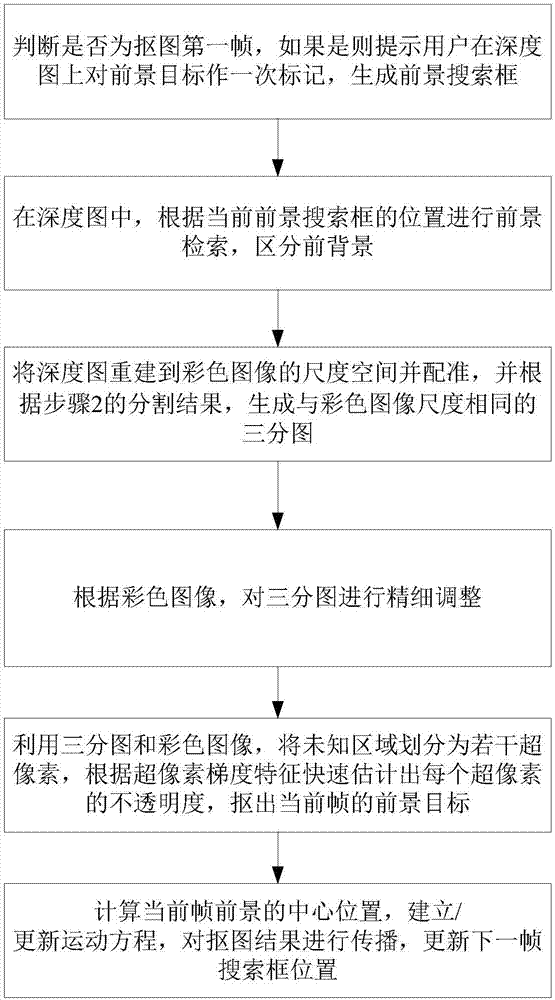

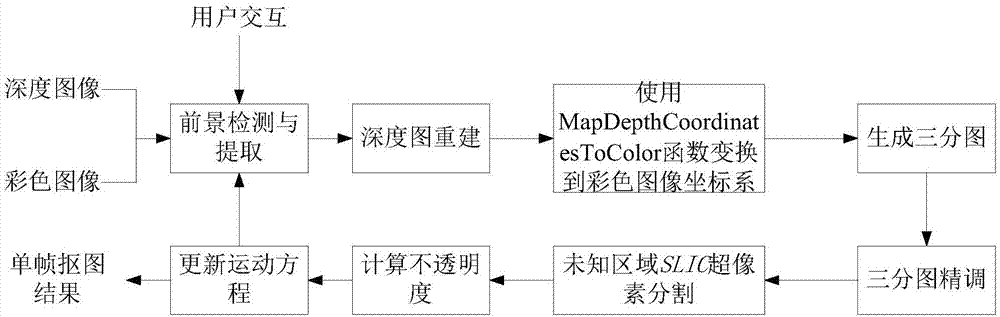

[0019] A color video matting method based on depth foreground tracking is shown in Fig. 1. Before matting starts, the user specifies the approximate area of the foreground on the depth image, which defines the search range and improves matting accuracy. Within this search box, the foreground object is segmented using the difference in depth between the foreground and the background. The depth image is then reconstructed to the resolution of the color image and registered so that the two correspond pixel by pixel, and a trimap is generated. Based on the color information of the color image, the trimap is finely adjusted so that pixels with a value of 1 correspond to the foreground of the color image, pixels with a value of 0 correspond to the background of...
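The first steps of this paragraph (depth segmentation inside the search box, resampling to the color resolution, and trimap generation) might be sketched roughly as follows. This is only an illustration under stated assumptions, not the patent's implementation. The function names, the `tol` depth tolerance, the rule "foreground is whatever lies within `tol` of the nearest valid depth in the box", the nearest-neighbour resampling that stands in for registration, and the value 0.5 for the undecided band are all hypothetical. The color-based fine adjustment of the trimap is not shown.

```python
import numpy as np


def segment_foreground(depth, box, tol):
    """Binary foreground mask inside a user-specified search box.

    Assumption: the foreground is the nearest object in the box, and a
    depth of 0 marks a missing measurement.
    box = (top, left, bottom, right), bottom/right exclusive.
    """
    top, left, bottom, right = box
    mask = np.zeros(depth.shape, dtype=bool)
    roi = depth[top:bottom, left:right]
    valid = roi > 0
    if not valid.any():
        return mask  # nothing measurable inside the box
    near = roi[valid].min()
    mask[top:bottom, left:right] = valid & (roi <= near + tol)
    return mask


def resize_nearest(mask, out_shape):
    """Nearest-neighbour resampling to the color image resolution.

    Assumption: depth and color differ only by scale, so resampling
    alone makes them correspond pixel by pixel.
    """
    h, w = mask.shape
    out_h, out_w = out_shape
    rows = np.arange(out_h) * h // out_h
    cols = np.arange(out_w) * w // out_w
    return mask[rows[:, None], cols]


def dilate(mask, r):
    """Binary dilation with a (2r+1) x (2r+1) square window."""
    h, w = mask.shape
    padded = np.pad(mask, r, mode="constant", constant_values=False)
    out = np.zeros_like(mask)
    for dy in range(2 * r + 1):
        for dx in range(2 * r + 1):
            out |= padded[dy:dy + h, dx:dx + w]
    return out


def make_trimap(mask, r):
    """Trimap: 1 = foreground, 0 = background, 0.5 = undecided band."""
    sure_fg = ~dilate(~mask, r)   # erosion of the foreground mask
    maybe_fg = dilate(mask, r)
    trimap = np.full(mask.shape, 0.5)
    trimap[sure_fg] = 1.0
    trimap[~maybe_fg] = 0.0
    return trimap
```

With these helpers, a low-resolution depth frame would pass through `segment_foreground`, then `resize_nearest` with the color frame's shape, then `make_trimap`. The band radius `r` controls how many pixels around the depth boundary are left for the color-based adjustment to decide.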
