Camera tracking method for depth cameras

A camera tracking method for depth cameras, applied in the field of intelligent perception, that addresses the limited scope of application of existing methods and reduces the amount of computation.


Embodiment Construction

[0041] The technical solutions of the present invention will be described in detail below in conjunction with the accompanying drawings.

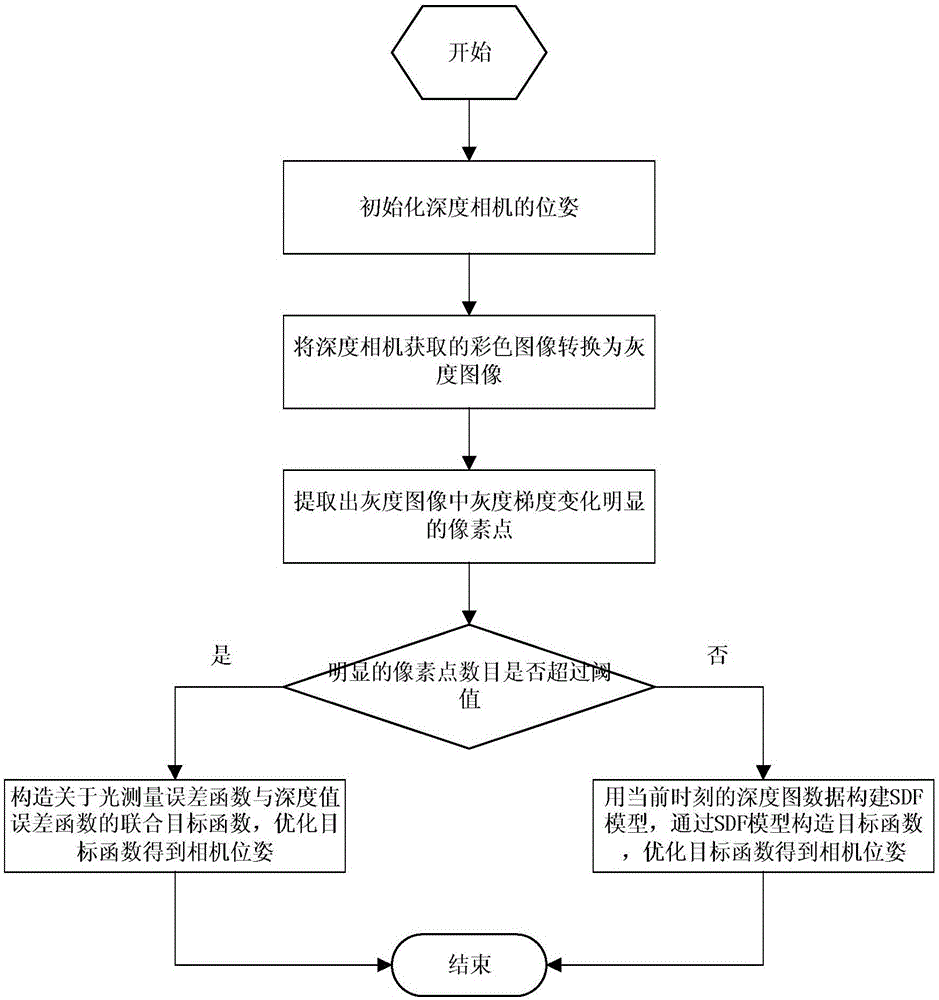

[0042] As shown in Figure 1, the camera tracking method for depth cameras comprises the following steps.

[0043] Step 1: Initialize the pose of the depth camera.

[0044] Step 2: Convert the color image acquired by the depth camera to a grayscale image.

[0045] Step 3: Extract the pixels in the grayscale image whose grayscale gradient change is greater than a set threshold a, and treat them as the pixels with obvious grayscale gradient changes.
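Steps 2 and 3 can be illustrated with a minimal NumPy sketch. The source does not state how the grayscale conversion or the gradient is computed, so the BT.601 luma weights, the central-difference gradient, and the function names `to_grayscale` and `high_gradient_mask` are all assumptions made for illustration.

```python
import numpy as np

def to_grayscale(rgb):
    """Convert an H x W x 3 RGB image to grayscale.

    Assumption: ITU-R BT.601 luma weights; the source does not name a formula.
    """
    weights = np.array([0.299, 0.587, 0.114])
    return rgb.astype(np.float64) @ weights

def high_gradient_mask(gray, threshold_a):
    """Return a boolean mask of pixels whose gradient magnitude exceeds threshold_a.

    Assumption: the gradient is approximated with central differences.
    """
    gy, gx = np.gradient(gray)      # derivatives along rows, then columns
    magnitude = np.hypot(gx, gy)    # per-pixel gradient magnitude
    return magnitude > threshold_a
```

On an image with a single vertical step edge, only the columns adjacent to the edge are selected, which is the sparse pixel set that Step 3 describes.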

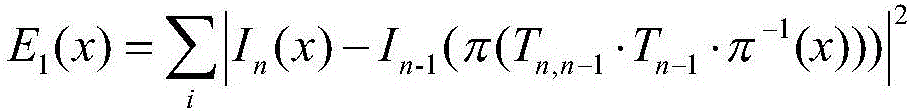

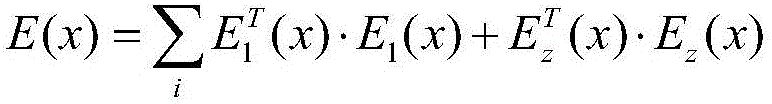

[0046] Step 4: If the number of pixels with obvious grayscale gradient changes is greater than a set threshold b, then for these pixels construct a photometric error function and a depth error function, and use the 2-norms of these two functions to construct a joint objective function. Optimize the joint objective function to estimate the change of c...
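The structure of the joint objective in Step 4 can be sketched as follows. This is only an illustration under stated assumptions: the source text is truncated, so the squared 2-norm form, the weighting factor `depth_weight`, the function name `joint_cost`, and the behaviour when too few pixels are available are all hypothetical. The residuals are taken as given; how they are computed from the camera pose is not covered here.

```python
import numpy as np

def joint_cost(photo_residuals, depth_residuals, mask, threshold_b, depth_weight=1.0):
    """Evaluate a joint photometric + depth cost over the selected pixels.

    Assumptions: squared 2-norms combined with a scalar weight (depth_weight).
    Returns None when the number of selected pixels does not exceed threshold_b;
    the source is truncated and does not show how that case is handled.
    """
    if np.count_nonzero(mask) <= threshold_b:
        return None
    r_photo = photo_residuals[mask]   # photometric errors at high-gradient pixels
    r_depth = depth_residuals[mask]   # depth errors at the same pixels
    return float(np.sum(r_photo ** 2) + depth_weight * np.sum(r_depth ** 2))
```

An optimizer would minimize this value over the camera motion parameters; restricting the sum to the masked pixels is what keeps the amount of computation low.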
