Method and device for training a neural network language model, and speech recognition method and device

A language model and neural network technology, applied in speech recognition, speech analysis, instruments, etc. It addresses the long training time of neural network language models and achieves high classification accuracy, requires few changes to training, and is simple to implement.

Embodiment Construction

[0074] Various preferred embodiments of the present invention will be described in detail below with reference to the accompanying drawings.

[0075]

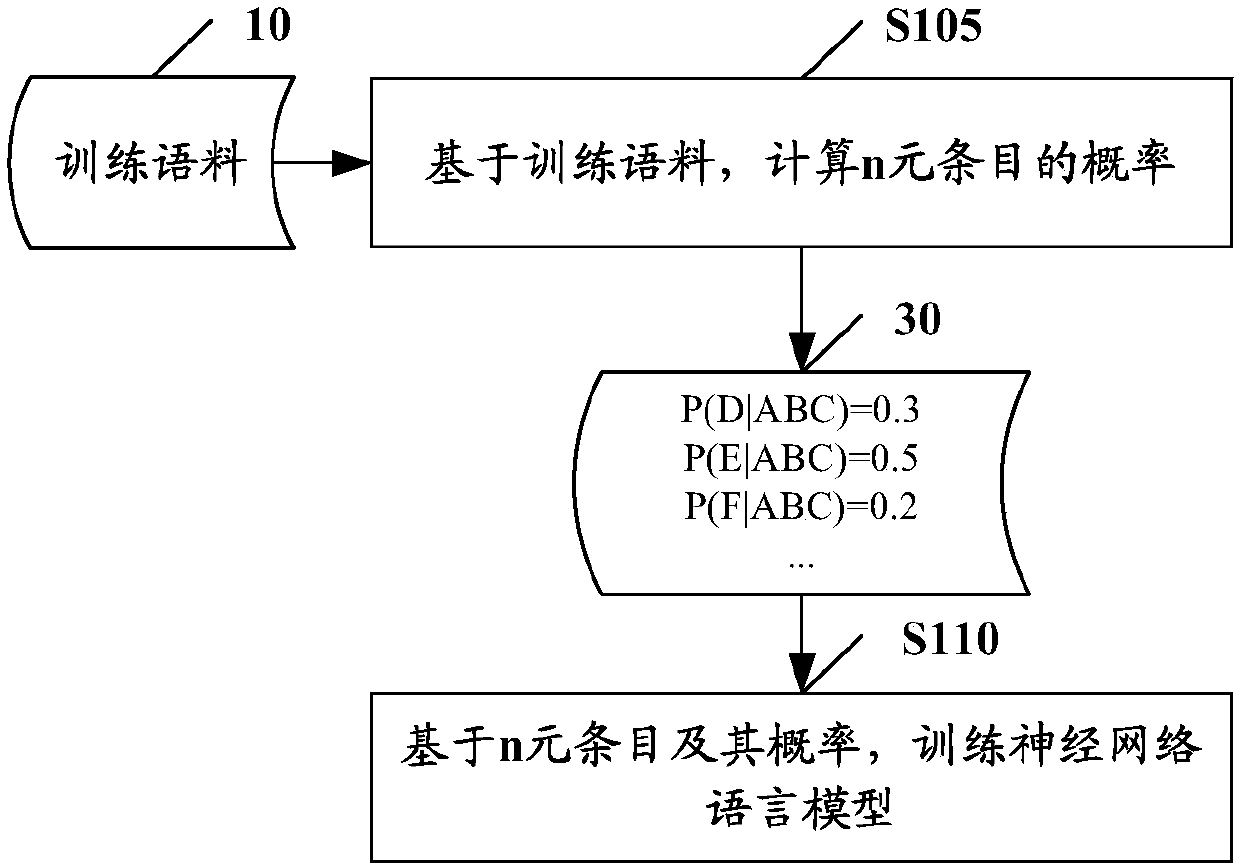

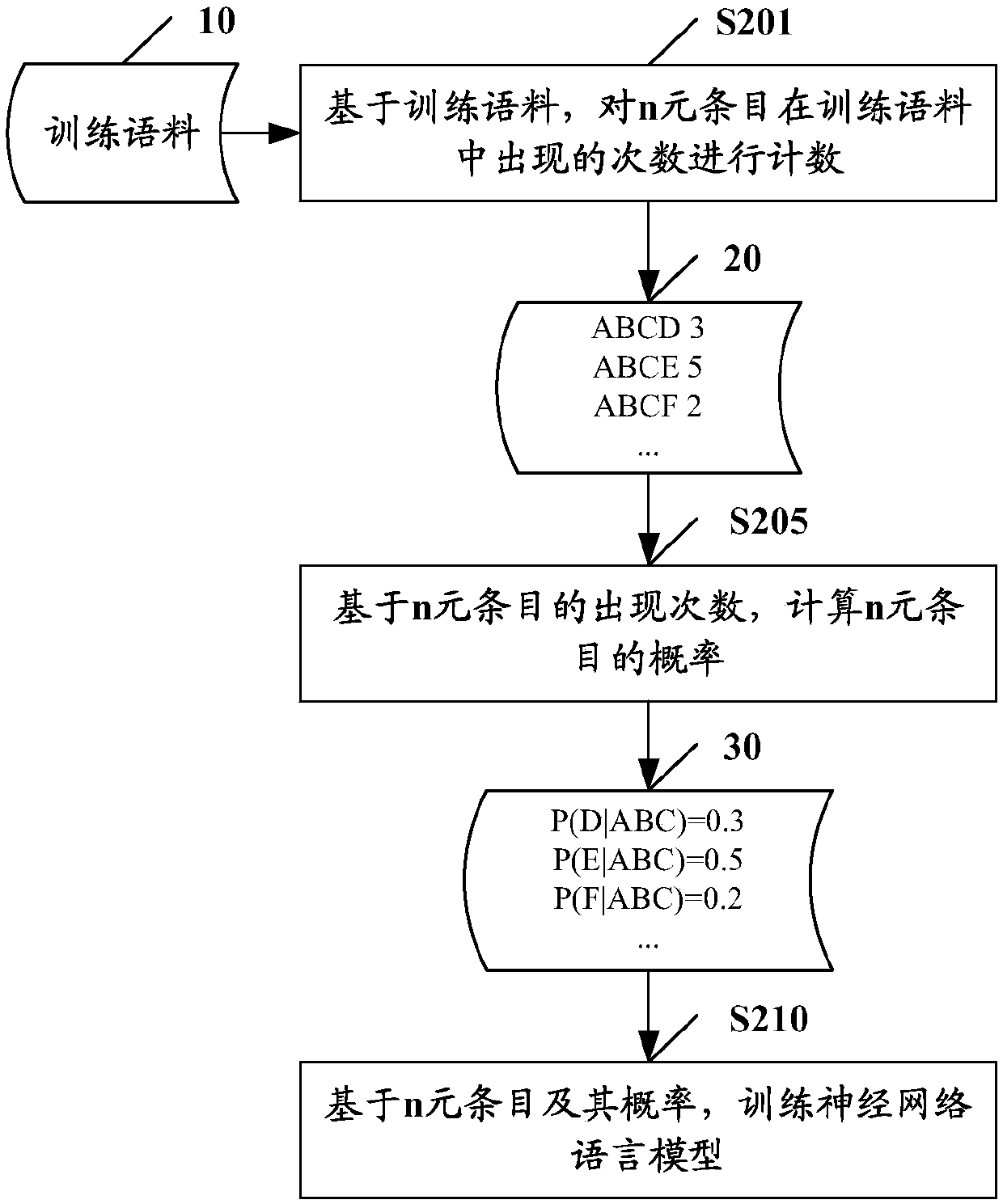

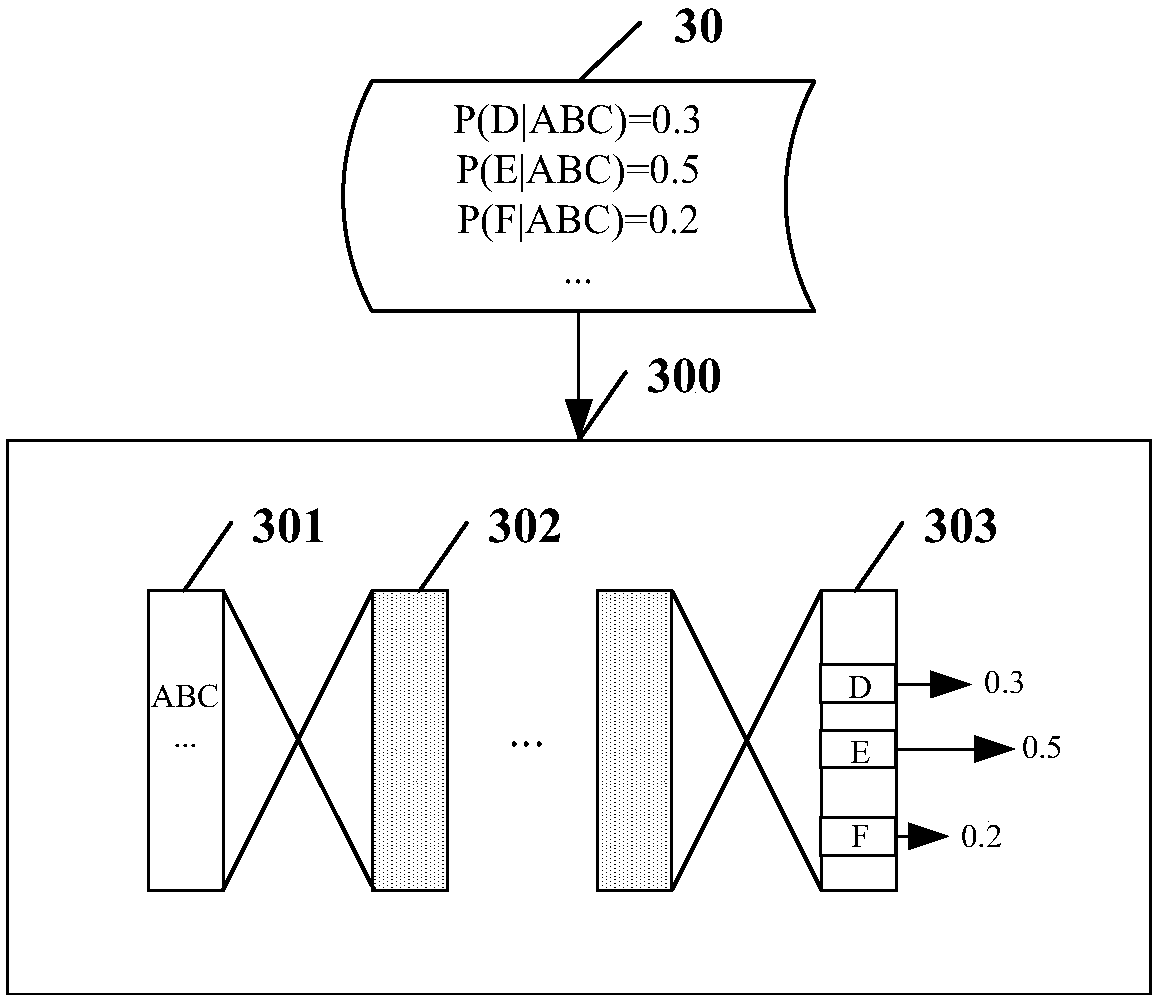

[0076] Figure 1 is a flowchart of a method for training a neural network language model according to an embodiment of the present invention.

[0077] The method for training a neural network language model in this embodiment includes: calculating the probabilities of n-gram entries from a training corpus; and training the neural network language model on those n-gram entries and their probabilities.
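The two-step method above can be illustrated with a toy example. This is a minimal sketch under stated assumptions, not the patented implementation: the excerpt gives neither the network architecture nor the loss, so a single-layer softmax network (a log-linear model with one weight row per context position and word) stands in for the neural network language model, and "training on n-gram entries and their probabilities" is interpreted as grouping entries by history and using the n-gram conditional probabilities as soft targets for a cross-entropy loss. The function name `train_on_ngram_probs` and its parameters are hypothetical.

```python
import math
import random
from collections import defaultdict


def softmax(z):
    m = max(z)  # subtract the max for numerical stability
    e = [math.exp(v - m) for v in z]
    s = sum(e)
    return [v / s for v in e]


def train_on_ngram_probs(ngram_probs, vocab, epochs=200, lr=0.5, seed=0):
    """Hypothetical sketch: fit a single-layer softmax model so that its
    output distribution for each history matches the n-gram conditional
    distribution (soft-target cross-entropy). Not the patent's architecture."""
    index = {w: i for i, w in enumerate(vocab)}
    V = len(vocab)

    # Assumption: group n-gram entries by history (first n-1 words);
    # each history gets a target distribution over the next word.
    targets = defaultdict(lambda: [0.0] * V)
    for gram, p in ngram_probs.items():
        targets[gram[:-1]][index[gram[-1]]] += p

    rng = random.Random(seed)
    # One small random weight row per (position, context word) pair.
    weights = defaultdict(lambda: [rng.uniform(-0.01, 0.01) for _ in range(V)])
    bias = [0.0] * V

    def forward(history):
        logits = bias[:]
        for pos, w in enumerate(history):
            row = weights[(pos, w)]
            for k in range(V):
                logits[k] += row[k]
        return softmax(logits)

    for _ in range(epochs):
        for history, target in targets.items():
            probs = forward(history)
            # Gradient of soft-target cross-entropy w.r.t. the logits.
            grad = [p - t for p, t in zip(probs, target)]
            for k in range(V):
                bias[k] -= lr * grad[k]
            for pos, w in enumerate(history):
                row = weights[(pos, w)]
                for k in range(V):
                    row[k] -= lr * grad[k]
    return forward
```

For example, training on `{("w1", "w2", "w3", "w4"): 2/3, ("w1", "w2", "w3", "w5"): 1/3}` with vocabulary `["w1", "w2", "w3", "w4", "w5"]` yields a model whose output for the history `("w1", "w2", "w3")` approaches 2/3 for `w4` and 1/3 for `w5`. In this sketch each distinct history is visited once per epoch with its full target distribution rather than once per corpus occurrence; whether that is where the claimed training-time savings come from is an inference, not something the excerpt states.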

[0078] As shown in Figure 1, first, in step S105, the probabilities of the n-gram entries are calculated from the training corpus 10.

[0079] In this embodiment, the training corpus 10 is a word-segmented corpus. An n-gram entry is a sequence of n words; for example, when n is 4, an n-gram entry has the form "w1w2w3w4". The probability of an n-gram entry refers to the probability ...
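Step S105 can be sketched as follows. The excerpt is truncated before it defines the probability, so this sketch assumes the common maximum-likelihood conditional estimate P(w4 | w1 w2 w3) = count(w1 w2 w3 w4) / count(w1 w2 w3), computed over a corpus that is already word-segmented (a list of sentences, each a list of words), with no sentence-boundary padding and no smoothing. The function name `ngram_probabilities` is hypothetical.

```python
from collections import Counter


def ngram_probabilities(sentences, n=4):
    """Hypothetical sketch of step S105: map each n-gram entry to
    P(last word | preceding n-1 words) by maximum likelihood.

    Assumptions: no boundary padding, no smoothing; the history count only
    includes histories that are followed by a word, so each history's
    conditional distribution sums to 1.
    """
    ngram_counts = Counter()
    history_counts = Counter()
    for words in sentences:
        for i in range(len(words) - n + 1):
            gram = tuple(words[i:i + n])
            ngram_counts[gram] += 1
            history_counts[gram[:-1]] += 1
    return {gram: c / history_counts[gram[:-1]] for gram, c in ngram_counts.items()}


corpus = [["w1", "w2", "w3", "w4"], ["w1", "w2", "w3", "w5"], ["w1", "w2", "w3", "w4"]]
probs = ngram_probabilities(corpus, n=4)
# P(w4 | w1 w2 w3) = 2/3, P(w5 | w1 w2 w3) = 1/3
```

A real corpus would usually add sentence-start and sentence-end markers and apply smoothing so that unseen n-grams do not get zero probability; both are omitted here for brevity.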
